Shihao Yang

ZeroS: Zero-Sum Linear Attention for Efficient Transformers

Feb 05, 2026

Abstract:Linear attention methods offer Transformers $O(N)$ complexity but typically underperform standard softmax attention. We identify two fundamental limitations affecting these approaches: the restriction to convex combinations that only permits additive information blending, and uniform accumulated weight bias that dilutes attention in long contexts. We propose Zero-Sum Linear Attention (ZeroS), which addresses these limitations by removing the constant zero-order term $1/t$ and reweighting the remaining zero-sum softmax residuals. This modification creates mathematically stable weights, enabling both positive and negative values and allowing a single attention layer to perform contrastive operations. While maintaining $O(N)$ complexity, ZeroS theoretically expands the set of representable functions compared to convex combinations. Empirically, it matches or exceeds standard softmax attention across various sequence modeling benchmarks.

* Camera-ready version. Accepted at NeurIPS 2025
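
To make the zero-sum idea in the ZeroS abstract concrete, here is a minimal sketch in plain Python. It only illustrates that subtracting the uniform term $1/t$ from softmax weights leaves residuals that sum to zero and take both signs. The function names are hypothetical, and the sketch does not reproduce the paper's reweighting step or its $O(N)$ formulation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_sum_residuals(scores):
    """Softmax weights with the uniform zero-order term 1/t removed.

    Illustrative only: the reweighting of these residuals described in
    the abstract is not implemented here.
    """
    t = len(scores)
    return [w - 1.0 / t for w in softmax(scores)]


scores = [2.0, 0.5, -1.0, 0.0]
residuals = zero_sum_residuals(scores)
print(residuals)       # mixed positive and negative weights
print(sum(residuals))  # zero up to floating-point error
```

Because the residuals are no longer constrained to be non-negative, a weighted sum of values built from them can subtract some inputs from others, which a convex combination cannot do.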

CAPS: Unifying Attention, Recurrence, and Alignment in Transformer-based Time Series Forecasting

Feb 02, 2026

Abstract:This paper presents $\textbf{CAPS}$ (Clock-weighted Aggregation with Prefix-products and Softmax), a structured attention mechanism for time series forecasting that decouples three distinct temporal structures: global trends, local shocks, and seasonal patterns. Standard softmax attention entangles these through global normalization, while recent recurrent models sacrifice long-term, order-independent selection for order-dependent causal structure. CAPS combines SO(2) rotations for phase alignment with three additive gating paths -- Riemann softmax, prefix-product gates, and a Clock baseline -- within a single attention layer. We introduce the Clock mechanism, a learned temporal weighting that modulates these paths through a shared notion of temporal importance. In experiments on long- and short-term forecasting benchmarks, CAPS surpasses vanilla softmax and linear attention mechanisms and demonstrates competitive performance against seven strong baselines with linear complexity. Our code implementation is available at https://github.com/vireshpati/CAPS-Attention.

HyCoRA: Hyper-Contrastive Role-Adaptive Learning for Role-Playing

Nov 11, 2025

Abstract:Multi-character role-playing aims to equip models with the capability to simulate diverse roles. Existing methods either use one shared parameterized module across all roles or assign a separate parameterized module to each role. However, the role-shared module may ignore distinct traits of each role, weakening personality learning, while the role-specific module may overlook shared traits across multiple roles, hindering commonality modeling. In this paper, we propose HyCoRA, a novel Hyper-Contrastive Role-Adaptive learning framework that efficiently improves multi-character role-playing ability by balancing the learning of distinct and shared traits. Specifically, we propose a Hyper-Half Low-Rank Adaptation structure, where one half is a role-specific module generated by a lightweight hyper-network, and the other half is a trainable role-shared module. The role-specific module is devised to represent distinct persona signatures, while the role-shared module serves to capture common traits. Moreover, to better reflect distinct personalities across different roles, we design a hyper-contrastive learning mechanism to help the hyper-network distinguish their unique characteristics. Extensive experimental results on available English and Chinese benchmarks demonstrate the superiority of our framework. Further GPT-4 evaluations and visual analyses also verify the capability of HyCoRA to capture role characteristics.

Are Statistical Methods Obsolete in the Era of Deep Learning?

May 27, 2025

Abstract:In the era of AI, neural networks have become increasingly popular for modeling, inference, and prediction, largely due to their potential for universal approximation. With the proliferation of such deep learning models, a question arises: are leaner statistical methods still relevant? To shed light on this question, we employ the mechanistic nonlinear ordinary differential equation (ODE) inverse problem as a testbed, using the physics-informed neural network (PINN) as a representative of the deep learning paradigm and manifold-constrained Gaussian process inference (MAGI) as a representative of statistically principled methods. Through case studies involving the SEIR model from epidemiology and the Lorenz model from chaotic dynamics, we demonstrate that statistical methods are far from obsolete, especially when working with sparse and noisy observations. On tasks such as parameter inference and trajectory reconstruction, statistically principled methods consistently achieve lower bias and variance, while using far fewer parameters and requiring less hyperparameter tuning. Statistical methods can also decisively outperform deep learning models on out-of-sample future prediction, where the absence of relevant data often leads overparameterized models astray. Additionally, we find that statistically principled approaches are more robust to the accumulation of numerical imprecision and can represent the underlying system more faithfully to the true governing ODEs.

Physics-Informed Inference Time Scaling via Simulation-Calibrated Scientific Machine Learning

Apr 22, 2025

Abstract:High-dimensional partial differential equations (PDEs) pose significant computational challenges across fields ranging from quantum chemistry to economics and finance. Although scientific machine learning (SciML) techniques offer approximate solutions, they often suffer from bias and neglect crucial physical insights. Inspired by inference-time scaling strategies in language models, we propose Simulation-Calibrated Scientific Machine Learning (SCaSML), a physics-informed framework that dynamically refines and debiases the SciML predictions during inference by enforcing the physical laws. SCaSML leverages newly derived physical laws that quantify systematic errors and employs Monte Carlo solvers based on the Feynman-Kac and Elworthy-Bismut-Li formulas to dynamically correct the prediction. Both numerical and theoretical analyses confirm enhanced convergence rates via compute-optimal inference methods. Our numerical experiments demonstrate that SCaSML reduces errors by 20-50% compared to the base surrogate model, establishing it as the first algorithm to refine approximated solutions to high-dimensional PDEs during inference. Code of SCaSML is available at https://github.com/Francis-Fan-create/SCaSML.

Linear Transformers as VAR Models: Aligning Autoregressive Attention Mechanisms with Autoregressive Forecasting

Feb 11, 2025

Abstract:Autoregressive attention-based time series forecasting (TSF) has drawn increasing interest, with mechanisms like linear attention sometimes outperforming vanilla attention. However, deeper Transformer architectures frequently misalign with autoregressive objectives, obscuring the underlying VAR structure embedded within linear attention and hindering their ability to capture the data-generating processes in TSF. In this work, we first show that a single linear attention layer can be interpreted as a dynamic vector autoregressive (VAR) structure. We then explain that existing multi-layer Transformers have structural mismatches with the autoregressive forecasting objective, which impair interpretability and generalization ability. To address this, we show that by rearranging the MLP, attention, and input-output flow, multi-layer linear attention can also be aligned as a VAR model. Then, we propose Structural Aligned Mixture of VAR (SAMoVAR), a linear Transformer variant that integrates interpretable dynamic VAR weights for multivariate TSF. By aligning the Transformer architecture with autoregressive objectives, SAMoVAR delivers improved performance, interpretability, and computational efficiency compared to SOTA TSF models.
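
The claim that a single linear attention layer acts like a dynamic autoregression can be illustrated with a small sketch. It assumes the simplest causal linear attention (identity feature map, no normalization), which may differ from the exact layer analyzed in the paper. Under that assumption, each output is a weighted sum of past value vectors with input-dependent weights $q_t \cdot k_i$, and the same output can be computed from a running state $S_t = \sum_{i \le t} v_i k_i^\top$. All function names are hypothetical.

```python
def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def linear_attention_explicit(qs, ks, vs):
    """o_t = sum_{i<=t} (q_t . k_i) v_i: past values with dynamic weights."""
    outputs = []
    for t, q in enumerate(qs):
        out = [0.0] * len(vs[0])
        for i in range(t + 1):
            weight = dot(q, ks[i])  # data-dependent lag coefficient
            out = [o + weight * v for o, v in zip(out, vs[i])]
        outputs.append(out)
    return outputs


def linear_attention_recurrent(qs, ks, vs):
    """Same outputs from a running state S_t = sum_{i<=t} v_i k_i^T."""
    d_k, d_v = len(ks[0]), len(vs[0])
    state = [[0.0] * d_k for _ in range(d_v)]
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        for r in range(d_v):
            for c in range(d_k):
                state[r][c] += v[r] * k[c]
        outputs.append([dot(row, q) for row in state])  # o_t = S_t q_t
    return outputs


qs = [[1.0, 0.0], [0.5, 1.0], [1.0, -1.0]]
ks = [[0.2, 0.1], [1.0, 0.3], [-0.5, 0.4]]
vs = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]
explicit = linear_attention_explicit(qs, ks, vs)
recurrent = linear_attention_recurrent(qs, ks, vs)
print(explicit)
print(recurrent)
```

The explicit form exposes the autoregressive reading (lagged inputs times time-varying coefficients), while the recurrent form is what keeps the cost linear in sequence length.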

EFiGP: Eigen-Fourier Physics-Informed Gaussian Process for Inference of Dynamic Systems

Jan 23, 2025

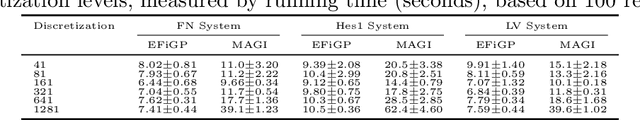

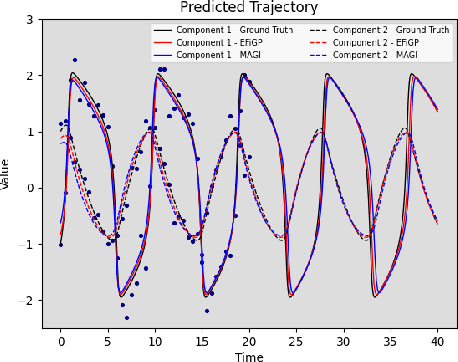

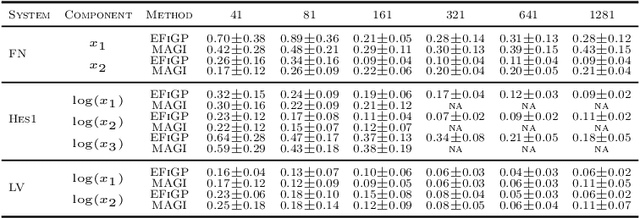

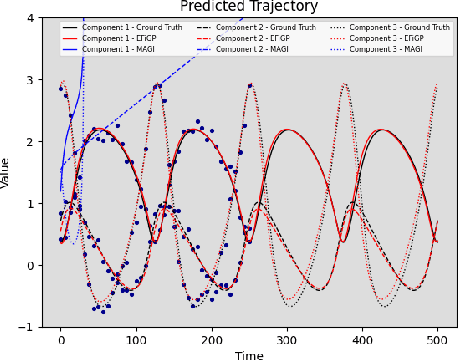

Abstract:Parameter estimation and trajectory reconstruction for data-driven dynamical systems governed by ordinary differential equations (ODEs) are essential tasks in fields such as biology, engineering, and physics. These inverse problems -- estimating ODE parameters from observational data -- are particularly challenging when the data are noisy and sparse and the dynamics are nonlinear. We propose the Eigen-Fourier Physics-Informed Gaussian Process (EFiGP), an algorithm that integrates Fourier transformation and eigen-decomposition into a physics-informed Gaussian Process framework. This approach eliminates the need for numerical integration, significantly enhancing computational efficiency and accuracy. Built on a principled Bayesian framework, EFiGP incorporates the ODE system through probabilistic conditioning, enforcing governing equations in the Fourier domain while truncating high-frequency terms to achieve denoising and computational savings. The use of eigen-decomposition further simplifies Gaussian Process covariance operations, enabling efficient recovery of trajectories and parameters even in dense-grid settings. We validate the practical effectiveness of EFiGP on three benchmark examples, demonstrating its potential for reliable and interpretable modeling of complex dynamical systems while addressing key challenges in trajectory recovery and computational cost.

Coupled Integral PINN for conservation law

Nov 18, 2024

Abstract:The Physics-Informed Neural Network (PINN) is an innovative approach to solving a diverse array of partial differential equations (PDEs) by leveraging the power of neural networks. This is achieved by minimizing the residual loss associated with the explicit physical information, usually coupled with data derived from initial and boundary conditions. However, a challenge arises in the context of nonlinear conservation laws, where derivatives are undefined at shocks, leading to solutions that deviate from the true physical phenomena. To solve this issue, the physical solution must be extracted from the weak formulation of the PDE and is typically further bounded by entropy conditions. Within the numerical framework, finite volume methods (FVM) are employed to address conservation laws. These methods resolve the integral form of conservation laws and delineate the shock characteristics. Inspired by the principles underlying FVM, this paper introduces a novel Coupled Integrated PINN methodology that involves fitting the integral solutions of equations using additional neural networks. This technique not only augments the conventional PINN's capability in modeling shock waves, but also eliminates the need for spatial and temporal discretization. As such, it bypasses the complexities of numerical integration and reconstruction associated with non-convex fluxes. Finally, we show that the proposed Integrated PINN performs well on conservation laws and outperforms the vanilla PINN when tackling challenging shock problems, using examples of the Burgers' equation, the Buckley-Leverett equation, and the Euler system.

Autoregressive Moving-average Attention Mechanism for Time Series Forecasting

Oct 04, 2024

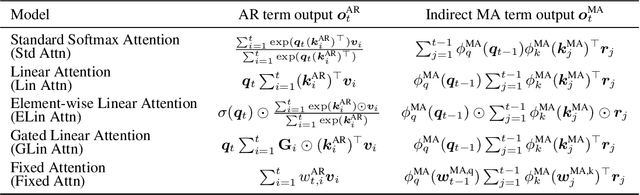

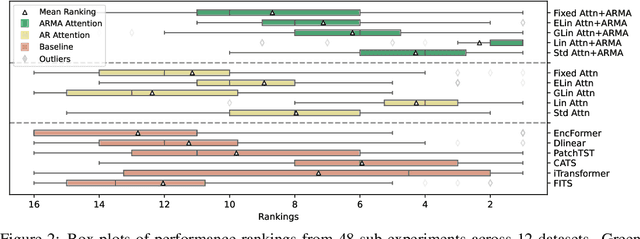

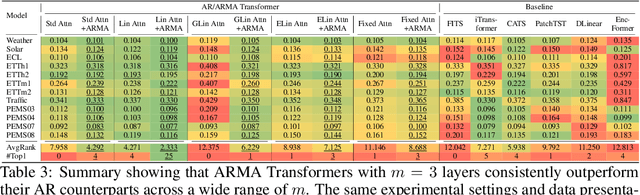

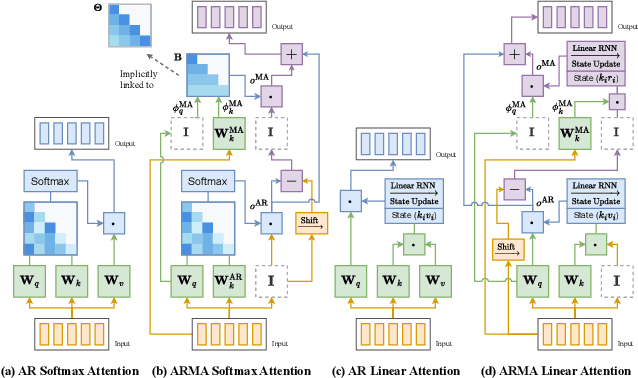

Abstract:We propose an Autoregressive (AR) Moving-average (MA) attention structure that can adapt to various linear attention mechanisms, enhancing their ability to capture long-range and local temporal patterns in time series. In this paper, we first demonstrate that, for the time series forecasting (TSF) task, the previously overlooked decoder-only autoregressive Transformer model can achieve results comparable to the best baselines when appropriate tokenization and training methods are applied. Moreover, inspired by the ARMA model from statistics and recent advances in linear attention, we introduce the full ARMA structure into existing autoregressive attention mechanisms. By using an indirect MA weight generation method, we incorporate the MA term while maintaining the time complexity and parameter size of the underlying efficient attention models. We further explore how indirect parameter generation can produce implicit MA weights that align with the modeling requirements for local temporal impacts. Experimental results show that incorporating the ARMA structure consistently improves the performance of various AR attentions on TSF tasks, achieving state-of-the-art results.

Inverse Probability of Treatment Weighting with Deep Sequence Models Enables Accurate Treatment Effect Estimation from Electronic Health Records

Jun 13, 2024

Abstract:Observational data have been actively used to estimate treatment effects, driven by the growing availability of electronic health records (EHRs). However, EHRs typically consist of longitudinal records, often introducing time-dependent confounding that hinders the unbiased estimation of treatment effects. Inverse probability of treatment weighting (IPTW) is a widely used propensity score method since it provides unbiased treatment effect estimation and its derivation is straightforward. In this study, we aim to utilize IPTW to estimate treatment effects in the presence of time-dependent confounding using claims records. Previous studies have utilized propensity score methods with features derived from claims records through feature processing, which generally requires domain knowledge and additional resources to extract information to accurately estimate propensity scores. Deep sequence models, particularly recurrent neural networks and self-attention-based architectures, have demonstrated good performance in modeling EHRs for various downstream tasks. We propose that these deep sequence models can provide accurate IPTW estimation of treatment effects by directly estimating the propensity scores from claims records without the need for feature processing. We empirically demonstrate this by conducting comprehensive evaluations using synthetic and semi-synthetic datasets.
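
As a rough illustration of the IPTW step mentioned in the abstract, the sketch below computes a normalized (Hajek-style) average treatment effect from given propensity scores. It assumes a single binary treatment per patient and propensities that are already estimated. In the paper's setting these scores would come from a deep sequence model over claims records, which is not modeled here. The function name and the toy numbers are hypothetical.

```python
def iptw_ate(treatments, outcomes, propensities):
    """Normalized IPTW estimate of the average treatment effect.

    treatments:   0/1 treatment indicators
    outcomes:     observed outcomes
    propensities: estimated P(treatment = 1 | history), strictly in (0, 1)
    """
    treated_num = treated_den = 0.0
    control_num = control_den = 0.0
    for a, y, e in zip(treatments, outcomes, propensities):
        if not 0.0 < e < 1.0:
            raise ValueError("propensity scores must lie strictly in (0, 1)")
        if a == 1:
            weight = 1.0 / e          # up-weight unlikely treated units
            treated_num += weight * y
            treated_den += weight
        else:
            weight = 1.0 / (1.0 - e)  # up-weight unlikely control units
            control_num += weight * y
            control_den += weight
    return treated_num / treated_den - control_num / control_den


# Toy data, invented purely for illustration.
treatments = [1, 1, 0, 0]
outcomes = [3.0, 5.0, 1.0, 2.0]
propensities = [0.8, 0.4, 0.5, 0.2]
print(iptw_ate(treatments, outcomes, propensities))
```

When all propensities are equal, the estimate reduces to the plain difference in group means; the weighting only matters when treatment assignment depends on patient history.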
