Yeping Wang

Hierarchically Accelerated Coverage Path Planning for Redundant Manipulators

Feb 26, 2025

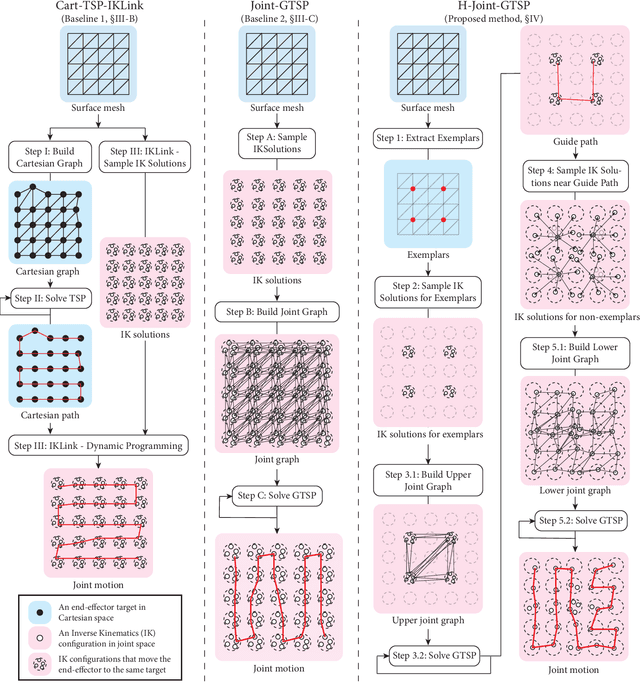

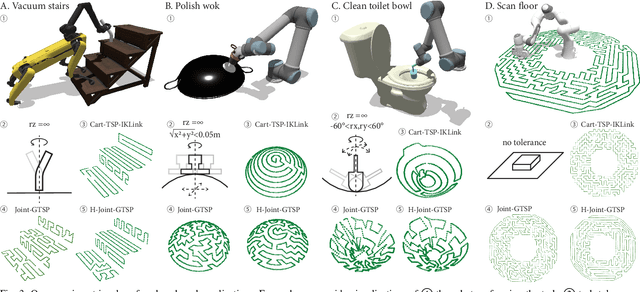

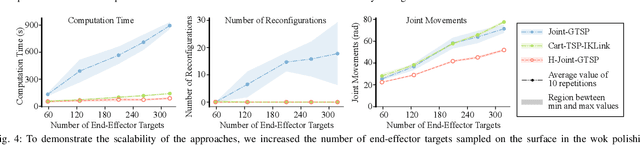

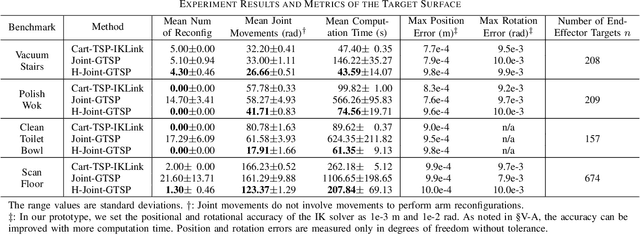

Abstract: Many robotic applications, such as sanding, polishing, wiping and sensor scanning, require a manipulator to dexterously cover a surface using its end-effector. In this paper, we provide an efficient and effective coverage path planning approach that leverages a manipulator's redundancy and task tolerances to minimize costs in joint space. We formulate the problem as a Generalized Traveling Salesman Problem and hierarchically streamline the graph size. Our strategy is to identify guide paths that roughly cover the surface and accelerate the computation by solving a sequence of smaller problems. We demonstrate the effectiveness of our method through a simulation experiment and an illustrative demonstration using a physical robot.

Anytime Planning for End-Effector Trajectory Tracking

Feb 05, 2025

Abstract: End-effector trajectory tracking algorithms find joint motions that drive robot manipulators to track reference trajectories. In practical scenarios, anytime algorithms are preferred for their ability to quickly generate initial motions and continuously refine them over time. In this paper, we present an algorithmic framework that adapts common graph-based trajectory tracking algorithms to be anytime and enhances their efficiency and effectiveness. Our key insight is to identify guide paths that approximately track the reference trajectory and strategically bias sampling toward the guide paths. We demonstrate the effectiveness of the proposed framework by restructuring two existing graph-based trajectory tracking algorithms and evaluating the updated algorithms in three experiments.

Coupled Integral PINN for conservation law

Nov 18, 2024

Abstract: The Physics-Informed Neural Network (PINN) is an innovative approach for solving a diverse array of partial differential equations (PDEs) by leveraging the power of neural networks. This is achieved by minimizing the residual loss associated with the explicit physical information, usually coupled with data derived from initial and boundary conditions. However, a challenge arises in the context of nonlinear conservation laws, where derivatives are undefined at shocks, leading to solutions that deviate from the true physical phenomena. To solve this issue, the physical solution must be extracted from the weak formulation of the PDE and is typically further bounded by entropy conditions. Within the numerical framework, finite volume methods (FVM) are employed to address conservation laws. These methods resolve the integral form of conservation laws and delineate the shock characteristics. Inspired by the principles underlying FVM, this paper introduces a novel Coupled Integrated PINN methodology that involves fitting the integral solutions of equations using additional neural networks. This technique not only augments the conventional PINN's capability in modeling shock waves, but also eliminates the need for spatial and temporal discretization. As such, it bypasses the complexities of numerical integration and reconstruction associated with non-convex fluxes. Finally, we show that the proposed Integrated PINN performs well on conservation laws and outperforms the vanilla PINN when tackling challenging shock problems, using examples of Burgers' equation, the Buckley-Leverett equation, and the Euler system.

Motion Comparator: Visual Comparison of Robot Motions

Jul 03, 2024

Abstract: Roboticists compare robot motions for tasks such as parameter tuning, troubleshooting, and deciding between possible motions. However, most existing visualization tools are designed for individual motions and lack the features necessary to facilitate robot motion comparison. In this paper, we utilize a rigorous design framework to develop Motion Comparator, a web-based tool that facilitates the comprehension, comparison, and communication of robot motions. Our design process identified roboticists' needs, articulated design challenges, and provided corresponding strategies. Motion Comparator includes several key features such as multi-view coordination, quaternion visualization, time warping, and comparative designs. To demonstrate the applications of Motion Comparator, we discuss four case studies in which our tool is used for motion selection, troubleshooting, parameter tuning, and motion review.

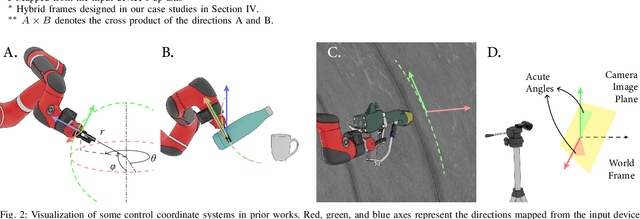

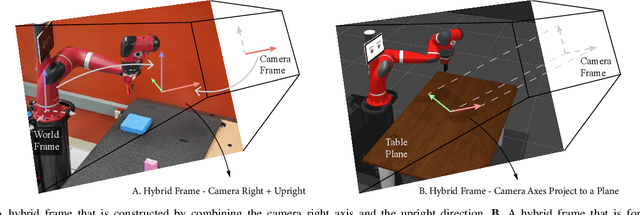

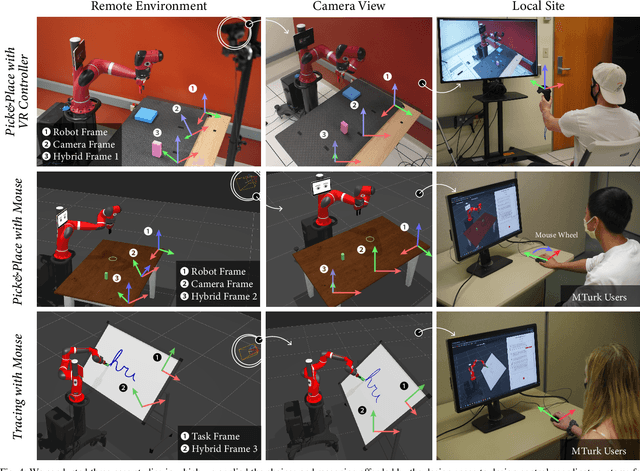

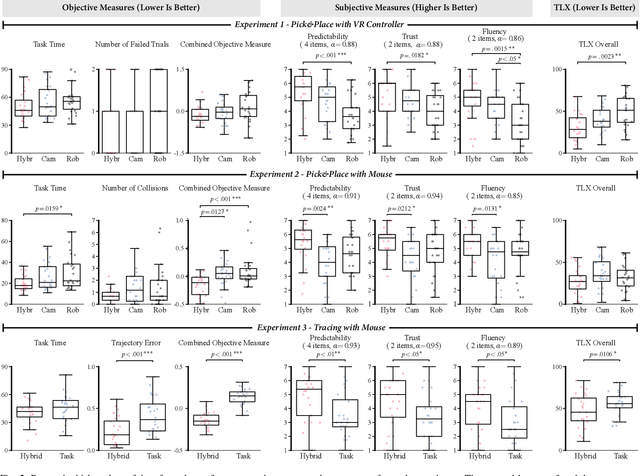

A Design Space of Control Coordinate Systems in Telemanipulation

Mar 09, 2024

Abstract: Teleoperation systems map operator commands from an input device into some coordinate frame in the remote environment. This frame, which we call a control coordinate system, should be carefully chosen as it determines how operators should move to get desired robot motions. While specific choices made by individual systems have been described in prior work, a design space, i.e., an abstraction that encapsulates the range of possible options, has not been codified. In this paper, we articulate a design space of control coordinate systems, which can be defined by choosing a direction in the remote environment for each axis of the input device. Our key insight is that there is a small set of meaningful directions in the remote environment. Control coordinate systems in prior works can be organized by the alignments of their axes with these directions, and new control coordinate systems can be designed by choosing from these directions. We also provide three design criteria to reason about the suitability of control coordinate systems for various scenarios. To demonstrate the utility of our design space, we use it to organize prior systems and design control coordinate systems for three scenarios that we assess through human-subject experiments. Our results highlight the promise of our design space as a conceptual tool to assist system designers in designing control coordinate systems that are effective and intuitive for operators.

IKLink: End-Effector Trajectory Tracking with Minimal Reconfigurations

Feb 25, 2024

Abstract: Many applications require a robot to accurately track reference end-effector trajectories. Certain trajectories may not be tracked as single, continuous paths due to the robot's kinematic constraints or obstacles elsewhere in the environment. In this situation, it becomes necessary to divide the trajectory into shorter segments. Each such division introduces a reconfiguration, in which the robot deviates from the reference trajectory, repositions itself in configuration space, and then resumes task execution. The occurrence of reconfigurations should be minimized because they increase the time and energy usage. In this paper, we present IKLink, a method for finding joint motions to track reference end-effector trajectories while executing minimal reconfigurations. Our graph-based method generates a diverse set of Inverse Kinematics (IK) solutions for every waypoint on the reference trajectory and utilizes a dynamic programming algorithm to find the globally optimal motion by linking the IK solutions. We demonstrate the effectiveness of IKLink through a simulation experiment and an illustrative demonstration using a physical robot.
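
The linking step described in the IKLink abstract can be illustrated with a small dynamic program. This is only a minimal sketch under stated assumptions, not the authors' implementation: the function names `is_continuous` and `link_ik_solutions`, the `max_joint_step` threshold, and the rule that two consecutive configurations are "linkable" when no joint moves more than that threshold are all hypothetical choices made for illustration. The candidate IK solutions are assumed to be given.

```python
from typing import List, Sequence, Tuple

Config = Tuple[float, ...]  # one joint configuration (hypothetical representation)


def is_continuous(a: Config, b: Config, max_joint_step: float) -> bool:
    """Assumed linkability test: every joint moves at most max_joint_step."""
    return max(abs(x - y) for x, y in zip(a, b)) <= max_joint_step


def link_ik_solutions(
    candidates: Sequence[Sequence[Config]], max_joint_step: float = 0.2
) -> Tuple[List[Config], int]:
    """Pick one candidate per waypoint so that the number of reconfigurations
    (consecutive pairs that are not linkable) is minimal."""
    if not candidates or any(len(layer) == 0 for layer in candidates):
        raise ValueError("every waypoint needs at least one IK candidate")

    # cost[j]: fewest reconfigurations needed to end at candidate j of the current waypoint
    cost = [0] * len(candidates[0])
    back: List[List[int]] = []  # back[i][j]: best predecessor index for waypoint i + 1

    for prev_layer, layer in zip(candidates, candidates[1:]):
        new_cost, pointers = [], []
        for q in layer:
            best_k, best_c = 0, float("inf")
            for k, p in enumerate(prev_layer):
                step = 0 if is_continuous(p, q, max_joint_step) else 1
                if cost[k] + step < best_c:
                    best_k, best_c = k, cost[k] + step
            new_cost.append(best_c)
            pointers.append(best_k)
        cost = new_cost
        back.append(pointers)

    # Backtrack from the cheapest final candidate.
    j = min(range(len(cost)), key=cost.__getitem__)
    total = cost[j]
    indices = [j]
    for pointers in reversed(back):
        j = pointers[j]
        indices.append(j)
    indices.reverse()
    return [candidates[i][idx] for i, idx in enumerate(indices)], total
```

Because each waypoint's costs depend only on the previous waypoint's costs, the program runs in time proportional to the number of waypoints times the square of the candidates per waypoint, and the result is optimal with respect to the assumed linkability test.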

Unlocking the Performance of Proximity Sensors by Utilizing Transient Histograms

Aug 25, 2023

Abstract: We provide methods that recover planar scene geometry by utilizing the transient histograms captured by a class of close-range time-of-flight (ToF) distance sensors. A transient histogram is a one-dimensional temporal waveform that encodes the arrival time of photons incident on the ToF sensor. Typically, a sensor processes the transient histogram using a proprietary algorithm to produce distance estimates, which are commonly used in several robotics applications. Our methods utilize the transient histogram directly to enable recovery of planar geometry more accurately than is possible using only proprietary distance estimates, and consistent recovery of the albedo of the planar surface, which is not possible with proprietary distance estimates alone. This is accomplished via a differentiable rendering pipeline, which simulates the transient imaging process, allowing direct optimization of scene geometry to match observations. To validate our methods, we capture 3,800 measurements of eight planar surfaces from a wide range of viewpoints, and show that our method outperforms the proprietary-distance-estimate baseline by an order of magnitude in most scenarios. We demonstrate a simple robotics application that uses our method to sense the distance to and slope of a planar surface from a sensor mounted on the end effector of a robot arm.

Exploiting Task Tolerances in Mimicry-based Telemanipulation

Jul 28, 2023

Abstract: We explore task tolerances, i.e., allowable position or rotation inaccuracy, as an important resource to facilitate smooth and effective telemanipulation. Task tolerances give a robot the flexibility to generate smooth and feasible motions; however, in teleoperation, this flexibility may make the user's control less direct. In this work, we implemented a telemanipulation system that allows a robot to autonomously adjust its configuration within task tolerances. We conducted a user study comparing a telemanipulation paradigm that exploits task tolerances (functional mimicry) to a paradigm that requires the robot to exactly mimic its human operator (exact mimicry), and assessed how the choice of paradigm shapes user experience and task performance. Our results show that autonomous adjustments within task tolerances can lead to performance improvements without sacrificing perceived control of the robot. Additionally, we find that users perceive the robot to be more under control, predictable, fluent, and trustworthy in functional mimicry than in exact mimicry.

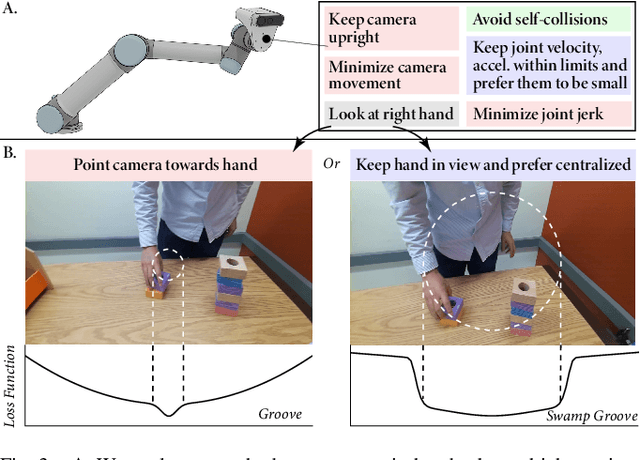

Periscope: A Robotic Camera System to Support Remote Physical Collaboration

May 12, 2023

Abstract: We investigate how robotic camera systems can offer new capabilities to computer-supported cooperative work through the design, development, and evaluation of a prototype system called Periscope. With Periscope, a local worker completes manipulation tasks with guidance from a remote helper who observes the workspace through a camera mounted on a semi-autonomous robotic arm that is co-located with the worker. Our key insight is that the helper, the worker, and the robot should all share responsibility for the camera view, an approach we call shared camera control. Using this approach, we present a set of modes that distribute the control of the camera between the human collaborators and the autonomous robot depending on task needs. We demonstrate the system's utility and the promise of shared camera control through a preliminary study in which 12 dyads collaboratively worked on assembly tasks, and we discuss design and research implications of our work for future robotic camera systems that facilitate remote collaboration.

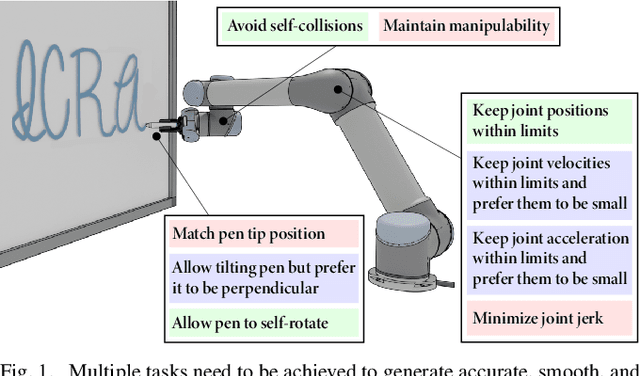

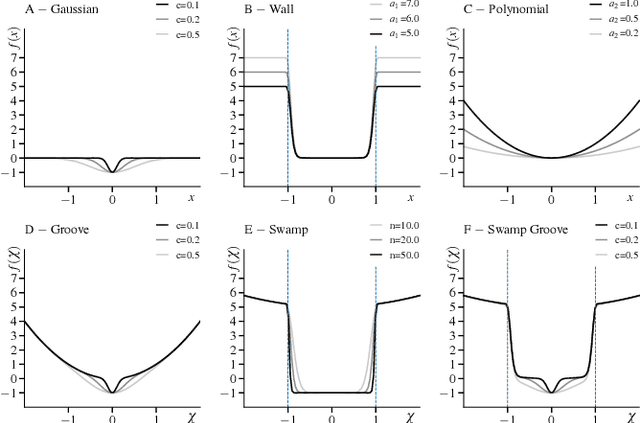

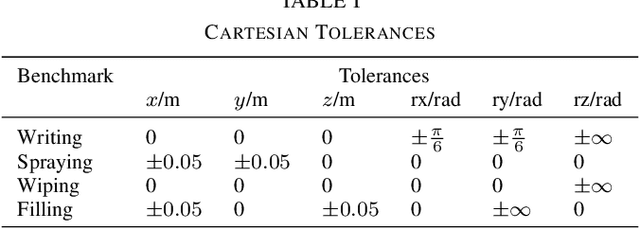

RangedIK: An Optimization-based Robot Motion Generation Method for Ranged-Goal Tasks

Feb 27, 2023

Abstract: Generating feasible robot motions in real time requires achieving multiple tasks (i.e., kinematic requirements) simultaneously. These tasks can have a specific goal, a range of equally valid goals, or a range of acceptable goals with a preference toward a specific goal. To satisfy multiple and potentially competing tasks simultaneously, it is important to exploit the flexibility afforded by tasks with a range of goals. In this paper, we propose a real-time motion generation method that accommodates all three categories of tasks within a single, unified framework and leverages the flexibility of tasks with a range of goals to accommodate other tasks. Our method incorporates tasks in a weighted-sum multiple-objective optimization structure and uses barrier methods with novel loss functions to encode the valid range of a task. We demonstrate the effectiveness of our method through a simulation experiment that compares it to state-of-the-art alternative approaches, and through a demonstration on a physical camera-in-hand robot, which shows that our method enables the robot to achieve smooth and feasible camera motions.
