Carter Sifferman

Recovering Parametric Scenes from Very Few Time-of-Flight Pixels

Sep 19, 2025

Abstract:We aim to recover the geometry of 3D parametric scenes using very few depth measurements from low-cost, commercially available time-of-flight sensors. These sensors offer very low spatial resolution (i.e., a single pixel), but image a wide field-of-view per pixel and capture detailed time-of-flight data in the form of time-resolved photon counts. This time-of-flight data encodes rich scene information and thus enables recovery of simple scenes from sparse measurements. We investigate the feasibility of using a distributed set of few measurements (e.g., as few as 15 pixels) to recover the geometry of simple parametric scenes with a strong prior, such as estimating the 6D pose of a known object. To achieve this, we design a method that utilizes both feed-forward prediction to infer scene parameters, and differentiable rendering within an analysis-by-synthesis framework to refine the scene parameter estimate. We develop hardware prototypes and demonstrate that our method effectively recovers object pose given an untextured 3D model in both simulations and controlled real-world captures, and show promising initial results for other parametric scenes. We additionally conduct experiments to explore the limits and capabilities of our imaging solution.

Efficient Detection of Objects Near a Robot Manipulator via Miniature Time-of-Flight Sensors

Sep 19, 2025

Abstract:We provide a method for detecting and localizing objects near a robot arm using arm-mounted miniature time-of-flight sensors. A key challenge when using arm-mounted sensors is differentiating between the robot itself and external objects in sensor measurements. To address this challenge, we propose a computationally lightweight method which utilizes the raw time-of-flight information captured by many off-the-shelf, low-resolution time-of-flight sensors. We build an empirical model of expected sensor measurements in the presence of the robot alone, and use this model at runtime to detect objects in proximity to the robot. In addition to avoiding robot self-detections in common sensor configurations, the proposed method enables extra flexibility in sensor placement, unlocking configurations which achieve more efficient coverage of a radius around the robot arm. Our method can detect small objects near the arm and localize the position of objects along the length of a robot link to reasonable precision. We evaluate the performance of the method with respect to object type, location, and ambient light level, and identify limiting factors on performance inherent in the measurement principle. The proposed method has potential applications in collision avoidance and in facilitating safe human-robot interaction.

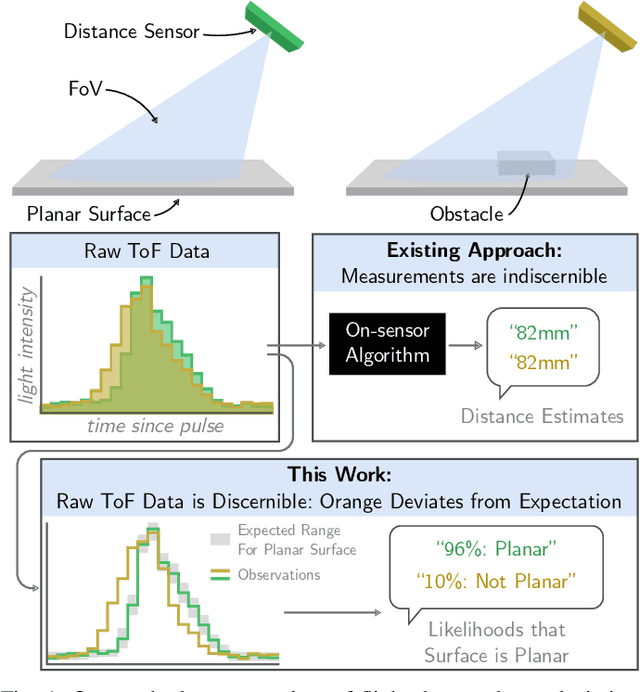

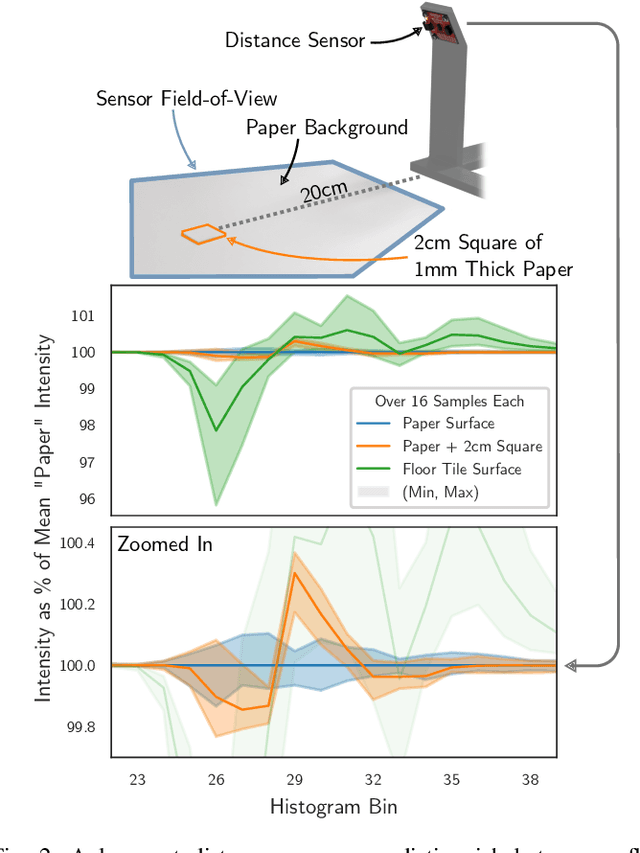

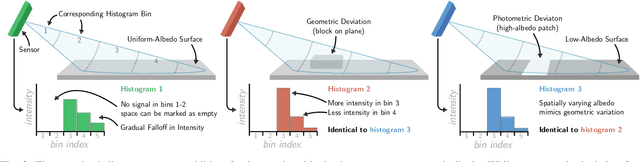

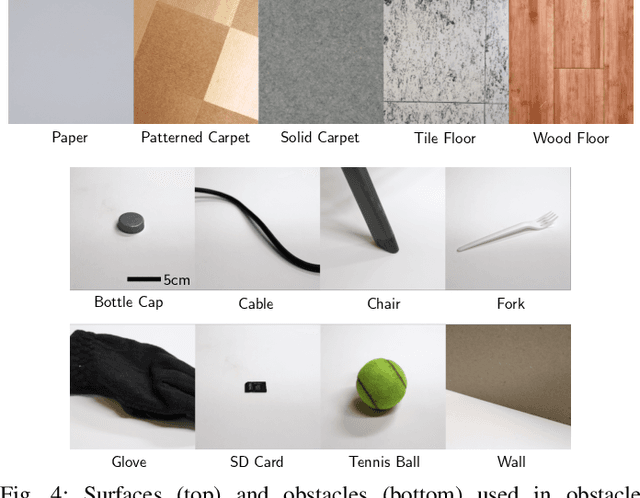

Using a Distance Sensor to Detect Deviations in a Planar Surface

Aug 07, 2024

Abstract:We investigate methods for determining if a planar surface contains geometric deviations (e.g., protrusions, objects, divots, or cliffs) using only an instantaneous measurement from a miniature optical time-of-flight sensor. The key to our method is to utilize the entirety of information encoded in raw time-of-flight data captured by off-the-shelf distance sensors. We provide an analysis of the problem in which we identify the key ambiguity between geometry and surface photometrics. To overcome this challenging ambiguity, we fit a Gaussian mixture model to a small dataset of planar surface measurements. This model implicitly captures the expected geometry and distribution of photometrics of the planar surface and is used to identify measurements that are likely to contain deviations. We characterize our method on a variety of surfaces and planar deviations across a range of scenarios. We find that our method utilizing raw time-of-flight data outperforms baselines which use only derived distance estimates. We build an example application in which our method enables mobile robot obstacle and cliff avoidance over a wide field-of-view.

Towards 3D Vision with Low-Cost Single-Photon Cameras

Mar 29, 2024

Abstract:We present a method for reconstructing 3D shape of arbitrary Lambertian objects based on measurements by miniature, energy-efficient, low-cost single-photon cameras. These cameras, operating as time resolved image sensors, illuminate the scene with a very fast pulse of diffuse light and record the shape of that pulse as it returns back from the scene at a high temporal resolution. We propose to model this image formation process, account for its non-idealities, and adapt neural rendering to reconstruct 3D geometry from a set of spatially distributed sensors with known poses. We show that our approach can successfully recover complex 3D shapes from simulated data. We further demonstrate 3D object reconstruction from real-world captures, utilizing measurements from a commodity proximity sensor. Our work draws a connection between image-based modeling and active range scanning and is a step towards 3D vision with single-photon cameras.

IKLink: End-Effector Trajectory Tracking with Minimal Reconfigurations

Feb 25, 2024

Abstract:Many applications require a robot to accurately track reference end-effector trajectories. Certain trajectories may not be tracked as single, continuous paths due to the robot's kinematic constraints or obstacles elsewhere in the environment. In this situation, it becomes necessary to divide the trajectory into shorter segments. Each such division introduces a reconfiguration, in which the robot deviates from the reference trajectory, repositions itself in configuration space, and then resumes task execution. The occurrence of reconfigurations should be minimized because they increase the time and energy usage. In this paper, we present IKLink, a method for finding joint motions to track reference end-effector trajectories while executing minimal reconfigurations. Our graph-based method generates a diverse set of Inverse Kinematics (IK) solutions for every waypoint on the reference trajectory and utilizes a dynamic programming algorithm to find the globally optimal motion by linking the IK solutions. We demonstrate the effectiveness of IKLink through a simulation experiment and an illustrative demonstration using a physical robot.

Unlocking the Performance of Proximity Sensors by Utilizing Transient Histograms

Aug 25, 2023

Abstract:We provide methods which recover planar scene geometry by utilizing the transient histograms captured by a class of close-range time-of-flight (ToF) distance sensor. A transient histogram is a one dimensional temporal waveform which encodes the arrival time of photons incident on the ToF sensor. Typically, a sensor processes the transient histogram using a proprietary algorithm to produce distance estimates, which are commonly used in several robotics applications. Our methods utilize the transient histogram directly to enable recovery of planar geometry more accurately than is possible using only proprietary distance estimates, and consistent recovery of the albedo of the planar surface, which is not possible with proprietary distance estimates alone. This is accomplished via a differentiable rendering pipeline, which simulates the transient imaging process, allowing direct optimization of scene geometry to match observations. To validate our methods, we capture 3,800 measurements of eight planar surfaces from a wide range of viewpoints, and show that our method outperforms the proprietary-distance-estimate baseline by an order of magnitude in most scenarios. We demonstrate a simple robotics application which uses our method to sense the distance to and slope of a planar surface from a sensor mounted on the end effector of a robot arm.

Exploiting Task Tolerances in Mimicry-based Telemanipulation

Jul 28, 2023

Abstract:We explore task tolerances, i.e., allowable position or rotation inaccuracy, as an important resource to facilitate smooth and effective telemanipulation. Task tolerances provide a robot flexibility to generate smooth and feasible motions; however, in teleoperation, this flexibility may make the user's control less direct. In this work, we implemented a telemanipulation system that allows a robot to autonomously adjust its configuration within task tolerances. We conducted a user study comparing a telemanipulation paradigm that exploits task tolerances (functional mimicry) to a paradigm that requires the robot to exactly mimic its human operator (exact mimicry), and assess how the choice in paradigm shapes user experience and task performance. Our results show that autonomous adjustments within task tolerances can lead to performance improvements without sacrificing perceived control of the robot. Additionally, we find that users perceive the robot to be more under control, predictable, fluent, and trustworthy in functional mimicry than in exact mimicry.
