Shaobing Xu

CTS-CBS: A New Approach for Multi-Agent Collaborative Task Sequencing and Path Finding

Mar 26, 2025

Abstract:This paper addresses a generalization of Multi-Agent Pathfinding (MAPF), called Collaborative Task Sequencing - Multi-Agent Pathfinding (CTS-MAPF), where agents must plan collision-free paths and visit a series of intermediate task locations in a specific order before reaching their final destinations. To address this problem, we propose a new approach, Collaborative Task Sequencing - Conflict-Based Search (CTS-CBS), which conducts a two-level search. In the high level, it generates a search forest, where each tree corresponds to a joint task sequence derived from the jTSP solution. In the low level, CTS-CBS performs constrained single-agent path planning to generate paths for each agent while adhering to high-level constraints. We also provide theoretical guarantees of its completeness and optimality (or sub-optimality with a bounded parameter). To evaluate the performance of CTS-CBS, we create two datasets, CTS-MAPF and MG-MAPF, and conduct comprehensive experiments. The results show that CTS-CBS adaptations for MG-MAPF outperform baseline algorithms in terms of success rate (up to 20 times higher) and runtime (up to 100 times faster), with less than a 10% sacrifice in solution quality. Furthermore, CTS-CBS offers flexibility by allowing users to adjust the sub-optimality bound omega to balance solution quality against efficiency. Finally, practical robot tests demonstrate the algorithm's applicability in real-world scenarios.
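The abstract does not spell out how collisions between agents are found, so the following is only a minimal sketch of the conflict check that Conflict-Based Search style algorithms typically run on a set of single-agent paths before adding constraints. The grid-cell path representation, the wait-at-goal assumption, and the names `position_at` and `first_conflict` are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def position_at(path: List[Cell], t: int) -> Cell:
    # Assumption: an agent waits at its last cell once its path has ended.
    return path[min(t, len(path) - 1)]


def first_conflict(path_a: List[Cell], path_b: List[Cell]) -> Optional[Tuple[str, int, Cell]]:
    """Return the earliest (kind, timestep, cell_of_agent_a) conflict, or None."""
    horizon = max(len(path_a), len(path_b))
    for t in range(horizon):
        a_now, b_now = position_at(path_a, t), position_at(path_b, t)
        # Vertex conflict: both agents occupy the same cell at time t.
        if a_now == b_now:
            return ("vertex", t, a_now)
        a_next, b_next = position_at(path_a, t + 1), position_at(path_b, t + 1)
        # Edge conflict: the agents swap cells between t and t + 1.
        if a_now == b_next and b_now == a_next:
            return ("edge", t, a_now)
    return None
```

In a CBS-style high level, a returned conflict would be split into two child nodes, each forbidding one of the agents from using the reported cell (or edge) at that timestep, after which the low level replans only the constrained agent.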

Griffin: Aerial-Ground Cooperative Detection and Tracking Dataset and Benchmark

Mar 10, 2025

Abstract:Despite significant advancements, autonomous driving systems continue to struggle with occluded objects and long-range detection due to the inherent limitations of single-perspective sensing. Aerial-ground cooperation offers a promising solution by integrating UAVs' aerial views with ground vehicles' local observations. However, progress in this emerging field has been hindered by the absence of public datasets and standardized evaluation benchmarks. To address this gap, this paper presents a comprehensive solution for aerial-ground cooperative 3D perception through three key contributions: (1) Griffin, a large-scale multi-modal dataset featuring over 200 dynamic scenes (30k+ frames) with varied UAV altitudes (20-60m), diverse weather conditions, and occlusion-aware 3D annotations, enhanced by CARLA-AirSim co-simulation for realistic UAV dynamics; (2) A unified benchmarking framework for aerial-ground cooperative detection and tracking tasks, including protocols for evaluating communication efficiency, latency tolerance, and altitude adaptability; (3) AGILE, an instance-level intermediate fusion baseline that dynamically aligns cross-view features through query-based interaction, achieving an advantageous balance between communication overhead and perception accuracy. Extensive experiments prove the effectiveness of aerial-ground cooperative perception and demonstrate the direction of further research. The dataset and codes are available at https://github.com/wang-jh18-SVM/Griffin.

RINO: Accurate, Robust Radar-Inertial Odometry with Non-Iterative Estimation

Nov 12, 2024

Abstract:Precise localization and mapping are critical for achieving autonomous navigation in self-driving vehicles. However, ego-motion estimation still faces significant challenges, particularly when GNSS failures occur or under extreme weather conditions (e.g., fog, rain, and snow). In recent years, scanning radar has emerged as an effective solution due to its strong penetration capabilities. Nevertheless, scanning radar data inherently contains high levels of noise, necessitating hundreds to thousands of iterations of optimization to estimate a reliable transformation from the noisy data. Such iterative solving is time-consuming, unstable, and prone to failure. To address these challenges, we propose an accurate and robust Radar-Inertial Odometry system, RINO, which employs a non-iterative solving approach. Our method decouples rotation and translation estimation and applies an adaptive voting scheme for 2D rotation estimation, enhancing efficiency while ensuring consistent solving time. Additionally, the approach implements a loosely coupled system between the scanning radar and an inertial measurement unit (IMU), leveraging Error-State Kalman Filtering (ESKF). Notably, we successfully estimated the uncertainty of the pose estimation from the scanning radar, incorporating this into the filter's Maximum A Posteriori estimation, a consideration that has been previously overlooked. Validation on publicly available datasets demonstrates that RINO outperforms state-of-the-art methods and baselines in both accuracy and robustness. Our code is available at https://github.com/yangsc4063/rino.
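The abstract mentions decoupling rotation from translation and estimating the 2D rotation by voting, without giving details. As a rough illustration of that general idea only, the sketch below votes over a fixed-width angle histogram using differences between point pairs, which cancel any common translation. The fixed bin width, the assumption of known point correspondences, and the name `vote_rotation` are all assumptions made here; RINO's adaptive scheme is not reproduced.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def vote_rotation(src: List[Point], dst: List[Point], bin_deg: float = 1.0) -> float:
    """Estimate the rotation (degrees, in [0, 360)) taking src to dst by histogram voting."""
    n_bins = int(round(360.0 / bin_deg))
    votes = [0] * n_bins
    for i in range(len(src)):
        for j in range(i + 1, len(src)):
            # Pairwise differences remove the unknown translation.
            sx, sy = src[j][0] - src[i][0], src[j][1] - src[i][1]
            dx, dy = dst[j][0] - dst[i][0], dst[j][1] - dst[i][1]
            if math.hypot(sx, sy) < 1e-9 or math.hypot(dx, dy) < 1e-9:
                continue  # a degenerate pair carries no direction information
            delta = math.degrees(math.atan2(dy, dx) - math.atan2(sy, sx)) % 360.0
            # Vote for the nearest bin; wrong correspondences scatter across bins.
            votes[int(round(delta / bin_deg)) % n_bins] += 1
    best = max(range(n_bins), key=votes.__getitem__)
    return best * bin_deg
```

Because the histogram is filled in a single pass, the cost depends only on the number of point pairs, which is one way a voting step can keep solving time consistent compared with an iterative optimizer.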

CSDO: Enhancing Efficiency and Success in Large-Scale Multi-Vehicle Trajectory Planning

May 31, 2024

Abstract:This paper presents an efficient algorithm, named Centralized Searching and Decentralized Optimization (CSDO), to find feasible solutions for the large-scale Multi-Vehicle Trajectory Planning (MVTP) problem. Due to the intractable growth of non-convex constraints with the number of agents, exploring various homotopy classes, which imply different convex domains, is crucial for finding a feasible solution. However, existing methods struggle to explore various homotopy classes efficiently because they combine this exploration with the time-consuming search for a precise trajectory solution. CSDO addresses this limitation by separating the two into different levels and integrating an efficient Multi-Agent Path Finding (MAPF) algorithm to search homotopy classes. It first searches for a coarse initial guess using a large search step, identifying a specific homotopy class. A subsequent decentralized Quadratic Programming (QP) refinement processes this guess, resolving minor collisions efficiently. Experimental results demonstrate that CSDO outperforms existing MVTP algorithms in large-scale, high-density scenarios, achieving up to a 95% success rate in 50m $\times$ 50m random scenarios in around one second. Source code is released at https://github.com/YangSVM/CSDOTrajectoryPlanning.

DenserRadar: A 4D millimeter-wave radar point cloud detector based on dense LiDAR point clouds

May 08, 2024

Abstract:The 4D millimeter-wave (mmWave) radar, with its robustness in extreme environments, extensive detection range, and capabilities for measuring velocity and elevation, has demonstrated significant potential for enhancing the perception abilities of autonomous driving systems in corner-case scenarios. Nevertheless, the inherent sparsity and noise of 4D mmWave radar point clouds restrict its further development and practical application. In this paper, we introduce a novel 4D mmWave radar point cloud detector, which leverages high-resolution dense LiDAR point clouds. Our approach constructs dense 3D occupancy ground truth from stitched LiDAR point clouds, and employs a specially designed network named DenserRadar. The proposed method surpasses existing probability-based and learning-based radar point cloud detectors in terms of both point cloud density and accuracy on the K-Radar dataset.

PreGSU-A Generalized Traffic Scene Understanding Model for Autonomous Driving based on Pre-trained Graph Attention Network

Apr 16, 2024

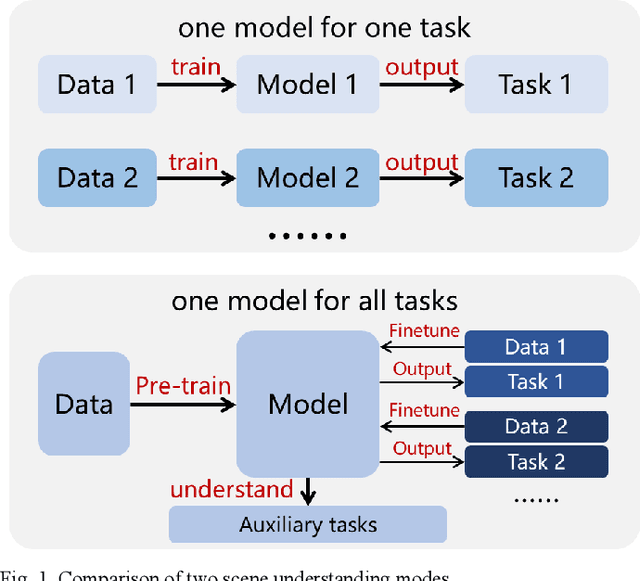

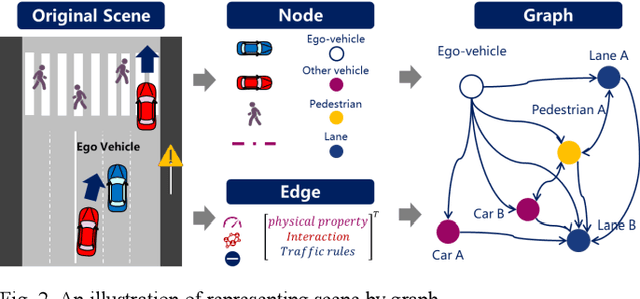

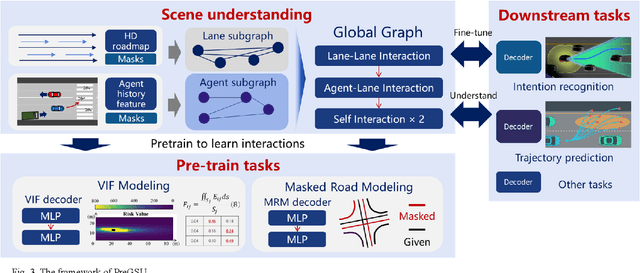

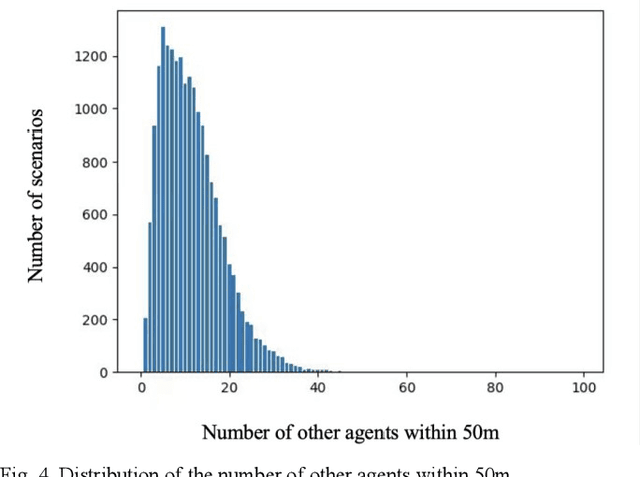

Abstract:Scene understanding, defined as learning, extraction, and representation of interactions among traffic elements, is one of the critical challenges toward high-level autonomous driving (AD). Current scene understanding methods mainly focus on a single concrete task, such as trajectory prediction or risk level evaluation. Although they perform well on specific metrics, their generalization ability is insufficient to adapt to real traffic complexity and the diversity of downstream demands. In this study, we propose PreGSU, a generalized pre-trained scene understanding model based on a graph attention network, to learn the universal interaction and reasoning of traffic scenes and support various downstream tasks. After the feature engineering and sub-graph module, all elements are embedded as nodes to form a dynamic weighted graph. Then, four graph attention layers are applied to learn the relationships among agents and lanes. In the pre-training phase, the understanding model is trained on two self-supervised tasks: Virtual Interaction Force (VIF) modeling and Masked Road Modeling (MRM). Based on the artificial potential field theory, VIF modeling enables PreGSU to capture the agent-to-agent interactions, while MRM extracts agent-to-road connections. In the fine-tuning process, the pre-trained parameters are loaded to derive detailed understanding outputs. We conduct validation experiments on two downstream tasks, i.e., trajectory prediction in urban scenarios and intention recognition in highway scenarios, to verify the generalization ability and understanding ability. Results show that, compared with the baselines, PreGSU achieves better accuracy on both tasks, indicating the potential to be generalized to various scenes and targets. An ablation study shows the effectiveness of the pre-training task design.

UniLiDAR: Bridge the domain gap among different LiDARs for continual learning

Mar 13, 2024

Abstract:LiDAR-based 3D perception algorithms have evolved rapidly alongside the emergence of large datasets. Nonetheless, considerable performance degradation often ensues when models trained on a specific dataset are applied to other datasets or real-world scenarios with different LiDARs. This paper aims to develop a unified model capable of handling different LiDARs, enabling continual learning across diverse LiDAR datasets and seamless deployment across heterogeneous platforms. We observe that the gaps among datasets primarily manifest in geometric disparities (such as variations in beams and point counts) and semantic inconsistencies (taxonomy conflicts). To this end, this paper proposes UniLiDAR, an occupancy prediction pipeline that leverages geometric realignment and semantic label mapping to facilitate multi-dataset training and mitigate performance degradation during deployment on heterogeneous platforms. Moreover, our method can be easily combined with existing 3D perception models. The efficacy of the proposed approach in bridging LiDAR domain gaps is verified by comprehensive experiments on two prominent datasets: OpenOccupancy-nuScenes and SemanticKITTI. UniLiDAR elevates the mIoU of occupancy prediction by 15.7% and 12.5%, respectively, compared to the model trained on the directly merged dataset. Moreover, it outperforms several SOTA methods trained on individual datasets. We expect our research to facilitate further study of 3D generalization; the code will be available soon.

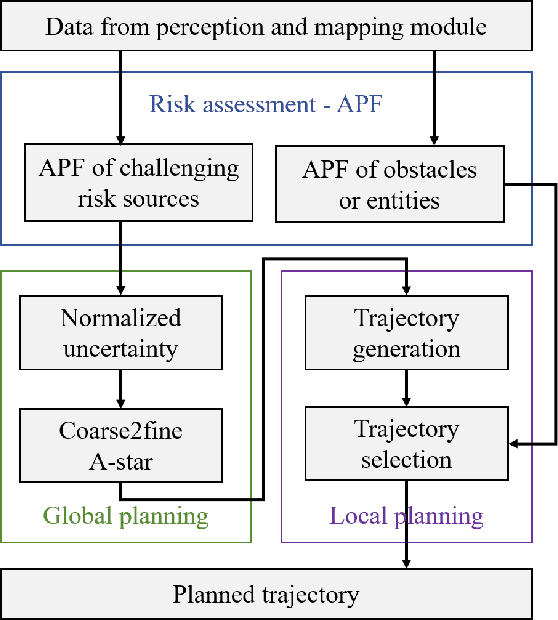

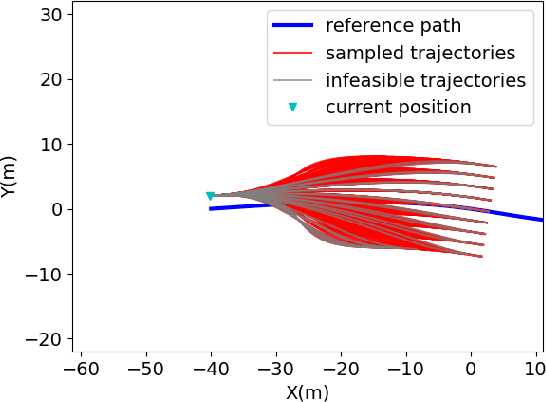

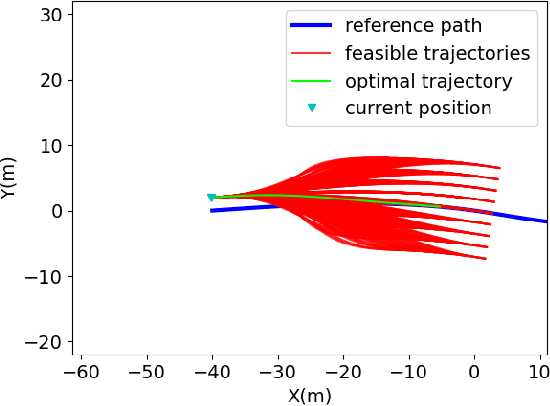

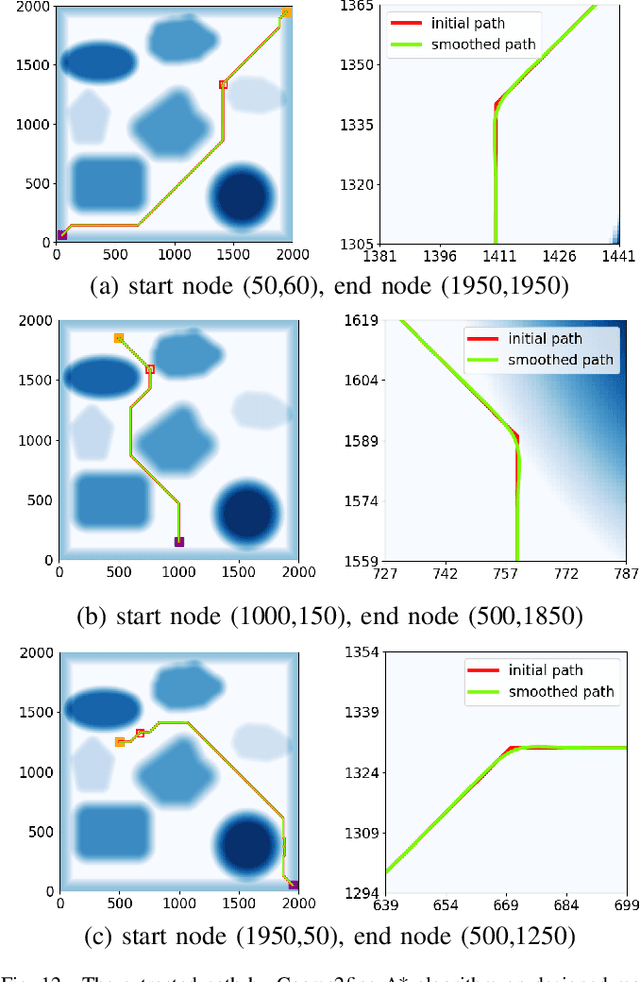

A Risk-aware Planning Framework of UGVs in Off-Road Environment

Feb 04, 2024

Abstract:The planning module is an essential component of intelligent vehicle research. In this paper, we address the risk-aware planning problem of UGVs through a global-local planning framework which seamlessly integrates risk assessment methods. In particular, a global planning algorithm named Coarse2fine A* is proposed, which incorporates a potential field approach to enhance the safety of the planning results while ensuring the efficiency of the algorithm. A deterministic sampling method for local planning is leveraged and modified to suit off-road environments. It also integrates a risk assessment model to emphasize the avoidance of local risks. The performance of the algorithm is demonstrated through simulation experiments by comparing it with baseline algorithms, where the results of Coarse2fine A* are shown to be approximately 30% safer than those of the baseline algorithms. The practicality and effectiveness of the proposed planning framework are validated by deploying it on a real-world system consisting of a control center and a practical UGV platform.
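The abstract does not describe how Coarse2fine A* combines search with the potential field, so the sketch below only illustrates the broader idea of risk-aware global planning: an ordinary grid A* whose step cost is inflated by a per-cell risk value. The 4-connected grid, the non-negative `risk` map, the `risk_weight` parameter, and the function name are assumptions introduced for illustration; the coarse-to-fine strategy itself is not modeled.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def risk_aware_astar(risk: List[List[float]], start: Cell, goal: Cell,
                     risk_weight: float = 5.0) -> Optional[List[Cell]]:
    """Grid A* where entering a cell costs 1 + risk_weight * risk[cell] (risk >= 0)."""
    rows, cols = len(risk), len(risk[0])

    def heuristic(cell: Cell) -> float:
        # Manhattan distance is admissible because every step costs at least 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0.0, start)]
    best_cost: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in parent:  # walk back to the start
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already expanded
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if risk[nr][nc] == float("inf"):
                continue  # treat infinite risk as an impassable obstacle
            new_cost = cost + 1.0 + risk_weight * risk[nr][nc]
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

Raising `risk_weight` trades path length for safety: with a weight of zero the planner returns a shortest path, while larger weights push the path around risky cells.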

A Survey on Datasets for Decision-making of Autonomous Vehicle

Jun 29, 2023

Abstract:Autonomous vehicles (AV) are expected to reshape future transportation systems, and decision-making is one of the critical modules toward high-level automated driving. To handle complicated scenarios that rule-based methods cannot cope with well, data-driven decision-making approaches have attracted increasing attention. The datasets used in developing data-driven methods dramatically influence the performance of decision-making, hence it is necessary to have a comprehensive insight into the existing datasets. In terms of collection sources, driving data can be divided into vehicle-, environment-, and driver-related data. This study compares the state-of-the-art datasets of these three categories and summarizes their features, including sensors used, annotation, and driving scenarios. Based on the characteristics of the datasets, this survey also identifies their potential applications in various aspects of AV decision-making, helping researchers find appropriate ones to support their own research. The future trends of AV dataset development are summarized.

4D Millimeter-Wave Radar in Autonomous Driving: A Survey

Jun 14, 2023

Abstract:The 4D millimeter-wave (mmWave) radar, capable of measuring the range, azimuth, elevation, and velocity of targets, has attracted considerable interest in the autonomous driving community. This is attributed to its robustness in extreme environments and outstanding velocity and elevation measurement capabilities. However, despite the rapid development of research related to its sensing theory and application, there is a notable lack of surveys on the topic of 4D mmWave radar. To address this gap and foster future research in this area, this paper presents a comprehensive survey on the use of 4D mmWave radar in autonomous driving. The theoretical background and progress of 4D mmWave radars are reviewed first, including the signal processing flow, resolution improvement methods, extrinsic calibration process, and point cloud generation methods. Related datasets and application algorithms in autonomous driving perception, localization, and mapping tasks are then introduced. Finally, this paper concludes by predicting future trends in the field of 4D mmWave radar. To the best of our knowledge, this is the first survey specifically for the 4D mmWave radar.
