Seyedali Mirjalili

Thinking Beyond Tokens: From Brain-Inspired Intelligence to Cognitive Foundations for Artificial General Intelligence and its Societal Impact

Jul 01, 2025

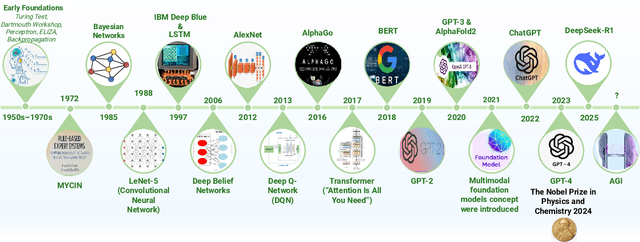

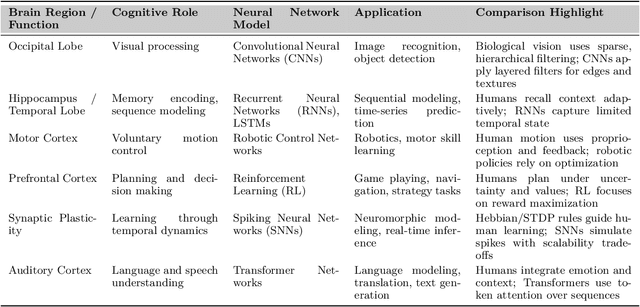

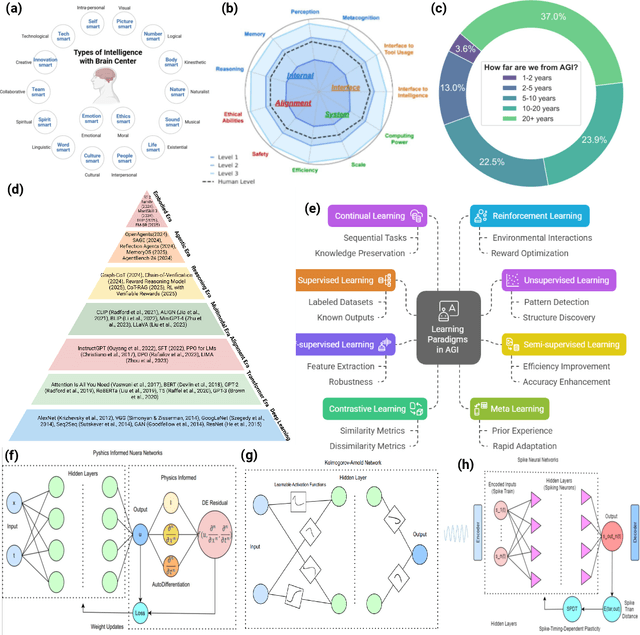

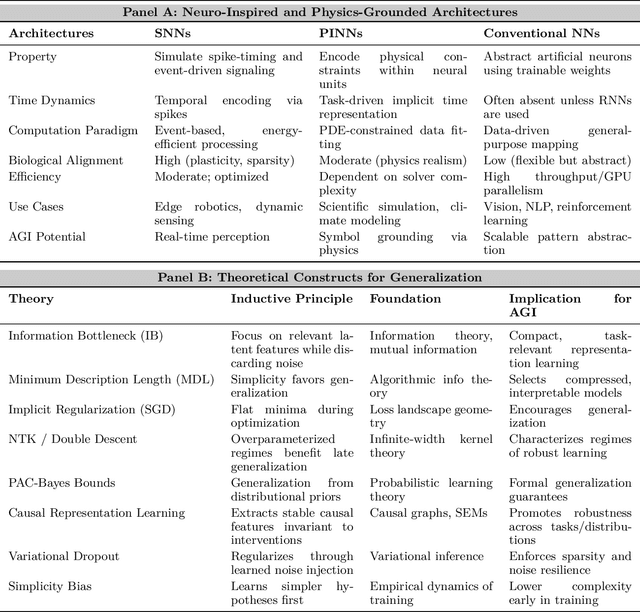

Abstract: Can machines truly think, reason and act in domains like humans? This enduring question continues to shape the pursuit of Artificial General Intelligence (AGI). Despite the growing capabilities of models such as GPT-4.5, DeepSeek, Claude 3.5 Sonnet, Phi-4, and Grok 3, which exhibit multimodal fluency and partial reasoning, these systems remain fundamentally limited by their reliance on token-level prediction and lack of grounded agency. This paper offers a cross-disciplinary synthesis of AGI development, spanning artificial intelligence, cognitive neuroscience, psychology, generative models, and agent-based systems. We analyze the architectural and cognitive foundations of general intelligence, highlighting the role of modular reasoning, persistent memory, and multi-agent coordination. In particular, we emphasize the rise of Agentic RAG frameworks that combine retrieval, planning, and dynamic tool use to enable more adaptive behavior. We discuss generalization strategies, including information compression, test-time adaptation, and training-free methods, as critical pathways toward flexible, domain-agnostic intelligence. Vision-Language Models (VLMs) are reexamined not just as perception modules but as evolving interfaces for embodied understanding and collaborative task completion. We also argue that true intelligence arises not from scale alone but from the integration of memory and reasoning: an orchestration of modular, interactive, and self-improving components where compression enables adaptive behavior. Drawing on advances in neurosymbolic systems, reinforcement learning, and cognitive scaffolding, we explore how recent architectures begin to bridge the gap between statistical learning and goal-directed cognition. Finally, we identify key scientific, technical, and ethical challenges on the path to AGI.

Smart Buildings Energy Consumption Forecasting using Adaptive Evolutionary Ensemble Learning Models

Jun 13, 2025

Abstract: Smart buildings are gaining popularity because they can enhance energy efficiency, lower costs, improve security, and provide a more comfortable and convenient environment for building occupants. The building sector consumes a considerable portion of the global energy supply and plays a pivotal role in future decarbonization pathways. To manage energy consumption and improve energy efficiency in smart buildings, reliable and accurate energy demand forecasting is crucial. However, developing an effective predictive model for the total energy use of appliances at the building level is challenging because of temporal oscillations and complex linear and non-linear patterns. This paper proposes three hybrid ensemble predictive models, incorporating Bagging, Stacking, and Voting mechanisms combined with a fast and effective evolutionary hyper-parameter tuner. The performance of the proposed energy forecasting models was evaluated using a hybrid dataset comprising meteorological parameters, appliance energy use, temperature, humidity, and lighting energy consumption from various sections of a building, collected by 18 sensors located in Stambroek, Mons, Belgium. To provide a comparative framework and investigate the efficiency of the proposed predictive models, 15 popular machine learning (ML) models, including two classic ML models, three neural networks (NNs), a Decision Tree (DT), a Random Forest (RF), two Deep Learning (DL) models, and six Ensemble models, were compared. The prediction results indicate that the adaptive evolutionary bagging model surpassed other predictive models in both accuracy and learning error. Notably, it achieved accuracy gains of 12.6%, 13.7%, 12.9%, 27.04%, and 17.4% compared to Extreme Gradient Boosting (XGB), Categorical Boosting (CatBoost), GBM, LGBM, and Random Forest (RF), respectively.

Hybrid Wave-wind System Power Optimisation Using Effective Ensemble Covariance Matrix Adaptation Evolutionary Algorithm

May 28, 2025

Abstract: Floating hybrid wind-wave systems combine offshore wind platforms with wave energy converters (WECs) to create cost-effective and reliable energy solutions. Adequately designed and tuned WECs are essential to avoid unwanted loads disrupting turbine motion while efficiently harvesting wave energy. These systems diversify energy sources, enhancing energy security and reducing supply risks while providing a more consistent power output by smoothing energy production variability. However, optimising such systems is complex due to the physical and hydrodynamic interactions between components, resulting in a challenging optimisation space. This study uses a 5-MW OC4-DeepCwind semi-submersible platform with three spherical WECs to explore these synergies. To address these challenges, we propose an effective ensemble optimisation (EEA) technique that combines covariance matrix adaptation, novelty search, and discretisation techniques. To evaluate the EEA performance, we used four sea sites located along Australia's southern coast. In this framework, geometry and power take-off parameters are simultaneously optimised to maximise the average power output of the hybrid wind-wave system. Ensemble optimisation methods enhance performance, flexibility, and robustness by identifying the best algorithm or combination of algorithms for a given problem, addressing issues like premature convergence, stagnation, and poor search space exploration. The EEA was benchmarked against 14 advanced optimisation methods, demonstrating superior solution quality and convergence rates. EEA improved total power output by 111%, 95%, and 52% compared to WOA, EO, and AHA, respectively. Additionally, in comparisons with advanced methods, LSHADE, SaNSDE, and SLPSO, EEA achieved absorbed power enhancements of 498%, 638%, and 349% at the Sydney sea site, showcasing its effectiveness in optimising hybrid energy systems.

Artificial Protozoa Optimizer (APO): A novel bio-inspired metaheuristic algorithm for engineering optimization

May 06, 2025

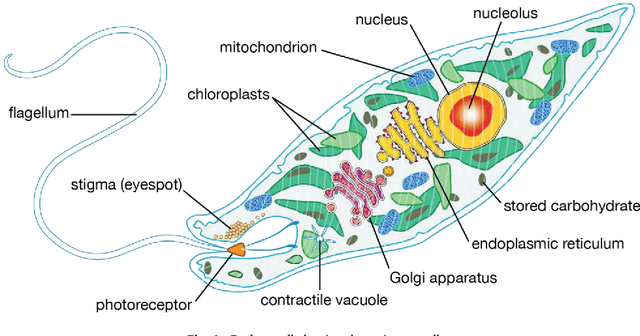

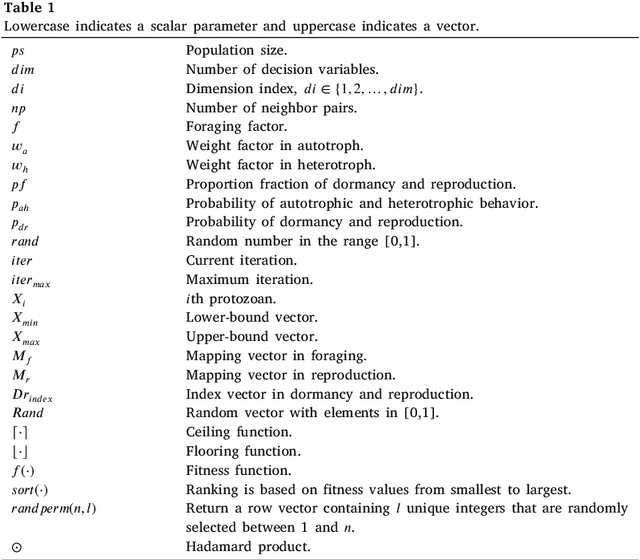

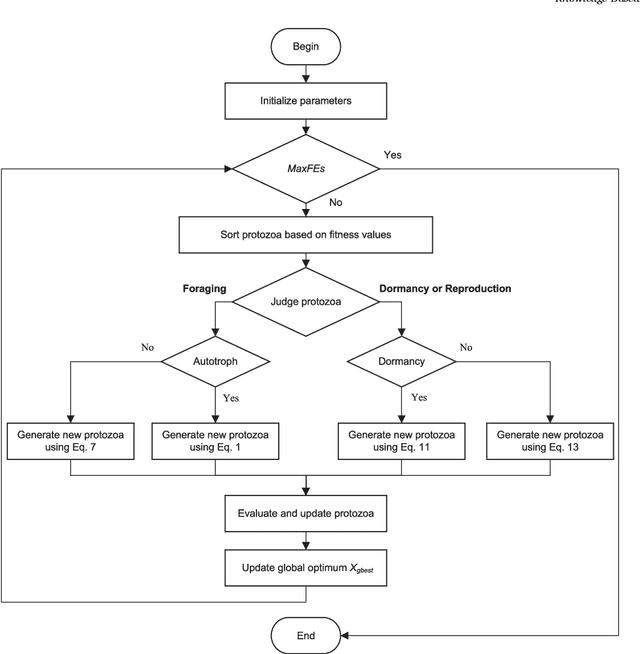

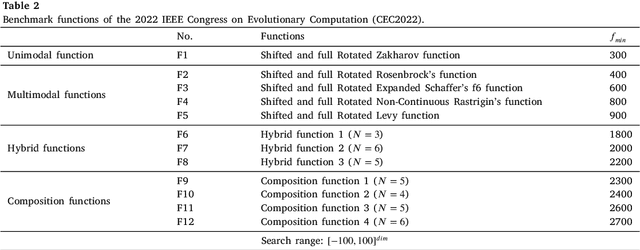

Abstract: This study proposes a novel artificial protozoa optimizer (APO) that is inspired by protozoa in nature. The APO mimics the survival mechanisms of protozoa by simulating their foraging, dormancy, and reproductive behaviors. The APO was mathematically modeled and implemented to perform the optimization processes of metaheuristic algorithms. The performance of the APO was verified via experimental simulations and compared with 32 state-of-the-art algorithms. The Wilcoxon signed-rank test was performed for pairwise comparisons of the proposed APO with the state-of-the-art algorithms, and the Friedman test was used for multiple comparisons. First, the APO was tested using 12 functions of the 2022 IEEE Congress on Evolutionary Computation benchmark. Considering practicality, the proposed APO was used to solve five popular engineering design problems in a continuous space with constraints. Moreover, the APO was applied to solve a multilevel image segmentation task in a discrete space with constraints. The experiments confirmed that the APO could provide highly competitive results for optimization problems. The source code of the Artificial Protozoa Optimizer is publicly available at https://seyedalimirjalili.com/projects and https://ww2.mathworks.cn/matlabcentral/fileexchange/162656-artificial-protozoa-optimizer.

MOANA: Multi-Objective Ant Nesting Algorithm for Optimization Problems

Nov 08, 2024

Abstract: This paper presents the Multi-Objective Ant Nesting Algorithm (MOANA), a novel extension of the Ant Nesting Algorithm (ANA), specifically designed to address multi-objective optimization problems (MOPs). MOANA incorporates adaptive mechanisms, such as deposition weight parameters, to balance exploration and exploitation, while a polynomial mutation strategy ensures diverse and high-quality solutions. The algorithm is evaluated on standard benchmark datasets, including ZDT functions and the IEEE Congress on Evolutionary Computation (CEC) 2019 multi-modal benchmarks. Comparative analysis against state-of-the-art algorithms like MOPSO, MOFDO, MODA, and NSGA-III demonstrates MOANA's superior performance in terms of convergence speed and Pareto front coverage. Furthermore, MOANA's applicability to real-world engineering optimization, such as welded beam design, showcases its ability to generate a broad range of optimal solutions, making it a practical tool for decision-makers. MOANA addresses key limitations of traditional evolutionary algorithms by improving scalability and diversity in multi-objective scenarios, positioning it as a robust solution for complex optimization tasks.
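The abstract mentions a polynomial mutation strategy without giving its form. As a rough illustration only, the sketch below shows the commonly used basic polynomial mutation for one real-valued variable. The function name, the distribution index `eta=20.0`, and the clipping to bounds are assumptions made for this example and are not taken from the MOANA paper.

```python
import random


def polynomial_mutation(x, lower, upper, eta=20.0, rng=random):
    """Basic polynomial mutation of one real-valued variable (illustrative).

    `eta` is the distribution index: larger values keep the mutated value
    closer to `x`. `rng` is any object exposing a `random()` method.
    """
    u = rng.random()
    if u < 0.5:
        # Perturbation towards the lower bound, in [-1, 0).
        delta = (2.0 * u) ** (1.0 / (eta + 1.0)) - 1.0
    else:
        # Perturbation towards the upper bound, in [0, 1].
        delta = 1.0 - (2.0 * (1.0 - u)) ** (1.0 / (eta + 1.0))
    mutated = x + delta * (upper - lower)
    # Clip so the result stays inside the variable's bounds.
    return min(max(mutated, lower), upper)
```

A call such as `polynomial_mutation(0.5, 0.0, 1.0)` returns a value in `[0.0, 1.0]` that is usually close to `0.5` when `eta` is large.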

Predicting the Stay Length of Patients in Hospitals using Convolutional Gated Recurrent Deep Learning Model

Sep 26, 2024

Abstract: Predicting hospital length of stay (LoS) is a critical factor in shaping public health strategies. This information serves as a cornerstone for governments to discern trends, patterns, and avenues for enhancing healthcare delivery. In this study, we introduce a robust hybrid deep learning model, combining multi-layer Convolutional Neural Networks (CNNs), Gated Recurrent Units (GRUs), and Dense Neural Networks (DNNs), that outperforms 11 conventional and state-of-the-art Machine Learning (ML) and Deep Learning (DL) methodologies in accurately forecasting inpatient hospital stay duration. Our investigation delves into the implementation of this hybrid model, scrutinising variables like geographic indicators tied to caregiving institutions, demographic markers encompassing patient ethnicity, race, and age, as well as medical attributes such as the CCS diagnosis code, APR DRG code, illness severity metrics, and hospital stay duration. Statistical evaluations show that our proposed model (CNN-GRU-DNN) achieves the highest LoS accuracy, averaging 89% across a 10-fold cross-validation test and surpassing LSTM, BiLSTM, GRU, and Convolutional Neural Networks (CNNs) by 19%, 18.2%, 18.6%, and 7%, respectively. Accurate LoS predictions not only empower hospitals to optimise resource allocation and curb expenses associated with prolonged stays but also pave the way for novel strategies in hospital stay management. This avenue holds promise for catalysing advancements in healthcare research and innovation, inspiring a new era of precision-driven healthcare practices.

Hybrid Inception Architecture with Residual Connection: Fine-tuned Inception-ResNet Deep Learning Model for Lung Inflammation Diagnosis from Chest Radiographs

Oct 05, 2023

Abstract: Diagnosing lung inflammation, particularly pneumonia, is of paramount importance for effectively treating and managing the disease. Pneumonia is a common respiratory infection caused by bacteria, viruses, or fungi and can indiscriminately affect people of all ages. As highlighted by the World Health Organization (WHO), this prevalent disease tragically accounts for a substantial 15% of global mortality in children under five years of age. This article presents a comparative study of the Inception-ResNet deep learning model's performance in diagnosing pneumonia from chest radiographs. The study leverages Mendeley's chest X-ray images dataset, which contains 5856 2D images, including both Viral and Bacterial Pneumonia X-ray images. The Inception-ResNet model is compared with seven other state-of-the-art convolutional neural networks (CNNs), and the experimental results demonstrate the Inception-ResNet model's superiority in extracting essential features and saving computation runtime. Furthermore, we examine the impact of transfer learning with fine-tuning in improving the performance of deep convolutional models. This study provides valuable insights into using deep learning models for pneumonia diagnosis and highlights the potential of the Inception-ResNet model in this field. In classification accuracy, Inception-ResNet-V2 showed superior performance compared to other models, including ResNet152V2, MobileNet-V3 (Large and Small), EfficientNetV2 (Large and Small), InceptionV3, and NASNet-Mobile, with substantial margins. It outperformed them by 2.6%, 6.5%, 7.1%, 13%, 16.1%, 3.9%, and 1.6%, respectively, demonstrating its significant advantage in accurate classification.

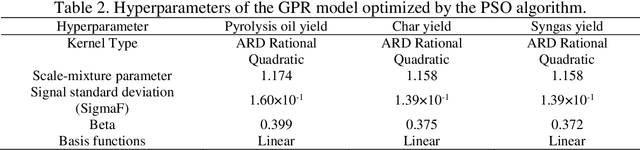

Using evolutionary machine learning to characterize and optimize co-pyrolysis of biomass feedstocks and polymeric wastes

May 24, 2023

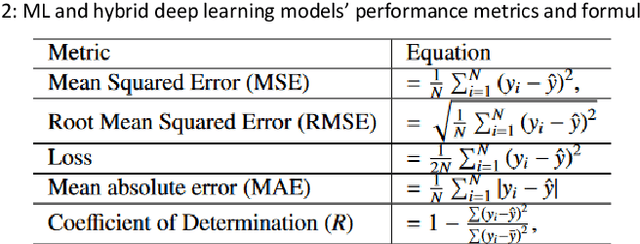

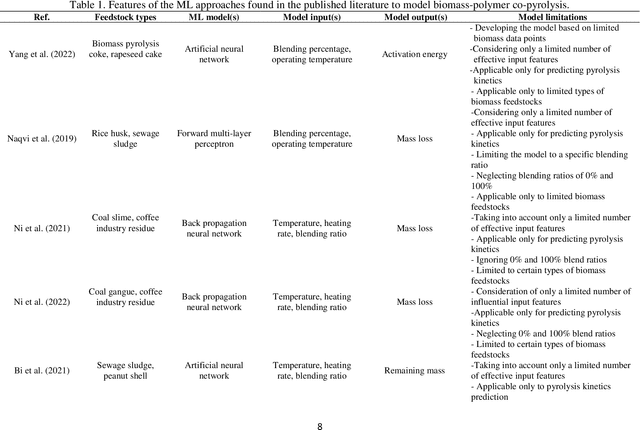

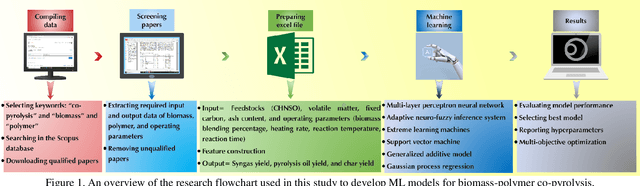

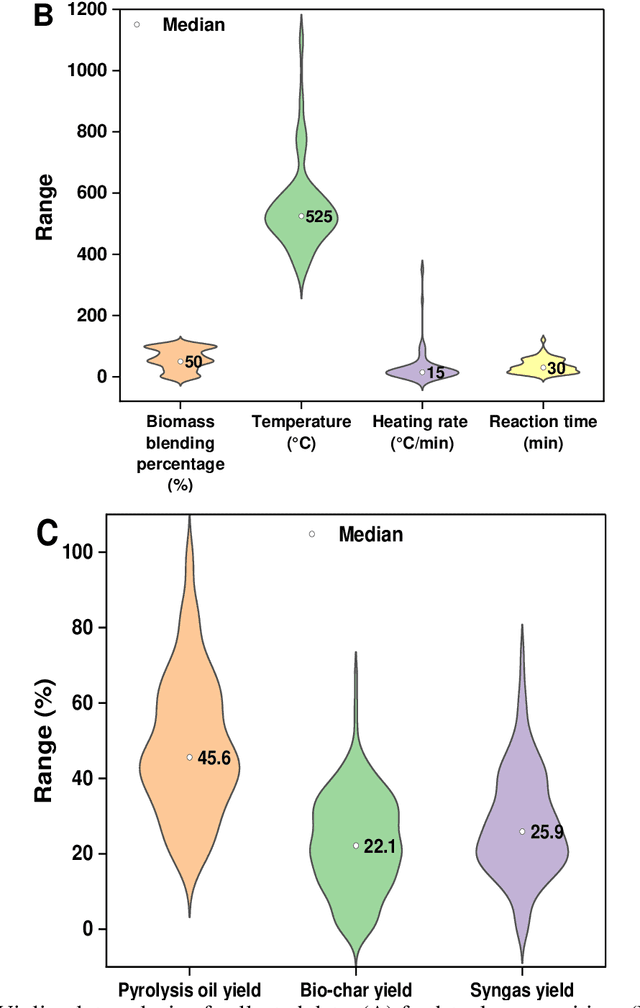

Abstract: Co-pyrolysis of biomass feedstocks with polymeric wastes is a promising strategy for improving the quantity and quality parameters of the resulting liquid fuel. Numerous experimental measurements are typically conducted to find the optimal operating conditions. However, performing co-pyrolysis experiments is highly challenging due to the need for costly and lengthy procedures. Machine learning (ML) provides capabilities to cope with such issues by leveraging existing data. This work aims to introduce an evolutionary ML approach to quantify the (by)products of the biomass-polymer co-pyrolysis process. A comprehensive dataset covering various biomass-polymer mixtures under a broad range of process conditions is compiled from the qualified literature. The database was subjected to statistical analysis and mechanistic discussion. The input features are constructed using an innovative approach to reflect the physics of the process. The constructed features are subjected to principal component analysis to reduce their dimensionality. The obtained scores are introduced into six ML models. The Gaussian process regression model tuned by the particle swarm optimization algorithm shows better prediction performance (R² > 0.9, MAE < 0.03, and RMSE < 0.06) than the other developed models. The multi-objective particle swarm optimization algorithm successfully finds optimal independent parameters.

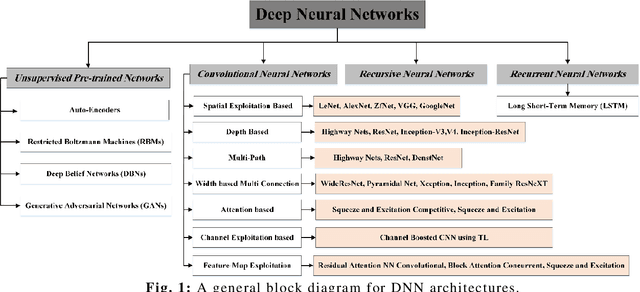

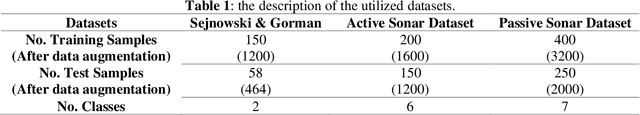

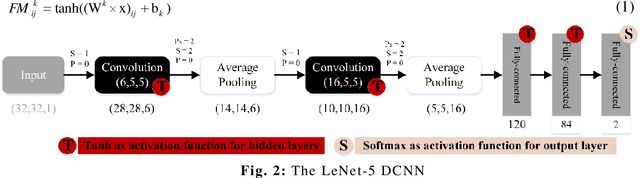

Evolving Deep Neural Network by Customized Moth Flame Optimization Algorithm for Underwater Targets Recognition

Feb 16, 2023

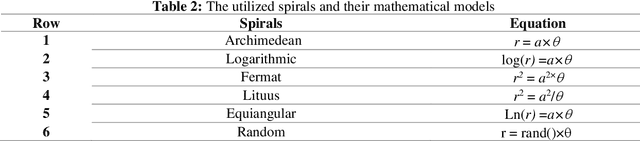

Abstract: This chapter proposes using the Moth Flame Optimization (MFO) algorithm for fine-tuning a Deep Neural Network to recognize different underwater sonar datasets. As with other models evolved by metaheuristic algorithms, premature convergence, trapping in local minima, and failure to converge in a reasonable time are three defects MFO confronts when solving problems with high-dimensional search spaces. Spiral flying is the key component of the MFO as it determines how the moths adjust their positions in relation to flames; thus, the shape of the spiral motions can regulate the transition between the exploration and exploitation phases. Therefore, this chapter investigates the effect of seven spiral motions with different curvatures and slopes on the performance of the MFO, especially for underwater target classification tasks. To assess the performance of the customized model, in addition to the benchmark Sejnowski & Gorman dataset, two experimental sonar datasets, i.e., the passive and active sonar datasets, are used. The results of MFO and its modifications are compared to four novel nature-inspired algorithms, namely Heap-Based Optimizer (HBO), Chimp Optimization Algorithm (ChOA), Ant Lion Optimization (ALO), and Stochastic Fractals Search (SFS), as well as the classic Particle Swarm Optimization (PSO). The results confirm that the customized MFO performs better than the other state-of-the-art models, with classification rates increased by 1.5979, 0.9985, and 2.0879 for the Sejnowski & Gorman, passive, and active datasets, respectively. The results also confirm that time complexity is not significantly increased by using different spiral motions.
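To make the spiral-flying idea concrete, here is a minimal sketch of the logarithmic spiral position update used in the standard MFO algorithm, where each moth coordinate moves to `D * exp(b * t) * cos(2 * pi * t) + F`, with `D` the distance to the flame `F`. The seven customized spirals studied in the chapter are not specified in the abstract, so this shows only the baseline form. The function name and the default `b=1.0` are assumptions for illustration.

```python
import math


def spiral_update(moth, flame, t, b=1.0):
    """Move a moth around a flame along a logarithmic spiral (standard MFO form).

    `moth` and `flame` are equal-length lists of coordinates. `t` controls how
    close the new position lies to the flame (in standard MFO it is drawn at
    random, with t = -1 the closest point and t = 1 the farthest), and `b`
    sets the shape of the spiral.
    """
    new_position = []
    for m, f in zip(moth, flame):
        distance = abs(f - m)  # per-coordinate distance to the flame
        step = distance * math.exp(b * t) * math.cos(2.0 * math.pi * t)
        new_position.append(step + f)
    return new_position
```

Replacing the `exp(b * t) * cos(2 * pi * t)` factor with another curve is one way a different spiral shape could be swapped in, which is the kind of modification the chapter compares.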

Brain Tumor Detection and Classification Using a New Evolutionary Convolutional Neural Network

Apr 26, 2022

Abstract: A definitive diagnosis of a brain tumour is essential for enhancing treatment success and patient survival. However, it is difficult to manually evaluate multiple magnetic resonance imaging (MRI) images generated in a clinic. Therefore, more precise computer-based tumour detection methods are required. In recent years, many efforts have investigated classical machine learning methods to automate this process. Deep learning techniques have recently sparked interest as a means of diagnosing brain tumours more accurately and robustly. The goal of this study, therefore, is to employ brain MRI images to distinguish between healthy and unhealthy patients (including tumour tissues). To this end, an enhanced convolutional neural network is developed in this paper for accurate brain image classification. The enhanced convolutional neural network structure is composed of components for feature extraction and optimal classification. The Nonlinear Lévy Chaotic Moth Flame Optimizer (NLCMFO) optimizes hyperparameters for training the convolutional neural network layers. Using the BRATS 2015 data set and brain image datasets from Harvard Medical School, the proposed model is assessed and compared with various optimization techniques. The optimized CNN model outperforms other models from the literature by providing 97.4% accuracy, 96.0% sensitivity, 98.6% specificity, 98.4% precision, and 96.6% F1-score (the weighted harmonic mean of the CNN's precision and recall).
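For readers unfamiliar with the metric, the following sketch shows how an F-score is computed as a weighted harmonic mean of precision and recall, with `beta=1.0` giving the usual F1-score. The function name and the input values in the usage note are illustrative assumptions; they are not the paper's data or evaluation code.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (F1 when beta == 1)."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # avoid division by zero when both inputs are zero
    beta_sq = beta * beta
    return (1.0 + beta_sq) * precision * recall / (beta_sq * precision + recall)
```

For example, with a hypothetical precision of 0.9 and recall of 0.6, `f_beta(0.9, 0.6)` is about 0.72, lower than the arithmetic mean of 0.75 because the harmonic mean penalizes the weaker of the two values.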
