Sebastian Kaltenbach

Reinforcement Learning Closures for Underresolved Partial Differential Equations using Synthetic Data

May 16, 2025

Abstract:Partial Differential Equations (PDEs) describe phenomena ranging from turbulence and epidemics to quantum mechanics and financial markets. Despite recent advances in computational science, solving such PDEs for real-world applications remains prohibitively expensive because of the necessity of resolving a broad range of spatiotemporal scales. In turn, practitioners often rely on coarse-grained approximations of the original PDEs, trading off accuracy for reduced computational resources. To mitigate the loss of detail inherent in such approximations, closure models are employed to represent unresolved spatiotemporal interactions. We present a framework for developing closure models for PDEs using synthetic data acquired through the method of manufactured solutions. These data are used in conjunction with reinforcement learning to provide closures for coarse-grained PDEs. We illustrate the efficacy of our method using the one-dimensional and two-dimensional Burgers' equations and the two-dimensional advection equation. Moreover, we demonstrate that closure models trained for inhomogeneous PDEs can be effectively generalized to homogeneous PDEs. The results demonstrate the potential for developing accurate and computationally efficient closure models for systems with scarce data.
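As a rough illustration of the method of manufactured solutions mentioned in this abstract, the sketch below picks an arbitrary smooth function and derives the forcing term that makes it an exact solution of the one-dimensional viscous Burgers' equation. The choice u(x, t) = sin(x - t), the viscosity `NU`, and all function names are assumptions made for this example; they are not taken from the paper.

```python
import math

NU = 0.01  # assumed viscosity, chosen only for illustration


def u_exact(x, t):
    """Manufactured solution (an arbitrary smooth choice)."""
    return math.sin(x - t)


def forcing(x, t):
    """Source term f = u_t + u*u_x - NU*u_xx evaluated analytically,
    so that u_exact solves the inhomogeneous Burgers' equation."""
    s, c = math.sin(x - t), math.cos(x - t)
    u_t = -c
    u_x = c
    u_xx = -s
    return u_t + s * u_x - NU * u_xx


def residual(x, t, h=1e-4):
    """Check the construction with central finite differences:
    the PDE residual of u_exact minus the forcing should be near zero."""
    u = u_exact(x, t)
    u_t = (u_exact(x, t + h) - u_exact(x, t - h)) / (2 * h)
    u_x = (u_exact(x + h, t) - u_exact(x - h, t)) / (2 * h)
    u_xx = (u_exact(x + h, t) - 2 * u + u_exact(x - h, t)) / h**2
    return u_t + u * u_x - NU * u_xx - forcing(x, t)


if __name__ == "__main__":
    print(abs(residual(0.3, 0.7)))  # small, limited by finite-difference error
```

Sampling `u_exact` and `forcing` on a grid would give synthetic reference data without running a fully resolved solver, which is the general appeal of the approach.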

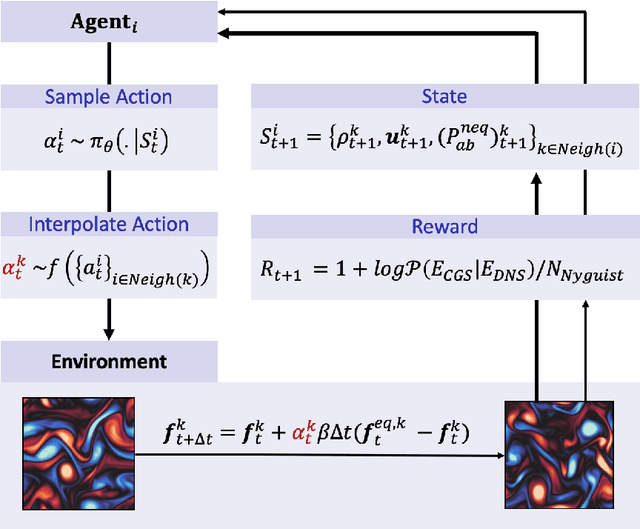

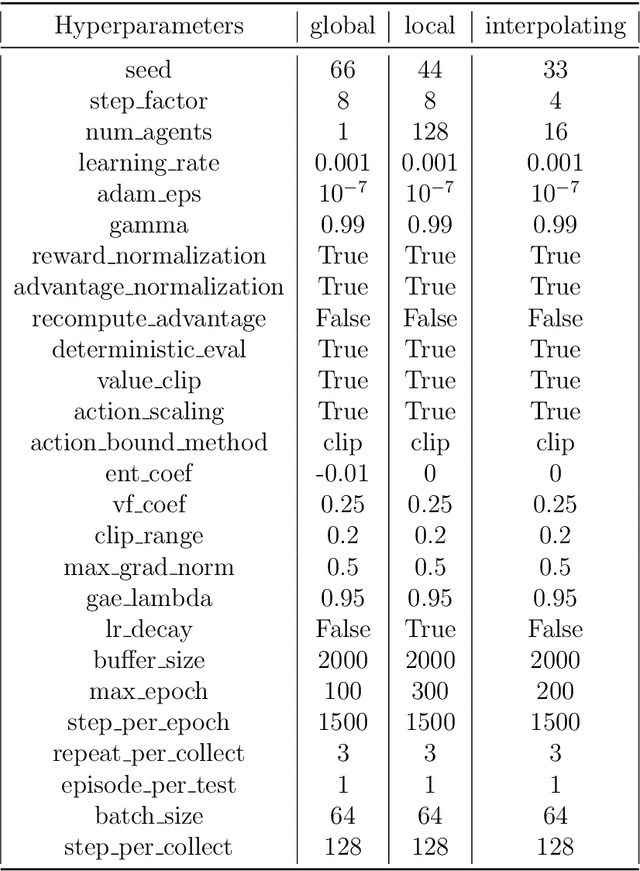

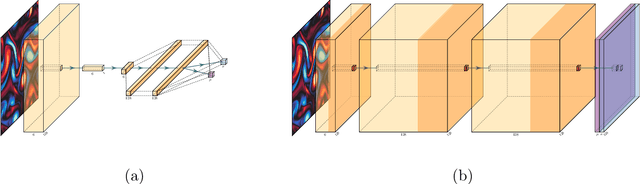

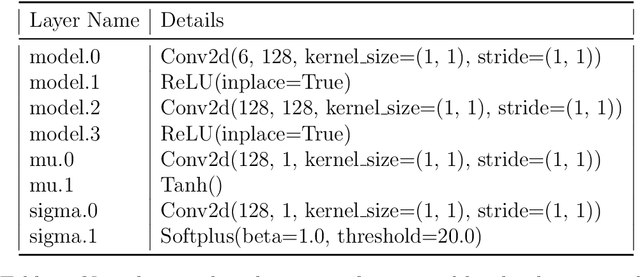

Optimal Lattice Boltzmann Closures through Multi-Agent Reinforcement Learning

Apr 19, 2025

Abstract:The Lattice Boltzmann method (LBM) offers a powerful and versatile approach to simulating diverse hydrodynamic phenomena, spanning microfluidics to aerodynamics. The vast range of spatiotemporal scales inherent in these systems currently renders full resolution impractical, necessitating the development of effective closure models for under-resolved simulations. Under-resolved LBMs are unstable, and while there are a number of important efforts to stabilize them, they often face limitations in generalizing across scales and physical systems. We present a novel, data-driven, multi-agent reinforcement learning (MARL) approach that drastically improves stability and accuracy of coarse-grained LBM simulations. The proposed method uses a convolutional neural network to dynamically control the local relaxation parameter for the LB across the simulation grid. The LB-MARL framework is showcased in turbulent Kolmogorov flows. We find that the MARL closures stabilize the simulations and recover the energy spectra of significantly more expensive fully resolved simulations while maintaining computational efficiency. The learned closure model can be transferred to flow scenarios unseen during training and has improved robustness and spectral accuracy compared to traditional LBM models. We believe that MARL closures open new frontiers for efficient and accurate simulations of a multitude of complex problems not accessible to present-day LB methods alone.
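To make the idea of a locally controlled relaxation parameter concrete, here is a minimal sketch of a BGK collision step in which each grid node has its own relaxation rate. The one-dimensional D1Q3 lattice, the BGK operator, and the names `collide` and `omega_nodes` are assumptions for illustration only; the abstract does not specify the lattice or collision model, and in the paper the per-node values would come from a learned policy rather than a fixed list.

```python
# D1Q3 lattice: rest particle plus one velocity in each direction
W = (2 / 3, 1 / 6, 1 / 6)  # lattice weights
C = (0, 1, -1)             # lattice velocities


def equilibrium(rho, u):
    """Second-order equilibrium with lattice speed of sound cs^2 = 1/3."""
    return [w * rho * (1 + 3 * c * u + 4.5 * (c * u) ** 2 - 1.5 * u * u)
            for w, c in zip(W, C)]


def moments(f):
    """Density and momentum of one node's populations."""
    rho = sum(f)
    mom = sum(c * fi for c, fi in zip(C, f))
    return rho, mom


def collide(f_nodes, omega_nodes):
    """BGK collision with a separate relaxation rate omega per node."""
    out = []
    for f, omega in zip(f_nodes, omega_nodes):
        rho, mom = moments(f)
        feq = equilibrium(rho, mom / rho)
        out.append([fi - omega * (fi - fe) for fi, fe in zip(f, feq)])
    return out


if __name__ == "__main__":
    f_nodes = [[0.6, 0.3, 0.1], [0.5, 0.2, 0.3]]
    omega_nodes = [1.2, 1.9]  # hypothetical per-node values
    print(collide(f_nodes, omega_nodes))
```

Because the equilibrium shares the density and momentum of the pre-collision populations, both quantities are conserved for any choice of local relaxation rate, so a controller can vary it node by node without violating conservation.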

Energy Matching: Unifying Flow Matching and Energy-Based Models for Generative Modeling

Apr 14, 2025

Abstract:Generative models often map noise to data by matching flows or scores, but these approaches become cumbersome for incorporating partial observations or additional priors. Inspired by recent advances in Wasserstein gradient flows, we propose Energy Matching, a framework that unifies flow-based approaches with the flexibility of energy-based models (EBMs). Far from the data manifold, samples move along curl-free, optimal transport paths from noise to data. As they approach the data manifold, an entropic energy term guides the system into a Boltzmann equilibrium distribution, explicitly capturing the underlying likelihood structure of the data. We parameterize this dynamic with a single time-independent scalar field, which serves as both a powerful generator and a flexible prior for effective regularization of inverse problems. Our method substantially outperforms existing EBMs on CIFAR-10 generation (FID 3.97 compared to 8.61), while retaining the simulation-free training of transport-based approaches away from the data manifold. Additionally, we exploit the flexibility of our method and introduce an interaction energy for diverse mode exploration. Our approach focuses on learning a static scalar potential energy -- without time conditioning, auxiliary generators, or additional networks -- marking a significant departure from recent EBM methods. We believe this simplified framework significantly advances EBM capabilities and paves the way for their broader adoption in generative modeling across diverse domains.

Learning Effective Dynamics across Spatio-Temporal Scales of Complex Flows

Feb 11, 2025

Abstract:Modeling and simulation of complex fluid flows with dynamics that span multiple spatio-temporal scales is a fundamental challenge in many scientific and engineering domains. Full-scale resolving simulations for systems such as highly turbulent flows are not feasible in the foreseeable future, and reduced-order models must capture dynamics that involve interactions across scales. In the present work, we propose a novel framework, Graph-based Learning of Effective Dynamics (Graph-LED), that leverages graph neural networks (GNNs), as well as an attention-based autoregressive model, to extract the effective dynamics from a small amount of simulation data. GNNs represent flow fields on unstructured meshes as graphs and effectively handle complex geometries and non-uniform grids. The proposed method combines a GNN-based dimensionality reduction for variable-size unstructured meshes with an autoregressive temporal attention model that can learn temporal dependencies automatically. We evaluated the proposed approach on a suite of fluid dynamics problems, including flow past a cylinder and flow over a backward-facing step over a range of Reynolds numbers. The results demonstrate robust and effective forecasting of spatio-temporal physics; in the case of the flow past a cylinder, both small-scale effects that occur close to the cylinder as well as its wake are accurately captured.

Generative Learning of the Solution of Parametric Partial Differential Equations Using Guided Diffusion Models and Virtual Observations

Jul 31, 2024

Abstract:We introduce a generative learning framework to model high-dimensional parametric systems using gradient guidance and virtual observations. We consider systems described by Partial Differential Equations (PDEs) discretized with structured or unstructured grids. The framework integrates multi-level information to generate high-fidelity time sequences of the system dynamics. We demonstrate the effectiveness and versatility of our framework with two case studies: incompressible, two-dimensional, low-Reynolds-number cylinder flow on an unstructured mesh and incompressible turbulent channel flow on a structured mesh, both parameterized by the Reynolds number. Our results illustrate the framework's robustness and ability to generate accurate flow sequences across various parameter settings, significantly reducing computational costs and allowing for efficient forecasting and reconstruction of flow dynamics.

A Learnable Prior Improves Inverse Tumor Growth Modeling

Mar 07, 2024

Abstract:Biophysical modeling, particularly involving partial differential equations (PDEs), offers significant potential for tailoring disease treatment protocols to individual patients. However, the inverse problem-solving aspect of these models presents a substantial challenge, either due to the high computational requirements of model-based approaches or the limited robustness of deep learning (DL) methods. We propose a novel framework that leverages the unique strengths of both approaches in a synergistic manner. Our method incorporates a DL ensemble for initial parameter estimation, facilitating efficient downstream evolutionary sampling initialized with this DL-based prior. We showcase the effectiveness of integrating a rapid deep-learning algorithm with a high-precision evolution strategy in estimating brain tumor cell concentrations from magnetic resonance images. The DL-Prior plays a pivotal role, significantly constraining the effective sampling-parameter space. This reduction results in a fivefold convergence acceleration and a Dice-score of 95%

Generative Learning for Forecasting the Dynamics of Complex Systems

Feb 27, 2024

Abstract:We introduce generative models for accelerating simulations of complex systems through learning and evolving their effective dynamics. In the proposed Generative Learning of Effective Dynamics (G-LED), instances of high dimensional data are down sampled to a lower dimensional manifold that is evolved through an auto-regressive attention mechanism. In turn, Bayesian diffusion models, that map this low-dimensional manifold onto its corresponding high-dimensional space, capture the statistics of the system dynamics. We demonstrate the capabilities and drawbacks of G-LED in simulations of several benchmark systems, including the Kuramoto-Sivashinsky (KS) equation, two-dimensional high Reynolds number flow over a backward-facing step, and simulations of three-dimensional turbulent channel flow. The results demonstrate that generative learning offers new frontiers for the accurate forecasting of the statistical properties of complex systems at a reduced computational cost.

Closure Discovery for Coarse-Grained Partial Differential Equations using Multi-Agent Reinforcement Learning

Feb 01, 2024

Abstract:Reliable predictions of critical phenomena, such as weather, wildfires and epidemics are often founded on models described by Partial Differential Equations (PDEs). However, simulations that capture the full range of spatio-temporal scales in such PDEs are often prohibitively expensive. Consequently, coarse-grained simulations that employ heuristics and empirical closure terms are frequently utilized as an alternative. We propose a novel and systematic approach for identifying closures in under-resolved PDEs using Multi-Agent Reinforcement Learning (MARL). The MARL formulation incorporates inductive bias and exploits locality by deploying a central policy represented efficiently by Convolutional Neural Networks (CNN). We demonstrate the capabilities and limitations of MARL through numerical solutions of the advection equation and the Burgers' equation. Our results show accurate predictions for in- and out-of-distribution test cases as well as a significant speedup compared to resolving all scales.

Interpretable learning of effective dynamics for multiscale systems

Sep 11, 2023

Abstract:The modeling and simulation of high-dimensional multiscale systems is a critical challenge across all areas of science and engineering. It is broadly believed that even with today's computer advances resolving all spatiotemporal scales described by the governing equations remains a remote target. This realization has prompted intense efforts to develop model order reduction techniques. In recent years, techniques based on deep recurrent neural networks have produced promising results for the modeling and simulation of complex spatiotemporal systems and offer large flexibility in model development as they can incorporate experimental and computational data. However, neural networks lack interpretability, which limits their utility and generalizability across complex systems. Here we propose a novel framework of Interpretable Learning Effective Dynamics (iLED) that offers comparable accuracy to state-of-the-art recurrent neural network-based approaches while providing the added benefit of interpretability. The iLED framework is motivated by Mori-Zwanzig and Koopman operator theory, which justifies the choice of the specific architecture. We demonstrate the effectiveness of the proposed framework in simulations of three benchmark multiscale systems. Our results show that the iLED framework can generate accurate predictions and obtain interpretable dynamics, making it a promising approach for solving high-dimensional multiscale systems.

Interpretable reduced-order modeling with time-scale separation

Mar 03, 2023

Abstract:Partial Differential Equations (PDEs) with high dimensionality are commonly encountered in computational physics and engineering. However, finding solutions for these PDEs can be computationally expensive, making model-order reduction crucial. We propose such a data-driven scheme that automates the identification of the time-scales involved and can produce stable predictions forward in time as well as under different initial conditions not included in the training data. To this end, we combine a non-linear autoencoder architecture with a time-continuous model for the latent dynamics in the complex space. It readily allows for the inclusion of sparse and irregularly sampled training data. The learned, latent dynamics are interpretable and reveal the different temporal scales involved. We show that this data-driven scheme can automatically learn the independent processes that decompose a system of linear ODEs along the eigenvectors of the system's matrix. Apart from this, we demonstrate the applicability of the proposed framework in a hidden Markov Model and the (discretized) Kuramoto-Sivashinsky (KS) equation. Additionally, we propose a probabilistic version, which captures predictive uncertainties and further improves upon the results of the deterministic framework.
