Sebastian Gonzalez

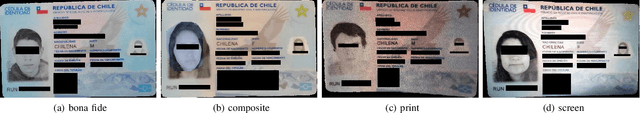

Second Competition on Presentation Attack Detection on ID Card

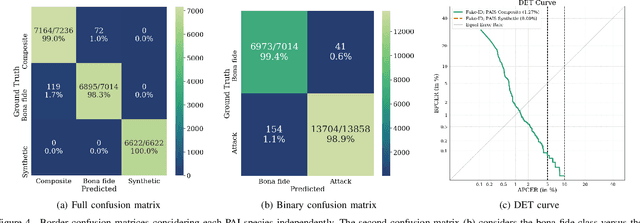

Jul 27, 2025

Abstract: This work summarises and reports the results of the second Presentation Attack Detection (PAD) competition on ID cards. This edition includes new elements compared to the previous one: (1) an automatic evaluation platform was enabled for automatic benchmarking; (2) two tracks were proposed in order to evaluate algorithms and datasets, respectively; and (3) a new ID card dataset was shared with Track 1 teams to serve as the baseline dataset for training and optimisation. The Hochschule Darmstadt, Fraunhofer-IGD, and the company Facephi jointly organised this challenge. 20 teams registered, and 74 submitted models were evaluated. For Track 1, the "Dragons" team reached first place with an Average Ranking (AV-Rank) of 40.48% and an Equal Error Rate (EER) of 11.44%. For the more challenging approach in Track 2, the "Incode" team reached the best results with an AV-Rank of 14.76% and an EER of 6.36%, improving on the first edition's results of 74.30% AV-Rank and 21.87% EER. These results suggest that PAD on ID cards is improving, but that it remains a challenging problem tied to the number of available images, especially bona fide images.

Zephyr quantum-assisted hierarchical Calo4pQVAE for particle-calorimeter interactions

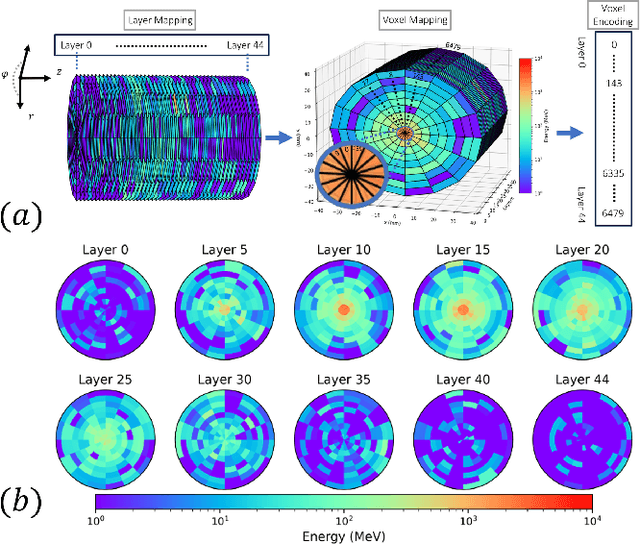

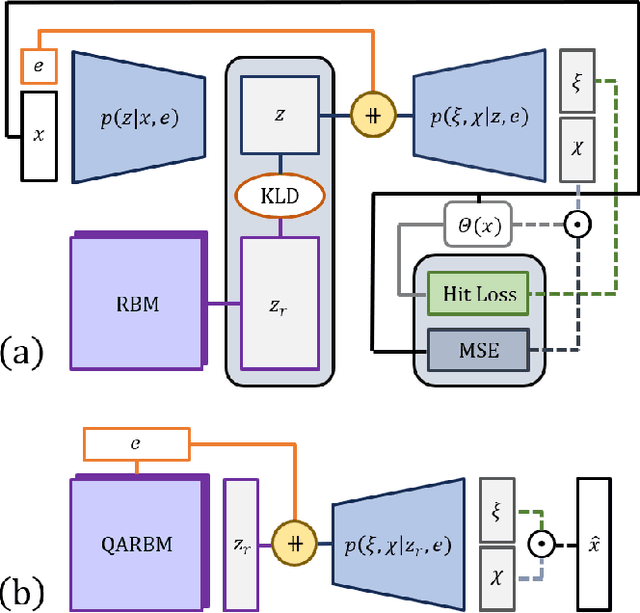

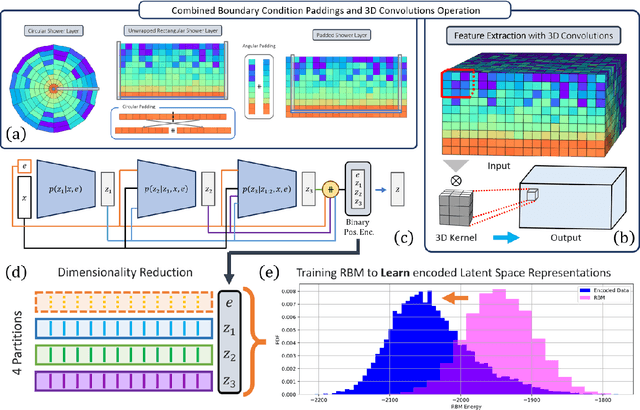

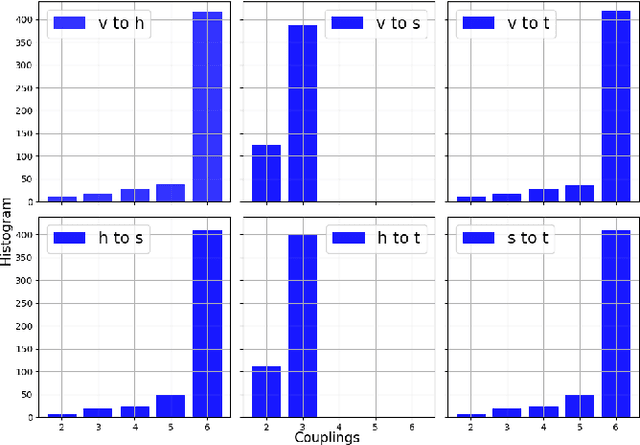

Dec 06, 2024

Abstract: With the High Luminosity Large Hadron Collider (HL-LHC) era set to begin particle collisions by the end of this decade, it is evident that the computational demands of traditional collision simulation methods are becoming increasingly unsustainable. Existing approaches, which rely heavily on first-principles Monte Carlo simulations for modeling event showers in calorimeters, are projected to require millions of CPU-years annually -- far exceeding current computational capacities. This bottleneck presents an exciting opportunity for advancements in computational physics by integrating deep generative models with quantum simulations. We propose a quantum-assisted hierarchical deep generative surrogate founded on a variational autoencoder (VAE) in combination with an energy-conditioned restricted Boltzmann machine (RBM) embedded in the model's latent space as a prior. By mapping the topology of D-Wave's Zephyr quantum annealer (QA) into the nodes and couplings of a 4-partite RBM, we leverage quantum simulation to accelerate our shower generation times significantly. To evaluate our framework, we use Dataset 2 of the CaloChallenge 2022. Through the integration of classical computation and quantum simulation, this hybrid framework paves the way for utilizing large-scale quantum simulations as priors in deep generative models.

Conditioned quantum-assisted deep generative surrogate for particle-calorimeter interactions

Oct 30, 2024

Abstract: Particle collisions at accelerators such as the Large Hadron Collider, recorded and analyzed by experiments such as ATLAS and CMS, enable exquisite measurements of the Standard Model and searches for new phenomena. Simulations of collision events at these detectors have played a pivotal role in shaping the design of future experiments and analyzing ongoing ones. However, the quest for accuracy in Large Hadron Collider (LHC) collisions comes at an imposing computational cost, with projections estimating the need for millions of CPU-years annually during the High Luminosity LHC (HL-LHC) run \cite{collaboration2022atlas}. Simulating a single LHC event with Geant4 currently devours around 1000 CPU seconds, with simulations of the calorimeter subdetectors in particular imposing substantial computational demands \cite{rousseau2023experimental}. To address this challenge, we propose a conditioned quantum-assisted deep generative model. Our model integrates a conditioned variational autoencoder (VAE) on the exterior with a conditioned Restricted Boltzmann Machine (RBM) in the latent space, providing enhanced expressiveness compared to conventional VAEs. The RBM nodes and connections are meticulously engineered to enable the use of qubits and couplers on D-Wave's Pegasus-structured *Advantage* quantum annealer (QA) for sampling. We introduce a novel method for conditioning the quantum-assisted RBM using *flux biases*. We further propose a novel adaptive mapping to estimate the effective inverse temperature in quantum annealers. The effectiveness of our framework is illustrated using Dataset 2 of the CaloChallenge \cite{calochallenge}.

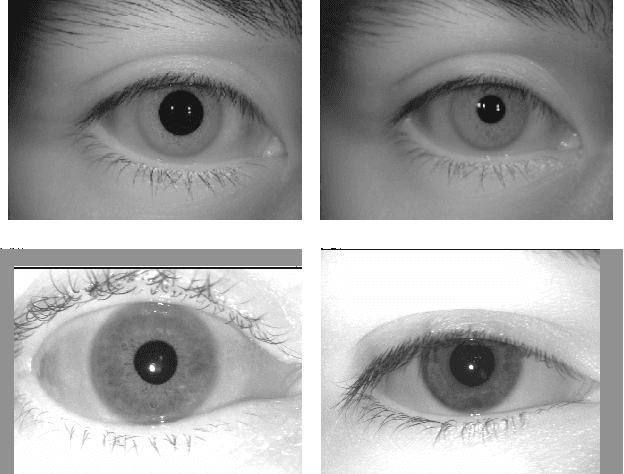

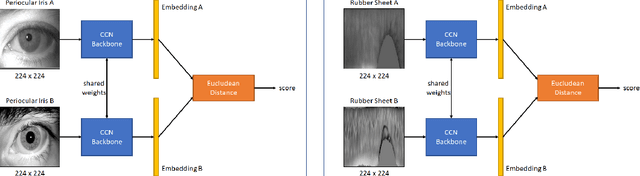

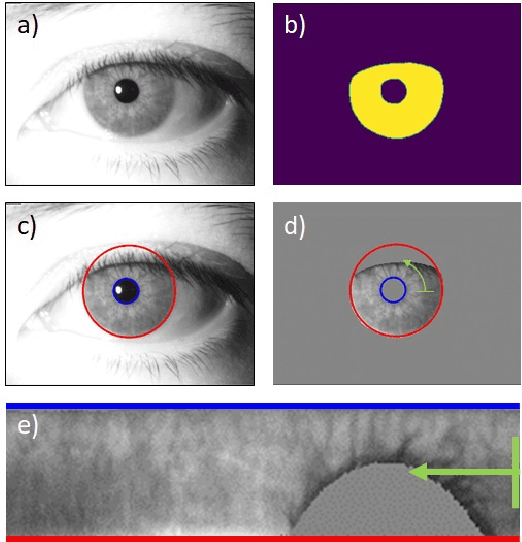

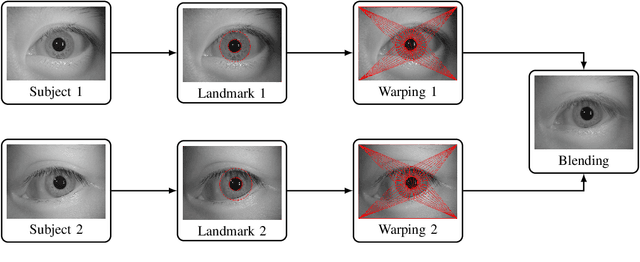

On the Feasibility of Creating Iris Periocular Morphed Images

Aug 24, 2024

Abstract: In the last few years, face morphing has been shown to be a complex challenge for Face Recognition Systems (FRS). Thus, other biometric modalities, such as fingerprint and iris, must also be explored and evaluated to enhance biometric systems. This work proposes an end-to-end framework to produce iris morphs at the image level, creating morphs from periocular iris images. This framework considers different stages such as subject pair selection, segmentation, morph creation, and a new iris recognition system. In order to create realistic morphed images, two approaches for subject selection are explored: random selection and similar radius size selection. A vulnerability analysis and a Single Morphing Attack Detection algorithm were also explored. The results show that this approach obtained very realistic images that can confuse conventional iris recognition systems.

Improving Presentation Attack Detection for ID Cards on Remote Verification Systems

Jan 23, 2023

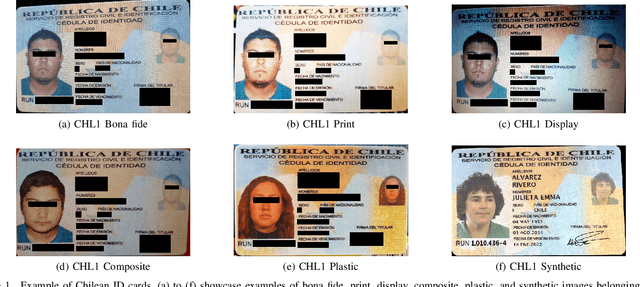

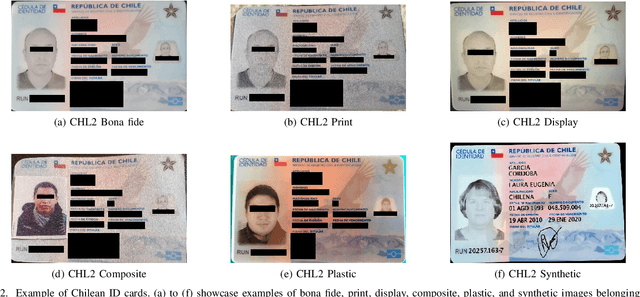

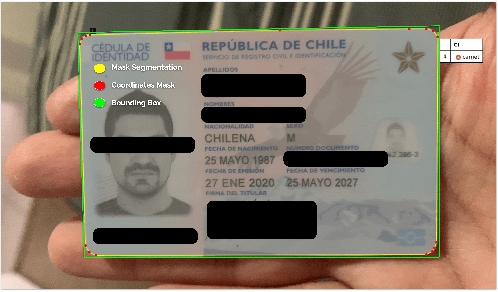

Abstract: In this paper, an updated two-stage, end-to-end Presentation Attack Detection method for remote biometric verification systems of ID cards, based on MobileNetV2, is presented. Several presentation attack species such as printed, display, composite (based on cropped and spliced areas), plastic (PVC), and synthetic ID card images using different capture sources are used. This proposal was developed using a database consisting of 190,000 real-case Chilean ID card images with the support of a third-party company. Also, a new framework called PyPAD, used to estimate multi-class metrics compliant with the ISO/IEC 30107-3 standard, was developed and will be made available for research purposes. Our method is trained on two convolutional neural networks separately, reaching BPCER100 scores on ID card attacks of 1.69% and 2.36%, respectively. The two-stage method using both models together can reach a BPCER100 score of 0.92%.
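
As an illustration of the metric reported above, the following is a minimal sketch of how a BPCER value at a fixed APCER operating point could be computed from classifier scores. It assumes the common reading of BPCER100 as the BPCER at the threshold where APCER is at most 1%, and it assumes higher scores mean "more attack-like". The function names `apcer_bpcer` and `bpcer_at_apcer` are hypothetical and are not taken from PyPAD.

```python
def apcer_bpcer(bona_fide_scores, attack_scores, threshold):
    """Return (APCER, BPCER) for one threshold.

    Assumption: a sample is classified as an attack when score >= threshold.
    APCER = fraction of attacks accepted as bona fide.
    BPCER = fraction of bona fide samples rejected as attacks.
    """
    apcer = sum(s < threshold for s in attack_scores) / len(attack_scores)
    bpcer = sum(s >= threshold for s in bona_fide_scores) / len(bona_fide_scores)
    return apcer, bpcer


def bpcer_at_apcer(bona_fide_scores, attack_scores, max_apcer):
    """Lowest BPCER over all thresholds whose APCER does not exceed max_apcer."""
    # Every distinct score is a candidate threshold; +inf accepts everything.
    thresholds = sorted(set(bona_fide_scores) | set(attack_scores))
    thresholds.append(float("inf"))
    best = 1.0  # threshold at the minimum score rejects every sample
    for t in thresholds:
        apcer, bpcer = apcer_bpcer(bona_fide_scores, attack_scores, t)
        if apcer <= max_apcer:
            best = min(best, bpcer)
    return best


if __name__ == "__main__":
    # Toy scores, invented for illustration only.
    bona_fide = [0.1, 0.2, 0.3, 0.8]
    attacks = [0.4, 0.6, 0.7, 0.9]
    # Under the stated assumption, BPCER100 corresponds to max_apcer=0.01.
    print(bpcer_at_apcer(bona_fide, attacks, max_apcer=0.01))
```

With only a handful of samples, a 1% APCER target is effectively a 0% target; realistic evaluation needs far more attack samples than this toy example uses.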

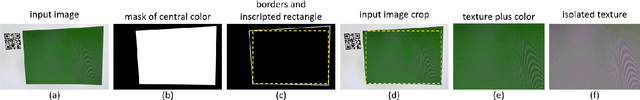

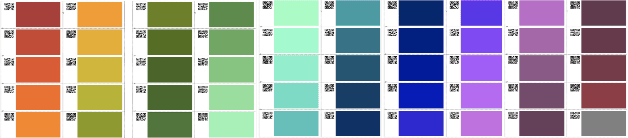

Synthetic ID Card Image Generation for Improving Presentation Attack Detection

Oct 31, 2022

Abstract: Currently, it is ever more common to access online services for activities which formerly required physical attendance. From banking operations to visa applications, a significant number of processes have been digitised, especially since the advent of the COVID-19 pandemic, requiring remote biometric authentication of the user. On the downside, some subjects attempt to interfere with the normal operation of remote systems for personal profit by using fake identity documents, such as passports and ID cards. Deep learning solutions to detect such frauds have been presented in the literature. However, due to privacy concerns and the sensitive nature of personal identity documents, developing a dataset with the necessary number of examples for training deep neural networks is challenging. This work explores three methods for synthetically generating ID card images to increase the amount of data while training fraud-detection networks. These methods include computer vision algorithms and Generative Adversarial Networks. Our results indicate that databases can be supplemented with synthetic images without any loss in performance for the print/scan Presentation Attack Instrument Species (PAIS) and a loss in performance of 1% for the screen capture PAIS.

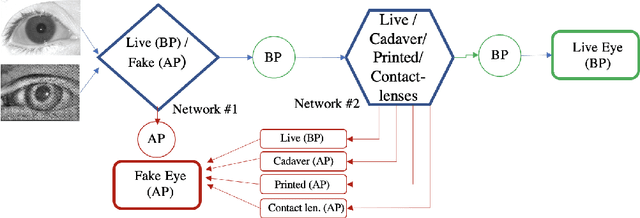

Iris Liveness Detection using a Cascade of Dedicated Deep Learning Networks

May 28, 2021

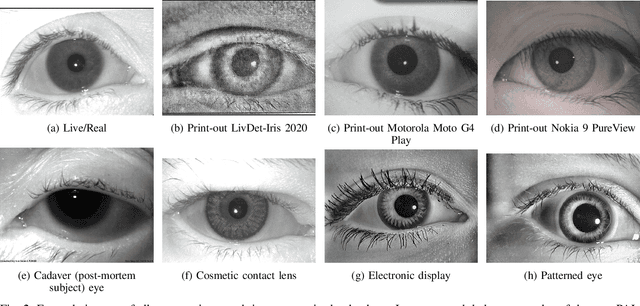

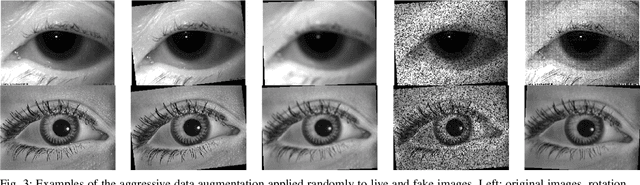

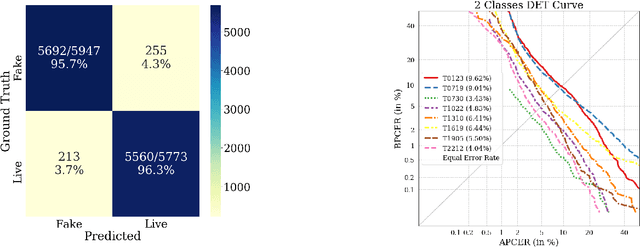

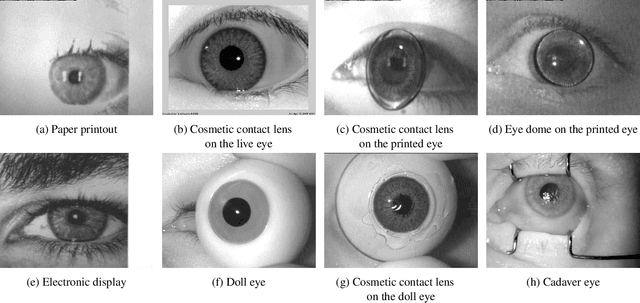

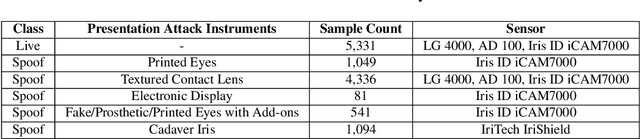

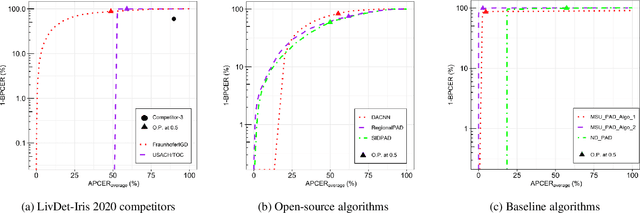

Abstract: Iris pattern recognition has significantly improved the biometric authentication field due to its high stability and uniqueness. Such physical characteristics have played an essential role in security and other related areas. However, presentation attacks, also known as spoofing techniques, can bypass biometric authentication systems using artefacts such as printed images, artificial eyes, textured contact lenses, etc. Many liveness detection methods that improve the security of these systems have been proposed. The first International Iris Liveness Detection competition, where the effectiveness of liveness detection methods is evaluated, was launched in 2013, and its latest iteration was held in 2020. This paper proposes a serial architecture based on a MobileNetV2 modification, trained from scratch to classify bona fide iris images versus presentation attack images. The bona fide class consists of live iris images, whereas the presentation attack instrument classes comprise cadaver, printed, and contact lens images, for a total of four scenarios. All the images were pre-processed and weighted per class to present a fair evaluation. This proposal won the LivDet-Iris 2020 competition using two-class scenarios. Additionally, we present new three-class and four-class scenarios that further improve the competition results. This approach is primarily focused on detecting the bona fide class over improving the detection of presentation attack instruments. For the two-, three-, and four-class scenarios, an Equal Error Rate (EER) of 4.04%, 0.33%, and 4.53% was obtained, respectively. Overall, the best serial model proposed, using three scenarios, reached an EER of 0.33% with an Attack Presentation Classification Error Rate (APCER) of 0.0100 and a Bona Fide Presentation Classification Error Rate (BPCER) of 0.000. This work outperforms the LivDet-Iris 2020 competition results.
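
For readers unfamiliar with the Equal Error Rate quoted above, here is a minimal sketch of one way to estimate it from two lists of scores. It assumes higher scores mean "more attack-like" and approximates the EER as the mean of APCER and BPCER at the threshold where the two are closest. The function name `equal_error_rate` and the toy scores are hypothetical, and this is not the evaluation code used in the paper or the competition.

```python
def equal_error_rate(bona_fide_scores, attack_scores):
    """Approximate the EER by scanning every observed score as a threshold.

    Assumption: a sample is classified as an attack when score >= threshold.
    """
    thresholds = sorted(set(bona_fide_scores) | set(attack_scores))
    thresholds.append(float("inf"))  # threshold that accepts every sample
    best_gap, eer = None, None
    for t in thresholds:
        # Attacks wrongly accepted as bona fide.
        apcer = sum(s < t for s in attack_scores) / len(attack_scores)
        # Bona fide samples wrongly rejected as attacks.
        bpcer = sum(s >= t for s in bona_fide_scores) / len(bona_fide_scores)
        gap = abs(apcer - bpcer)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (apcer + bpcer) / 2
    return eer


if __name__ == "__main__":
    # Toy scores, invented for illustration only.
    bona_fide = [0.1, 0.2, 0.3, 0.8]
    attacks = [0.4, 0.6, 0.7, 0.9]
    print(equal_error_rate(bona_fide, attacks))
```

Because the two error rates only change at observed scores, the crossing point is generally approximate on small datasets; interpolating between neighbouring thresholds is a common refinement.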

Parametric Optimization of Violin Top Plates using Machine Learning

Feb 18, 2021

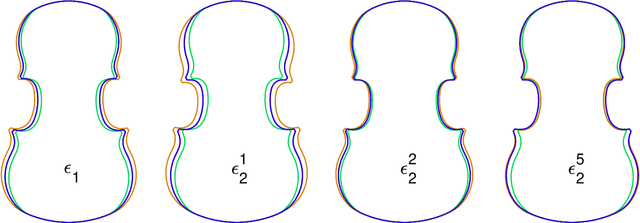

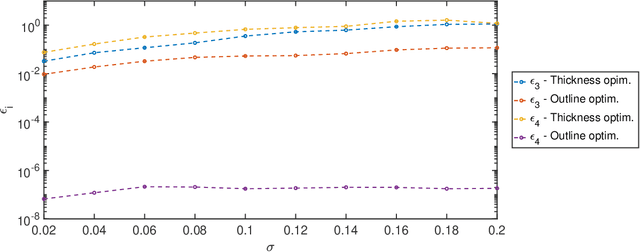

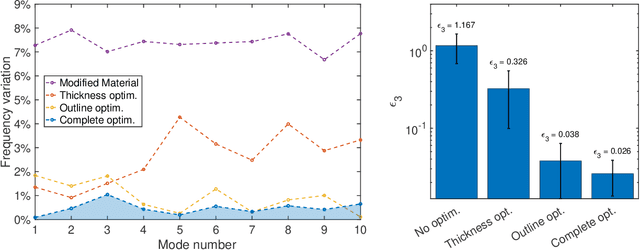

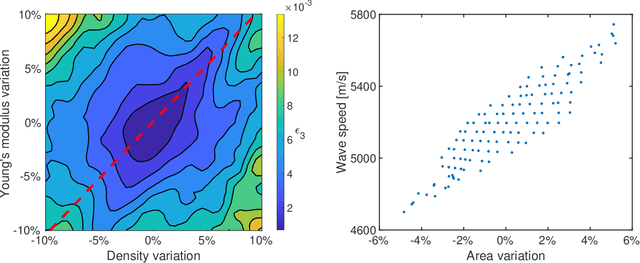

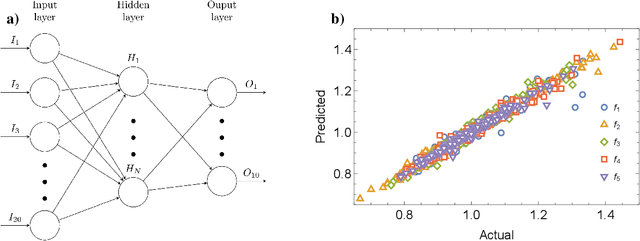

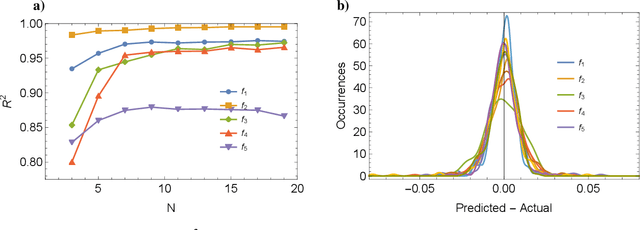

Abstract: We recently developed a neural network that receives as input the geometrical and mechanical parameters that define a violin top plate and gives as output its first ten eigenfrequencies computed in free boundary conditions. In this manuscript, we use the network to optimize several error functions, with the goal of analyzing the relationship between the eigenspectrum problem for violin top plates and their geometry. First, we focus on the violin outline. Given a vibratory feature, we find the plate geometry that best produces it. Second, we investigate whether, from the vibrational point of view, a change in the outline shape can be compensated by one in the thickness distribution and vice versa. Finally, we analyze how to modify the violin shape to keep its response constant as its material properties vary. This is an original technique in musical acoustics, where artificial intelligence is not widely used yet. It allows us to both compute the vibrational behavior of an instrument from its geometry and optimize its shape for a given response. Furthermore, this method can be of great help to violin makers, who can thus easily understand the effects of the geometry changes in the violins they build, shedding light on one of the most relevant and, at the same time, least understood aspects of the construction process of musical instruments.

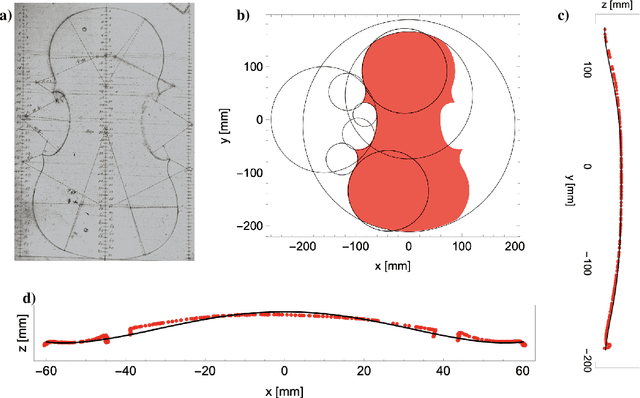

A Data-Driven Approach to Violin Making

Feb 03, 2021

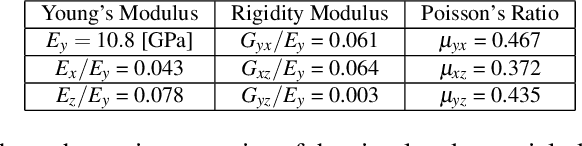

Abstract: Of all the characteristics of a violin, those that concern its shape are probably the most important ones, as the violin maker has complete control over them. Contemporary violin making, however, is still based more on tradition than understanding, and a definitive scientific study of the specific relations that exist between shape and vibrational properties is yet to come and sorely missed. In this article, using standard statistical learning tools, we show that the modal frequencies of violin tops can, in fact, be predicted from geometric parameters, and that artificial intelligence can be successfully applied to traditional violin making. We also study how modal frequencies vary with the thicknesses of the plate (a process often referred to as *plate tuning*) and discuss the complexity of this dependency. Finally, we propose a predictive tool for plate tuning, which takes into account material and geometric parameters.

Iris Liveness Detection Competition (LivDet-Iris) -- The 2020 Edition

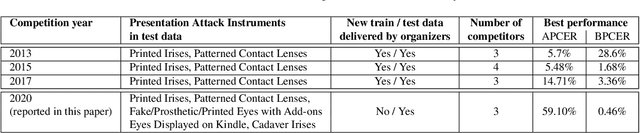

Sep 01, 2020

Abstract: Launched in 2013, LivDet-Iris is an international competition series open to academia and industry with the aim to assess and report advances in iris Presentation Attack Detection (PAD). This paper presents results from the fourth competition of the series: LivDet-Iris 2020. This year's competition introduced several novel elements: (a) it incorporated new types of attacks (samples displayed on a screen, cadaver eyes and prosthetic eyes), (b) it initiated LivDet-Iris as an on-going effort, with a testing protocol available now to everyone via the Biometrics Evaluation and Testing (BEAT) open-source platform (https://www.idiap.ch/software/beat/) to facilitate reproducibility and benchmarking of new algorithms continuously, and (c) it compared the performance of the submitted entries with three baseline methods (offered by the University of Notre Dame and Michigan State University) and three open-source iris PAD methods available in the public domain. The best performing entry to the competition reported a weighted average APCER of 59.10% and a BPCER of 0.46% over all five attack types. This paper serves as the latest evaluation of iris PAD on a large spectrum of presentation attack instruments.
