Sauptik Dhar

A Survey on Proactive Customer Care: Enabling Science and Steps to Realize it

Oct 11, 2021

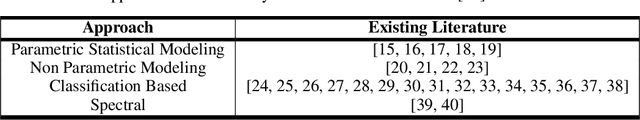

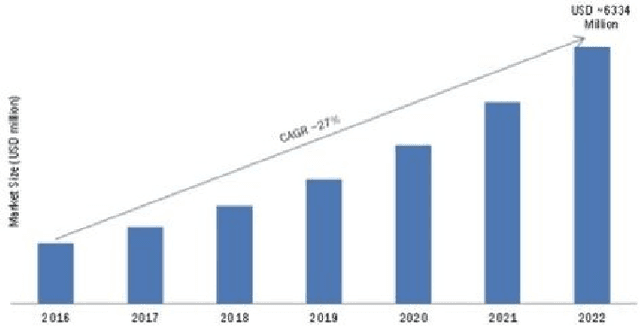

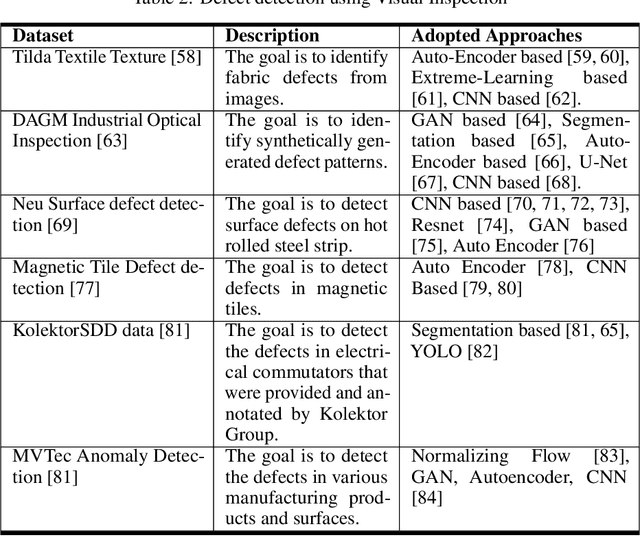

Abstract: In recent times, advances in artificial intelligence (AI) and IoT have enabled seamless and viable maintenance of appliances in home and building environments. Several studies have shown that AI has the potential to provide personalized customer support which could predict and avoid errors more reliably than ever before. In this paper, we analyze the various building blocks needed to enable a successful AI-driven predictive maintenance use-case. Unlike existing surveys, which mostly provide a deep dive into recent AI algorithms for Predictive Maintenance (PdM), our survey provides the complete view, starting from business impact to recent technology advancements in algorithms as well as systems research and model deployment. Furthermore, we provide exemplar use-cases on predictive maintenance of appliances using publicly available data sets. Our survey can serve as a template for designing a successful predictive maintenance use-case. Finally, we touch upon existing public data sources and provide a step-wise breakdown of an AI-driven proactive customer care (PCC) use-case, starting from generic anomaly detection to fault prediction and finally root-cause analysis. We highlight how such a step-wise approach can be advantageous for accurate model building and helpful for gaining insights into predictive maintenance of electromechanical appliances.

Evolving GANs: When Contradictions Turn into Compliance

Jun 18, 2021

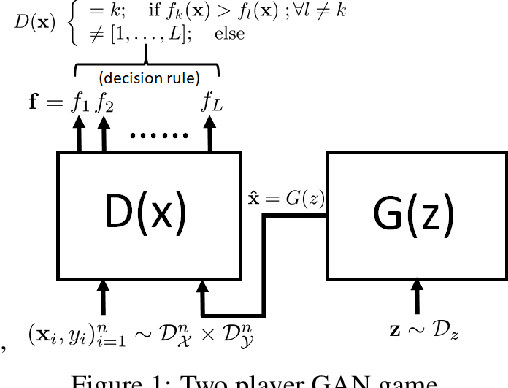

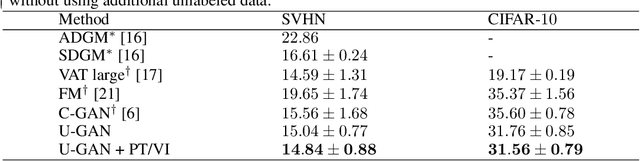

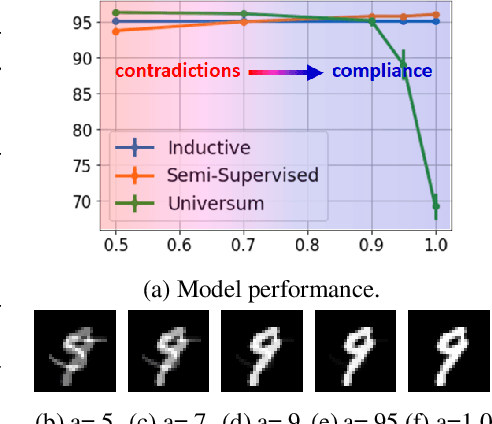

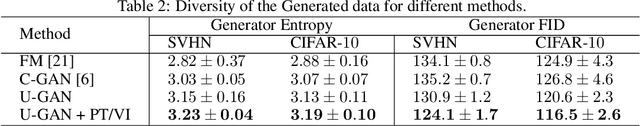

Abstract: Limited availability of labeled data makes any supervised learning problem challenging. Alternative learning settings like semi-supervised and universum learning alleviate the dependency on labeled data, but still require a large amount of unlabeled data, which may be unavailable or expensive to acquire. GAN-based synthetic data generation methods have recently shown promise by generating synthetic samples to improve the task at hand. However, these samples cannot be used for other purposes. In this paper, we propose a GAN game which provides improved discriminator accuracy under limited data settings, while generating realistic synthetic data. This provides the added advantage that the generated data can now be used for other similar tasks. We provide theoretical guarantees and empirical results in support of our approach.

DOC3-Deep One Class Classification using Contradictions

May 17, 2021

Abstract: This paper introduces the notion of learning from contradictions (a.k.a. Universum learning) for deep one class classification problems. We formalize this notion for the widely adopted one class large-margin loss, and propose the Deep One Class Classification using Contradictions (DOC3) algorithm. We show that learning from contradictions incurs lower generalization error by comparing the Empirical Rademacher Complexity (ERC) of DOC3 against its traditional inductive learning counterpart. Our empirical results demonstrate the efficacy of the DOC3 algorithm, achieving improvements of more than 30% on CIFAR-10 and more than 50% on MVTec AD in test AUC compared to its inductive learning counterpart, and in many cases improving the state-of-the-art in anomaly detection.
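For readers unfamiliar with the complexity measure named in this abstract, the standard textbook definition of the empirical Rademacher complexity is given below. The symbols ($\mathcal{F}$ for the function class, $S = \{x_1, \dots, x_n\}$ for the sample, $\sigma_i$ for independent random signs) are notation assumed here for illustration and are not taken from the paper.

$$
\hat{\mathfrak{R}}_S(\mathcal{F}) = \mathbb{E}_{\sigma}\left[\sup_{f \in \mathcal{F}} \frac{1}{n} \sum_{i=1}^{n} \sigma_i f(x_i)\right], \qquad \sigma_i \in \{-1, +1\} \text{ uniformly at random.}
$$

A smaller value means the class is less able to fit random sign patterns on the sample, which is the sense in which a lower ERC is associated with a tighter generalization bound.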

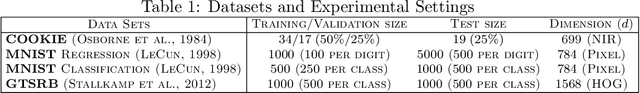

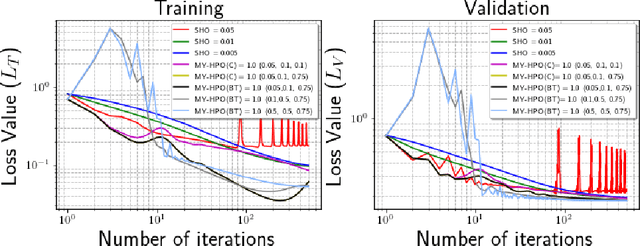

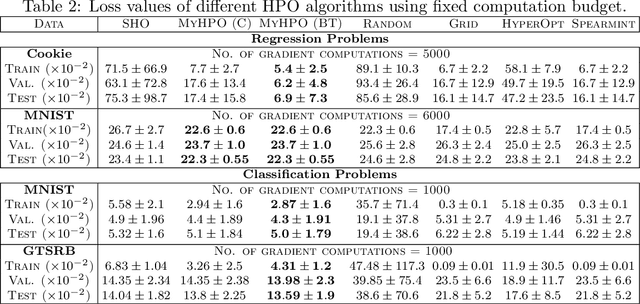

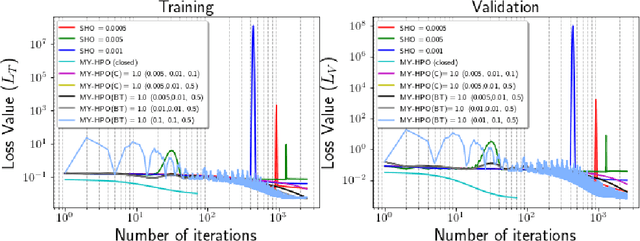

Stabilizing Bi-Level Hyperparameter Optimization using Moreau-Yosida Regularization

Jul 27, 2020

Abstract: This research proposes to use the Moreau-Yosida envelope to stabilize the convergence behavior of bi-level hyperparameter optimization solvers, and introduces a new algorithm called Moreau-Yosida regularized Hyperparameter Optimization (MY-HPO). Theoretical analysis of the correctness of the MY-HPO solution and an initial convergence analysis are also provided. Our empirical results show significant improvement in loss values for a fixed computation budget, compared to state-of-the-art bi-level HPO solvers.
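As background, the Moreau-Yosida envelope of a closed, proper, convex function $f$ has the standard definition below. The symbols $f$, $x$, $y$, and the smoothing parameter $\lambda > 0$ are generic notation assumed for illustration; how the paper applies the envelope inside the bi-level problem is not reproduced here.

$$
M_{\lambda f}(x) = \min_{y} \left\{ f(y) + \frac{1}{2\lambda} \lVert y - x \rVert^2 \right\}, \qquad
\operatorname{prox}_{\lambda f}(x) = \arg\min_{y} \left\{ f(y) + \frac{1}{2\lambda} \lVert y - x \rVert^2 \right\}.
$$

The envelope is differentiable even when $f$ is not, with gradient

$$
\nabla M_{\lambda f}(x) = \frac{1}{\lambda}\left(x - \operatorname{prox}_{\lambda f}(x)\right),
$$

and it has the same minimizers as $f$, which is the usual reason it is used as a smoothing or stabilizing device.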

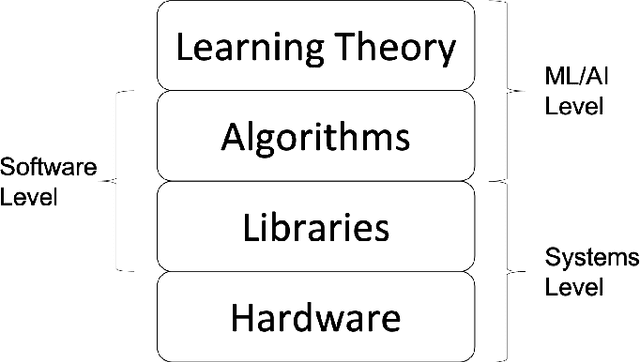

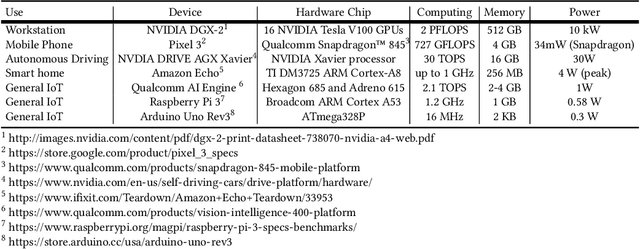

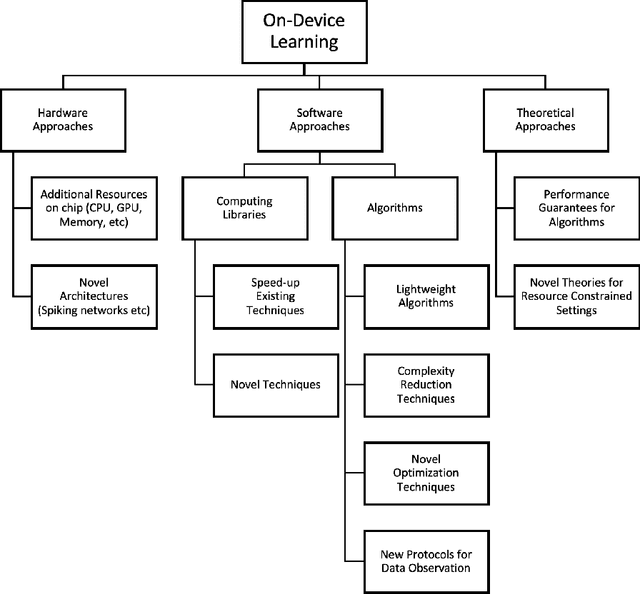

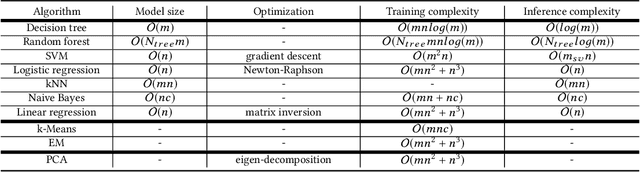

On-Device Machine Learning: An Algorithms and Learning Theory Perspective

Nov 02, 2019

Abstract: The current paradigm for using machine learning models on a device is to train a model in the cloud and perform inference using the trained model on the device. However, with the increasing number of smart devices and improved hardware, there is interest in performing model training on the device. Given this surge in interest, a comprehensive survey of the field from a device-agnostic perspective sets the stage for both understanding the state-of-the-art and for identifying open challenges and future avenues of research. Since on-device learning is an expansive field with connections to a large number of related topics in AI and machine learning (including online learning, model adaptation, one/few-shot learning, etc.), covering such a large number of topics in a single survey is impractical. Instead, this survey finds a middle ground by reformulating the problem of on-device learning as resource-constrained learning where the resources are compute and memory. This reformulation allows tools, techniques, and algorithms from a wide variety of research areas to be compared equitably. In addition to summarizing the state of the art, the survey also identifies a number of challenges and next steps for both the algorithmic and theoretical aspects of on-device learning.

Variable Metric Proximal Gradient Method with Diagonal Barzilai-Borwein Stepsize

Oct 15, 2019

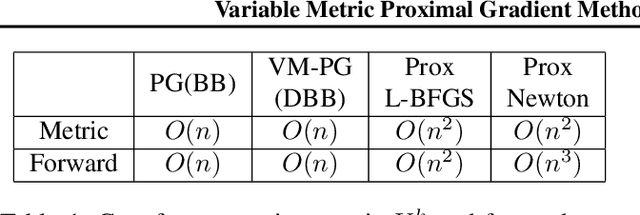

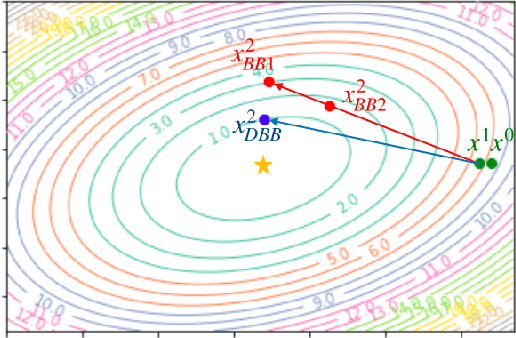

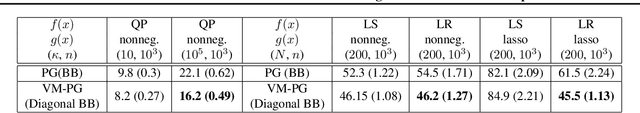

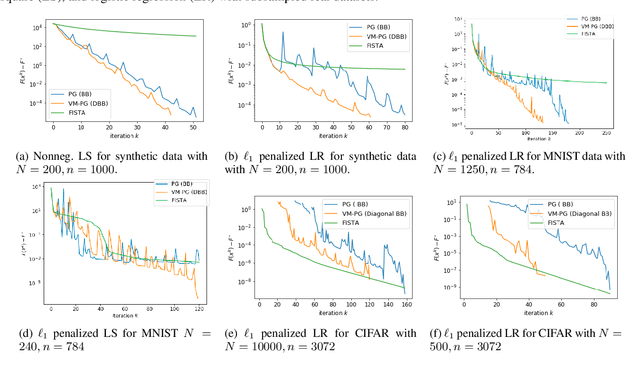

Abstract: Variable metric proximal gradient (VM-PG) is a widely used class of convex optimization methods. Lately, there has been a lot of research on the theoretical guarantees of VM-PG with different metric selections. However, most such metric selections are dependent on (an expensive) Hessian, or limited to scalar stepsizes like the Barzilai-Borwein (BB) stepsize with lots of safeguarding. Instead, in this paper we propose an adaptive metric selection strategy called the diagonal Barzilai-Borwein (BB) stepsize. The proposed diagonal selection better captures the local geometry of the problem while keeping per-step computation cost similar to the scalar BB stepsize, i.e., $O(n)$. Under this metric selection for VM-PG, the theoretical convergence is analyzed. Our empirical studies illustrate the improved convergence results under the proposed diagonal BB stepsize, specifically for ill-conditioned machine learning problems on both synthetic and real-world datasets.
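To make the baseline mentioned in this abstract concrete, here is a minimal sketch of the classical scalar BB stepsize, $\alpha_k = s^\top s / s^\top y$ with $s = x_k - x_{k-1}$ and $y = \nabla f(x_k) - \nabla f(x_{k-1})$, applied to plain gradient descent on a small ill-conditioned quadratic. It is not the paper's diagonal BB method and has no proximal step; the function name `bb_gradient_descent`, the test problem, and the fallback to the initial stepsize are all assumptions made for illustration.

```python
def bb_gradient_descent(grad, x0, alpha0, tol=1e-10, max_iter=500):
    """Gradient descent with the scalar Barzilai-Borwein (BB1) stepsize.

    Illustrative only: a smooth problem, no proximal step, and a crude
    fallback to alpha0 whenever the curvature estimate s^T y is not positive.
    """
    x = list(x0)
    g = grad(x)
    alpha = alpha0
    for _ in range(max_iter):
        # Stop once the gradient norm is below the tolerance.
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break
        x_new = [xi - alpha * gi for xi, gi in zip(x, g)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]   # change in iterate
        y = [a - b for a, b in zip(g_new, g)]   # change in gradient
        sy = sum(si * yi for si, yi in zip(s, y))
        ss = sum(si * si for si in s)
        # BB1 stepsize; each update costs O(n), like the gradient step itself.
        alpha = ss / sy if sy > 0.0 else alpha0
        x, g = x_new, g_new
    return x


# Hypothetical test problem: f(x) = 0.5 * sum(a_i x_i^2) - sum(b_i x_i),
# with condition number 100 and minimizer x_i = b_i / a_i = 1.
a = [1.0, 10.0, 100.0]
b = [1.0, 10.0, 100.0]


def grad_quadratic(x):
    return [ai * xi - bi for ai, xi, bi in zip(a, x, b)]


x_star = bb_gradient_descent(grad_quadratic, [0.0, 0.0, 0.0], alpha0=1.0 / max(a))
```

A single scalar stepsize has to compromise between the coordinates with curvature 1 and curvature 100, which is the limitation that a per-coordinate (diagonal) metric is intended to address.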

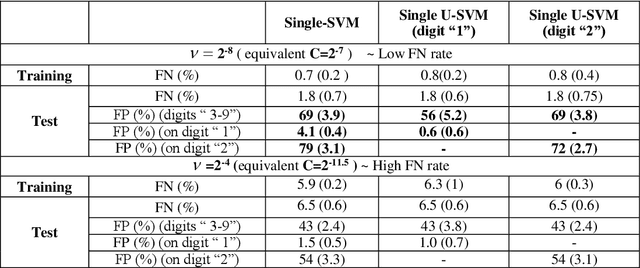

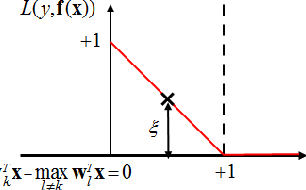

Single Class Universum-SVM

Sep 21, 2019

Abstract: This paper extends the idea of Universum learning [1, 2] to single-class learning problems. We propose a Single Class Universum-SVM setting that incorporates a priori knowledge (in the form of additional data samples) into the single class estimation problem. These additional data samples, or Universum, belong to the same application domain as the (positive) data samples from the single class of interest, but they follow a different distribution. The proposed methodology for single class U-SVM is based on the known connection between binary classification and single class learning formulations [3]. Several empirical comparisons are presented to illustrate the utility of the proposed approach.

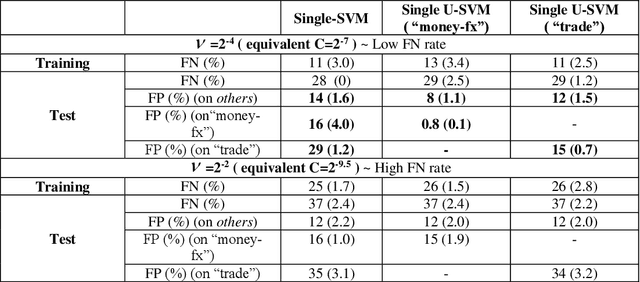

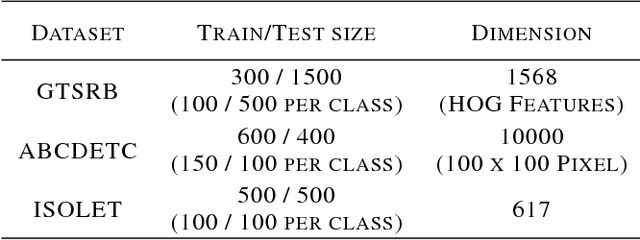

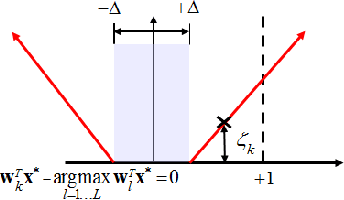

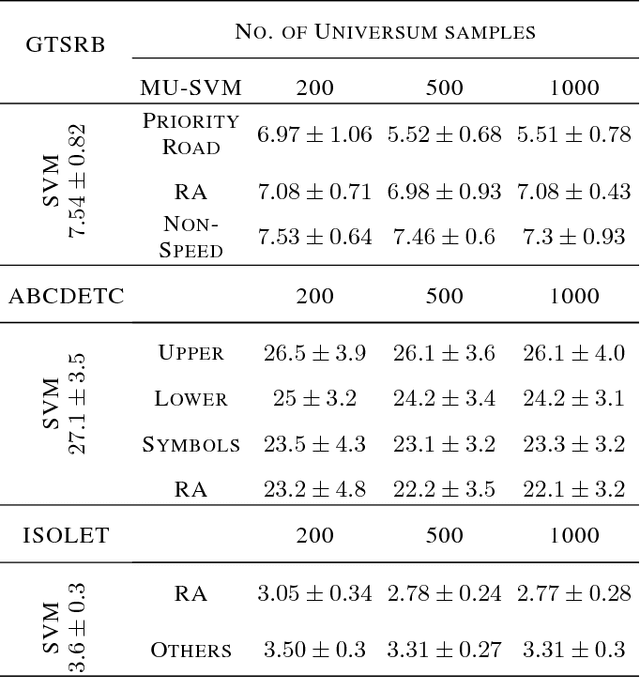

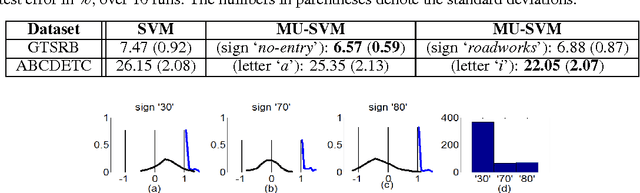

Multiclass Universum SVM

Aug 23, 2018

Abstract: We introduce Universum learning for multiclass problems and propose a novel formulation for multiclass universum SVM (MU-SVM). We also propose an analytic span bound for model selection with about 2-4x faster computation times than standard resampling techniques. We empirically demonstrate the efficacy of the proposed MU-SVM formulation on several real-world datasets, achieving more than 20% improvement in test accuracies compared to multi-class SVM.

Universum Learning for Multiclass SVM

Sep 29, 2016

Abstract: We introduce Universum learning for multiclass problems and propose a novel formulation for multiclass universum SVM (MU-SVM). We also propose a span bound for MU-SVM that can be used for model selection, thereby avoiding resampling. Empirical results demonstrate the effectiveness of MU-SVM and the proposed bound.

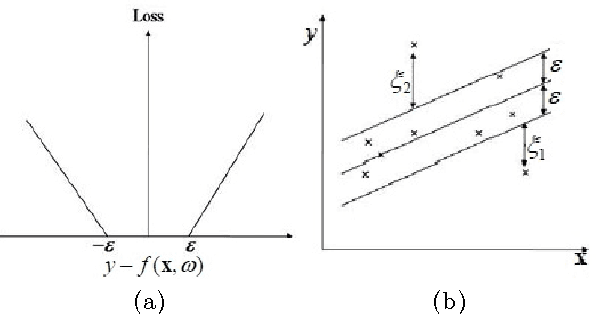

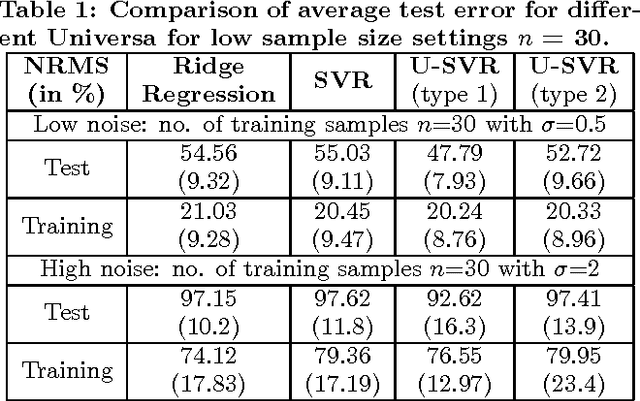

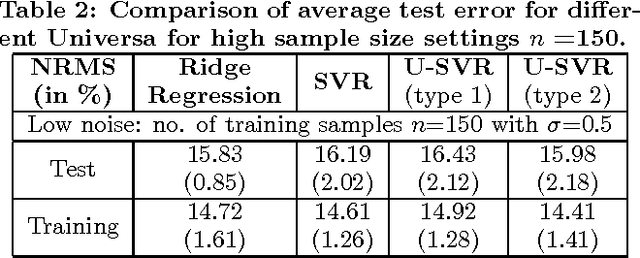

Universum Learning for SVM Regression

May 27, 2016

Abstract: This paper extends the idea of Universum learning [18, 19] to regression problems. We propose a new Universum-SVM formulation for regression problems that incorporates a priori knowledge in the form of additional data samples. These additional data samples, or Universum, belong to the same application domain as the training samples, but they follow a different distribution. Several empirical comparisons are presented to illustrate the utility of the proposed approach.
