Sangwoo Jung

GaRLILEO: Gravity-aligned Radar-Leg-Inertial Enhanced Odometry

Nov 17, 2025

Abstract:Deployment of legged robots for navigating challenging terrains (e.g., stairs, slopes, and unstructured environments) has gained increasing preference over wheel-based platforms. In such scenarios, accurate odometry estimation is a preliminary requirement for stable locomotion, localization, and mapping. Traditional proprioceptive approaches, which rely on leg kinematics sensor modalities and inertial sensing, suffer from irrepressible vertical drift caused by frequent contact impacts, foot slippage, and vibrations, particularly affected by inaccurate roll and pitch estimation. Existing methods incorporate exteroceptive sensors such as LiDAR or cameras. Further enhancement has been introduced by leveraging gravity vector estimation to add additional observations on roll and pitch, thereby increasing the accuracy of vertical pose estimation. However, these approaches tend to degrade in feature-sparse or repetitive scenes and are prone to errors from double-integrated IMU acceleration. To address these challenges, we propose GaRLILEO, a novel gravity-aligned continuous-time radar-leg-inertial odometry framework. GaRLILEO decouples velocity from the IMU by building a continuous-time ego-velocity spline from SoC radar Doppler and leg kinematics information, enabling seamless sensor fusion which mitigates odometry distortion. In addition, GaRLILEO can reliably capture accurate gravity vectors leveraging a novel soft S2-constrained gravity factor, improving vertical pose accuracy without relying on LiDAR or cameras. Evaluated on a self-collected real-world dataset with diverse indoor-outdoor trajectories, GaRLILEO demonstrates state-of-the-art accuracy, particularly in vertical odometry estimation on stairs and slopes. We open-source both our dataset and algorithm to foster further research in legged robot odometry and SLAM. https://garlileo.github.io/GaRLILEO
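The abstract above notes that a gravity vector estimate adds observations on roll and pitch. The following is a minimal sketch of that geometric relationship only, not the GaRLILEO factor itself. It assumes a z-up world frame, a ZYX (yaw-pitch-roll) Euler convention, and a unit gravity direction expressed in the body frame; the function names `gravity_in_body` and `roll_pitch_from_gravity` are hypothetical.

```python
import math


def gravity_in_body(roll, pitch, yaw):
    """Unit gravity direction in the body frame for R = Rz(yaw) Ry(pitch) Rx(roll).

    Assumption: the world frame is z-up, so gravity points along (0, 0, -1).
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    # Body-to-world rotation matrix.
    rot = [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]
    g_world = (0.0, 0.0, -1.0)
    # g_body = R^T * g_world
    return [sum(rot[j][i] * g_world[j] for j in range(3)) for i in range(3)]


def roll_pitch_from_gravity(g_body):
    """Recover roll and pitch from a body-frame gravity direction.

    Yaw does not appear in g_body, so it cannot be recovered from gravity alone.
    """
    gx, gy, gz = g_body
    roll = math.atan2(-gy, -gz)
    pitch = math.atan2(gx, math.hypot(gy, gz))
    return roll, pitch
```

Under these assumptions, changing the yaw leaves the body-frame gravity direction unchanged, which is why gravity constrains only roll and pitch.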

GaRLIO: Gravity enhanced Radar-LiDAR-Inertial Odometry

Feb 11, 2025

Abstract:Recently, gravity has been highlighted as a crucial constraint for state estimation to alleviate potential vertical drift. Existing online gravity estimation methods rely on pose estimation combined with IMU measurements, which is considered best practice when direct velocity measurements are unavailable. However, with radar sensors providing direct velocity data (a measurement not yet utilized for gravity estimation), we found a significant opportunity to improve gravity estimation accuracy substantially. GaRLIO, the proposed gravity-enhanced Radar-LiDAR-Inertial Odometry, can robustly predict gravity to reduce vertical drift while simultaneously enhancing state estimation performance using pointwise velocity measurements. Furthermore, GaRLIO ensures robustness in dynamic environments by utilizing radar to remove dynamic objects from LiDAR point clouds. Our method is validated through experiments in various environments prone to vertical drift, demonstrating superior performance compared to traditional LiDAR-Inertial Odometry methods. We make our source code publicly available to encourage further research and development. https://github.com/ChiyunNoh/GaRLIO
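To illustrate how pointwise radar Doppler measurements can yield a direct velocity estimate, here is a minimal least-squares sketch. It is a generic textbook formulation, not the GaRLIO implementation. It assumes all points are static, that each point has a unit direction vector from the sensor, and that the measured Doppler velocity follows the sign convention `doppler = -direction · ego_velocity`; the function names are hypothetical.

```python
def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point geometry")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for i in reversed(range(3)):
        s = m[i][3] - sum(m[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / m[i][i]
    return x


def estimate_ego_velocity(directions, dopplers):
    """Least-squares sensor velocity from static radar points.

    directions: unit vectors from the sensor to each point.
    dopplers:   measured radial velocities (assumed: doppler = -d . v).
    """
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for d, vr in zip(directions, dopplers):
        for i in range(3):
            atb[i] += d[i] * (-vr)
            for j in range(3):
                ata[i][j] += d[i] * d[j]
    # Normal equations: (A^T A) v = A^T b
    return solve3(ata, atb)
```

In practice, moving objects violate the static-point assumption, so a robust scheme such as RANSAC is commonly wrapped around a solver like this one.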

HeRCULES: Heterogeneous Radar Dataset in Complex Urban Environment for Multi-session Radar SLAM

Feb 04, 2025

Abstract:Recently, radars have been widely featured in robotics for their robustness in challenging weather conditions. Two commonly used radar types are spinning radars and phased-array radars, each offering distinct sensor characteristics. Existing datasets typically feature only a single type of radar, leading to the development of algorithms limited to that specific kind. In this work, we highlight that combining different radar types offers complementary advantages, which can be leveraged through a heterogeneous radar dataset. Moreover, this new dataset fosters research in multi-session and multi-robot scenarios where robots are equipped with different types of radars. In this context, we introduce the HeRCULES dataset, a comprehensive, multi-modal dataset with heterogeneous radars, FMCW LiDAR, IMU, GPS, and cameras. This is the first dataset to integrate 4D radar and spinning radar alongside FMCW LiDAR, offering unparalleled localization, mapping, and place recognition capabilities. The dataset covers diverse weather and lighting conditions and a range of urban traffic scenarios, enabling a comprehensive analysis across various environments. The sequence paths with multiple revisits and ground truth pose for each sensor enhance its suitability for place recognition research. We expect the HeRCULES dataset to facilitate odometry, mapping, place recognition, and sensor fusion research. The dataset and development tools are available at https://sites.google.com/view/herculesdataset.

HeLiOS: Heterogeneous LiDAR Place Recognition via Overlap-based Learning and Local Spherical Transformer

Jan 31, 2025

Abstract:LiDAR place recognition is a crucial module in localization that matches the current location with previously observed environments. Most existing approaches in LiDAR place recognition focus predominantly on spinning-type LiDAR to exploit its large FOV for matching. However, with the recent emergence of various LiDAR types, the importance of matching data across different LiDAR types has grown significantly, a challenge that has been largely overlooked for many years. To address this challenge, we introduce HeLiOS, a deep network tailored for heterogeneous LiDAR place recognition, which utilizes small local windows with spherical transformers and optimal transport-based cluster assignment for robust global descriptors. Our overlap-based data mining and guided-triplet loss overcome the limitations of traditional distance-based mining and discrete class constraints. HeLiOS is validated on public datasets, demonstrating performance in heterogeneous LiDAR place recognition while including an evaluation for long-term recognition, showcasing its ability to handle unseen LiDAR types. We release the HeLiOS code as open source for the robotics community at https://github.com/minwoo0611/HeLiOS.

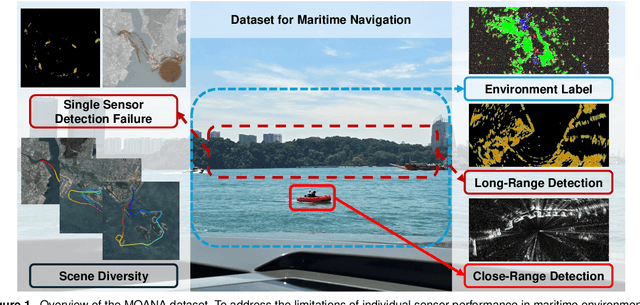

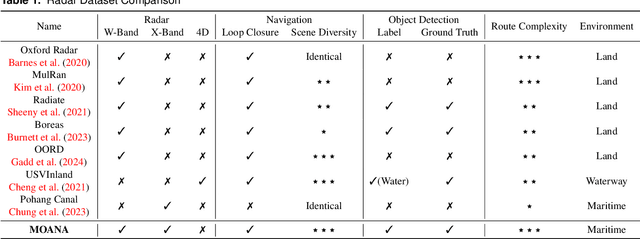

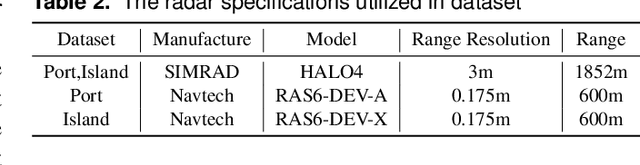

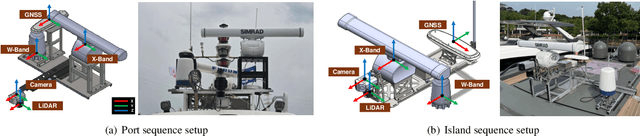

MOANA: Multi-Radar Dataset for Maritime Odometry and Autonomous Navigation Application

Dec 05, 2024

Abstract:Maritime environmental sensing requires overcoming challenges from complex conditions such as harsh weather, platform perturbations, large dynamic objects, and the requirement for long detection ranges. While cameras and LiDAR are commonly used in ground vehicle navigation, their applicability in maritime settings is limited by range constraints and hardware maintenance issues. Radar sensors, however, offer robust long-range detection capabilities and resilience to physical contamination from weather and saline conditions, making them powerful sensors for maritime navigation. Among various radar types, X-band radar (e.g., marine radar) is widely employed for maritime vessel navigation, providing effective long-range detection essential for situational awareness and collision avoidance. Nevertheless, it exhibits limitations during berthing operations where close-range object detection is critical. To address this shortcoming, we incorporate W-band radar (e.g., Navtech imaging radar), which excels in detecting nearby objects with a higher update rate. We present a comprehensive maritime sensor dataset featuring multi-range detection capabilities. This dataset integrates short-range LiDAR data, medium-range W-band radar data, and long-range X-band radar data into a unified framework. Additionally, it includes object labels for oceanic object detection, derived from radar and stereo camera images. The dataset comprises seven sequences collected from diverse regions with varying levels of estimation difficulty, ranging from easy to challenging, and includes common locations suitable for global localization tasks. This dataset serves as a valuable resource for advancing research in place recognition, odometry estimation, SLAM, object detection, and dynamic object elimination within maritime environments. The dataset can be found at the following link: https://sites.google.com/view/rpmmoana

OPAL: Outlier-Preserved Microscaling Quantization Accelerator for Generative Large Language Models

Sep 06, 2024

Abstract:To overcome the burden on memory size and bandwidth caused by the ever-increasing size of large language models (LLMs), aggressive weight quantization has recently been studied, while research on quantizing activations remains lacking. In this paper, we present a hardware-software co-design method that results in an energy-efficient LLM accelerator, named OPAL, for generation tasks. First of all, a novel activation quantization method that leverages the microscaling data format while preserving several outliers per sub-tensor block (e.g., four out of 128 elements) is proposed. Second, on top of preserving outliers, mixed precision is utilized that sets 5-bit for inputs to sensitive layers in the decoder block of an LLM, while keeping inputs to less sensitive layers to 3-bit. Finally, we present the OPAL hardware architecture that consists of FP units for handling outliers and vectorized INT multipliers for dominant non-outlier related operations. In addition, OPAL uses log2-based approximation on softmax operations that only requires shift and subtraction to maximize power efficiency. As a result, we are able to improve the energy efficiency by 1.6~2.2x, and reduce the area by 2.4~3.1x with negligible accuracy loss, i.e., <1 perplexity increase.
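The idea of preserving a few outliers per block while quantizing the rest with a shared scale can be sketched as follows. This is an illustrative approximation, not the OPAL algorithm or the microscaling format specification. It assumes outliers are chosen by largest magnitude, the shared scale is a power of two, and integers are symmetric signed values; the function name `quantize_block` and its parameters are hypothetical.

```python
import math


def quantize_block(block, bits=3, num_outliers=4):
    """Quantize one block, keeping the largest-magnitude elements untouched.

    Returns (dequantized_values, shared_scale). Non-outlier elements are
    rounded to signed integers in [-qmax, qmax] times a power-of-two scale.
    """
    # Assumption: outliers are the num_outliers largest elements by magnitude.
    order = sorted(range(len(block)), key=lambda i: abs(block[i]), reverse=True)
    outlier_idx = set(order[:num_outliers])

    inlier_max = max(
        (abs(x) for i, x in enumerate(block) if i not in outlier_idx),
        default=0.0,
    )
    qmax = 2 ** (bits - 1) - 1  # e.g. 3 for 3-bit symmetric integers
    if inlier_max == 0.0:
        scale = 1.0
    else:
        # Smallest power of two such that inlier_max / scale <= qmax.
        scale = 2.0 ** math.ceil(math.log2(inlier_max / qmax))

    out = []
    for i, x in enumerate(block):
        if i in outlier_idx:
            out.append(x)  # outlier kept at original precision
        else:
            q = max(-qmax, min(qmax, round(x / scale)))
            out.append(q * scale)
    return out, scale
```

Because the outliers are excluded when choosing the scale, the remaining elements share a much finer quantization step than they would if the largest values set the range.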

Quantitative 3D Map Accuracy Evaluation Hardware and Algorithm for LiDAR(-Inertial) SLAM

Aug 19, 2024

Abstract:Accuracy evaluation of a 3D pointcloud map is crucial for the development of autonomous driving systems. In this work, we propose a user-independent software/hardware system that can quantitatively evaluate the accuracy of a 3D pointcloud map acquired from LiDAR(-Inertial) SLAM. We introduce a LiDAR target that functions robustly in the outdoor environment, while remaining observable by LiDAR. We also propose a software algorithm that automatically extracts representative points and calculates the accuracy of the 3D pointcloud map by leveraging GPS position data. This methodology overcomes the limitation of the manual selection method, whose results vary between users. Furthermore, two different error metrics, relative and absolute errors, are introduced to analyze the accuracy from different perspectives. Our implementations are available at: https://github.com/SangwooJung98/3D_Map_Evaluation

Co-RaL: Complementary Radar-Leg Odometry with 4-DoF Optimization and Rolling Contact

Jul 10, 2024

Abstract:Robust and accurate localization in challenging environments is becoming crucial for SLAM. In this paper, we propose a unique sensor configuration for precise and robust odometry by integrating chip radar and a legged robot. Specifically, we introduce a tightly coupled radar-leg odometry algorithm for complementary drift correction. Adopting the 4-DoF optimization and decoupled RANSAC for mmWave chip radar significantly enhances radar odometry beyond the existing method, especially in the z-direction, even when using a single radar. For the leg odometry, we employ rolling contact modeling-aided forward kinematics, accommodating scenarios with the possibility of contact drift and radar failure. We evaluate our method by comparing it with other chip radar odometry algorithms using real-world datasets with diverse environments. The datasets will be released for the robotics community. https://github.com/SangwooJung98/Co-RaL-Dataset

Imaging radar and LiDAR image translation for 3-DOF extrinsic calibration

Mar 27, 2024

Abstract:The integration of sensor data is crucial in the field of robotics to take full advantage of the various sensors employed. One critical aspect of this integration is determining the extrinsic calibration parameters, such as the relative transformation, between each sensor. The use of data fusion between complementary sensors, such as radar and LiDAR, can provide significant benefits, particularly in harsh environments where accurate depth data is required. However, noise included in radar sensor data can make the estimation of extrinsic calibration challenging. To address this issue, we present a novel framework for the extrinsic calibration of radar and LiDAR sensors, utilizing CycleGAN as a method of image-to-image translation. Our proposed method translates radar bird-eye-view images into LiDAR-style images to estimate the 3-DOF extrinsic parameters. Image registration techniques, as well as deskewing based on sensor odometry and B-spline interpolation, are employed to address the rolling shutter effect commonly present in spinning sensors. Our method demonstrates a notable improvement in extrinsic calibration compared to filter-based methods using the MulRan dataset.

TRansPose: Large-Scale Multispectral Dataset for Transparent Object

Jul 11, 2023

Abstract:Transparent objects are encountered frequently in our daily lives, yet recognizing them poses challenges for conventional vision sensors due to their unique material properties, which are not well perceived by RGB or depth cameras. Overcoming this limitation, thermal infrared cameras have emerged as a solution, offering improved visibility and shape information for transparent objects. In this paper, we present TRansPose, the first large-scale multispectral dataset that combines stereo RGB-D, thermal infrared (TIR) images, and object poses to promote transparent object research. The dataset includes 99 transparent objects (43 household items, 27 recyclable trashes, and 29 chemical laboratory equivalents) and 12 non-transparent objects. It comprises a vast collection of 333,819 images and 4,000,056 annotations, providing instance-level segmentation masks, ground-truth poses, and completed depth information. The data was acquired using a FLIR A65 thermal infrared (TIR) camera, two Intel RealSense L515 RGB-D cameras, and a Franka Emika Panda robot manipulator. Spanning 87 sequences, TRansPose covers various challenging real-life scenarios, including objects filled with water, diverse lighting conditions, heavy clutter, non-transparent or translucent containers, objects in plastic bags, and multi-stacked objects. The TRansPose dataset can be accessed from the following link: https://sites.google.com/view/transpose-dataset
