Minwoo Jung

TreeLoc: 6-DoF LiDAR Global Localization in Forests via Inter-Tree Geometric Matching

Feb 03, 2026

Abstract: Reliable localization is crucial for navigation in forests, where GPS is often degraded and LiDAR measurements are repetitive, occluded, and structurally complex. These conditions weaken the assumptions of traditional urban-centric localization methods, which assume that consistent features arise from unique structural patterns, necessitating forest-centric solutions to achieve robustness in these environments. To address these challenges, we propose TreeLoc, a LiDAR-based global localization framework for forests that handles place recognition and 6-DoF pose estimation. We represent scenes using tree stems and their Diameter at Breast Height (DBH), which are aligned to a common reference frame via their axes and summarized using the tree distribution histogram (TDH) for coarse matching, followed by fine matching with a 2D triangle descriptor. Finally, pose estimation is achieved through a two-step geometric verification. On diverse forest benchmarks, TreeLoc outperforms baselines, achieving precise localization. Ablation studies validate the contribution of each component. We also propose applications for long-term forest management using descriptors from a compact global tree database. TreeLoc is open-sourced for the robotics community at https://github.com/minwoo0611/TreeLoc.

SHeRLoc: Synchronized Heterogeneous Radar Place Recognition for Cross-Modal Localization

Jun 18, 2025

Abstract: Despite the growing adoption of radar in robotics, the majority of research has been confined to homogeneous sensor types, overlooking the integration and cross-modality challenges inherent in heterogeneous radar technologies. This leads to significant difficulties in generalizing across diverse radar data types, with modality-aware approaches that could leverage the complementary strengths of heterogeneous radar remaining unexplored. To bridge these gaps, we propose SHeRLoc, the first deep network tailored for heterogeneous radar, which utilizes RCS polar matching to align multimodal radar data. Our hierarchical optimal transport-based feature aggregation method generates rotationally robust multi-scale descriptors. By employing FFT-similarity-based data mining and adaptive margin-based triplet loss, SHeRLoc enables FOV-aware metric learning. SHeRLoc achieves an order-of-magnitude improvement in heterogeneous radar place recognition, increasing recall@1 from below 0.1 to 0.9 on a public dataset and outperforming state-of-the-art methods. Also applicable to LiDAR, SHeRLoc paves the way for cross-modal place recognition and heterogeneous sensor SLAM. The source code will be available upon acceptance.
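
For readers unfamiliar with the recall@1 figure quoted above, the following is a minimal sketch of how the metric is commonly computed in place recognition. It is not taken from the SHeRLoc code. The function name `recall_at_1`, the use of Euclidean distance between descriptors, and the `positives` ground-truth format are all assumptions made for illustration.

```python
import math


def recall_at_1(queries, database, positives):
    """Fraction of queries whose nearest database descriptor is a true match.

    queries   -- list of query descriptors (sequences of floats)
    database  -- list of database descriptors (same dimensionality)
    positives -- positives[i] is the set of database indices that count as
                 the same place as query i (hypothetical ground-truth format)
    """
    hits = 0
    evaluated = 0
    for qi, q in enumerate(queries):
        if not positives[qi]:
            continue  # skip queries that have no true match in the database
        evaluated += 1
        # Index of the database descriptor closest to the query (Euclidean).
        nearest = min(range(len(database)),
                      key=lambda di: math.dist(q, database[di]))
        if nearest in positives[qi]:
            hits += 1
    return hits / evaluated if evaluated else 0.0


# Toy example: the first and third queries retrieve a true match, the second does not.
queries = [(0.0, 0.1), (1.0, 1.0), (5.0, 5.0)]
database = [(0.0, 0.0), (1.0, 0.9), (4.0, 4.0)]
positives = [{0}, {2}, {2}]
score = recall_at_1(queries, database, positives)
```

Under this definition, a recall@1 of 0.9 means that for 90% of the evaluated queries the single best-ranked candidate is a correct revisit.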

ImLPR: Image-based LiDAR Place Recognition using Vision Foundation Models

May 23, 2025

Abstract: LiDAR Place Recognition (LPR) is a key component in robotic localization, enabling robots to align current scans with prior maps of their environment. While Visual Place Recognition (VPR) has embraced Vision Foundation Models (VFMs) to enhance descriptor robustness, LPR has relied on task-specific models with limited use of pre-trained foundation-level knowledge. This is due to the lack of 3D foundation models and the challenges of using VFM with LiDAR point clouds. To tackle this, we introduce ImLPR, a novel pipeline that employs a pre-trained DINOv2 VFM to generate rich descriptors for LPR. To our knowledge, ImLPR is the first method to leverage a VFM to support LPR. ImLPR converts raw point clouds into Range Image Views (RIV) to leverage VFM in the LiDAR domain. It employs MultiConv adapters and Patch-InfoNCE loss for effective feature learning. We validate ImLPR using public datasets where it outperforms state-of-the-art (SOTA) methods in intra-session and inter-session LPR with top Recall@1 and F1 scores across various LiDARs. We also demonstrate that RIV outperforms Bird's-Eye-View (BEV) as a representation choice for adapting LiDAR for VFM. We release ImLPR as open source for the robotics community.
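
As a rough illustration of what converting a point cloud into a range image involves, here is a minimal sketch of the standard spherical projection. It is not ImLPR's implementation. The function name `project_to_range_image`, the image size, and the vertical field of view are hypothetical values chosen for the example.

```python
import math


def project_to_range_image(points, height=16, width=64,
                           fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z) points onto a height x width range image.

    Each pixel stores the range of the closest point that falls into it;
    0.0 marks an empty pixel. All default parameters are illustrative.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # a point at the sensor origin has no direction
        yaw = math.atan2(y, x)     # azimuth in [-pi, pi]
        pitch = math.asin(z / r)   # elevation in [-pi/2, pi/2]
        if pitch > fov_up or pitch < fov_down:
            continue  # outside the assumed vertical field of view
        # Map azimuth to a column and elevation to a row (top row = fov_up).
        col = min(width - 1, max(0, int(0.5 * (1.0 - yaw / math.pi) * width)))
        row = min(height - 1, max(0, int((fov_up - pitch) / fov * height)))
        if image[row][col] == 0.0 or r < image[row][col]:
            image[row][col] = r  # keep the nearest return per pixel
    return image


# Two points along +x share a pixel; a point straight up is outside the FOV.
img = project_to_range_image([(10.0, 0.0, 0.0), (5.0, 0.0, 0.0), (0.0, 0.0, 10.0)])
```

The resulting 2D grid can be treated like an ordinary image, which is what makes an image-trained model applicable to LiDAR data in the first place.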

The City that Never Settles: Simulation-based LiDAR Dataset for Long-Term Place Recognition Under Extreme Structural Changes

May 08, 2025

Abstract: Large-scale construction and demolition significantly challenge long-term place recognition (PR) by drastically reshaping urban and suburban environments. Existing datasets predominantly reflect limited or indoor-focused changes, failing to adequately represent extensive outdoor transformations. To bridge this gap, we introduce the City that Never Settles (CNS) dataset, a simulation-based dataset created using the CARLA simulator, capturing major structural changes, such as building construction and demolition, across diverse maps and sequences. Additionally, we propose TCR_sym, a symmetric version of the original TCR metric, enabling consistent measurement of structural changes irrespective of source-target ordering. Quantitative comparisons demonstrate that CNS encompasses more extensive transformations than current real-world benchmarks. Evaluations of state-of-the-art LiDAR-based PR methods on CNS reveal substantial performance degradation, underscoring the need for robust algorithms capable of handling significant environmental changes. Our dataset is available at https://github.com/Hyunho111/CNS_dataset.

GaRLIO: Gravity enhanced Radar-LiDAR-Inertial Odometry

Feb 11, 2025

Abstract: Recently, gravity has been highlighted as a crucial constraint for state estimation to alleviate potential vertical drift. Existing online gravity estimation methods rely on pose estimation combined with IMU measurements, which is considered best practice when direct velocity measurements are unavailable. However, with radar sensors providing direct velocity data (a measurement not yet utilized for gravity estimation), we found a significant opportunity to substantially improve gravity estimation accuracy. GaRLIO, the proposed gravity-enhanced Radar-LiDAR-Inertial Odometry, can robustly predict gravity to reduce vertical drift while simultaneously enhancing state estimation performance using pointwise velocity measurements. Furthermore, GaRLIO ensures robustness in dynamic environments by utilizing radar to remove dynamic objects from LiDAR point clouds. Our method is validated through experiments in various environments prone to vertical drift, demonstrating superior performance compared to traditional LiDAR-Inertial Odometry methods. We make our source code publicly available at https://github.com/ChiyunNoh/GaRLIO to encourage further research and development.

HeRCULES: Heterogeneous Radar Dataset in Complex Urban Environment for Multi-session Radar SLAM

Feb 04, 2025

Abstract: Recently, radars have been widely featured in robotics for their robustness in challenging weather conditions. Two commonly used radar types are spinning radars and phased-array radars, each offering distinct sensor characteristics. Existing datasets typically feature only a single type of radar, leading to the development of algorithms limited to that specific kind. In this work, we highlight that combining different radar types offers complementary advantages, which can be leveraged through a heterogeneous radar dataset. Moreover, this new dataset fosters research in multi-session and multi-robot scenarios where robots are equipped with different types of radars. In this context, we introduce the HeRCULES dataset, a comprehensive, multi-modal dataset with heterogeneous radars, FMCW LiDAR, IMU, GPS, and cameras. This is the first dataset to integrate 4D radar and spinning radar alongside FMCW LiDAR, offering unparalleled localization, mapping, and place recognition capabilities. The dataset covers diverse weather and lighting conditions and a range of urban traffic scenarios, enabling a comprehensive analysis across various environments. The sequence paths with multiple revisits and ground truth pose for each sensor enhance its suitability for place recognition research. We expect the HeRCULES dataset to facilitate odometry, mapping, place recognition, and sensor fusion research. The dataset and development tools are available at https://sites.google.com/view/herculesdataset.

HeLiOS: Heterogeneous LiDAR Place Recognition via Overlap-based Learning and Local Spherical Transformer

Jan 31, 2025

Abstract: LiDAR place recognition is a crucial module in localization that matches the current location with previously observed environments. Most existing approaches in LiDAR place recognition focus predominantly on the spinning type LiDAR to exploit its large FOV for matching. However, with the recent emergence of various LiDAR types, the importance of matching data across different LiDAR types has grown significantly, a challenge that has been largely overlooked for many years. To address these challenges, we introduce HeLiOS, a deep network tailored for heterogeneous LiDAR place recognition, which utilizes small local windows with spherical transformers and optimal transport-based cluster assignment for robust global descriptors. Our overlap-based data mining and guided-triplet loss overcome the limitations of traditional distance-based mining and discrete class constraints. HeLiOS is validated on public datasets, demonstrating its performance in heterogeneous LiDAR place recognition while including an evaluation for long-term recognition, showcasing its ability to handle unseen LiDAR types. We release the HeLiOS code as open source for the robotics community at https://github.com/minwoo0611/HeLiOS.

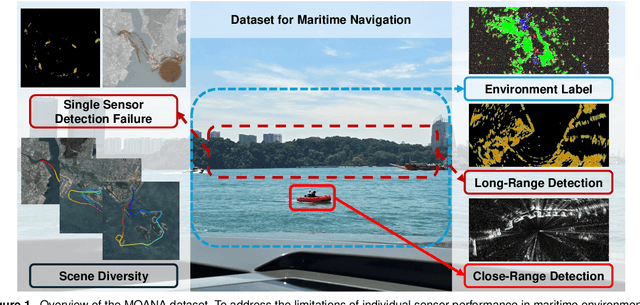

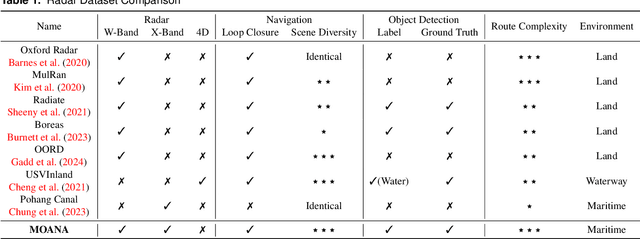

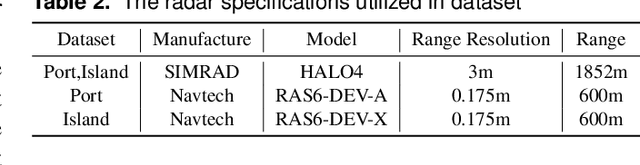

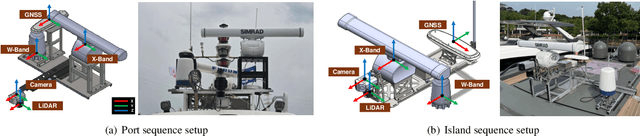

MOANA: Multi-Radar Dataset for Maritime Odometry and Autonomous Navigation Application

Dec 05, 2024

Abstract: Maritime environmental sensing requires overcoming challenges from complex conditions such as harsh weather, platform perturbations, large dynamic objects, and the requirement for long detection ranges. While cameras and LiDAR are commonly used in ground vehicle navigation, their applicability in maritime settings is limited by range constraints and hardware maintenance issues. Radar sensors, however, offer robust long-range detection capabilities and resilience to physical contamination from weather and saline conditions, making them powerful sensors for maritime navigation. Among various radar types, X-band radar (e.g., marine radar) is widely employed for maritime vessel navigation, providing effective long-range detection essential for situational awareness and collision avoidance. Nevertheless, it exhibits limitations during berthing operations where close-range object detection is critical. To address this shortcoming, we incorporate W-band radar (e.g., Navtech imaging radar), which excels in detecting nearby objects with a higher update rate. We present a comprehensive maritime sensor dataset featuring multi-range detection capabilities. This dataset integrates short-range LiDAR data, medium-range W-band radar data, and long-range X-band radar data into a unified framework. Additionally, it includes object labels for oceanic object detection, derived from radar and stereo camera images. The dataset comprises seven sequences collected from diverse regions with varying levels of estimation difficulty, ranging from easy to challenging, and includes common locations suitable for global localization tasks. This dataset serves as a valuable resource for advancing research in place recognition, odometry estimation, SLAM, object detection, and dynamic object elimination within maritime environments. The dataset can be found at the following link: https://sites.google.com/view/rpmmoana

Quantitative 3D Map Accuracy Evaluation Hardware and Algorithm for LiDAR(-Inertial) SLAM

Aug 19, 2024

Abstract: Accuracy evaluation of a 3D pointcloud map is crucial for the development of autonomous driving systems. In this work, we propose a user-independent software/hardware system that can quantitatively evaluate the accuracy of a 3D pointcloud map acquired from LiDAR(-Inertial) SLAM. We introduce a LiDAR target that functions robustly in the outdoor environment, while remaining observable by LiDAR. We also propose a software algorithm that automatically extracts representative points and calculates the accuracy of the 3D pointcloud map by leveraging GPS position data. This methodology overcomes the limitation of the manual selection method, whose results vary between users. Furthermore, two different error metrics, relative and absolute errors, are introduced to analyze the accuracy from different perspectives. Our implementations are available at: https://github.com/SangwooJung98/3D_Map_Evaluation

ConPR: Ongoing Construction Site Dataset for Place Recognition

Jul 04, 2024

Abstract: Place recognition, an essential challenge in computer vision and robotics, involves identifying previously visited locations. Despite algorithmic progress, challenges related to appearance change persist, with existing datasets often focusing on seasonal and weather variations but overlooking terrain changes. Understanding terrain alterations becomes critical for effective place recognition, given the aging infrastructure and ongoing city repairs. For real-world applicability, the comprehensive evaluation of algorithms must consider spatial dynamics. To address existing limitations, we present a novel multi-session place recognition dataset acquired from an active construction site. Our dataset captures ongoing construction progress through multiple data collections, facilitating evaluation in dynamic environments. It includes camera images, LiDAR point cloud data, and IMU data, enabling visual and LiDAR-based place recognition techniques, and supporting sensor fusion. Additionally, we provide ground truth information for range-based place recognition evaluation. Our dataset aims to advance place recognition algorithms in challenging and dynamic settings. Our dataset is available at https://github.com/dongjae0107/ConPR.
