Sangtae Ahn

DAFT-GAN: Dual Affine Transformation Generative Adversarial Network for Text-Guided Image Inpainting

Aug 09, 2024

Abstract: In recent years, there has been a significant focus on research related to text-guided image inpainting. However, the task remains challenging due to several constraints, such as ensuring alignment between the image and the text, and maintaining consistency in distribution between corrupted and uncorrupted regions. In this paper, we therefore propose a dual affine transformation generative adversarial network (DAFT-GAN) to maintain semantic consistency in text-guided inpainting. DAFT-GAN integrates two affine transformation networks to gradually combine text and image features in each decoding block. Moreover, we minimize information leakage of uncorrupted features for fine-grained image generation by encoding corrupted and uncorrupted regions of the masked image separately. Our proposed model outperforms existing GAN-based models in both qualitative and quantitative assessments on three benchmark datasets (MS-COCO, CUB, and Oxford) for text-guided image inpainting.
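The abstract does not say how the two affine transformation networks are wired, so the following is only a minimal NumPy sketch of the general idea of text-conditioned affine modulation: a text vector is projected to a per-channel scale and shift, which are applied to an image feature map, and two such modulations are chained inside one decoding block. The function names, the `params` dictionary, the projection matrices, and the choice of a "global" and a "local" text vector are all assumptions made for illustration, not the DAFT-GAN implementation.

```python
import numpy as np

def affine_modulate(features, text_embedding, w_gamma, w_beta):
    """Scale and shift an image feature map channel-wise using a text vector.

    features:        (C, H, W) image feature map
    text_embedding:  (D,) text vector
    w_gamma, w_beta: (C, D) projection matrices (hypothetical parameters)
    """
    gamma = w_gamma @ text_embedding  # per-channel scale, shape (C,)
    beta = w_beta @ text_embedding    # per-channel shift, shape (C,)
    return gamma[:, None, None] * features + beta[:, None, None]

def dual_affine_block(features, global_text, local_text, params):
    """Chain two text-conditioned affine modulations (assumed wiring)."""
    h = affine_modulate(features, global_text, params["g_gamma"], params["g_beta"])
    h = np.maximum(h, 0.0)  # ReLU between the two modulations (an assumption)
    return affine_modulate(h, local_text, params["l_gamma"], params["l_beta"])

# Tiny usage example with hand-picked values.
features = np.ones((2, 3, 3))
text = np.array([1.0, 0.0])
w_gamma = np.array([[1.0, 0.0], [2.0, 0.0]])  # yields gamma = [1, 2]
w_beta = np.array([[0.0, 0.0], [1.0, 0.0]])   # yields beta  = [0, 1]
modulated = affine_modulate(features, text, w_gamma, w_beta)
```

In a trained network the projections would be learned and applied in every decoding block; here they are fixed so the effect of the scale and shift is easy to inspect.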

Improving the performance of object detection by preserving label distribution

Aug 28, 2023

Abstract: Object detection is a task that performs position identification and label classification of objects in images or videos. The information obtained through this process plays an essential role in various tasks in the field of computer vision. In object detection, the data utilized for training and validation typically originate from public datasets that are well-balanced in terms of the number of objects ascribed to each class in an image. However, in real-world scenarios, handling datasets with much greater class imbalance, i.e., very different numbers of objects for each class, is much more common, and this imbalance may reduce the performance of object detection when predicting unseen test images. In our study, we therefore propose a method that evenly distributes the classes in an image for training and validation, solving the class imbalance problem in object detection. Our proposed method aims to maintain a uniform class distribution through multi-label stratification. We tested our proposed method not only on public datasets that typically exhibit balanced class distribution but also on custom datasets that may have imbalanced class distribution. We found that our proposed method was more effective on datasets containing severe imbalance and less data. Our findings indicate that the proposed method can be effectively used on datasets with substantially imbalanced class distribution.
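To make the idea of preserving label distribution concrete, here is a small greedy sketch of a multi-label stratified train/validation split. It is not the algorithm from the paper, whose details are not given in the abstract; the function name `stratified_split`, the per-image count matrix, and the squared-deviation cost are assumptions chosen for illustration.

```python
import numpy as np

def stratified_split(label_counts, val_fraction=0.2):
    """Greedy train/validation split that keeps per-class object counts
    close to the requested proportions.

    label_counts: (N, K) array, objects of each of K classes in each of N images
    Returns a boolean array of length N (True = validation image).
    """
    counts = np.asarray(label_counts, dtype=float)
    totals = counts.sum(axis=0)
    # Desired per-class object counts in [train, validation].
    targets = np.stack([totals * (1 - val_fraction), totals * val_fraction])
    current = np.zeros_like(targets)
    is_val = np.zeros(len(counts), dtype=bool)
    # Place images with many objects first, since they are hardest to balance.
    order = np.argsort(-counts.sum(axis=1), kind="stable")
    for i in order:
        costs = []
        for s in range(2):
            trial = current.copy()
            trial[s] += counts[i]
            # Squared deviation from the targets if image i went to split s.
            costs.append(((trial - targets) ** 2).sum())
        s = int(np.argmin(costs))
        current[s] += counts[i]
        is_val[i] = (s == 1)
    return is_val

# Imbalanced toy data: 16 images with one object of class 0,
# 4 images with one object of class 1.
toy = np.array([[1, 0]] * 16 + [[0, 1]] * 4)
val_mask = stratified_split(toy, val_fraction=0.25)
val_counts = toy[val_mask].sum(axis=0)
```

A purely random split of the same toy data could easily leave the validation set with no objects of the rare class, which is the failure mode stratification is meant to avoid.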

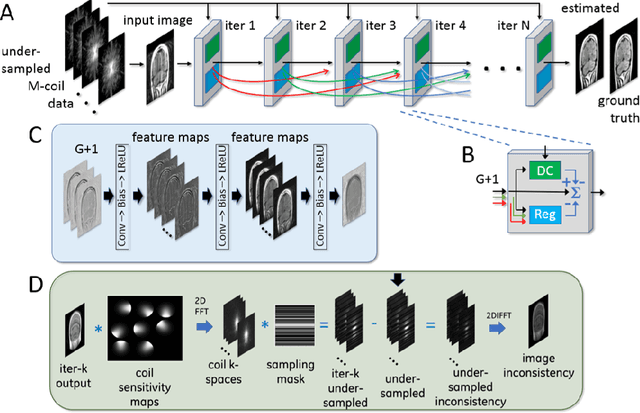

Deep learning-based reconstruction of highly accelerated 3D MRI

Mar 09, 2022

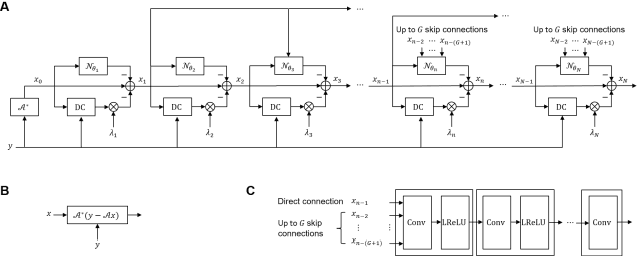

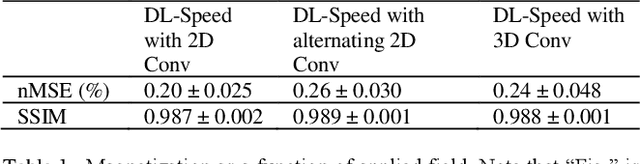

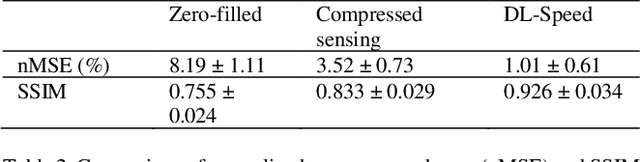

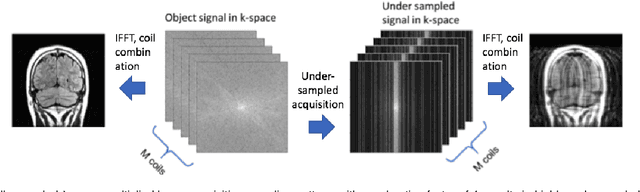

Abstract: Purpose: To accelerate brain 3D MRI scans by using a deep learning method for reconstructing images from highly-undersampled multi-coil k-space data. Methods: DL-Speed, an unrolled optimization architecture with dense skip-layer connections, was trained on 3D T1-weighted brain scan data to reconstruct complex-valued images from highly-undersampled k-space data. The trained model was evaluated on 3D MPRAGE brain scan data retrospectively-undersampled with a 10-fold acceleration, compared to a conventional parallel imaging method with a 2-fold acceleration. Scores of SNR, artifacts, gray/white matter contrast, resolution/sharpness, deep gray-matter, cerebellar vermis, anterior commissure, and overall quality, on a 5-point Likert scale, were assessed by experienced radiologists. In addition, the trained model was tested on retrospectively-undersampled 3D T1-weighted LAVA (Liver Acquisition with Volume Acceleration) abdominal scan data, and on prospectively-undersampled 3D MPRAGE and LAVA scans in three healthy volunteers and one healthy volunteer, respectively. Results: The qualitative scores for DL-Speed with a 10-fold acceleration were higher than or equal to those for parallel imaging with 2-fold acceleration. DL-Speed outperformed a compressed sensing method in quantitative metrics on retrospectively-undersampled LAVA data. DL-Speed was demonstrated to perform reasonably well on prospectively-undersampled scan data, realizing a 2-5 times reduction in scan time. Conclusion: DL-Speed was shown to accelerate 3D MPRAGE and LAVA with up to a net 10-fold acceleration, achieving 2-5 times faster scans compared to conventional parallel imaging and acceleration, while maintaining diagnostic image quality and real-time reconstruction. The brain scan data-trained DL-Speed also performed well when reconstructing abdominal LAVA scan data, demonstrating versatility of the network.
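For readers unfamiliar with retrospective undersampling, the sketch below shows the basic operation on a single 2D, single-coil image: transform to k-space, keep only a fraction of the phase-encode lines, and form a zero-filled reconstruction. This is a simplified illustration, not the 3D multi-coil sampling pattern used in the study; the function name, the random line selection, and the `center_lines` parameter are assumptions.

```python
import numpy as np

def retrospective_undersample(image, acceleration=10, center_lines=8, seed=0):
    """Undersample the k-space of a 2D image along its first axis.

    Keeps `center_lines` fully sampled low-frequency lines (assumed even)
    plus random outer lines, so that roughly 1/acceleration of all lines
    are retained.
    Returns (undersampled k-space, line mask, zero-filled reconstruction).
    """
    rng = np.random.default_rng(seed)
    ny = image.shape[0]
    # Centered 2D FFT: image -> k-space with the DC term in the middle.
    kspace = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))
    mask = np.zeros(ny, dtype=bool)
    c = ny // 2
    mask[c - center_lines // 2 : c + center_lines // 2] = True
    # Number of lines to keep in total, never fewer than the center block.
    n_keep = max(ny // acceleration, center_lines)
    n_extra = int(n_keep - mask.sum())
    candidates = np.flatnonzero(~mask)
    mask[rng.choice(candidates, size=n_extra, replace=False)] = True
    undersampled = kspace * mask[:, None]
    # Zero-filled reconstruction: inverse FFT of the masked k-space.
    zero_filled = np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(undersampled)))
    return undersampled, mask, zero_filled

# Toy example: a 160 x 32 image undersampled 10-fold keeps 16 of 160 lines.
image = np.ones((160, 32))
k_us, line_mask, recon = retrospective_undersample(image, acceleration=10)
```

A learned reconstruction such as the one described in the abstract would take the undersampled k-space (or the zero-filled image) as input and try to recover the fully sampled image.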

Artificial Intelligence in PET: an Industry Perspective

Jul 14, 2021

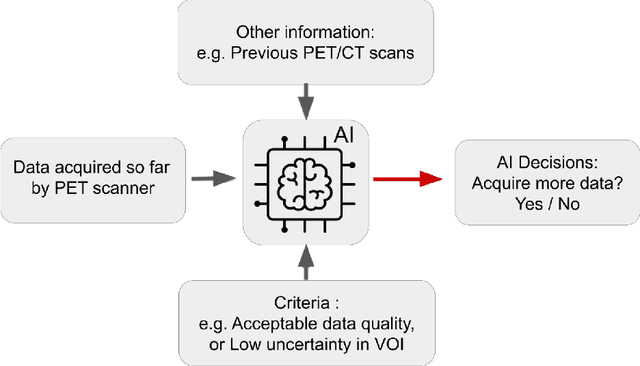

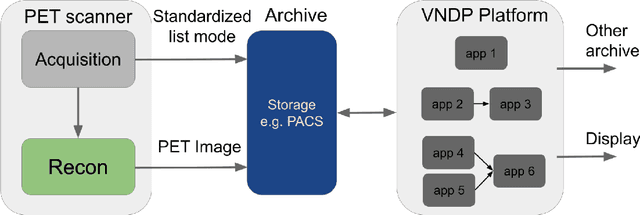

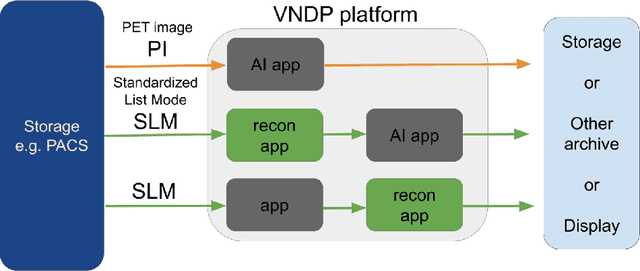

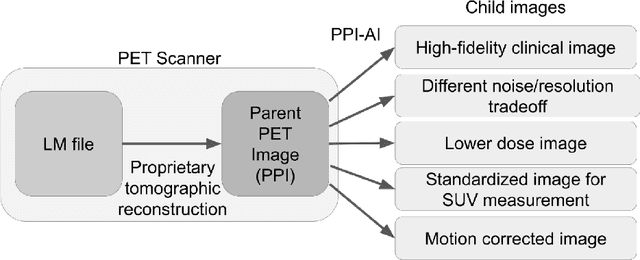

Abstract: Artificial intelligence (AI) has significant potential to positively impact and advance medical imaging, including positron emission tomography (PET) imaging applications. AI has the ability to enhance and optimize all aspects of the PET imaging chain, from patient scheduling, patient setup, protocoling, data acquisition, detector signal processing, and reconstruction to image processing and interpretation. AI poses industry-specific challenges which will need to be addressed and overcome to maximize the future potential of AI in PET. This paper provides an overview of these industry-specific challenges for the development, standardization, commercialization, and clinical adoption of AI, and explores the potential enhancements to PET imaging brought on by AI in the near future. In particular, the combination of on-demand image reconstruction, AI, and custom designed data processing workflows may open new possibilities for innovation which would positively impact the industry and ultimately patients.

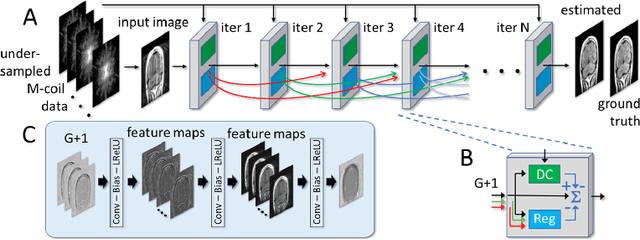

Adaptive Gradient Balancing for Undersampled MRI Reconstruction and Image-to-Image Translation

Apr 05, 2021

Abstract: Recent accelerated MRI reconstruction models have used Deep Neural Networks (DNNs) to reconstruct relatively high-quality images from highly undersampled k-space data, enabling much faster MRI scanning. However, these techniques sometimes struggle to reconstruct sharp images that preserve fine detail while maintaining a natural appearance. In this work, we enhance the image quality by using a Conditional Wasserstein Generative Adversarial Network combined with a novel Adaptive Gradient Balancing (AGB) technique that automates the process of combining the adversarial and pixel-wise terms and streamlines hyperparameter tuning. In addition, we introduce a Densely Connected Iterative Network, which is an undersampled MRI reconstruction network that utilizes dense connections. In MRI, our method minimizes artifacts, while maintaining a high-quality reconstruction that produces sharper images than other techniques. To demonstrate the general nature of our method, it is further evaluated on a battery of image-to-image translation experiments, demonstrating an ability to recover from sub-optimal weighting in multi-term adversarial training.

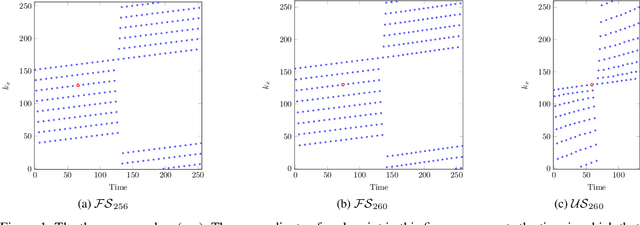

A Novel Approach for Correcting Multiple Discrete Rigid In-Plane Motions Artefacts in MRI Scans

Jun 29, 2020

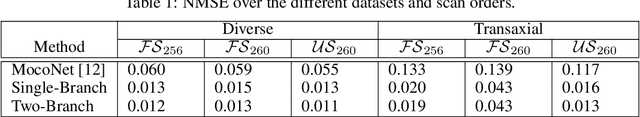

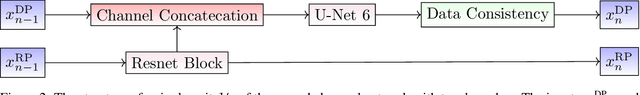

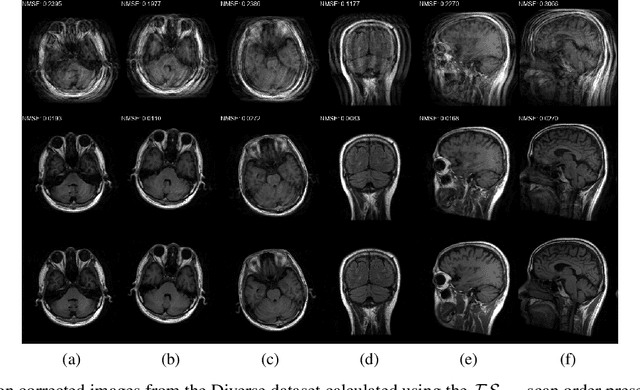

Abstract: Motion artefacts created by patient motion during an MRI scan occur frequently in practice, often rendering the scans clinically unusable and requiring a re-scan. While many methods have been employed to ameliorate the effects of patient motion, these often fall short in practice. In this paper we propose a novel method for removing motion artefacts using a deep neural network with two input branches that discriminates between patient poses using the motion's timing. The first branch receives a subset of the $k$-space data collected during a single patient pose, and the second branch receives the remaining part of the collected $k$-space data. The proposed method can be applied to artefacts generated by multiple movements of the patient. Furthermore, it can be used to correct motion for the case where $k$-space has been under-sampled, to shorten the scan time, as is common when using methods such as parallel imaging or compressed sensing. Experimental results on both simulated and real MRI data show the efficacy of our approach.

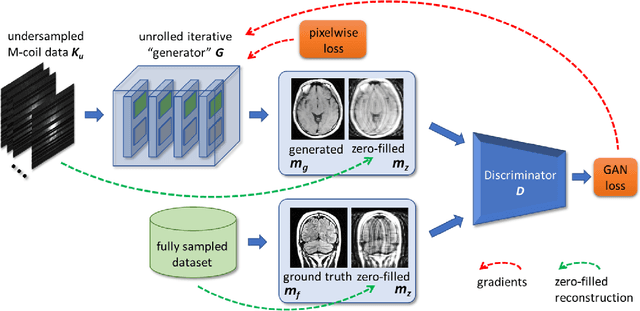

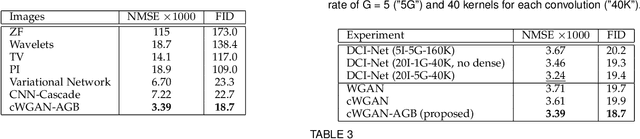

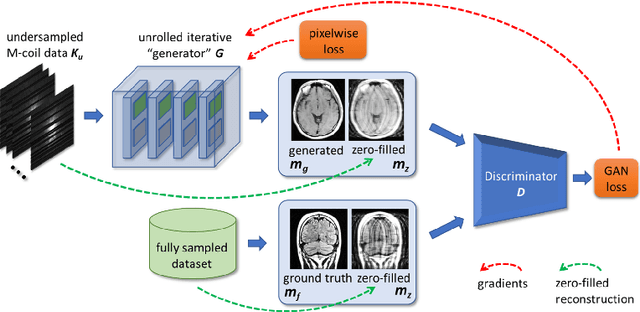

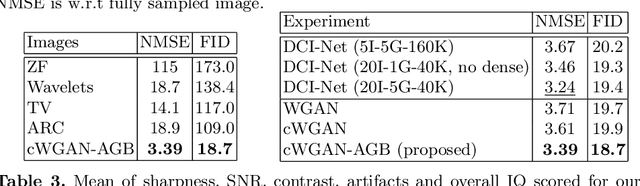

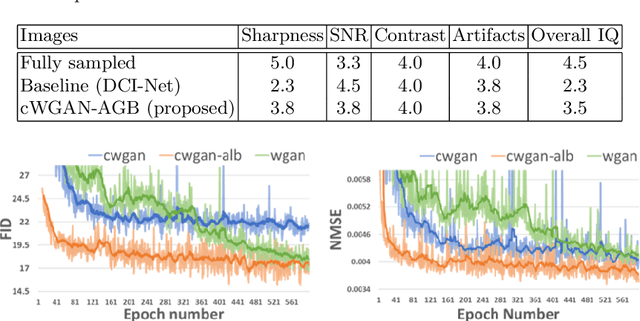

Conditional WGANs with Adaptive Gradient Balancing for Sparse MRI Reconstruction

May 02, 2019

Abstract: Recent sparse MRI reconstruction models have used Deep Neural Networks (DNNs) to reconstruct relatively high-quality images from highly undersampled k-space data, enabling much faster MRI scanning. However, these techniques sometimes struggle to reconstruct sharp images that preserve fine detail while maintaining a natural appearance. In this work, we enhance the image quality by using a Conditional Wasserstein Generative Adversarial Network combined with a novel Adaptive Gradient Balancing technique that stabilizes the training and minimizes the degree of artifacts, while maintaining a high-quality reconstruction that produces sharper images than other techniques.
