Uri Wollner

Deep learning-based reconstruction of highly accelerated 3D MRI

Mar 09, 2022

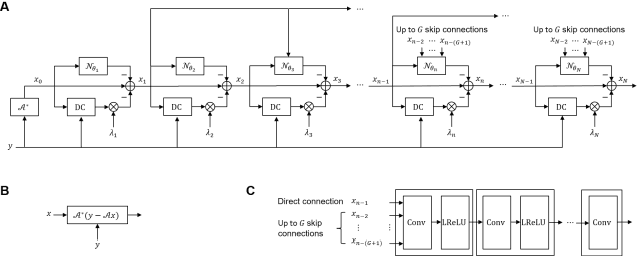

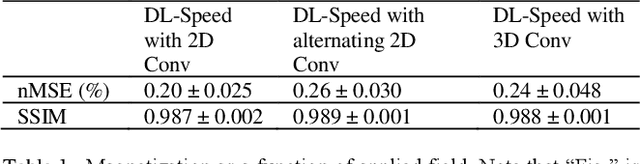

Abstract: Purpose: To accelerate brain 3D MRI scans by using a deep learning method to reconstruct images from highly undersampled multi-coil k-space data. Methods: DL-Speed, an unrolled optimization architecture with dense skip-layer connections, was trained on 3D T1-weighted brain scan data to reconstruct complex-valued images from highly undersampled k-space data. The trained model was evaluated on 3D MPRAGE brain scan data retrospectively undersampled with a 10-fold acceleration and compared to a conventional parallel imaging method with a 2-fold acceleration. Scores of SNR, artifacts, gray/white matter contrast, resolution/sharpness, deep gray-matter, cerebellar vermis, anterior commissure, and overall quality, on a 5-point Likert scale, were assessed by experienced radiologists. In addition, the trained model was tested on retrospectively undersampled 3D T1-weighted LAVA (Liver Acquisition with Volume Acceleration) abdominal scan data, and on prospectively undersampled 3D MPRAGE and LAVA scans in three healthy volunteers and one healthy volunteer, respectively. Results: The qualitative scores for DL-Speed with a 10-fold acceleration were higher than or equal to those for parallel imaging with a 2-fold acceleration. DL-Speed outperformed a compressed sensing method in quantitative metrics on retrospectively undersampled LAVA data. DL-Speed was demonstrated to perform reasonably well on prospectively undersampled scan data, realizing a 2-5 times reduction in scan time. Conclusion: DL-Speed was shown to accelerate 3D MPRAGE and LAVA with up to a net 10-fold acceleration, achieving 2-5 times faster scans compared to conventional parallel imaging and acceleration, while maintaining diagnostic image quality and real-time reconstruction. The brain scan data-trained DL-Speed also performed well when reconstructing abdominal LAVA scan data, demonstrating the versatility of the network.
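As a rough illustration of what "retrospectively undersampled" means in the abstract above, the sketch below masks the k-space of a fully sampled image and forms the zero-filled reconstruction that a learned method would then improve on. It is not the DL-Speed sampling scheme: the random mask, the fully sampled center block, the single-coil 2D plane standing in for the two phase-encoding directions, and all names (`make_mask`, `retrospective_undersample`, `center_half_width`) are assumptions made for this example.

```python
import numpy as np

def make_mask(ny, nz, acceleration, center_half_width=4, seed=0):
    """Random sampling mask over two phase-encoding axes (hypothetical scheme)."""
    rng = np.random.default_rng(seed)
    # Keep each k-space location with probability 1 / acceleration.
    mask = rng.random((ny, nz)) < 1.0 / acceleration
    # Always keep a small block around the k-space center (low frequencies).
    cy, cz = ny // 2, nz // 2
    mask[cy - center_half_width:cy + center_half_width,
         cz - center_half_width:cz + center_half_width] = True
    return mask

def fft2c(image):
    """Centered, orthonormal 2D FFT: image -> k-space."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image), norm="ortho"))

def ifft2c(kspace):
    """Centered, orthonormal 2D inverse FFT: k-space -> image."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace), norm="ortho"))

def retrospective_undersample(image, mask):
    """Discard unsampled k-space points and return the zero-filled image."""
    undersampled = fft2c(image) * mask
    return undersampled, ifft2c(undersampled)

# Synthetic stand-in for a fully sampled slice (assumption: no real scan data).
image = np.random.default_rng(1).standard_normal((128, 128))
mask = make_mask(128, 128, acceleration=10)
kspace_us, zero_filled = retrospective_undersample(image, mask)

# Net acceleration is the ratio of all k-space points to sampled points;
# the fully sampled center makes it slightly lower than the nominal factor.
net_acceleration = mask.size / mask.sum()
```

Prospective undersampling differs in that the scanner never acquires the omitted points, so no fully sampled reference exists for comparison.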

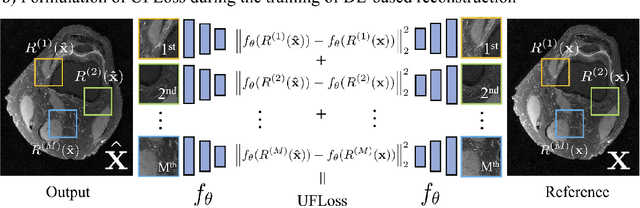

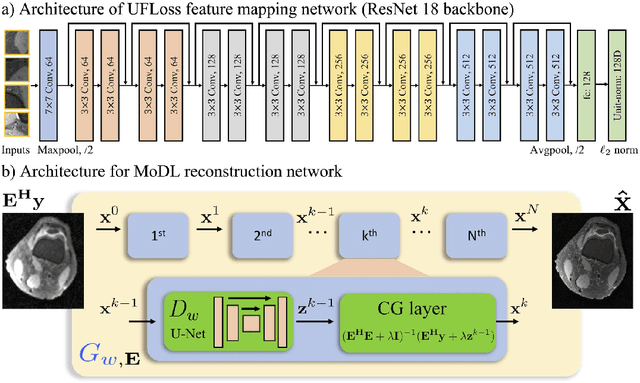

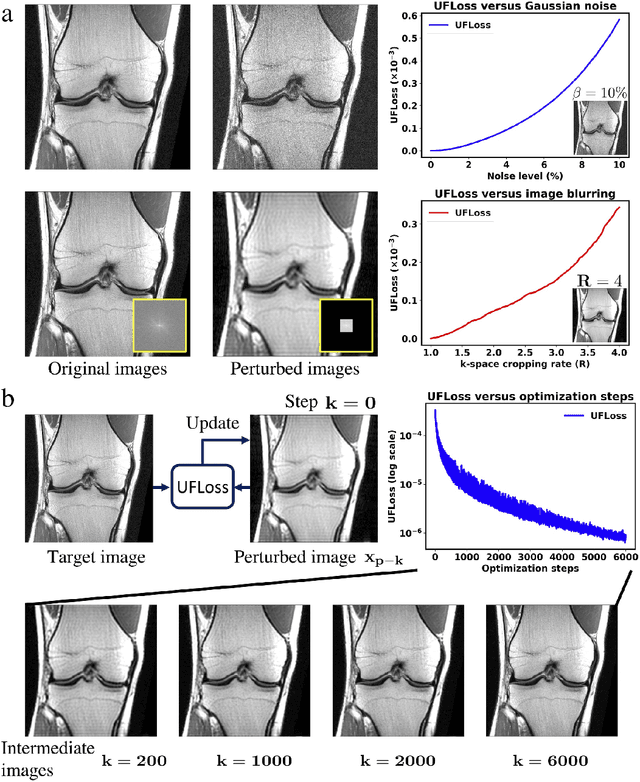

High Fidelity Deep Learning-based MRI Reconstruction with Instance-wise Discriminative Feature Matching Loss

Aug 27, 2021

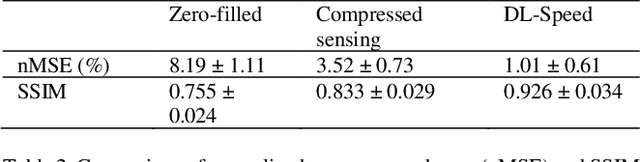

Abstract: Purpose: To improve reconstruction fidelity of fine structures and textures in deep learning (DL) based reconstructions. Methods: A novel patch-based Unsupervised Feature Loss (UFLoss) is proposed and incorporated into the training of DL-based reconstruction frameworks in order to preserve perceptual similarity and high-order statistics. The UFLoss provides instance-level discrimination by mapping similar instances to similar low-dimensional feature vectors and is trained without any human annotation. With an additional loss function on the low-dimensional feature space during training, reconstruction frameworks operating on under-sampled or corrupted data can reproduce more realistic images that are closer to the original, with finer textures, sharper edges, and improved overall image quality. The performance of the proposed UFLoss is demonstrated on unrolled networks for accelerated 2D and 3D knee MRI reconstruction with retrospective under-sampling. Quantitative metrics including NRMSE, SSIM, and our proposed UFLoss were used to evaluate the performance of the proposed method and compare it with others. Results: In-vivo experiments indicate that adding the UFLoss encourages sharper edges and more faithful contrasts compared to traditional and learning-based methods with pure l2 loss. More detailed textures can be seen in both 2D and 3D knee MR images. Quantitative results indicate that reconstruction with UFLoss can provide comparable NRMSE and a higher SSIM while achieving a much lower UFLoss value. Conclusion: We present UFLoss, a patch-based unsupervised learned feature loss, which allows DL-based reconstruction frameworks to be trained to obtain more detailed texture, finer features, and sharper edges with higher overall image quality.
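The idea of adding a patch-wise feature-space term to a pixel-wise l2 loss can be sketched as follows. This is only an illustration under stated assumptions: the UFLoss feature network is learned without annotation, whereas here a fixed random linear projection (`projection`) stands in for it, and the names `extract_patches`, `feature_loss`, `total_loss`, and the `weight` parameter are hypothetical. NRMSE is computed with one common definition (error norm divided by reference norm), which may differ from the normalization used in the paper.

```python
import numpy as np

def nrmse(recon, reference):
    """Normalized root-mean-square error: ||recon - ref|| / ||ref||."""
    return np.linalg.norm(recon - reference) / np.linalg.norm(reference)

def extract_patches(image, patch=8, stride=8):
    """Cut a 2D image into flattened square patches, one per row."""
    h, w = image.shape
    patches = [image[i:i + patch, j:j + patch].ravel()
               for i in range(0, h - patch + 1, stride)
               for j in range(0, w - patch + 1, stride)]
    return np.stack(patches)

def feature_loss(recon, reference, projection, patch=8):
    """Mean squared distance between patch features of the two images.

    `projection` is a stand-in for a learned feature network (assumption).
    """
    f_rec = extract_patches(recon, patch, patch) @ projection
    f_ref = extract_patches(reference, patch, patch) @ projection
    return np.mean(np.sum((f_rec - f_ref) ** 2, axis=1))

def total_loss(recon, reference, projection, weight=1.0, patch=8):
    """Pixel-wise l2 term plus a weighted feature-space term."""
    l2 = np.mean((recon - reference) ** 2)
    return l2 + weight * feature_loss(recon, reference, projection, patch)

# Synthetic example data (assumption: real-valued 32x32 images).
rng = np.random.default_rng(0)
reference = rng.standard_normal((32, 32))
recon = reference + 0.1 * rng.standard_normal((32, 32))
projection = rng.standard_normal((64, 16))  # 8x8 patch -> 16-dim feature

loss_value = total_loss(recon, reference, projection, weight=0.5)
error_value = nrmse(recon, reference)
```

In an actual training loop the same structure would be expressed in an autodiff framework so that gradients flow through both terms into the reconstruction network.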

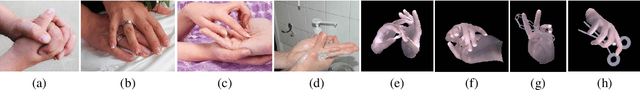

Parallel mesh reconstruction streams for pose estimation of interacting hands

Apr 25, 2021

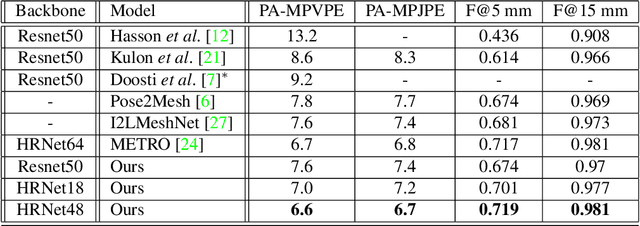

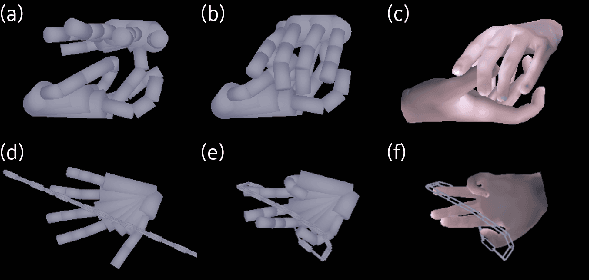

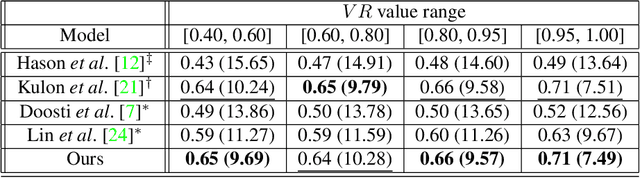

Abstract: We present a new multi-stream 3D mesh reconstruction network (MSMR-Net) for hand pose estimation from a single RGB image. Our model consists of an image encoder followed by a mesh-convolution decoder composed of connected graph convolution layers. In contrast to previous models that form a single mesh decoding path, our decoder network incorporates multiple cross-resolution trajectories that are executed in parallel. Thus, global and local information are shared to form rich decoding representations at minor additional parameter cost compared to the single trajectory network. We demonstrate the effectiveness of our method in hand-hand and hand-object interaction scenarios at various levels of interaction. To evaluate the former scenario, we propose a method to generate RGB images of closely interacting hands. Moreover, we suggest a metric to quantify the degree of interaction and show that close hand interactions are particularly challenging. Experimental results show that the MSMR-Net outperforms existing algorithms on the hand-object FreiHAND dataset as well as on our own hand-hand dataset.
