Salman Ul Hassan Dar

MedLoRD: A Medical Low-Resource Diffusion Model for High-Resolution 3D CT Image Synthesis

Mar 17, 2025

Abstract: Advancements in AI for medical imaging offer significant potential. However, their applications are constrained by the limited availability of data and the reluctance of medical centers to share it due to patient privacy concerns. Generative models present a promising solution by creating synthetic data as a substitute for real patient data. However, medical images are typically high-dimensional, and current state-of-the-art methods are often impractical for computational resource-constrained healthcare environments. These models rely on data sub-sampling, raising doubts about their feasibility and real-world applicability. Furthermore, many of these models are evaluated on quantitative metrics that alone can be misleading in assessing the image quality and clinical meaningfulness of the generated images. To address this, we introduce MedLoRD, a generative diffusion model designed for computational resource-constrained environments. MedLoRD is capable of generating high-dimensional medical volumes with resolutions up to 512$\times$512$\times$256, utilizing GPUs with only 24GB VRAM, which are commonly found in standard desktop workstations. MedLoRD is evaluated across multiple modalities, including Coronary Computed Tomography Angiography and Lung Computed Tomography datasets. Extensive evaluations through radiological evaluation, relative regional volume analysis, adherence to conditional masks, and downstream tasks show that MedLoRD generates high-fidelity images closely adhering to segmentation mask conditions, surpassing the capabilities of current state-of-the-art generative models for medical image synthesis in computational resource-constrained environments.

Latent Pollution Model: The Hidden Carbon Footprint in 3D Image Synthesis

Jul 20, 2024

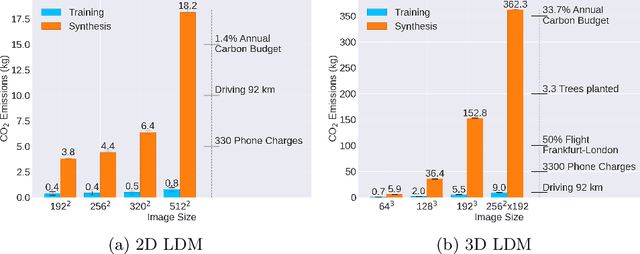

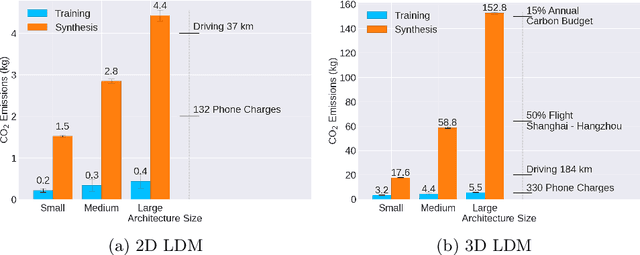

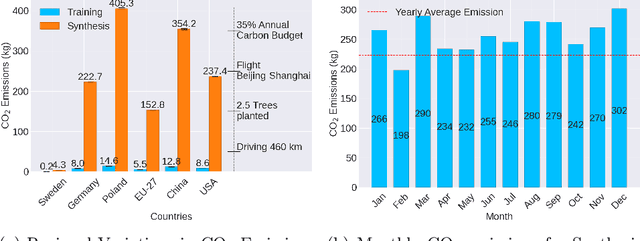

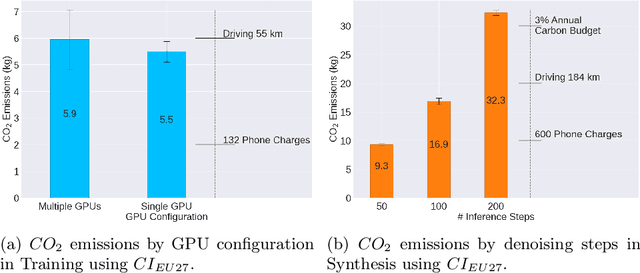

Abstract: Contemporary developments in generative AI are rapidly transforming the field of medical AI. These developments have been predominantly driven by the availability of large datasets and high computing power, which have facilitated a significant increase in model capacity. Despite their considerable potential, these models demand substantial power, leading to high carbon dioxide (CO2) emissions. Despite the harm such models cause to the environment, there has been little focus on their carbon footprints. This study analyzes carbon emissions from 2D and 3D latent diffusion models (LDMs) during training and data generation phases, revealing a surprising finding: the synthesis of large images contributes most significantly to these emissions. We assess different scenarios including model sizes, image dimensions, distributed training, and data generation steps. Our findings reveal substantial carbon emissions from these models, with training 2D and 3D models comparable to driving a car for 10 km and 90 km, respectively. The process of data generation is even more significant, with CO2 emissions equivalent to driving 160 km for 2D models and driving for up to 3345 km for 3D synthesis. Additionally, we found that the location of the experiment can increase carbon emissions by up to 94 times, and even the time of year can influence emissions by up to 50%. These figures are alarming, considering they represent only a single training and data generation phase for each model. Our results emphasize the urgent need for developing environmentally sustainable strategies in generative AI.

Unconditional Latent Diffusion Models Memorize Patient Imaging Data

Feb 01, 2024

Abstract: Generative latent diffusion models hold a wide range of applications in the medical imaging domain. A noteworthy application is privacy-preserved open-data sharing by proposing synthetic data as surrogates of real patient data. Despite the promise, these models are susceptible to patient data memorization, where models generate patient data copies instead of novel synthetic samples. This undermines the whole purpose of preserving patient privacy and may even result in patient re-identification. Despite the importance of the problem, it has received surprisingly little attention in the medical imaging community. To this end, we assess memorization in latent diffusion models for medical image synthesis. We train 2D and 3D latent diffusion models on CT, MRI, and X-ray datasets for synthetic data generation. Afterwards, we examine the amount of training data memorized utilizing self-supervised models and further investigate various factors that can possibly lead to memorization by training models in different settings. We observe a surprisingly large amount of data memorization among all datasets, with up to 41.7%, 19.6%, and 32.6% of the training data memorized in CT, MRI, and X-ray datasets respectively. Further analyses reveal that increasing training data size and using data augmentation reduce memorization, while over-training enhances it. Overall, our results suggest a call for memorization-informed evaluation of synthetic data prior to open-data sharing.

Investigating Data Memorization in 3D Latent Diffusion Models for Medical Image Synthesis

Jul 06, 2023

Abstract: Generative latent diffusion models have been established as state-of-the-art in data generation. One promising application is generation of realistic synthetic medical imaging data for open data sharing without compromising patient privacy. Despite the promise, the capacity of such models to memorize sensitive patient training data and synthesize samples showing high resemblance to training data samples is relatively unexplored. Here, we assess the memorization capacity of 3D latent diffusion models on photon-counting coronary computed tomography angiography and knee magnetic resonance imaging datasets. To detect potential memorization of training samples, we utilize self-supervised models based on contrastive learning. Our results suggest that such latent diffusion models indeed memorize training data, and there is a dire need for devising strategies to mitigate memorization.

BolT: Fused Window Transformers for fMRI Time Series Analysis

May 23, 2022

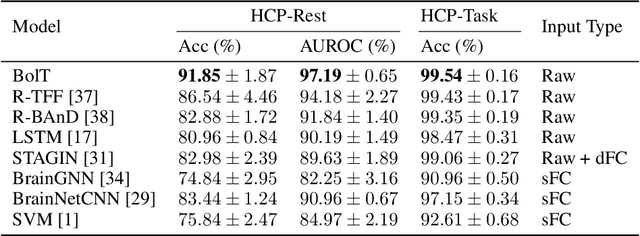

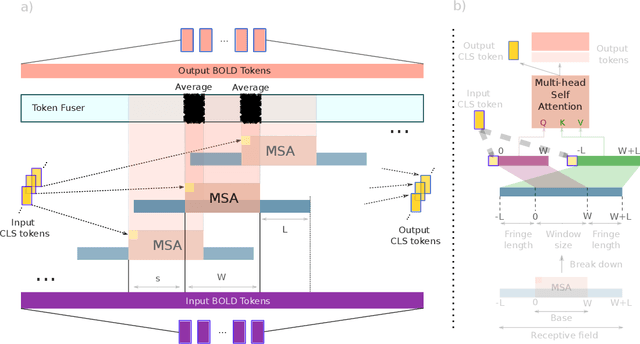

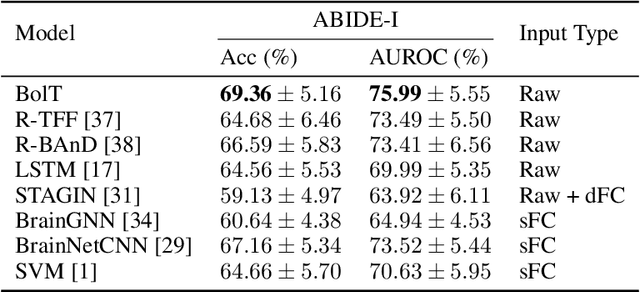

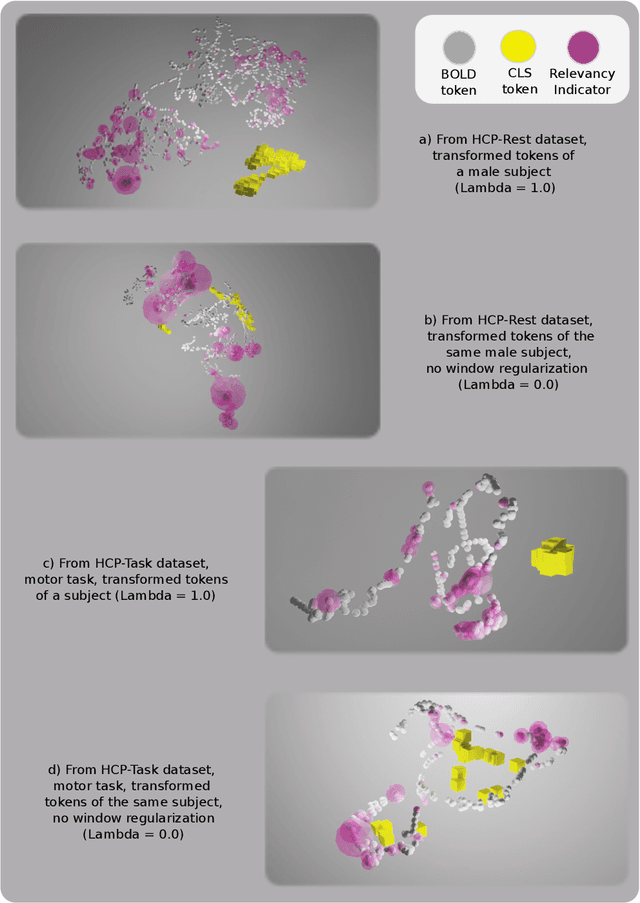

Abstract: Functional magnetic resonance imaging (fMRI) enables examination of inter-regional interactions in the brain via functional connectivity (FC) analyses that measure the synchrony between the temporal activations of separate regions. Given their exceptional sensitivity, deep-learning methods have received growing interest for FC analyses of high-dimensional fMRI data. In this domain, models that operate directly on raw time series as opposed to pre-computed FC features have the potential benefit of leveraging the full scale of information present in fMRI data. However, previous models are based on architectures suboptimal for temporal integration of representations across multiple time scales. Here, we present BolT, blood-oxygen-level-dependent transformer, for analyzing multi-variate fMRI time series. BolT leverages a cascade of transformer encoders equipped with a novel fused window attention mechanism. Transformer encoding is performed on temporally-overlapped time windows within the fMRI time series to capture short time-scale representations. To integrate information across windows, cross-window attention is computed between base tokens in each time window and fringe tokens from neighboring time windows. To transition from local to global representations, the extent of window overlap and thereby number of fringe tokens is progressively increased across the cascade. Finally, a novel cross-window regularization is enforced to align the high-level representations of global $CLS$ features across time windows. Comprehensive experiments on public fMRI datasets clearly illustrate the superior performance of BolT against state-of-the-art methods. Posthoc explanatory analyses to identify landmark time points and regions that contribute most significantly to model decisions corroborate prominent neuroscientific findings from recent fMRI studies.

A Few-Shot Learning Approach for Accelerated MRI via Fusion of Data-Driven and Subject-Driven Priors

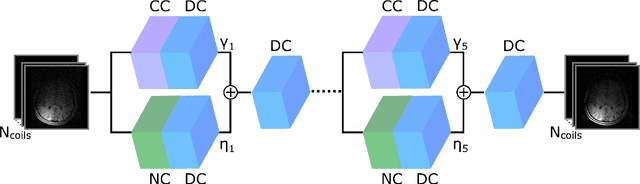

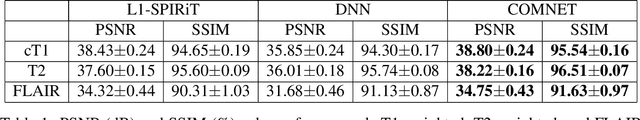

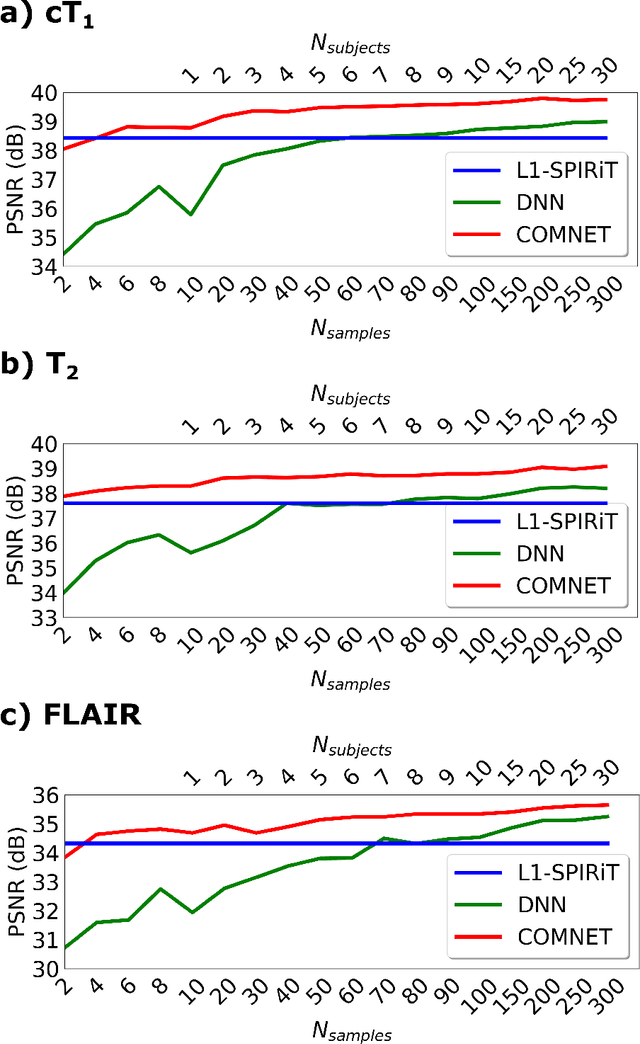

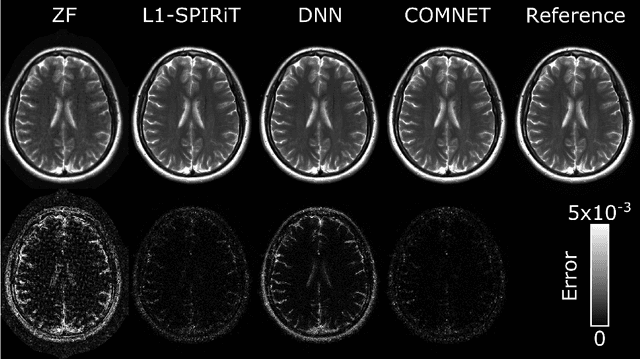

Mar 13, 2021

Abstract: Deep neural networks (DNNs) have recently found emerging use in accelerated MRI reconstruction. DNNs typically learn data-driven priors from large datasets constituting pairs of undersampled and fully-sampled acquisitions. Acquiring such large datasets, however, might be impractical. To mitigate this limitation, we propose a few-shot learning approach for accelerated MRI that merges subject-driven priors obtained via physical signal models with data-driven priors obtained from a few training samples. Demonstrations on brain MR images from the NYU fastMRI dataset indicate that the proposed approach requires just a few samples to outperform traditional parallel imaging and DNN algorithms.

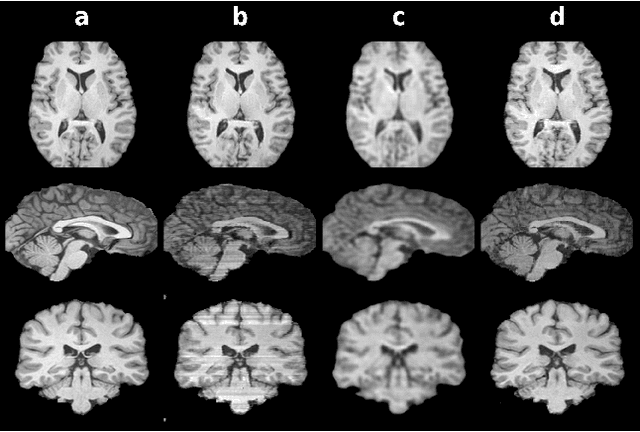

Three Dimensional MR Image Synthesis with Progressive Generative Adversarial Networks

Dec 18, 2020

Abstract: Mainstream deep models for three-dimensional MRI synthesis are either cross-sectional or volumetric depending on the input. Cross-sectional models can decrease the model complexity, but they may lead to discontinuity artifacts. On the other hand, volumetric models can alleviate the discontinuity artifacts, but they might suffer from loss of spatial resolution due to increased model complexity coupled with scarce training data. To mitigate the limitations of both approaches, we propose a novel model that progressively recovers the target volume via simpler synthesis tasks across individual orientations.

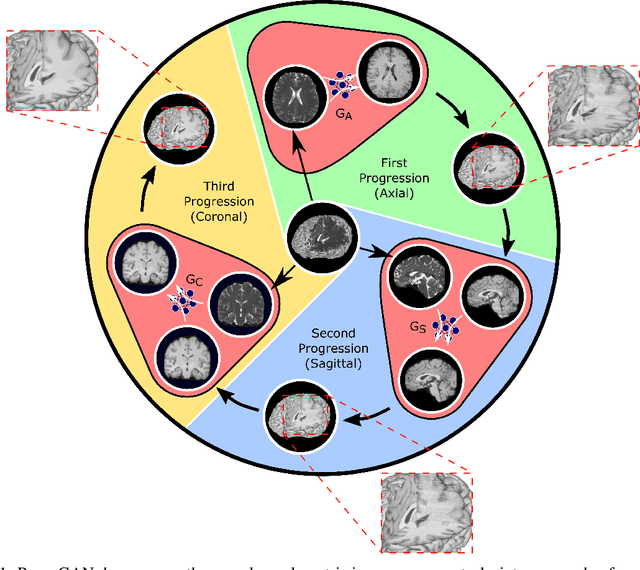

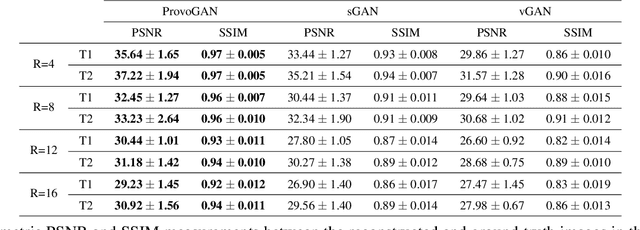

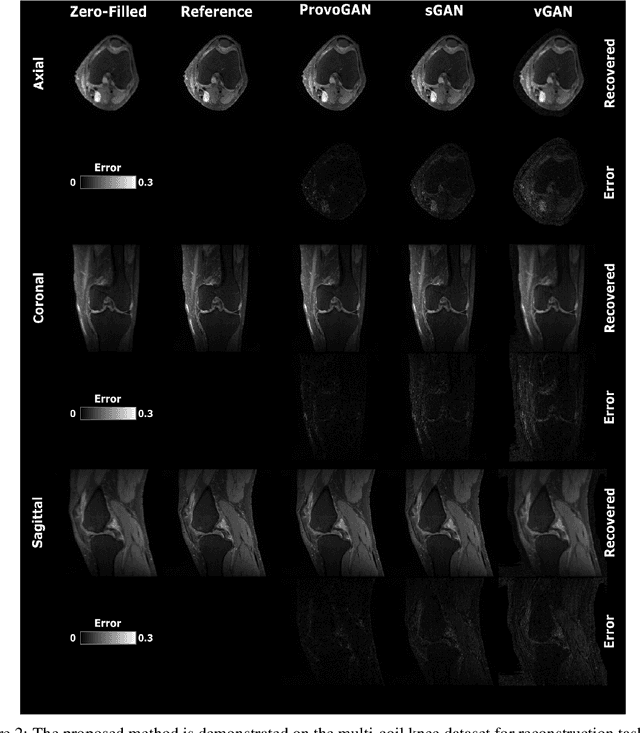

Progressively Volumetrized Deep Generative Models for Data-Efficient Contextual Learning of MR Image Recovery

Dec 03, 2020

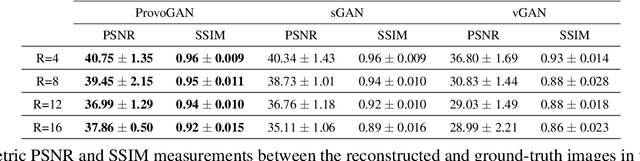

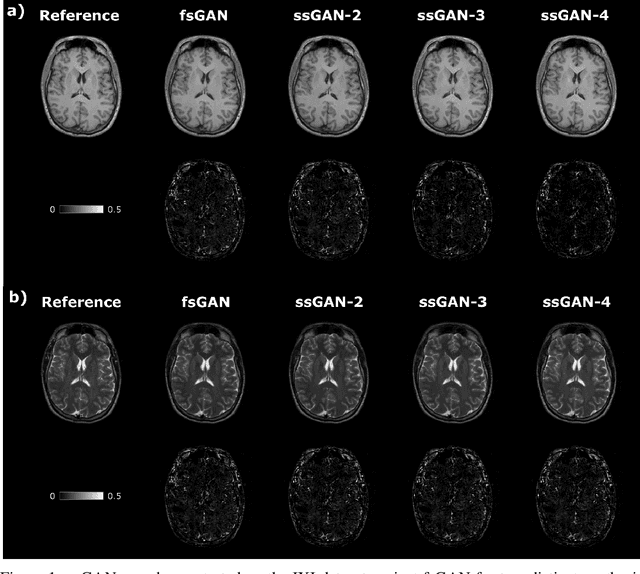

Abstract: Magnetic resonance imaging (MRI) offers the flexibility to image a given anatomic volume under a multitude of tissue contrasts. Yet, scan time considerations put stringent limits on the quality and diversity of MRI data. The gold-standard approach to alleviate this limitation is to recover high-quality images from data undersampled across various dimensions such as the Fourier domain or contrast sets. A central divide among recovery methods is whether the anatomy is processed per volume or per cross-section. Volumetric models offer enhanced capture of global contextual information, but they can suffer from suboptimal learning due to elevated model complexity. Cross-sectional models with lower complexity offer improved learning behavior, yet they ignore contextual information across the longitudinal dimension of the volume. Here, we introduce a novel data-efficient progressively volumetrized generative model (ProvoGAN) that decomposes complex volumetric image recovery tasks into a series of simpler cross-sectional tasks across individual rectilinear dimensions. ProvoGAN effectively captures global context and recovers fine-structural details across all dimensions, while maintaining low model complexity and data-efficiency advantages of cross-sectional models. Comprehensive demonstrations on mainstream MRI reconstruction and synthesis tasks show that ProvoGAN yields superior performance to state-of-the-art volumetric and cross-sectional models.

Semi-Supervised Learning of Mutually Accelerated Multi-Contrast MRI Synthesis without Fully-Sampled Ground-Truths

Nov 29, 2020

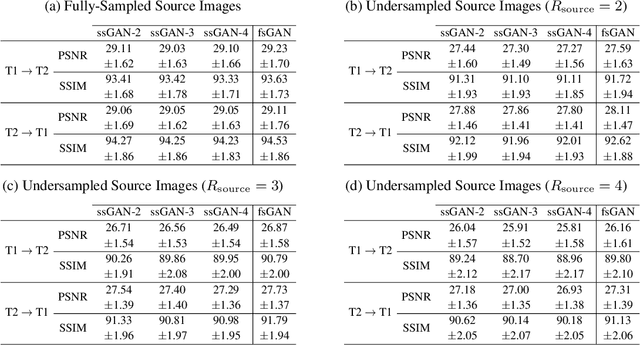

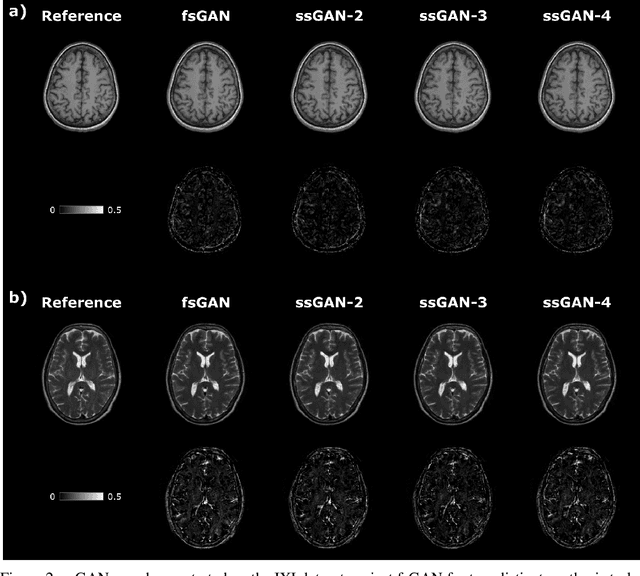

Abstract: This study proposes a novel semi-supervised learning framework for mutually accelerated multi-contrast MRI synthesis that recovers high-quality images without demanding large training sets of costly fully-sampled source or ground-truth target images. The proposed method presents a selective loss function expressed only on a subset of the acquired k-space coefficients and further leverages randomized sampling patterns across training subjects to effectively learn relationships among acquired and nonacquired k-space coefficients at all locations. Comprehensive experiments performed on multi-contrast brain images clearly demonstrate that the proposed method maintains equivalent performance to the gold-standard method based on fully-supervised training while alleviating undesirable reliance of the current synthesis methods on large-scale fully-sampled MRI acquisitions.

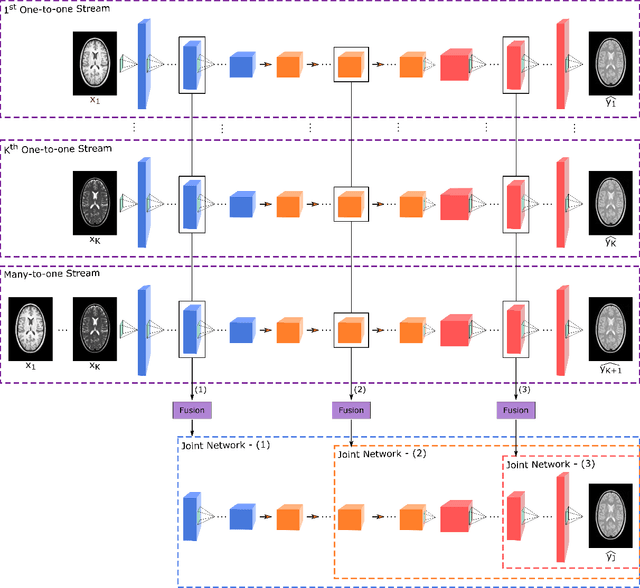

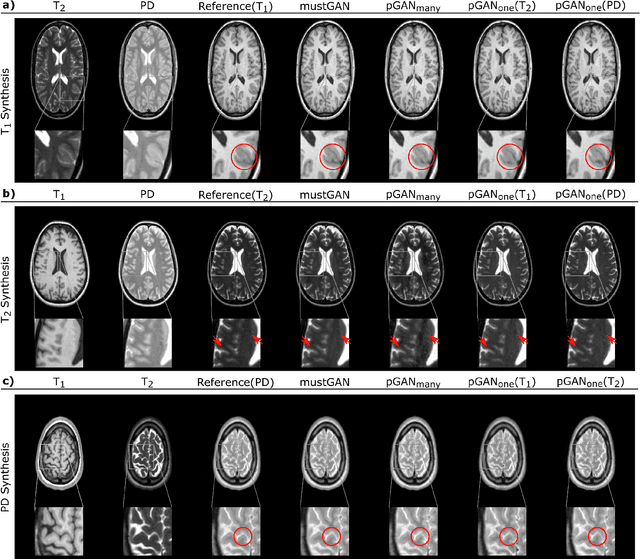

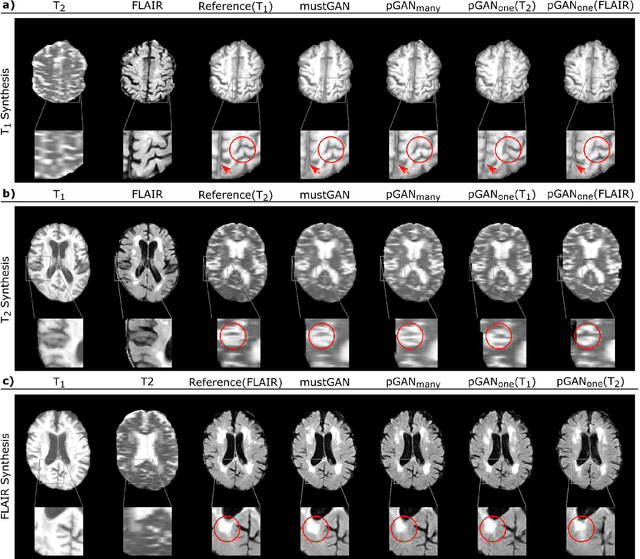

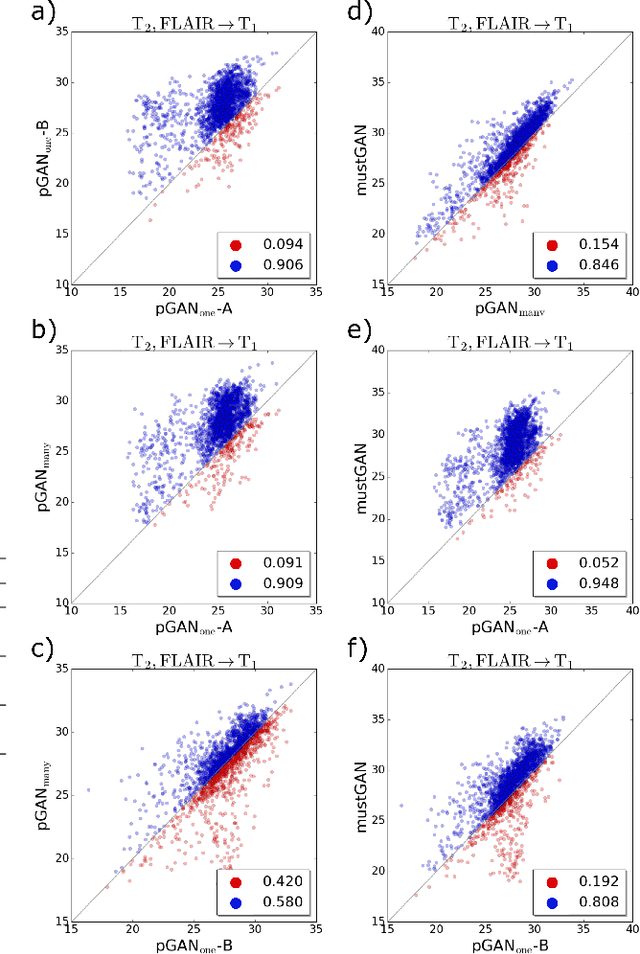

mustGAN: Multi-Stream Generative Adversarial Networks for MR Image Synthesis

Sep 25, 2019

Abstract: Multi-contrast MRI protocols increase the level of morphological information available for diagnosis. Yet, the number and quality of contrasts are limited in practice by various factors including scan time and patient motion. Synthesis of missing or corrupted contrasts can alleviate this limitation to improve clinical utility. Common approaches for multi-contrast MRI involve either one-to-one or many-to-one synthesis methods. One-to-one methods take as input a single source contrast, and they learn a latent representation sensitive to unique features of the source. Meanwhile, many-to-one methods receive multiple distinct sources, and they learn a shared latent representation more sensitive to common features across sources. For enhanced image synthesis, here we propose a multi-stream approach that aggregates information across multiple source images via a mixture of multiple one-to-one streams and a joint many-to-one stream. The shared feature maps generated in the many-to-one stream and the complementary feature maps generated in the one-to-one streams are combined with a fusion block. The location of the fusion block is adaptively modified to maximize task-specific performance. Qualitative and quantitative assessments on T1-, T2-, PD-weighted and FLAIR images clearly demonstrate the superior performance of the proposed method compared to previous state-of-the-art one-to-one and many-to-one methods.
