Sahar Ghannay

LISN

A dual task learning approach to fine-tune a multilingual semantic speech encoder for Spoken Language Understanding

Jun 17, 2024

Abstract: Self-supervised learning (SSL) is widely used to efficiently represent speech for Spoken Language Understanding (SLU), gradually replacing conventional approaches. Meanwhile, textual SSL models have been proposed to encode language-agnostic semantics. The SAMU-XLSR framework employed this semantic information to enrich multilingual speech representations. A recent study investigated SAMU-XLSR in-domain semantic enrichment by specializing it on downstream transcriptions, leading to state-of-the-art results on a challenging SLU task. This study focuses on the loss of multilingual performance and the lack of specific-semantics training induced by such specialization in close languages without any SLU implication. We also consider SAMU-XLSR's loss of initial cross-lingual abilities due to a separate SLU fine-tuning. Therefore, this paper proposes a dual task learning approach to improve SAMU-XLSR semantic enrichment while considering distant languages for multilingual and language portability experiments.

Small Language Models are Good Too: An Empirical Study of Zero-Shot Classification

Apr 17, 2024

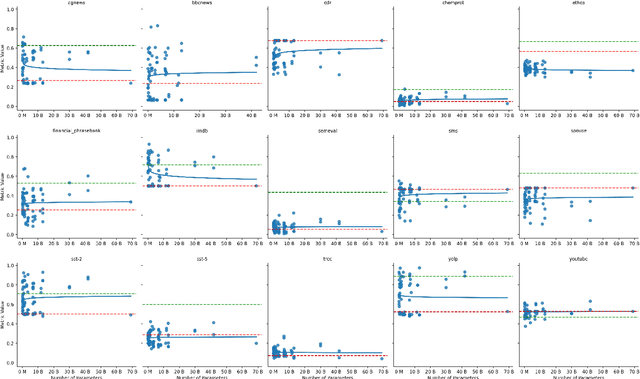

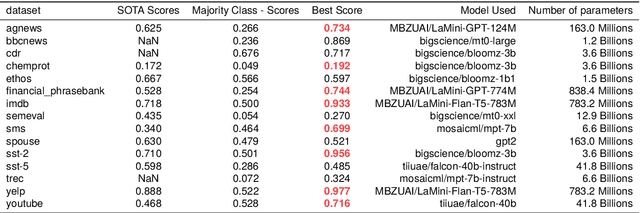

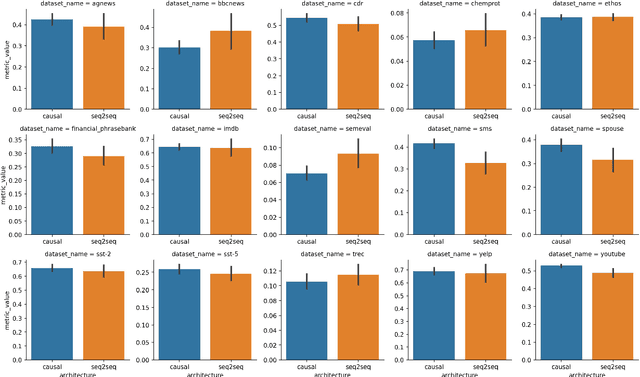

Abstract: This study is part of the debate on the efficiency of large versus small language models for text classification by prompting. We assess the performance of small language models in zero-shot text classification, challenging the prevailing dominance of large models. Across 15 datasets, our investigation benchmarks language models from 77M to 40B parameters using different architectures and scoring functions. Our findings reveal that small models can effectively classify texts, getting on par with or surpassing their larger counterparts. We developed and shared a comprehensive open-source repository that encapsulates our methodologies. This research underscores the notion that bigger isn't always better, suggesting that resource-efficient small models may offer viable solutions for specific data classification challenges.

New Semantic Task for the French Spoken Language Understanding MEDIA Benchmark

Mar 28, 2024

Abstract: Intent classification and slot-filling are essential tasks of Spoken Language Understanding (SLU). In most SLU systems, those tasks are realized by independent modules. For about fifteen years, models achieving both of them jointly and exploiting their mutual enhancement have been proposed. A multilingual module using a joint model was envisioned to create a touristic dialogue system for a European project, HumanE-AI-Net. A combination of multiple datasets, including the MEDIA dataset, was suggested for training this joint model. The MEDIA SLU dataset is a French dataset distributed since 2005 by ELRA, mainly used by the French research community and free for academic research since 2020. Unfortunately, it is annotated only with slots, not intents. An enhanced version of MEDIA annotated with intents has been built to extend its use to more tasks and use cases. This paper presents the semi-automatic methodology used to obtain this enhanced version. In addition, we present the first results of SLU experiments on this enhanced dataset using joint models for intent classification and slot-filling.

mALBERT: Is a Compact Multilingual BERT Model Still Worth It?

Mar 27, 2024

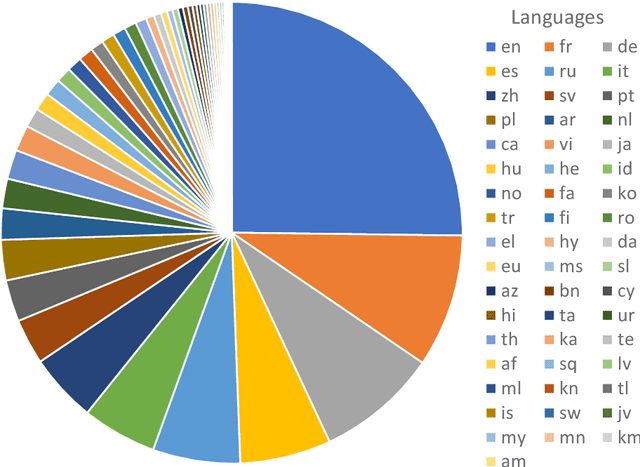

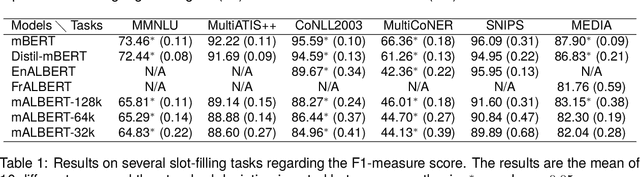

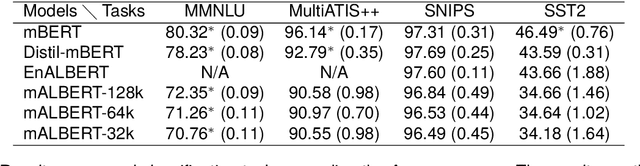

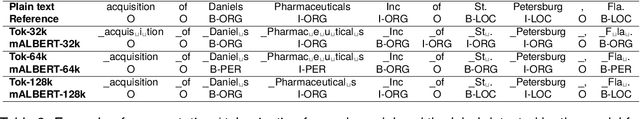

Abstract: Within the current trend of Pretrained Language Models (PLMs), more and more criticism is emerging about the ethical and ecological impact of such models. In this article, considering these critical remarks, we propose to focus on smaller models, such as compact models like ALBERT, which are more ecologically virtuous than these PLMs. However, PLMs enable huge breakthroughs in Natural Language Processing tasks, such as Spoken and Natural Language Understanding, classification, and Question-Answering tasks. PLMs also have the advantage of being multilingual, and, as far as we know, a multilingual version of compact ALBERT models does not exist. Considering these facts, we propose the free release of the first version of a multilingual compact ALBERT model, pre-trained using Wikipedia data, which complies with the ethical aspect of such a language model. We also evaluate the model against classical multilingual PLMs in classical NLP tasks. Finally, this paper proposes a rare study on the impact of subword tokenization on language performance.

Semantic enrichment towards efficient speech representations

Jul 03, 2023

Abstract: Over the past few years, self-supervised learned speech representations have emerged as fruitful replacements for conventional surface representations when solving Spoken Language Understanding (SLU) tasks. Simultaneously, multilingual models trained on massive textual data were introduced to encode language-agnostic semantics. Recently, the SAMU-XLSR approach introduced a way to take advantage of such textual models to enrich multilingual speech representations with language-agnostic semantics. Aiming for better semantic extraction on a challenging Spoken Language Understanding task while taking computation costs into account, this study investigates a specific in-domain semantic enrichment of the SAMU-XLSR model by specializing it on a small amount of transcribed data from the downstream task. In addition, we show the benefits of using same-domain French and Italian benchmarks for low-resource language portability and explore the cross-domain capacities of the enriched SAMU-XLSR.

Benchmarking Transformers-based models on French Spoken Language Understanding tasks

Jul 19, 2022

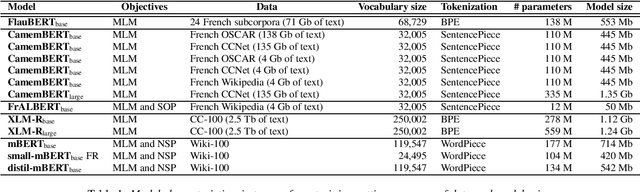

Abstract: In the last five years, the rise of self-attentional Transformer-based architectures has led to state-of-the-art performance on many natural language tasks. Although these approaches are increasingly popular, they require large amounts of data and computational resources. There is still a substantial need for benchmarking methodologies on under-resourced languages in data-scarce application conditions. Most pre-trained language models have been studied extensively on English, and only a few of them have been evaluated on French. In this paper, we propose a unified benchmark focused on evaluating model quality and ecological impact on two well-known French spoken language understanding tasks. In particular, we benchmark thirteen well-established Transformer-based models on the two available spoken language understanding tasks for French: MEDIA and ATIS-FR. Within this framework, we show that compact models can reach results comparable to bigger ones while their ecological impact is considerably lower. However, this finding is nuanced and depends on the compression method considered.

Overlap-aware low-latency online speaker diarization based on end-to-end local segmentation

Sep 14, 2021

Abstract: We propose to address online speaker diarization as a combination of incremental clustering and local diarization applied to a rolling buffer updated every 500 ms. Every single step of the proposed pipeline is designed to take full advantage of the strong ability of a recently proposed end-to-end overlap-aware segmentation to detect and separate overlapping speakers. In particular, we propose a modified version of the statistics pooling layer (initially introduced in the x-vector architecture) to give less weight to frames where the segmentation model predicts simultaneous speakers. Furthermore, we derive cannot-link constraints from the initial segmentation step to prevent two local speakers from being wrongfully merged during the incremental clustering step. Finally, we show how the latency of the proposed approach can be adjusted between 500 ms and 5 s to match the requirements of a particular use case, and we provide a systematic analysis of the influence of latency on the overall performance (on AMI, DIHARD and VoxConverse).
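
The idea of giving less weight to overlapped frames during statistics pooling can be illustrated with a minimal sketch. The abstract does not specify the weighting scheme, so everything here is an assumption for illustration: the function name `weighted_stats_pooling`, the plain-Python data layout, and the convention that a frame's weight shrinks toward zero where simultaneous speakers are predicted.

```python
import math


def weighted_stats_pooling(frames, weights, eps=1e-8):
    """Pool T frame-level vectors into one vector of weighted mean and std.

    frames:  list of T feature vectors, each a list of D floats.
    weights: list of T non-negative floats (hypothetical convention:
             lower values for frames with predicted overlapping speech).
    Returns a list of length 2 * D: the weighted means followed by the
    weighted standard deviations.
    """
    total = sum(weights) + eps  # eps avoids division by zero
    dim = len(frames[0])
    mean = [
        sum(w * f[d] for f, w in zip(frames, weights)) / total
        for d in range(dim)
    ]
    var = [
        sum(w * (f[d] - mean[d]) ** 2 for f, w in zip(frames, weights)) / total
        for d in range(dim)
    ]
    std = [math.sqrt(v + eps) for v in var]
    return mean + std


# Example: the third frame is treated as fully overlapped (weight 0),
# so it does not influence the pooled statistics.
pooled = weighted_stats_pooling([[1.0], [3.0], [100.0]], [1.0, 1.0, 0.0])
```

With uniform weights this reduces to ordinary statistics pooling (mean and standard deviation over time), which is why the weights act as a soft mask over the frames.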

Where are we in semantic concept extraction for Spoken Language Understanding?

Jun 24, 2021

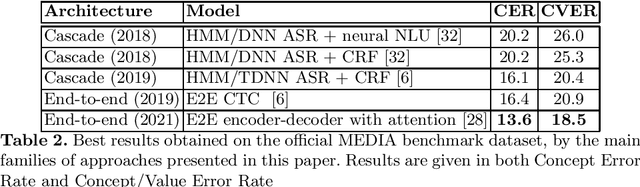

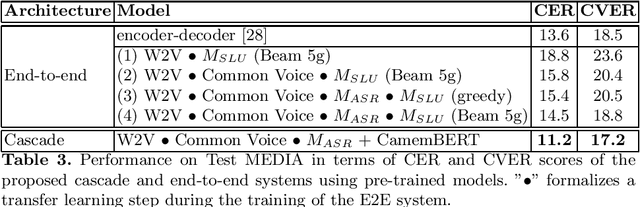

Abstract: Spoken language understanding (SLU) has seen a lot of progress over the last three years, with the emergence of end-to-end neural approaches. Spoken language understanding refers to natural language processing tasks related to semantic extraction from the speech signal, such as named entity recognition from speech or slot filling in the context of human-machine dialogue. Classically, SLU tasks were processed through a cascade approach that consists of first applying an automatic speech recognition process, followed by a natural language processing module applied to the automatic transcriptions. Over the last three years, end-to-end neural approaches based on deep neural networks have been proposed to extract the semantics directly from the speech signal using a single neural model. More recent work on self-supervised training with unlabeled data opens new perspectives in terms of performance for automatic speech recognition and natural language processing. In this paper, we present a brief overview of the recent advances on the French MEDIA benchmark dataset for SLU, with or without the use of additional data. We also present our latest results, which significantly outperform the current state of the art with a Concept Error Rate (CER) of 11.2%, instead of 13.6% for the last state-of-the-art system presented this year.

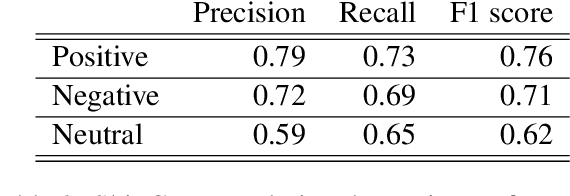

LIMSI_UPV at SemEval-2020 Task 9: Recurrent Convolutional Neural Network for Code-mixed Sentiment Analysis

Aug 30, 2020

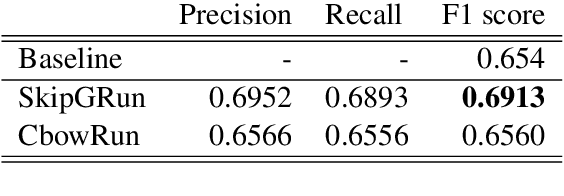

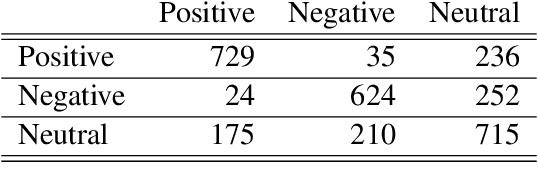

Abstract: This paper describes the participation of the LIMSI_UPV team in SemEval-2020 Task 9: Sentiment Analysis for Code-Mixed Social Media Text. The proposed approach competed in the SentiMix Hindi-English subtask, which addresses the problem of predicting the sentiment of a given Hindi-English code-mixed tweet. We propose a Recurrent Convolutional Neural Network that combines a recurrent neural network and a convolutional network to better capture the semantics of the text for code-mixed sentiment analysis. The proposed system obtained an F1 score of 0.69 (best run) on the given test data and achieved 9th place (Codalab username: somban) in the SentiMix Hindi-English subtask.

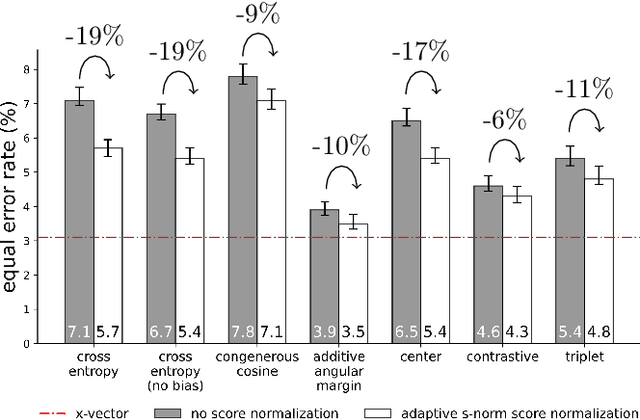

A Comparison of Metric Learning Loss Functions for End-To-End Speaker Verification

Mar 31, 2020

Abstract: Despite the growing popularity of metric learning approaches, very little work has attempted to perform a fair comparison of these techniques for speaker verification. We try to fill this gap and compare several metric learning loss functions in a systematic manner on the VoxCeleb dataset. The first family of loss functions is derived from the cross entropy loss (usually used for supervised classification) and includes the congenerous cosine loss, the additive angular margin loss, and the center loss. The second family of loss functions focuses on the similarity between training samples and includes the contrastive loss and the triplet loss. We show that the additive angular margin loss function outperforms all other loss functions in the study, while learning more robust representations. Based on a combination of SincNet trainable features and the x-vector architecture, the network used in this paper brings us a step closer to a truly end-to-end speaker verification system, when combined with the additive angular margin loss, while still being competitive with the x-vector baseline. In the spirit of reproducible research, we also release open-source Python code for reproducing our results, and share pretrained PyTorch models on torch.hub that can be used either directly or after fine-tuning.
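
As a rough illustration of the additive angular margin loss named in the abstract above, the sketch below computes it for a single embedding in plain Python. It follows the commonly used formulation (cosine similarity to each class vector, a margin added to the target-class angle, then a scaled softmax cross entropy). The function name `aam_loss`, the default `scale` and `margin` values, and the pure-Python layout are assumptions for illustration and are not taken from the paper or its released code.

```python
import math


def _normalize(vec):
    """Return vec scaled to unit L2 norm (unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def aam_loss(embedding, class_weights, target, scale=30.0, margin=0.2):
    """Additive angular margin loss for one sample.

    embedding:     list of D floats (the speaker embedding).
    class_weights: list of C vectors of D floats (one per training speaker).
    target:        index of the true class.
    scale, margin: hypothetical default hyperparameters.
    Simplification: no special handling when angle + margin exceeds pi.
    """
    emb = _normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos = sum(a * b for a, b in zip(emb, _normalize(w)))
        cos = max(-1.0, min(1.0, cos))  # guard acos against rounding
        if j == target:
            # Penalize the target class by widening its angle by the margin.
            cos = math.cos(math.acos(cos) + margin)
        logits.append(scale * cos)
    # Numerically stable cross entropy: log-sum-exp minus the target logit.
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(l - top) for l in logits))
    return log_sum_exp - logits[target]


# Example: the margin makes the same prediction cost more than plain
# cosine softmax, which pushes embeddings closer to their class vector.
weights = [[1.0, 0.0], [0.0, 1.0]]
plain = aam_loss([1.0, 1.0], weights, target=0, scale=10.0, margin=0.0)
with_margin = aam_loss([1.0, 1.0], weights, target=0, scale=10.0, margin=0.2)
```

Setting `margin=0.0` recovers a scaled cosine softmax, which makes the effect of the margin easy to inspect in isolation.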
