Sadegh Khorasani

Efficiently Escaping Saddle Points for Non-Convex Policy Optimization

Nov 15, 2023

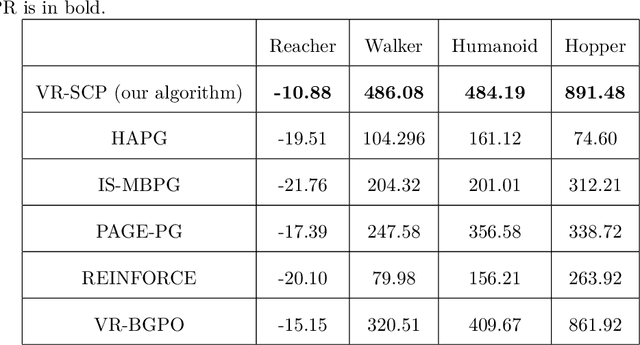

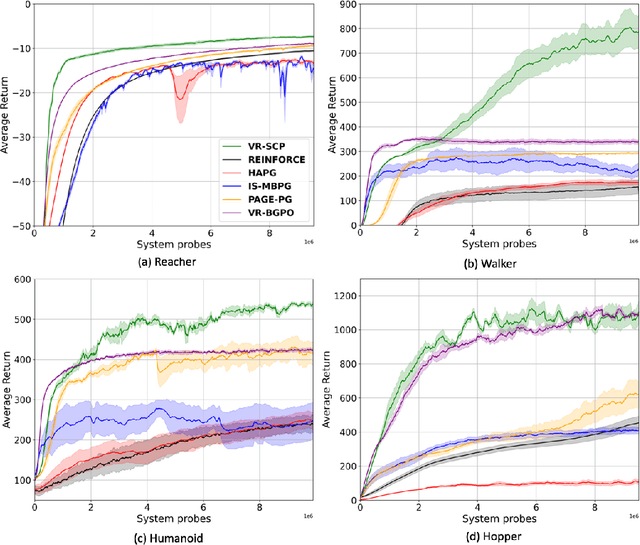

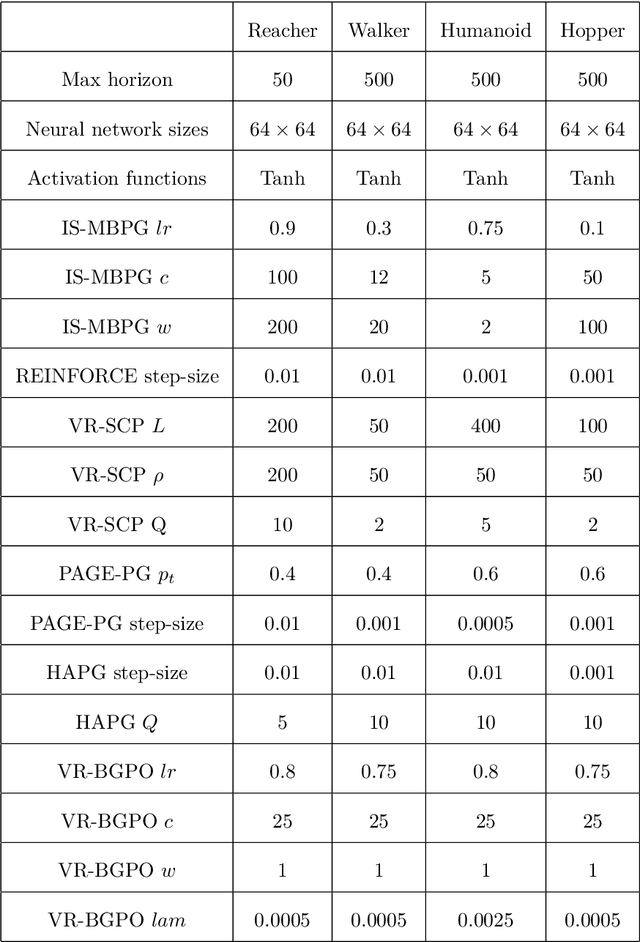

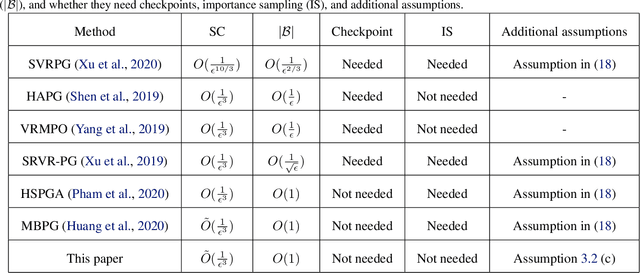

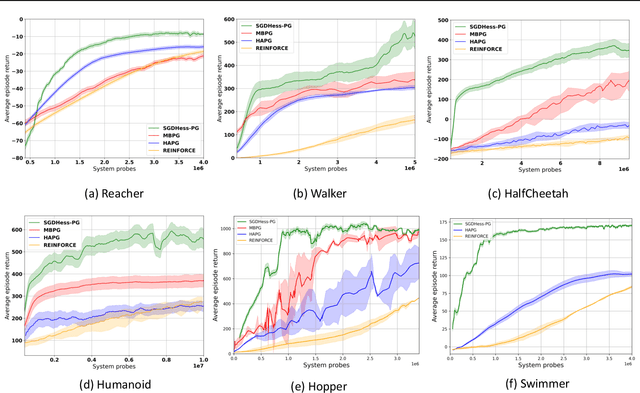

Abstract: Policy gradient (PG) is widely used in reinforcement learning due to its scalability and good performance. In recent years, several variance-reduced PG methods have been proposed with a theoretical guarantee of converging to an approximate first-order stationary point (FOSP) with a sample complexity of $O(\epsilon^{-3})$. However, FOSPs could be bad local optima or saddle points. Moreover, these algorithms often use importance sampling (IS) weights, which could impair the statistical effectiveness of variance reduction. In this paper, we propose a variance-reduced second-order method that uses second-order information in the form of Hessian-vector products (HVP) and converges to an approximate second-order stationary point (SOSP) with a sample complexity of $\tilde{O}(\epsilon^{-3})$. This rate improves the best-known sample complexity for achieving approximate SOSPs by a factor of $O(\epsilon^{-0.5})$. Moreover, the proposed variance reduction technique bypasses IS weights by using HVP terms. Our experimental results show that the proposed algorithm outperforms the state of the art and is more robust to changes in random seeds.
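For readers unfamiliar with the two notions of stationarity, the commonly used definitions are sketched below. This is the standard textbook form for maximizing an objective $J(\theta)$, not a quotation from the paper: the symbol $\rho$ (a Lipschitz constant of the Hessian) and the threshold $\sqrt{\rho\epsilon}$ are assumptions, and the paper may use different constants.

$$
\text{approximate FOSP:}\quad \|\nabla J(\theta)\| \le \epsilon
$$

$$
\text{approximate SOSP:}\quad \|\nabla J(\theta)\| \le \epsilon
\quad\text{and}\quad
\lambda_{\max}\!\left(\nabla^2 J(\theta)\right) \le \sqrt{\rho\,\epsilon}
$$

A strict saddle point can satisfy the first condition while violating the second, which is why a guarantee of reaching an approximate SOSP is stronger.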

Adaptive Momentum-Based Policy Gradient with Second-Order Information

May 17, 2022

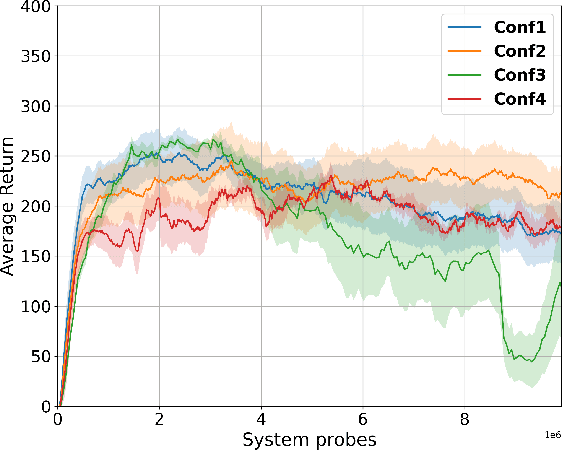

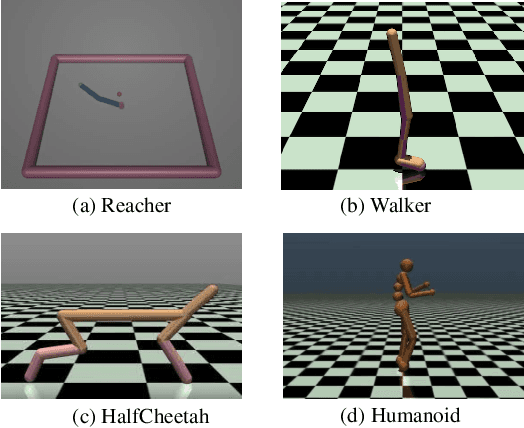

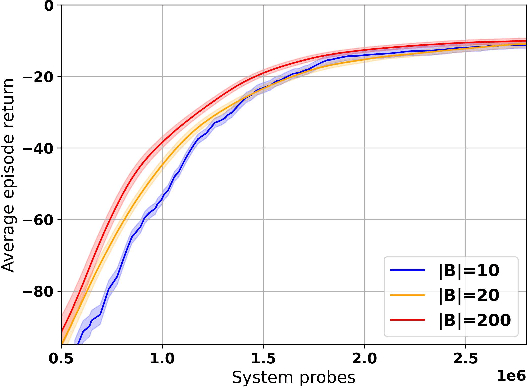

Abstract: Variance-reduced gradient estimators for policy gradient methods have been one of the main focuses of research in reinforcement learning in recent years, as they allow acceleration of the estimation process. We propose a variance-reduced policy gradient method, called SGDHess-PG, which incorporates second-order information into stochastic gradient descent (SGD) using momentum with an adaptive learning rate. The SGDHess-PG algorithm can reach an $\epsilon$-approximate first-order stationary point with $\tilde{O}(\epsilon^{-3})$ trajectories, while using a batch size of $O(1)$ at each iteration. Unlike most previous work, our proposed algorithm does not require importance sampling techniques, which can compromise the advantage of the variance reduction process. Our extensive experimental results show the effectiveness of the proposed algorithm on various control tasks and its advantage over the state of the art in practice.
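The general idea of replacing importance sampling with second-order information can be illustrated on a toy problem. The difference of two gradients equals the integral of Hessian-vector products along the segment joining the two parameter vectors, so it can be estimated by sampling points on that segment. The sketch below is not the SGDHess-PG algorithm: the objective $f(x) = \sum_i x_i^4 / 4$, its analytic gradient and Hessian, and all function names are hypothetical choices made only for illustration.

```python
import random


def grad(x):
    """Gradient of the toy objective f(x) = sum(x_i**4) / 4 (an assumption)."""
    return [xi ** 3 for xi in x]


def hvp(x, v):
    """Hessian-vector product; the toy Hessian is diag(3 * x_i**2)."""
    return [3.0 * xi ** 2 * vi for xi, vi in zip(x, v)]


def hvp_gradient_difference(x_prev, x_curr, num_samples, rng):
    """Monte Carlo estimate of grad(x_curr) - grad(x_prev).

    Uses the identity
        grad(x_curr) - grad(x_prev)
            = integral over a in [0, 1] of H(x_prev + a * d) @ d,
    with d = x_curr - x_prev, and samples a uniformly on [0, 1].
    """
    d = [c - p for p, c in zip(x_prev, x_curr)]
    total = [0.0] * len(d)
    for _ in range(num_samples):
        a = rng.random()
        point = [p + a * di for p, di in zip(x_prev, d)]
        for i, h in enumerate(hvp(point, d)):
            total[i] += h
    return [t / num_samples for t in total]


rng = random.Random(0)
x_prev, x_curr = [0.5, -1.0], [1.0, -0.5]
estimate = hvp_gradient_difference(x_prev, x_curr, 20000, rng)
exact = [c - p for p, c in zip(grad(x_prev), grad(x_curr))]
print("estimate:", estimate)
print("exact:   ", exact)
```

In a policy gradient setting, the gradient and the Hessian-vector product would themselves be stochastic estimates computed from sampled trajectories. Here they are exact, so that the only randomness comes from the sampled interpolation point.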

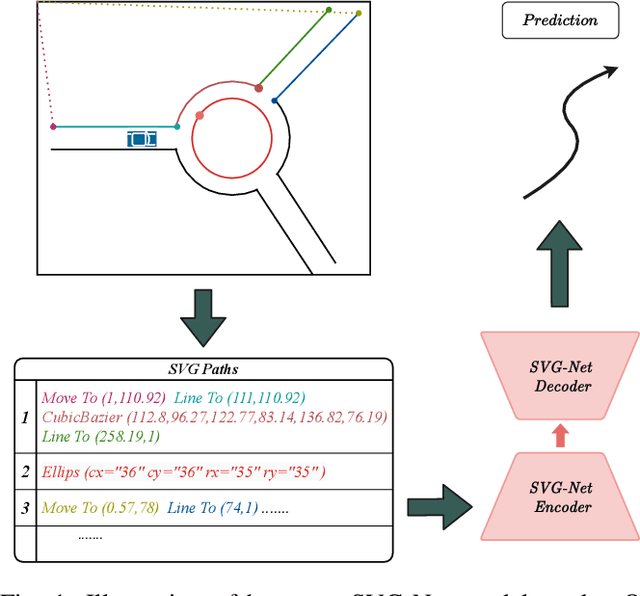

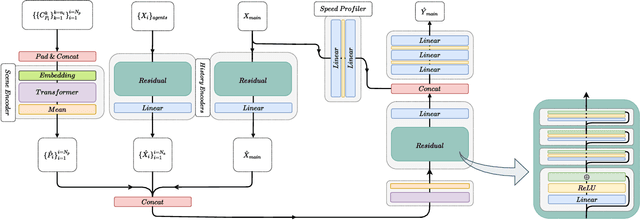

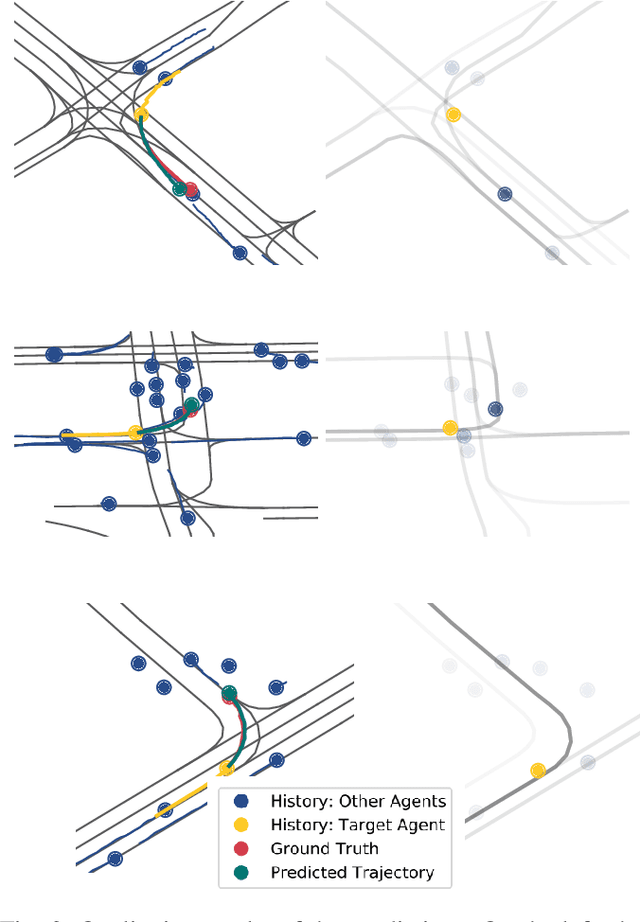

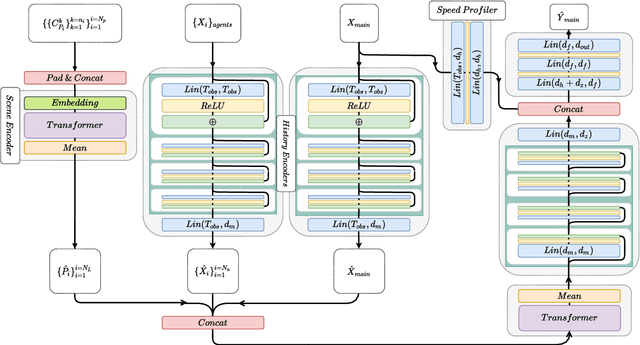

SVG-Net: An SVG-based Trajectory Prediction Model

Oct 11, 2021

Abstract: Anticipating motions of vehicles in a scene is an essential problem for safe autonomous driving systems. To this end, the comprehension of the scene's infrastructure is often the main clue for predicting future trajectories. Most of the proposed approaches represent the scene with a rasterized format and some of the more recent approaches leverage custom vectorized formats. In contrast, we propose representing the scene's information by employing Scalable Vector Graphics (SVG). SVG is a well-established format that matches the problem of trajectory prediction better than rasterized formats while being more general than arbitrary vectorized formats. SVG has the potential to provide the convenience and generality of raster-based solutions if coupled with a powerful tool such as CNNs, for which we introduce SVG-Net. SVG-Net is a Transformer-based Neural Network that can effectively capture the scene's information from SVG inputs. Thanks to the self-attention mechanism in its Transformers, SVG-Net can also adequately apprehend relations amongst the scene and the agents. We demonstrate SVG-Net's effectiveness by evaluating its performance on the publicly available Argoverse forecasting dataset. Finally, we illustrate how, by using SVG, one can benefit from datasets and advancements in other research fronts that also utilize the same input format. Our code is available at https://vita-epfl.github.io/SVGNet/.
