Sabbir Ahmed

Explainable AI and Machine Learning for Exam-based Student Evaluation: Causal and Predictive Analysis of Socio-academic and Economic Factors

Aug 01, 2025

Abstract: Academic performance depends on a multivariable nexus of socio-academic and financial factors. This study investigates these influences to develop effective strategies for optimizing students' CGPA. To achieve this, we reviewed the literature to identify key influencing factors and constructed an initial hypothetical causal graph based on the findings. Additionally, an online survey was conducted in which 1,050 students participated, providing comprehensive data for analysis. Rigorous data preprocessing techniques, including cleaning and visualization, ensured data quality before analysis. Causal analysis validated the relationships among variables, offering deeper insights into their direct and indirect effects on CGPA. Regression models were implemented for CGPA prediction, while classification models categorized students based on performance levels. Ridge Regression demonstrated strong predictive accuracy, achieving a Mean Absolute Error of 0.12 and a Mean Squared Error of 0.023. Random Forest performed best in classification, attaining a near-perfect F1-score and an accuracy of 98.68%. Explainable AI techniques such as SHAP, LIME, and Interpret enhanced model interpretability, highlighting critical factors such as study hours, scholarships, parental education, and prior academic performance. The study culminated in the development of a web-based application that provides students with personalized insights, allowing them to predict academic performance, identify areas for improvement, and make informed decisions to enhance their outcomes.
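For readers less familiar with the two regression metrics quoted in the abstract, the following is a minimal sketch of how Mean Absolute Error and Mean Squared Error are computed. The CGPA values are invented for illustration and are not taken from the study; the function and variable names are likewise assumptions.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average squared difference; penalizes large errors more heavily."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Illustrative values only (not data from the paper).
actual_cgpa = [3.20, 3.75, 2.90, 3.50]
predicted_cgpa = [3.10, 3.85, 3.00, 3.40]

mae = mean_absolute_error(actual_cgpa, predicted_cgpa)
mse = mean_squared_error(actual_cgpa, predicted_cgpa)
print(f"MAE={mae:.3f}  MSE={mse:.4f}")
```

Both metrics are on the scale of the target, so an MAE of 0.12 means predictions are off by about 0.12 grade points on average.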

VisionTrap: Unanswerable Questions On Visual Data

Jul 23, 2025

Abstract: Visual Question Answering (VQA) has been a widely studied topic, with extensive research focusing on how VLMs respond to answerable questions based on real-world images. However, there has been limited exploration of how these models handle unanswerable questions, particularly in cases where they should abstain from providing a response. This research investigates VQA performance on unrealistic generated images and on unanswerable questions, assessing whether models recognize the limitations of their knowledge or attempt to generate incorrect answers. We introduce a dataset, VisionTrap, comprising three categories of unanswerable questions across diverse image types: (1) hybrid entities that fuse objects and animals, (2) objects depicted in unconventional or impossible scenarios, and (3) fictional or non-existent figures. The questions posed are logically structured yet inherently unanswerable, testing whether models can correctly recognize their limitations. Our findings highlight the importance of incorporating such questions into VQA benchmarks to evaluate whether models tend to answer even when they should abstain.

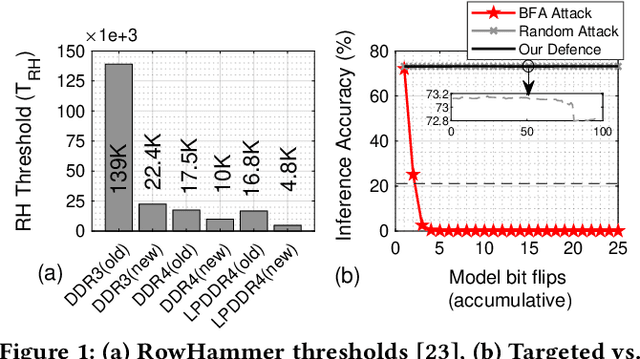

EIM-TRNG: Obfuscating Deep Neural Network Weights with Encoding-in-Memory True Random Number Generator via RowHammer

Jul 03, 2025

Abstract: True Random Number Generators (TRNGs) play a fundamental role in hardware security, cryptographic systems, and data protection. In the context of Deep Neural Networks (DNNs), safeguarding model parameters, particularly weights, is critical to ensure the integrity, privacy, and intellectual property of AI systems. While software-based pseudo-random number generators are widely used, they lack the unpredictability and resilience offered by hardware-based TRNGs. In this work, we propose a novel and robust Encoding-in-Memory TRNG called EIM-TRNG that, for the first time, leverages the inherent physical randomness in DRAM cell behavior, particularly under RowHammer-induced disturbances. We demonstrate how the unpredictable bit-flips generated through carefully controlled RowHammer operations can be harnessed as a reliable entropy source. Furthermore, we apply this TRNG framework to secure DNN weight data by encoding it via a combination of fixed and unpredictable bit-flips. The encrypted data is later decrypted using a key derived from the probabilistic flip behavior, ensuring both data confidentiality and model authenticity. Our results validate the effectiveness of DRAM-based entropy extraction for robust, low-cost hardware security and offer a promising direction for protecting machine learning models at the hardware level.

Preemptive Hallucination Reduction: An Input-Level Approach for Multimodal Language Model

May 29, 2025

Abstract: Visual hallucinations in Large Language Models (LLMs), where the model generates responses that are inconsistent with the visual input, pose a significant challenge to their reliability, particularly in contexts where precise and trustworthy outputs are critical. Current research largely emphasizes post-hoc correction or model-specific fine-tuning strategies, with limited exploration of preprocessing techniques to address hallucination issues at the input stage. This study presents a novel ensemble-based preprocessing framework that adaptively selects the most appropriate filtering approach, noise reduced (NR), edge enhanced (EE), or unaltered input (org), based on the type of question posed, resulting in reduced hallucination without requiring any modifications to the underlying model architecture or training pipeline. Evaluated on the 'HaloQuest' dataset, a benchmark designed to test multimodal reasoning on visually complex inputs, our method achieves a 44.3% reduction in hallucination rates, as measured by Natural Language Inference (NLI) scores using SelfCheckGPT. This demonstrates that intelligent input conditioning alone can significantly enhance factual grounding in LLM responses. The findings highlight the importance of adaptive preprocessing techniques in mitigating hallucinations, paving the way for more reliable multimodal systems capable of addressing real-world challenges.
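The abstract does not say how the question type is mapped to a preprocessing variant. As a purely illustrative sketch of what question-based routing could look like, the snippet below picks one of the three variant names (NR, EE, org) from keywords in the question. The keyword sets and the `select_variant` function are hypothetical and are not the authors' selection method.

```python
import re

# Hypothetical keyword rules, invented for illustration only.
EDGE_KEYWORDS = {"count", "many", "shape", "text", "written"}
NOISE_KEYWORDS = {"color", "colour", "weather", "scene"}


def select_variant(question: str) -> str:
    """Return 'EE', 'NR', or 'org' for a question (assumed routing rule)."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    if words & EDGE_KEYWORDS:
        return "EE"   # edge-enhanced input for fine structural detail
    if words & NOISE_KEYWORDS:
        return "NR"   # noise-reduced input for global appearance
    return "org"      # fall back to the unaltered image


for q in ["How many birds are on the wire?",
          "What color is the car?",
          "Who is the person in the photo?"]:
    print(q, "->", select_variant(q))
```

The point of the sketch is only that the choice happens before the model sees the image, so the model itself needs no changes.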

MangoLeafViT: Leveraging Lightweight Vision Transformer with Runtime Augmentation for Efficient Mango Leaf Disease Classification

May 29, 2025

Abstract: Ensuring food safety is critical due to its profound impact on public health, economic stability, and global supply chains. Cultivation of mango, a major agricultural product in several South Asian countries, faces high financial losses due to different diseases, affecting various aspects of the entire supply chain. While deep learning-based methods have been explored for mango leaf disease classification, there remains a gap in designing solutions that are computationally efficient and compatible with low-end devices. In this work, we propose a lightweight Vision Transformer-based pipeline with a self-attention mechanism to classify mango leaf diseases, achieving state-of-the-art performance with minimal computational overhead. Our approach leverages global attention to capture intricate patterns among disease types and incorporates runtime augmentation for enhanced performance. Evaluation on the MangoLeafBD dataset demonstrates 99.43% accuracy, outperforming existing methods in terms of model size, parameter count, and FLOPs count.

Unified Alignment Protocol: Making Sense of the Unlabeled Data in New Domains

May 27, 2025

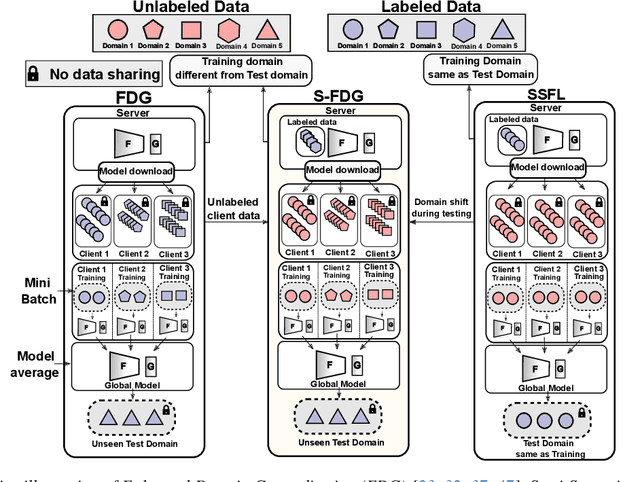

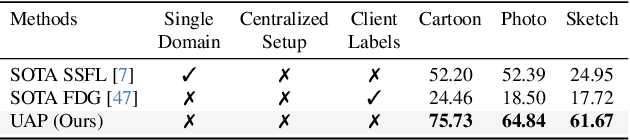

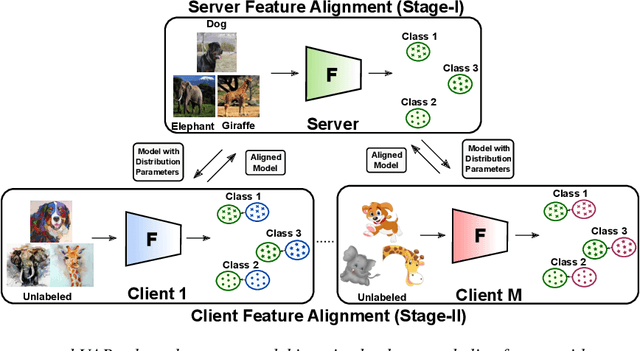

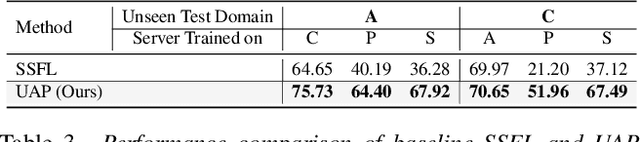

Abstract: Semi-Supervised Federated Learning (SSFL) is gaining popularity over conventional Federated Learning in many real-world applications. Because labeled data is scarce on the client side, SSFL assumes that participating clients train with unlabeled data and that only the central server has the necessary resources to access limited labeled data, making it an ideal fit for real-world applications (e.g., healthcare). However, traditional SSFL assumes that the data distributions in the training phase and testing phase are the same. In practice, domain shifts frequently occur, making it essential for SSFL to incorporate generalization capabilities and enhance its practicality. The core challenge is improving model generalization to new, unseen domains while the clients participate in SSFL. However, the decentralized setup of SSFL and unsupervised client training necessitate innovation to achieve improved generalization across domains. To achieve this, we propose a novel framework called the Unified Alignment Protocol (UAP), which consists of an alternating two-stage training process. The first stage involves training the server model to learn and align the features with a parametric distribution, which is subsequently communicated to clients without additional communication overhead. The second stage proposes a novel training algorithm that utilizes the server feature distribution to align client features accordingly. Our extensive experiments on standard domain generalization benchmark datasets across multiple model architectures reveal that the proposed UAP successfully achieves SOTA generalization performance in the SSFL setting.

Robo-Troj: Attacking LLM-based Task Planners

Apr 23, 2025

Abstract: Robots need task planning methods to achieve goals that require more than individual actions. Recently, large language models (LLMs) have demonstrated impressive performance in task planning. LLMs can generate a step-by-step solution using a description of actions and the goal. Despite the successes in LLM-based task planning, there is limited research studying the security aspects of those systems. In this paper, we develop Robo-Troj, the first multi-trigger backdoor attack for LLM-based task planners, which is the main contribution of this work. As a multi-trigger attack, Robo-Troj is trained to accommodate the diversity of robot application domains. For instance, one can use unique trigger words, e.g., "herical", to activate a specific malicious behavior, e.g., cutting a hand, on a kitchen robot. In addition, we develop an optimization method for selecting the trigger words that are most effective. By demonstrating the vulnerability of LLM-based planners, we aim to promote the development of secure robot systems.

Precision Cancer Classification and Biomarker Identification from mRNA Gene Expression via Dimensionality Reduction and Explainable AI

Oct 08, 2024

Abstract: Gene expression analysis is a critical method for cancer classification, enabling precise diagnoses through the identification of unique molecular signatures associated with various tumors. Identifying cancer-specific genes from gene expression values enables a more tailored and personalized treatment approach. However, the high dimensionality of mRNA gene expression data poses challenges for analysis and data extraction. This research presents a comprehensive pipeline designed to accurately identify 33 distinct cancer types and their corresponding gene sets. It incorporates a combination of normalization and feature selection techniques to reduce dataset dimensionality effectively while ensuring high performance. Notably, our pipeline successfully identifies a substantial number of cancer-specific genes using a reduced feature set of just 500, in contrast to using the full dataset comprising 19,238 features. By employing an ensemble approach that combines three top-performing classifiers, a classification accuracy of 96.61% was achieved. Furthermore, we leverage Explainable AI to elucidate the biological significance of the identified cancer-specific genes, employing Differential Gene Expression (DGE) analysis.
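The abstract does not state how the three classifiers are combined. One common option is hard majority voting, sketched below as an assumption rather than as the authors' method. The labels and the `majority_vote` helper are hypothetical placeholders.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-classifier label lists by hard majority voting.

    predictions: list of equal-length label lists, one per classifier.
    Ties are resolved in favor of the earliest classifier in the list.
    """
    voted = []
    for sample_votes in zip(*predictions):
        counts = Counter(sample_votes)
        best = max(counts.values())
        # First vote (in classifier order) whose label has the top count.
        winner = next(v for v in sample_votes if counts[v] == best)
        voted.append(winner)
    return voted


# Placeholder outputs of three classifiers on four samples.
clf_1 = ["A", "B", "C", "A"]
clf_2 = ["A", "D", "C", "B"]
clf_3 = ["B", "D", "C", "C"]

ensemble = majority_vote([clf_1, clf_2, clf_3])
print(ensemble)
```

With three voters, a full three-way disagreement (the last sample here) has no majority, so the sketch falls back to the first classifier; ordering the classifiers by validation accuracy would make that fallback the strongest single model.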

HaSPeR: An Image Repository for Hand Shadow Puppet Recognition

Aug 19, 2024

Abstract: Hand shadow puppetry, also known as shadowgraphy or ombromanie, is a form of theatrical art and storytelling where hand shadows are projected onto flat surfaces to create illusions of living creatures. Skilled performers create these silhouettes through hand positioning, finger movements, and dexterous gestures to resemble shadows of animals and objects. Due to the lack of practitioners and a seismic shift in people's entertainment standards, this art form is on the verge of extinction. To facilitate its preservation and bring it to a wider audience, we introduce HaSPeR, a novel dataset consisting of 8,340 images of hand shadow puppets across 11 classes extracted from both professional and amateur hand shadow puppeteer clips. We provide a detailed statistical analysis of the dataset and employ a range of pretrained image classification models to establish baselines. Our findings show a substantial performance superiority of traditional convolutional models over attention-based transformer architectures. We also find that lightweight models, such as MobileNetV2, suited for mobile applications and embedded devices, perform comparatively well. We surmise that such low-latency architectures can be useful in developing ombromanie teaching tools, and we create a prototype application to explore this hypothesis. Focusing on the best-performing model, InceptionV3, we conduct comprehensive feature-spatial, explainability, and error analyses to gain insights into its decision-making process. To the best of our knowledge, this is the first documented dataset and research endeavor to preserve this dying art for future generations with computer vision approaches. Our code and data are publicly available.

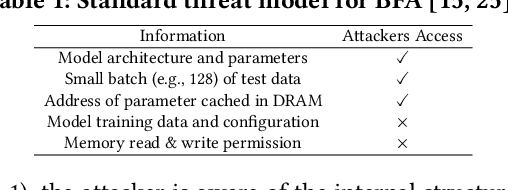

DNN-Defender: An in-DRAM Deep Neural Network Defense Mechanism for Adversarial Weight Attack

May 14, 2023

Abstract: With deep learning deployed in many security-sensitive areas, machine learning security is becoming progressively important. Recent studies demonstrate that attackers can use system-level techniques exploiting the RowHammer vulnerability of DRAM to deterministically and precisely flip bits in Deep Neural Network (DNN) model weights to affect inference accuracy. The existing defense mechanisms are software-based, such as weight reconstruction, and require expensive training overhead or cause performance degradation. On the other hand, generic hardware-based victim-/aggressor-focused mechanisms impose expensive hardware overheads and preserve the spatial connection between victim and aggressor rows. In this paper, we present the first DRAM-based victim-focused defense mechanism tailored for quantized DNNs, named DNN-Defender, that leverages the potential of in-DRAM swapping to withstand targeted bit-flip attacks. Our results indicate that DNN-Defender can deliver a high level of protection, downgrading the performance of targeted RowHammer attacks to a random attack level. In addition, the proposed defense has no accuracy drop on the CIFAR-10 and ImageNet datasets without requiring any software training or incurring additional hardware overhead.
