Ruohong Liu

BEAVER: Building Environments with Assessable Variation for Evaluating Multi-Objective Reinforcement Learning

Jul 10, 2025

Abstract: Recent years have seen significant advancements in designing reinforcement learning (RL)-based agents for building energy management. While individual success is observed in simulated or controlled environments, the scalability of RL approaches in terms of efficiency and generalization across building dynamics and operational scenarios remains an open question. In this work, we formally characterize the generalization space for the cross-environment, multi-objective building energy management task, and formulate the multi-objective contextual RL problem. Such a formulation helps understand the challenges of transferring learned policies across varied operational contexts such as climate and heat convection dynamics under multiple control objectives such as comfort level and energy consumption. We provide a principled way to parameterize such contextual information in realistic building RL environments, and construct a novel benchmark to facilitate the evaluation of generalizable RL algorithms in practical building control tasks. Our results show that existing multi-objective RL methods are capable of achieving reasonable trade-offs between conflicting objectives. However, their performance degrades under certain environment variations, underscoring the importance of incorporating dynamics-dependent contextual information into the policy learning process.

Hierarchical Learning-based Graph Partition for Large-scale Vehicle Routing Problems

Feb 12, 2025

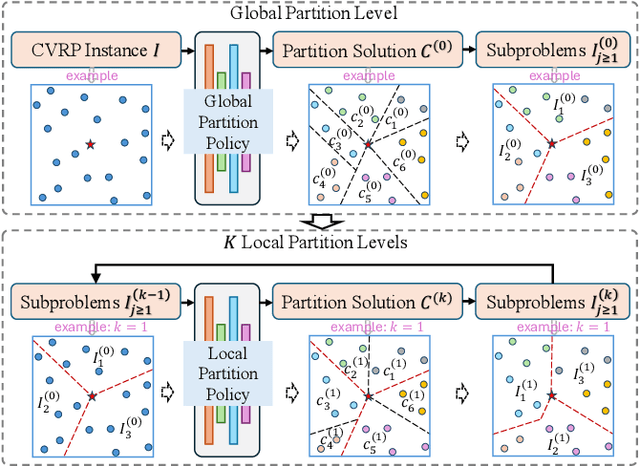

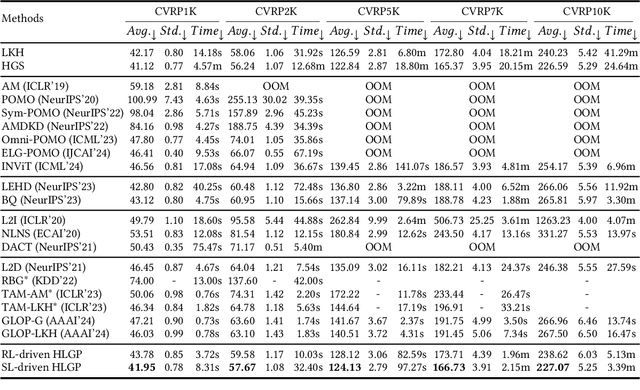

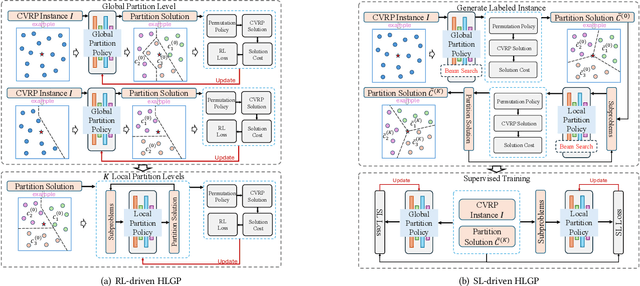

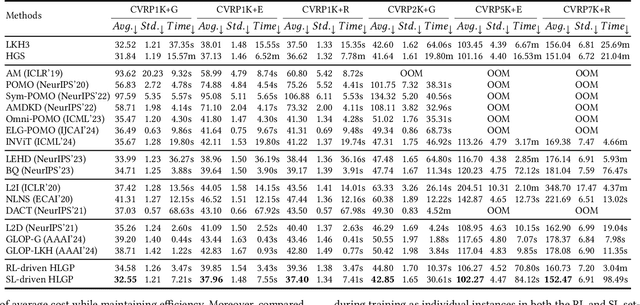

Abstract: Neural solvers based on the divide-and-conquer approach for Vehicle Routing Problems (VRPs) in general, and capacitated VRP (CVRP) in particular, integrate the global partition of an instance with local constructions for each subproblem to enhance generalization. However, during the global partition phase, misclusterings within subgraphs tend to progressively compound throughout the multi-step decoding process of the learning-based partition policy. This suboptimal behavior in the global partition phase, in turn, may lead to a dramatic deterioration in the performance of the overall decomposition-based system, despite using optimal local constructions. To address these challenges, we propose a versatile Hierarchical Learning-based Graph Partition (HLGP) framework, which is tailored to benefit the partition of CVRP instances by synergistically integrating global and local partition policies. Specifically, the global partition policy is tasked with creating the coarse multi-way partition to generate the sequence of simpler two-way partition subtasks. These subtasks mark the initiation of the subsequent K local partition levels. At each local partition level, subtasks exclusive to this level are assigned to the local partition policy, which benefits from the insensitive local topological features to incrementally alleviate the compounded errors. This framework is versatile in the sense that it optimizes the involved partition policies towards a unified objective harmoniously compatible with both reinforcement learning (RL) and supervised learning (SL). (*Due to the arXiv notification "The Abstract field cannot be longer than 1,920 characters", the Abstract shown here is shortened. For the full Abstract, please download the Article.)

C-MORL: Multi-Objective Reinforcement Learning through Efficient Discovery of Pareto Front

Oct 03, 2024

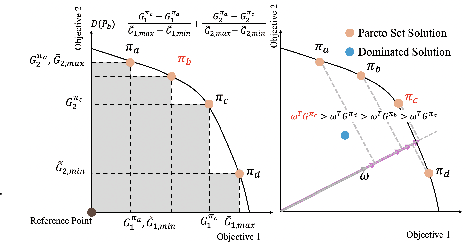

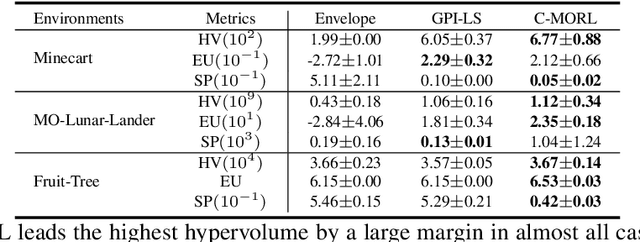

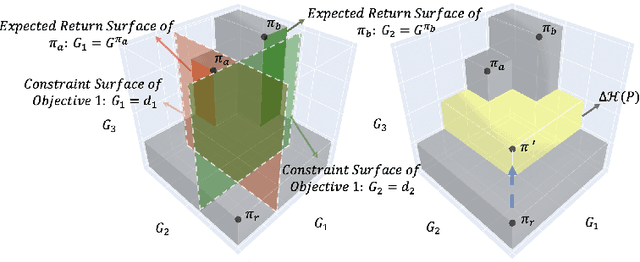

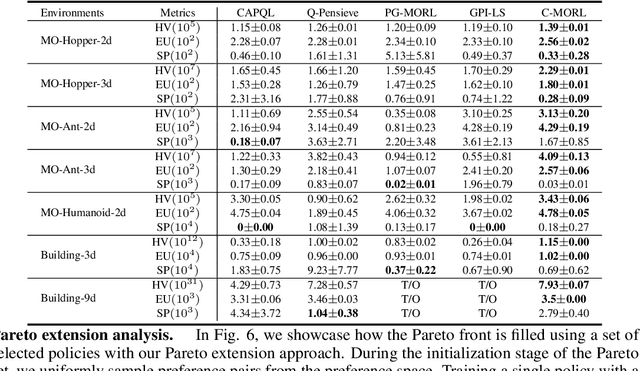

Abstract: Multi-objective reinforcement learning (MORL) excels at handling rapidly changing preferences in tasks that involve multiple criteria, even for unseen preferences. However, previously dominant MORL methods typically generate a fixed policy set or preference-conditioned policy through multiple training iterations exclusively for sampled preference vectors, and cannot ensure the efficient discovery of the Pareto front. Furthermore, integrating preferences into the input of policy or value functions presents scalability challenges, in particular as the dimensions of the state and preference spaces grow, which can complicate the learning process and hinder the algorithm's performance on more complex tasks. To address these issues, we propose a two-stage Pareto front discovery algorithm called Constrained MORL (C-MORL), which serves as a seamless bridge between constrained policy optimization and MORL. Concretely, a set of policies is trained in parallel in the initialization stage, with each optimized towards its individual preference over the multiple objectives. Then, to fill the remaining vacancies in the Pareto front, constrained optimization steps are employed to maximize one objective while constraining the other objectives to exceed a predefined threshold. Empirically, compared to recent advancements in MORL methods, our algorithm achieves more consistent and superior performance in terms of hypervolume, expected utility, and sparsity on both discrete and continuous control tasks, especially with numerous objectives (up to nine objectives in our experiments).
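The Pareto front and hypervolume mentioned in this abstract can be illustrated with a small sketch. It is not the C-MORL algorithm itself; it only shows, for two maximized objectives, how non-dominated return vectors are filtered and how the hypervolume relative to a reference point is computed. The function names `pareto_front` and `hypervolume_2d` and the sample return vectors are assumptions made for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (objective 1, objective 2), both maximized


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points, sorted by the first objective."""
    front = []
    for p in points:
        # p is dominated if some other point is at least as good in both objectives
        dominated = any(
            q != p and q[0] >= p[0] and q[1] >= p[1] for q in points
        )
        if not dominated:
            front.append(p)
    return sorted(set(front))


def hypervolume_2d(points: List[Point], ref: Point) -> float:
    """Area dominated by the front and bounded below by the reference point."""
    front = [p for p in pareto_front(points) if p[0] > ref[0] and p[1] > ref[1]]
    # With objective 1 descending, objective 2 is ascending along the front,
    # so each point contributes one new horizontal strip.
    front.sort(key=lambda p: p[0], reverse=True)
    area = 0.0
    prev_y = ref[1]
    for x, y in front:
        area += (x - ref[0]) * (y - prev_y)
        prev_y = y
    return area


# Hypothetical return vectors of four policies; (1, 1) is dominated by (2, 2).
returns = [(3, 1), (2, 2), (1, 3), (1, 1)]
print(pareto_front(returns))
print(hypervolume_2d(returns, ref=(0, 0)))
```

A larger hypervolume indicates a front that covers more of the objective space, which is why it is commonly reported alongside expected utility and sparsity.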

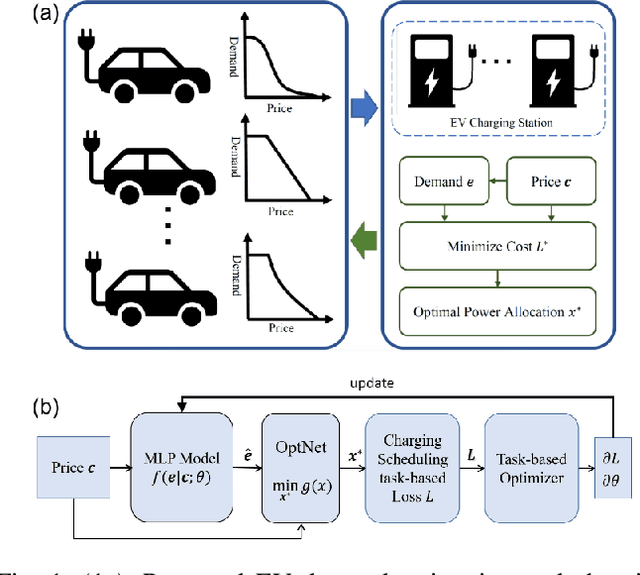

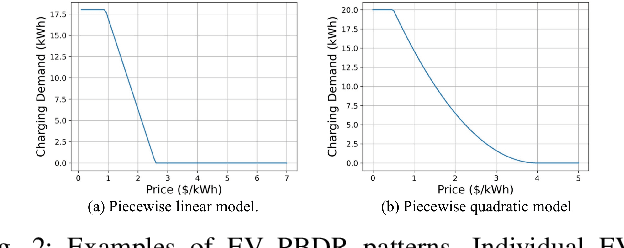

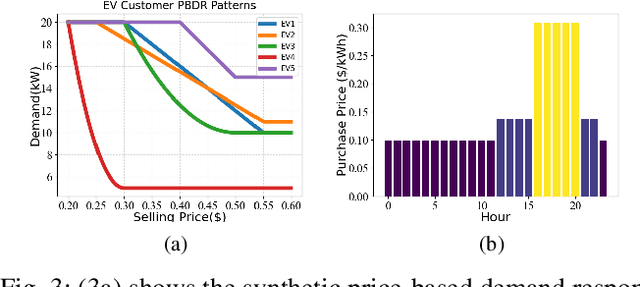

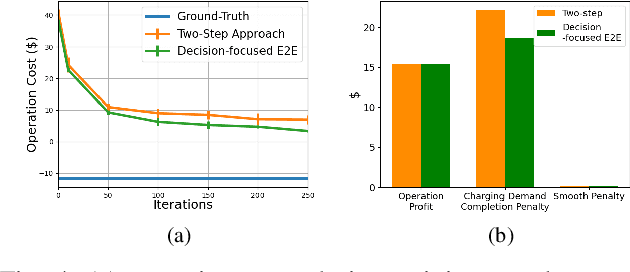

Learning and Optimization for Price-based Demand Response of Electric Vehicle Charging

Apr 16, 2024

Abstract: In the context of charging electric vehicles (EVs), price-based demand response (PBDR) is becoming increasingly significant for charging load management. Such a response usually encourages cost-sensitive customers to adjust their energy demand in response to changes in price for financial incentives. Thus, to model and optimize EV charging, it is important for the charging station operator to model the PBDR patterns of EV customers by precisely predicting charging demands given price signals. The operator then refers to these demands to optimize the charging station's power allocation policy. The standard pipeline involves offline fitting of a PBDR function based on historical EV charging records, followed by applying estimated EV demands in downstream charging station operation optimization. In this work, we propose a new decision-focused end-to-end framework for PBDR modeling that combines prediction errors and downstream optimization cost errors in the model learning stage. We evaluate the effectiveness of our method on a simulation of charging station operation with synthetic PBDR patterns of EV customers, and experimental results demonstrate that this framework can provide a more reliable prediction model for the ultimate optimization process, leading to more effective optimization solutions in terms of cost savings and charging station operation objectives with only a few training samples.
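The idea of combining prediction error with downstream optimization cost error can be sketched with a toy loss. This is an illustration under stated assumptions, not the paper's formulation: the asymmetric cost in `operation_cost`, the penalty rates `over` and `under`, the mixing `weight`, and the sample demands are all hypothetical.

```python
from typing import Sequence


def operation_cost(allocated: float, true_demand: float,
                   over: float = 1.0, under: float = 3.0) -> float:
    """Hypothetical operator cost: unmet demand is penalized more than surplus."""
    gap = allocated - true_demand
    return over * gap if gap >= 0 else under * (-gap)


def combined_loss(pred: Sequence[float], true: Sequence[float],
                  weight: float = 0.5) -> float:
    """Mean squared prediction error plus weighted mean decision regret."""
    n = len(pred)
    mse = sum((p - t) ** 2 for p, t in zip(pred, true)) / n
    # Regret: cost of acting on the prediction minus cost of acting on the truth.
    regret = sum(
        operation_cost(p, t) - operation_cost(t, t) for p, t in zip(pred, true)
    ) / n
    return mse + weight * regret


# Assumed predicted and realized charging demands (kWh) for two price signals.
print(combined_loss([10.0, 8.0], [9.0, 10.0]))
```

In this toy setting, under-predicting demand by 2 kWh is penalized more heavily than a purely squared-error loss would suggest, which is the kind of decision awareness a decision-focused objective aims to capture.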

Large Foundation Models for Power Systems

Dec 12, 2023

Abstract: Foundation models, such as Large Language Models (LLMs), can respond to a wide range of format-free queries without any task-specific data collection or model training, creating various research and application opportunities for the modeling and operation of large-scale power systems. In this paper, we outline how large foundation models such as GPT-4 are developed, and discuss how they can be leveraged in challenging power and energy system tasks. We first investigate the potential of existing foundation models by validating their performance on four representative tasks across power system domains, including optimal power flow (OPF), electric vehicle (EV) scheduling, knowledge retrieval for power engineering technical reports, and situation awareness. Our results indicate strong capabilities of such foundation models for boosting the efficiency and reliability of power system operational pipelines. We also provide suggestions and projections on future deployment of foundation models in power system applications.

Laxity-Aware Scalable Reinforcement Learning for HVAC Control

Jun 29, 2023

Abstract: Demand flexibility plays a vital role in maintaining grid balance, reducing peak demand, and saving customers' energy bills. Given their highly shiftable load and significant contribution to a building's energy consumption, Heating, Ventilation, and Air Conditioning (HVAC) systems can provide valuable demand flexibility to power systems by adjusting their energy consumption in response to electricity price and power system needs. To exploit this flexibility in both operation time and power, it is imperative to accurately model and aggregate the load flexibility of a large population of HVAC systems and to design effective control algorithms. In this paper, we tackle the curse of dimensionality in modeling and control by utilizing the concept of laxity to quantify the urgency of each HVAC operation request. We further propose a two-level approach to address energy optimization for a large population of HVAC systems. The lower level involves an aggregator that aggregates HVAC load laxity information and uses the least-laxity-first (LLF) rule to allocate real-time power to individual HVAC systems based on the controller's total power. Due to the complex and uncertain nature of HVAC systems, we leverage a reinforcement learning (RL)-based controller to schedule the total power based on the aggregated laxity information and electricity price. We evaluate the temperature control and energy cost saving performance of a large-scale group of HVAC systems in both single-zone and multi-zone scenarios, under varying climate and electricity market conditions. The experimental results indicate that the proposed approach outperforms the centralized methods in the majority of test scenarios, and performs comparably to the model-based method in some scenarios.
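The least-laxity-first rule at the lower level can be sketched as a greedy allocation: requests are served in order of increasing laxity until the total power set by the controller is used up. The sketch assumes each request carries a precomputed laxity value and a rated power; the `Request` fields, the function `llf_allocate`, and the sample numbers are hypothetical, and the abstract does not specify how laxity is computed for HVAC systems.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    unit_id: str
    laxity: float       # assumed urgency measure: smaller means more urgent
    rated_power: float  # power (kW) the unit draws when served


def llf_allocate(requests: List[Request], total_power: float) -> Dict[str, float]:
    """Serve requests in order of increasing laxity until the budget runs out."""
    allocation = {r.unit_id: 0.0 for r in requests}
    remaining = total_power
    for r in sorted(requests, key=lambda req: req.laxity):
        if remaining <= 0:
            break
        power = min(r.rated_power, remaining)  # the last served unit may be partial
        allocation[r.unit_id] = power
        remaining -= power
    return allocation


# Assumed example: three HVAC units share a 5 kW budget chosen by the controller.
requests = [
    Request("a", laxity=2.0, rated_power=3.0),
    Request("b", laxity=0.5, rated_power=2.0),
    Request("c", laxity=1.0, rated_power=4.0),
]
print(llf_allocate(requests, total_power=5.0))
```

Because the allocation depends only on the sorted laxity values and a single scalar budget, the upper-level controller only has to choose one number per step, which is consistent with the scalability argument in the abstract.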

Learning Task-Aware Energy Disaggregation: a Federated Approach

Apr 14, 2022

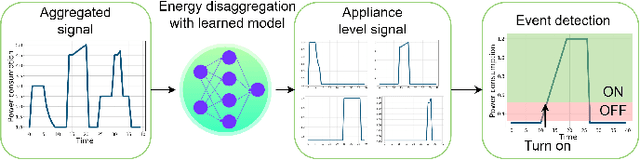

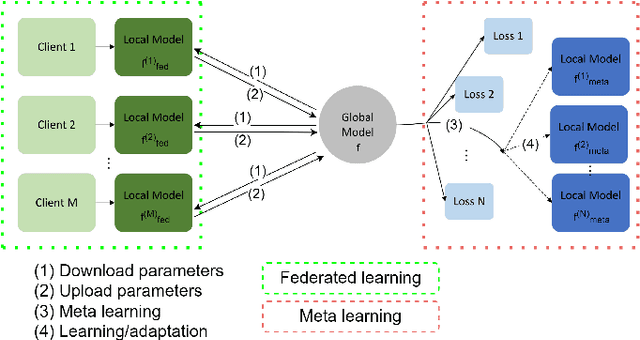

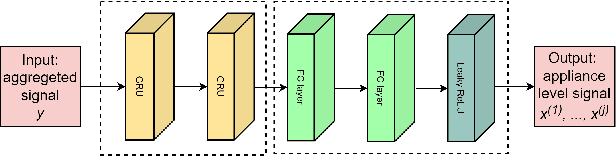

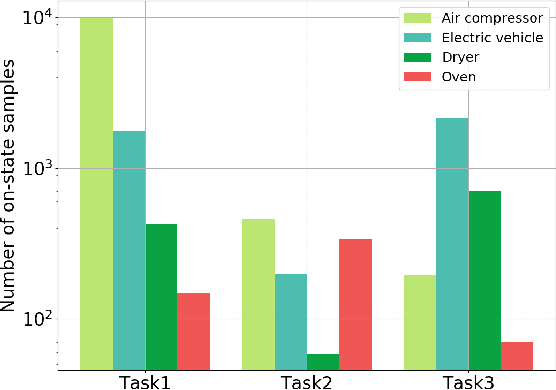

Abstract: We consider the problem of learning the energy disaggregation signals for residential load data. This task is referred to as non-intrusive load monitoring (NILM), and in order to find individual devices' power consumption profiles based on aggregated meter measurements, a machine learning model is usually trained on a large amount of training data coming from a number of residential homes. Yet collecting such residential load datasets requires both huge effort and customers' approval to share metering data, while load data coming from different regions or electricity users may exhibit heterogeneous usage patterns. Both practical concerns make training a single, centralized NILM model challenging. In this paper, we propose a decentralized and task-adaptive learning scheme for NILM tasks, where nested meta learning and federated learning steps are designed for learning task-specific models collectively. Simulation results on a benchmark dataset validate the proposed algorithm's performance in efficiently inferring appliance-level consumption for a variety of homes and appliances.
