Ronald Fedkiw

Using Gaussian Splats to Create High-Fidelity Facial Geometry and Texture

Dec 18, 2025

Abstract: We leverage increasingly popular three-dimensional neural representations in order to construct a unified and consistent explanation of a collection of uncalibrated images of the human face. Our approach utilizes Gaussian Splatting, since it is more explicit and thus more amenable to constraints than NeRFs. We leverage segmentation annotations to align the semantic regions of the face, facilitating the reconstruction of a neutral pose from only 11 images (as opposed to requiring a long video). We soft constrain the Gaussians to an underlying triangulated surface in order to provide a more structured Gaussian Splat reconstruction, which in turn informs subsequent perturbations to increase the accuracy of the underlying triangulated surface. The resulting triangulated surface can then be used in a standard graphics pipeline. In addition, and perhaps most impactful, we show how accurate geometry enables the Gaussian Splats to be transformed into texture space where they can be treated as a view-dependent neural texture. This allows one to use high visual fidelity Gaussian Splatting on any asset in a scene without the need to modify any other asset or any other aspect (geometry, lighting, renderer, etc.) of the graphics pipeline. We utilize a relightable Gaussian model to disentangle texture from lighting in order to obtain a delit high-resolution albedo texture that is also readily usable in a standard graphics pipeline. The flexibility of our system allows for training with disparate images, even with incompatible lighting, facilitating robust regularization. Finally, we demonstrate the efficacy of our approach by illustrating its use in a text-driven asset creation pipeline.

Improving Facial Rig Semantics for Tracking and Retargeting

Aug 11, 2025

Abstract: In this paper, we consider retargeting a tracked facial performance to either another person or to a virtual character in a game or virtual reality (VR) environment. We remove the difficulties associated with identifying and retargeting the semantics of one rig framework to another by utilizing the same framework (3DMM, FLAME, MetaHuman, etc.) for both subjects. Although this does not constrain the choice of framework when retargeting from one person to another, it does force the tracker to use the game/VR character rig when retargeting to a game/VR character. We utilize volumetric morphing in order to fit facial rigs to both performers and targets; in addition, a carefully chosen set of Simon-Says expressions is used to calibrate each rig to the motion signatures of the relevant performer or target. Although a uniform set of Simon-Says expressions can likely be used for all person-to-person retargeting, we argue that person-to-game/VR-character retargeting benefits from Simon-Says expressions that capture the distinct motion signature of the game/VR character rig. The Simon-Says calibrated rigs tend to produce the desired expressions when exercising animation controls (as expected). Unfortunately, these well-calibrated rigs still lead to undesirable controls when tracking a performance (a well-behaved function can have an arbitrarily ill-conditioned inverse), even though they typically produce acceptable geometry reconstructions. Thus, we propose a fine-tuning approach that modifies the rig used by the tracker in order to promote the output of more semantically meaningful animation controls, facilitating high-efficacy retargeting. In order to better address real-world scenarios, the fine-tuning relies on implicit differentiation so that the tracker can be treated as a (potentially non-differentiable) black box.

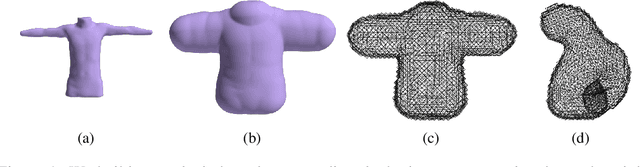

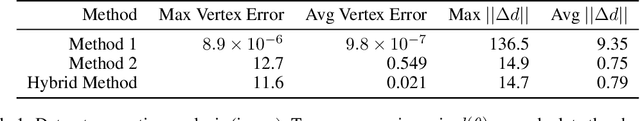

Shallow Signed Distance Functions for Kinematic Collision Bodies

Nov 11, 2024

Abstract: We present learning-based implicit shape representations designed for real-time avatar collision queries arising in the simulation of clothing. Signed distance functions (SDFs) have been used for such queries for many years due to their computational efficiency. Recently, deep neural networks have been used for implicit shape representations (DeepSDFs) due to their ability to represent multiple shapes with modest memory requirements compared to traditional representations over dense grids. However, the computational expense of DeepSDFs prevents their use in real-time clothing simulation applications. We design a learning-based representation of SDFs for human avatars whose bodies change shape kinematically due to joint-based skinning. Rather than using a single DeepSDF for the entire avatar, we use a collection of extremely computationally efficient (shallow) neural networks that represent localized deformations arising from changes in body shape induced by the variation of a single joint. This requires a stitching process to combine each shallow SDF in the collection together into one SDF representing the signed closest distance to the boundary of the entire body. To achieve this, we augment each shallow SDF with an additional output that resolves whether the individual shallow SDF value is referring to a closest point on the boundary of the body, or to a point on the interior of the body (but on the boundary of the individual shallow SDF). Our model is extremely fast and accurate, and we demonstrate its applicability with real-time simulation of garments driven by animated characters.

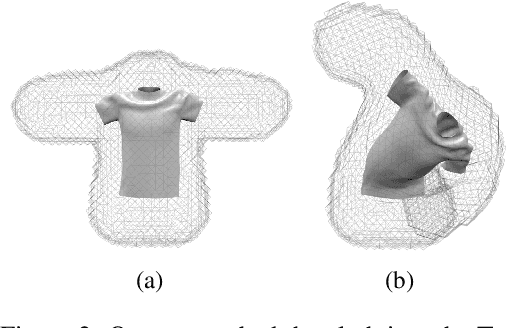

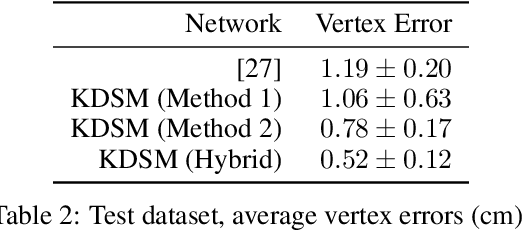

Weakly-Supervised 3D Reconstruction of Clothed Humans via Normal Maps

Nov 27, 2023

Abstract: We present a novel deep learning-based approach to the 3D reconstruction of clothed humans using weak supervision via 2D normal maps. Given a single RGB image or multiview images, our network infers a signed distance function (SDF) discretized on a tetrahedral mesh surrounding the body in a rest pose. Subsequently, inferred pose and camera parameters are used to generate a normal map from the SDF. A key aspect of our approach is the use of Marching Tetrahedra to (uniquely) compute a triangulated surface from the SDF on the tetrahedral mesh, facilitating straightforward differentiation (and thus backpropagation). Thus, given only ground truth normal maps (with no volumetric ground truth information), we can train the network to produce SDF values from corresponding RGB images. Optionally, an additional multiview loss leads to improved results. We demonstrate the efficacy of our approach for both network inference and 3D reconstruction.
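As a rough illustration of why Marching Tetrahedra is easy to differentiate, the sketch below locates the zero crossings of a linearly interpolated SDF on the edges of a single tetrahedron. The function name `tet_zero_crossings` and the single-tetrahedron setup are assumptions made for this example, not the paper's implementation, and the step that connects the crossings into triangles is omitted.

```python
from itertools import combinations

def tet_zero_crossings(verts, phi):
    """Return the points where the SDF changes sign along the tet's edges.

    verts: four (x, y, z) tuples; phi: four signed distance values.
    Each point is a smooth function of phi, which is what makes the
    extracted surface differentiable with respect to the SDF values.
    """
    points = []
    for a, b in combinations(range(4), 2):
        # An edge carries a surface vertex only if its endpoints differ in sign.
        if (phi[a] < 0.0) != (phi[b] < 0.0):
            # Root of the linear interpolant along edge a-b (0 <= t <= 1).
            t = phi[a] / (phi[a] - phi[b])
            points.append(tuple(pa + t * (pb - pa)
                                for pa, pb in zip(verts[a], verts[b])))
    return points

# Hypothetical unit tetrahedron with only vertex 0 inside the surface.
unit_tet = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
crossings = tet_zero_crossings(unit_tet, [-1.0, 1.0, 1.0, 1.0])
```

One vertex inside yields three crossings (one triangle), two inside yield four (a quad split into two triangles), and a tetrahedron with no sign change yields none.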

Addressing Discontinuous Root-Finding for Subsequent Differentiability in Machine Learning, Inverse Problems, and Control

Jun 21, 2023

Abstract: There are many physical processes that have inherent discontinuities in their mathematical formulations. This paper is motivated by the specific case of collisions between two rigid or deformable bodies and the intrinsic nature of that discontinuity. The impulse response to a collision is discontinuous with the lack of any response when no collision occurs, which causes difficulties for numerical approaches that require differentiability, as is typical in machine learning, inverse problems, and control. We theoretically and numerically demonstrate that the derivative of the collision time with respect to the parameters becomes infinite as one approaches the barrier separating colliding from not colliding, and use lifting to complexify the solution space so that solutions on the other side of the barrier are directly attainable as precise values. Subsequently, we mollify the barrier posed by the unbounded derivatives, so that one can tunnel back and forth in a smooth and reliable fashion, facilitating the use of standard numerical approaches. Moreover, we illustrate that standard approaches fail in numerous ways, mostly due to a lack of understanding of the mathematical nature of the problem (e.g. typical backpropagation utilizes many rules of differentiation, but ignores L'Hopital's rule).
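A simple worked example (chosen here for illustration, not taken from the paper) shows the unbounded derivative. Assume a ball launched upward with speed $v$ under gravity $g$ toward a ceiling at height $h$; the symbols $v$, $g$, $h$, and $t_c$ are assumptions of this sketch.

$$
vt - \tfrac{1}{2} g t^2 = h
\quad\Longrightarrow\quad
t_c = \frac{v - \sqrt{v^2 - 2gh}}{g},
\qquad
\frac{\partial t_c}{\partial v} = \frac{1}{g}\left(1 - \frac{v}{\sqrt{v^2 - 2gh}}\right).
$$

As $v^2 \to 2gh$ from above (the ball barely grazes the ceiling), the square root tends to zero and $\partial t_c / \partial v \to -\infty$. For $v^2 < 2gh$ no real collision time exists, but the same quadratic still has the complex roots

$$
t = \frac{v \pm i\sqrt{2gh - v^2}}{g},
$$

which is one way to see how allowing complex values keeps a well-defined solution on the non-colliding side of the barrier.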

Software-based Automatic Differentiation is Flawed

May 05, 2023

Abstract: Various software efforts embrace the idea that object oriented programming enables a convenient implementation of the chain rule, facilitating so-called automatic differentiation via backpropagation. Such frameworks have no mechanism for simplifying the expressions (obtained via the chain rule) before evaluating them. As we illustrate below, the resulting errors tend to be unbounded.
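One plausible illustration of the issue (an assumption of this sketch, not an example from the paper) uses a removable singularity. The function $f(x) = (x^2 - 1)/(x - 1)$ simplifies to $x + 1$ with derivative 1 everywhere, yet a mechanical chain-rule evaluation at $x = 1$ produces $0/0$. The tiny forward-mode `Dual` class below is hypothetical and stands in for the operator-overloading approach the abstract describes; reverse-mode backpropagation applies the same quotient rule and hits the same problem.

```python
import math

def ieee_div(a, b):
    """Float division that returns nan/inf on a zero divisor instead of raising."""
    if b != 0.0:
        return a / b
    if a == 0.0 or math.isnan(a):
        return math.nan
    return math.copysign(math.inf, a)

class Dual:
    """A value paired with its derivative; operators apply the chain rule."""
    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    def __truediv__(self, other):
        o = Dual.lift(other)
        # Quotient rule, evaluated numerically with no symbolic simplification.
        return Dual(ieee_div(self.val, o.val),
                    ieee_div(self.der * o.val - self.val * o.der, o.val * o.val))

def f_naive(x):
    return (x * x - 1.0) / (x - 1.0)

def f_simplified(x):
    return x + 1.0

def derivative(f, x0):
    """Seed the derivative with 1 and read it off the result."""
    return f(Dual(x0, 1.0)).der

naive_at_one = derivative(f_naive, 1.0)            # 0/0 from the quotient rule
simplified_at_one = derivative(f_simplified, 1.0)  # after cancelling (x - 1)
```

Away from the singularity the two forms agree; at $x = 1$ only the simplified expression returns a usable derivative.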

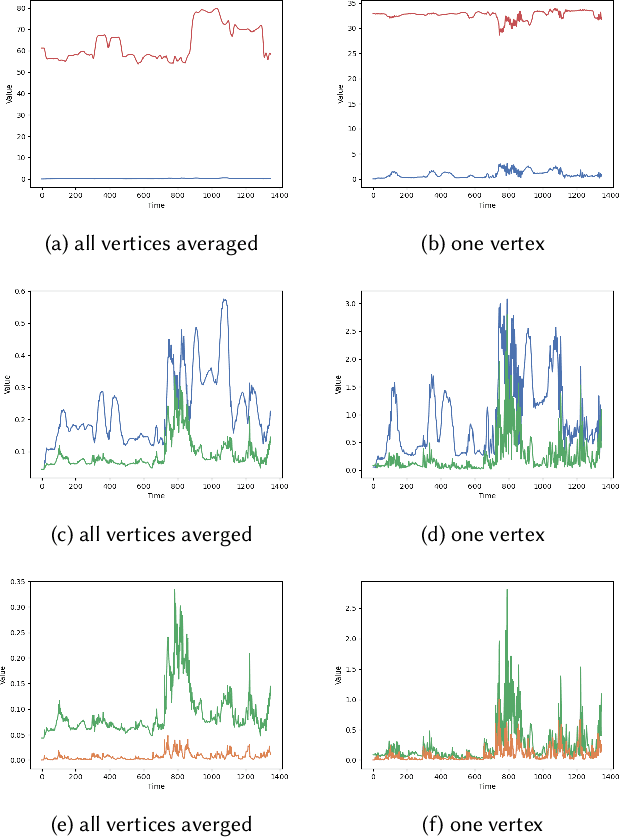

Analytically Integratable Zero-restlength Springs for Capturing Dynamic Modes unrepresented by Quasistatic Neural Networks

Jan 25, 2022

Abstract: We present a novel paradigm for modeling certain types of dynamic simulation in real-time with the aid of neural networks. In order to significantly reduce the requirements on data (especially time-dependent data), as well as decrease generalization error, our approach utilizes a data-driven neural network only to capture quasistatic information (instead of dynamic or time-dependent information). Subsequently, we augment our quasistatic neural network (QNN) inference with a (real-time) dynamic simulation layer. Our key insight is that the dynamic modes lost when using a QNN approximation can be captured with a quite simple (and decoupled) zero-restlength spring model, which can be integrated analytically (as opposed to numerically) and thus has no time-step stability restrictions. Additionally, we demonstrate that the spring constitutive parameters can be robustly learned from a surprisingly small amount of dynamic simulation data. Although we illustrate the efficacy of our approach by considering soft-tissue dynamics on animated human bodies, the paradigm is extensible to many different simulation frameworks.
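To make the "integrated analytically" point concrete, here is a minimal sketch of an exact time step for an undamped zero-restlength spring attached to an anchor that stays fixed during the step. The undamped model, the fixed anchor, and the names `spring_step` and `energy` are assumptions of this example; the paper's spring model may differ (for instance, by including damping). Because the rest length is zero, the force $-k(x - a)$ is linear and each coordinate evolves independently.

```python
import math

def spring_step(x, v, anchor, k, m, dt):
    """Advance position x and velocity v by dt using the closed-form solution."""
    w = math.sqrt(k / m)                      # natural frequency
    c, s = math.cos(w * dt), math.sin(w * dt)
    x_new, v_new = [], []
    for xi, vi, ai in zip(x, v, anchor):
        d = xi - ai                           # displacement from the anchor
        x_new.append(ai + d * c + (vi / w) * s)
        v_new.append(-d * w * s + vi * c)
    return x_new, v_new

def energy(x, v, anchor, k, m):
    """Kinetic plus potential energy of the spring."""
    kinetic = 0.5 * m * sum(vi * vi for vi in v)
    potential = 0.5 * k * sum((xi - ai) ** 2 for xi, ai in zip(x, anchor))
    return kinetic + potential

# Hypothetical stiff spring stepped with a time step far beyond what an
# explicit numerical integrator would tolerate.
x0, v0, a0 = [1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 0.0]
k, m, dt = 100.0, 1.0, 10.0
x1, v1 = spring_step(x0, v0, a0, k, m, dt)
```

Since the update is the exact solution rather than an approximation, energy is conserved up to round-off for any `dt`, and one step of size `dt` matches two steps of size `dt / 2`.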

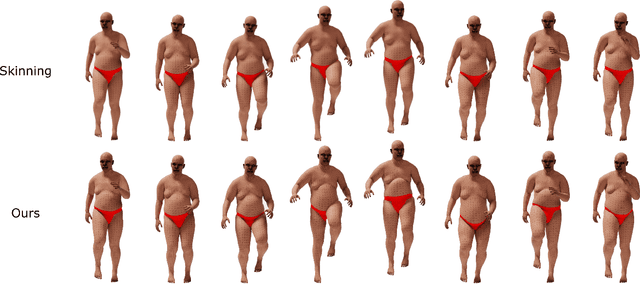

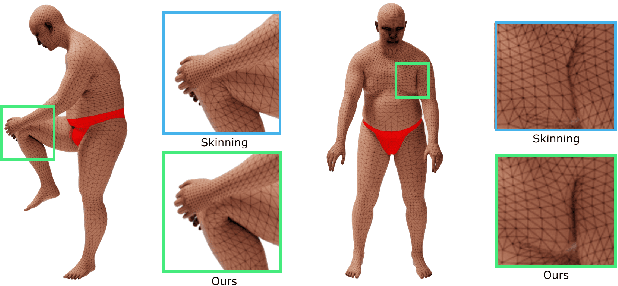

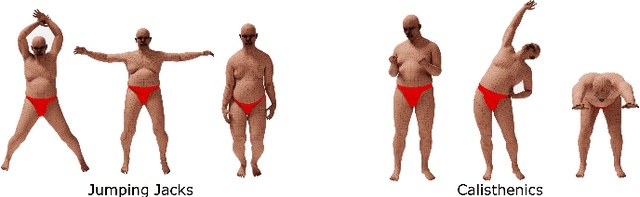

Skinning a Parameterization of Three-Dimensional Space for Neural Network Cloth

Jun 08, 2020

Abstract: We present a novel learning framework for cloth deformation by embedding virtual cloth into a tetrahedral mesh that parametrizes the volumetric region of air surrounding the underlying body. In order to maintain this volumetric parameterization during character animation, the tetrahedral mesh is constrained to follow the body surface as it deforms. We embed the cloth mesh vertices into this parameterization of three-dimensional space in order to automatically capture much of the nonlinear deformation due to both joint rotations and collisions. We then train a convolutional neural network to recover ground truth deformation by learning cloth embedding offsets for each skeletal pose. Our experiments show significant improvement over learning cloth offsets from body surface parameterizations, both quantitatively and visually, with prior state of the art having a mean error five standard deviations higher than ours. Moreover, our results demonstrate the efficacy of a general learning paradigm where high-frequency details can be embedded into low-frequency parameterizations.
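A common way to embed a point in a tetrahedral mesh is through barycentric coordinates, and the sketch below assumes that choice (the abstract does not specify the embedding). The function names `barycentric` and `embedded_position` are hypothetical. A cloth vertex is assigned weights in a rest-pose tetrahedron, and the same weights are reused after the tetrahedron deforms with the body.

```python
def sub(p, q):
    return tuple(a - b for a, b in zip(p, q))

def det3(a, b, c):
    """Scalar triple product a . (b x c), the determinant with columns a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            + a[1] * (b[2] * c[0] - b[0] * c[2])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))

def barycentric(p, tet):
    """Barycentric weights of point p with respect to the four tet vertices."""
    e1, e2, e3 = sub(tet[1], tet[0]), sub(tet[2], tet[0]), sub(tet[3], tet[0])
    r = sub(p, tet[0])
    d = det3(e1, e2, e3)                      # six times the signed tet volume
    w1 = det3(r, e2, e3) / d                  # Cramer's rule
    w2 = det3(e1, r, e3) / d
    w3 = det3(e1, e2, r) / d
    return (1.0 - w1 - w2 - w3, w1, w2, w3)

def embedded_position(weights, tet):
    """Reconstruct the embedded point from its weights and (deformed) vertices."""
    return tuple(sum(w * v[i] for w, v in zip(weights, tet)) for i in range(3))

# Hypothetical rest-pose tet, and the same tet after the body scales it by 2.
rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
posed = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 2.0)]
weights = barycentric((0.25, 0.25, 0.25), rest)
moved = embedded_position(weights, posed)
```

In a setup like the one the abstract describes, a learned per-pose offset would then be added on top of the embedded position; that part is not shown here.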

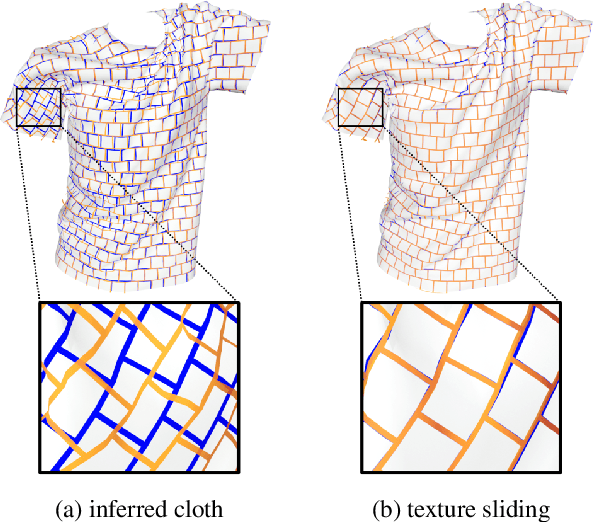

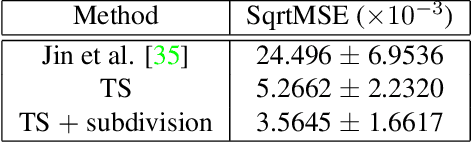

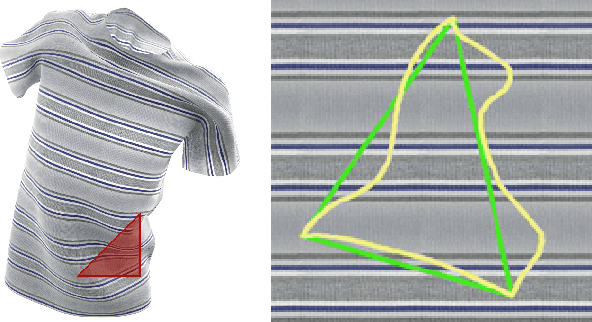

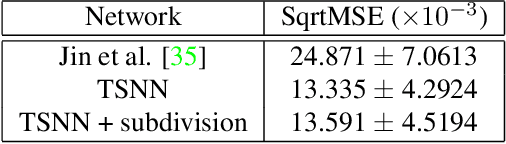

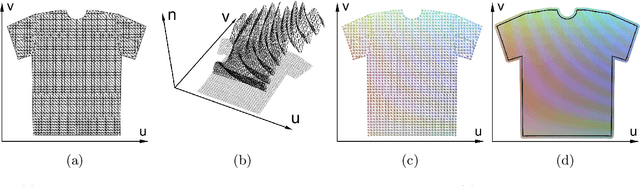

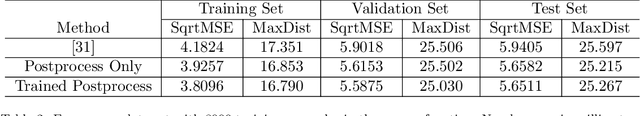

Recovering Geometric Information with Learned Texture Perturbations

Jan 20, 2020

Abstract: Regularization is used to avoid overfitting when training a neural network; unfortunately, this reduces the attainable level of detail, hindering the ability to capture high-frequency information present in the training data. Even though various approaches may be used to re-introduce high-frequency detail, it typically does not match the training data and is often not time coherent. In the case of network inferred cloth, these sentiments manifest themselves via either a lack of detailed wrinkles or unnaturally appearing and/or time incoherent surrogate wrinkles. Thus, we propose a general strategy whereby high-frequency information is procedurally embedded into low-frequency data so that when the latter is smeared out by the network the former still retains its high-frequency detail. We illustrate this approach by learning texture coordinates which, when smeared, do not in turn smear out the high-frequency detail in the texture itself but merely smoothly distort it. Notably, we prescribe perturbed texture coordinates that are subsequently used to correct the over-smoothed appearance of inferred cloth, and correcting the appearance from multiple camera views naturally recovers lost geometric information.

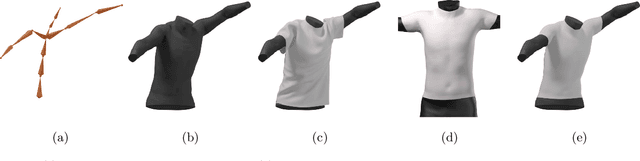

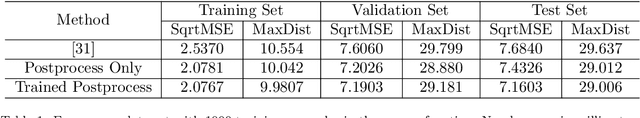

Coercing Machine Learning to Output Physically Accurate Results

Nov 23, 2019

Abstract: Many machine/deep learning artificial neural networks are trained to simply be interpolation functions that map input variables to output values interpolated from the training data in a linear/nonlinear fashion. Even when the input/output pairs of the training data are physically accurate (e.g. the results of an experiment or numerical simulation), interpolated quantities can deviate quite far from being physically accurate. Although one could project the output of a network into a physically feasible region, such a postprocess is not captured by the energy function minimized when training the network; thus, the final projected result could incorrectly deviate quite far from the training data. We propose folding any such projection or postprocess directly into the network so that the final result is correctly compared to the training data by the energy function. Although we propose a general approach, we illustrate its efficacy on a specific convolutional neural network that takes in human pose parameters (joint rotations) and outputs a prediction of vertex positions representing a triangulated cloth mesh. While the original network outputs vertex positions with erroneously high stretching and compression energies, the new network trained with our physics prior remedies these issues, producing highly improved results.
