Rohan Jha

semantic-features: A User-Friendly Tool for Studying Contextual Word Embeddings in Interpretable Semantic Spaces

Jun 06, 2025

Abstract:We introduce semantic-features, an extensible, easy-to-use library based on Chronis et al. (2023) for studying contextualized word embeddings of LMs by projecting them into interpretable spaces. We apply this tool in an experiment where we measure the contextual effect of the choice of dative construction (prepositional or double object) on the semantic interpretation of utterances (Bresnan, 2007). Specifically, we test whether "London" in "I sent London the letter." is more likely to be interpreted as an animate referent (e.g., as the name of a person) than in "I sent the letter to London." To this end, we devise a dataset of 450 sentence pairs, one in each dative construction, with recipients being ambiguous with respect to person-hood vs. place-hood. By applying semantic-features, we show that the contextualized word embeddings of three masked language models show the expected sensitivities. This leaves us optimistic about the usefulness of our tool.

Jina-ColBERT-v2: A General-Purpose Multilingual Late Interaction Retriever

Sep 04, 2024

Abstract:Multi-vector dense models, such as ColBERT, have proven highly effective in information retrieval. ColBERT's late interaction scoring approximates the joint query-document attention seen in cross-encoders while maintaining inference efficiency closer to traditional dense retrieval models, thanks to its bi-encoder architecture and recent optimizations in indexing and search. In this paper, we introduce a novel architecture and a training framework to support long context window and multilingual retrieval. Our new model, Jina-ColBERT-v2, demonstrates strong performance across a range of English and multilingual retrieval tasks,

LSTMSE-Net: Long Short Term Speech Enhancement Network for Audio-visual Speech Enhancement

Sep 03, 2024

Abstract:In this paper, we propose long short term memory speech enhancement network (LSTMSE-Net), an audio-visual speech enhancement (AVSE) method. This innovative method leverages the complementary nature of visual and audio information to boost the quality of speech signals. Visual features are extracted with VisualFeatNet (VFN), and audio features are processed through an encoder and decoder. The system scales and concatenates visual and audio features, then processes them through a separator network for optimized speech enhancement. The architecture highlights advancements in leveraging multi-modal data and interpolation techniques for robust AVSE challenge systems. The performance of LSTMSE-Net surpasses that of the baseline model from the COG-MHEAR AVSE Challenge 2024 by a margin of 0.06 in scale-invariant signal-to-distortion ratio (SISDR), 0.03 in short-time objective intelligibility (STOI), and 1.32 in perceptual evaluation of speech quality (PESQ). The source code of the proposed LSTMSE-Net is available at https://github.com/mtanveer1/AVSEC-3-Challenge.

When does data augmentation help generalization in NLP?

Apr 30, 2020

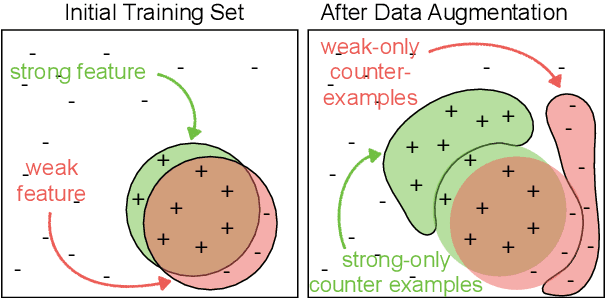

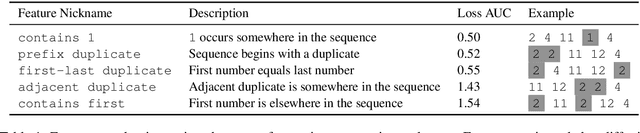

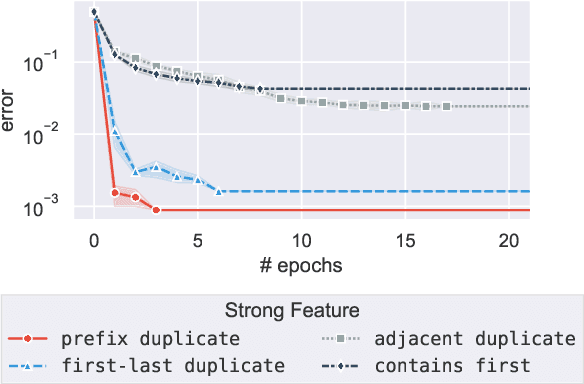

Abstract:Neural models often exploit superficial ("weak") features to achieve good performance, rather than deriving the more general ("strong") features that we'd prefer a model to use. Overcoming this tendency is a central challenge in areas such as representation learning and ML fairness. Recent work has proposed using data augmentation--that is, generating training examples on which these weak features fail--as a means of encouraging models to prefer the stronger features. We design a series of toy learning problems to investigate the conditions under which such data augmentation is helpful. We show that augmenting with training examples on which the weak feature fails ("counterexamples") does succeed in preventing the model from relying on the weak feature, but often does not succeed in encouraging the model to use the stronger feature in general. We also find in many cases that the number of counterexamples needed to reach a given error rate is independent of the amount of training data, and that this type of data augmentation becomes less effective as the target strong feature becomes harder to learn.
