Renfang Wang

EFKAN: A KAN-Integrated Neural Operator For Efficient Magnetotelluric Forward Modeling

Feb 04, 2025

Abstract: Magnetotelluric (MT) forward modeling is fundamental for improving the accuracy and efficiency of MT inversion. Neural operators (NOs) have been used effectively for rapid MT forward modeling and have shown promising performance in solving the partial differential equations (PDEs) that govern it. In particular, they can obtain the electromagnetic field at arbitrary locations and frequencies. In these NOs, the projection layers have been dominated by multi-layer perceptrons (MLPs), which may reduce solution accuracy because they inherit the disadvantages of MLPs, such as lack of interpretability and overfitting. Therefore, to improve the accuracy of MT forward modeling with NOs and to explore potential alternatives to MLPs, we propose a novel neural operator that extends the Fourier neural operator (FNO) with a Kolmogorov-Arnold network (KAN), termed EFKAN. Within the EFKAN framework, the FNO serves as the branch network and calculates the apparent resistivity and phase from the resistivity model in the frequency domain. Meanwhile, the KAN acts as the trunk network and projects the apparent resistivity and phase determined by the FNO to the desired locations and frequencies. Experimental results demonstrate that the proposed method not only achieves higher accuracy in apparent resistivity and phase at the desired frequencies and locations than the NO equipped with MLPs, but also outperforms traditional numerical methods in computational speed.
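For readers unfamiliar with the two output quantities, the following is a minimal sketch of how apparent resistivity and phase are conventionally derived from a complex MT impedance. It is not taken from the paper: the function names are hypothetical, and it assumes the sign convention in which a homogeneous half-space has impedance Z = sqrt(i ω μ0 ρ).

```python
import cmath
import math

MU0 = 4e-7 * math.pi  # magnetic permeability of free space (H/m)


def halfspace_impedance(resistivity, frequency):
    """Impedance of a homogeneous half-space (assumed convention: Z = sqrt(i*omega*mu0*rho))."""
    omega = 2.0 * math.pi * frequency
    return cmath.sqrt(1j * omega * MU0 * resistivity)


def apparent_resistivity_and_phase(impedance, frequency):
    """Return (apparent resistivity in ohm-m, phase in degrees) for a complex impedance."""
    omega = 2.0 * math.pi * frequency
    rho_a = abs(impedance) ** 2 / (omega * MU0)
    phase_deg = math.degrees(cmath.phase(impedance))
    return rho_a, phase_deg


# Illustrative values only: a 100 ohm-m half-space sounded at 10 Hz.
z = halfspace_impedance(100.0, 10.0)
rho_a, phase_deg = apparent_resistivity_and_phase(z, 10.0)
```

For a homogeneous half-space the apparent resistivity equals the true resistivity and the phase is 45 degrees, which makes this a convenient sanity check for any forward-modeling code.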

Low-Dose CT Reconstruction Using Dataset-free Learning

Feb 17, 2024

Abstract: Low-dose computed tomography (LDCT) is an ideal alternative for reducing radiation risk in clinical applications. Although supervised deep-learning-based reconstruction methods have demonstrated superior performance compared to conventional model-driven reconstruction algorithms, they require collecting massive numbers of paired low-dose and normal-dose CT images for neural network training, which limits their practical application in LDCT imaging. In this paper, we propose an unsupervised, training-data-free learning reconstruction method for LDCT imaging. The proposed method is a post-processing technique that enhances the initial low-quality reconstruction results. It reconstructs high-quality images by training a neural network to minimize the $\ell_1$-norm distance between the CT measurements and their corresponding simulated sinogram data, as well as the total variation (TV) of the reconstructed image. Moreover, the proposed method does not require setting weights for the data fidelity term and the penalty term. Experimental results on the AAPM challenge data and the LoDoPaB-CT data demonstrate that the proposed method effectively suppresses noise and preserves tiny structures. These results also show the proposed method's low computational cost and rapid convergence. The source code is available at https://github.com/linfengyu77/IRLDCT.
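The two terms named in the abstract can be illustrated with a small sketch. This is not the authors' implementation (see their repository for that): the forward projector below is a hypothetical two-angle toy (row sums and column sums), the TV is the anisotropic variant, and the plain unweighted sum in `objective` is an assumption made only for illustration.

```python
def toy_forward_project(image):
    """Hypothetical two-angle projector: row sums followed by column sums."""
    row_sums = [sum(row) for row in image]
    col_sums = [sum(col) for col in zip(*image)]
    return row_sums + col_sums


def l1_data_fidelity(image, measured_sinogram):
    """l1 distance between the simulated and the measured sinogram."""
    simulated = toy_forward_project(image)
    return sum(abs(s - m) for s, m in zip(simulated, measured_sinogram))


def anisotropic_tv(image):
    """Sum of absolute differences between vertically and horizontally adjacent pixels."""
    height, width = len(image), len(image[0])
    vertical = sum(abs(image[i + 1][j] - image[i][j])
                   for i in range(height - 1) for j in range(width))
    horizontal = sum(abs(image[i][j + 1] - image[i][j])
                     for i in range(height) for j in range(width - 1))
    return vertical + horizontal


def objective(image, measured_sinogram):
    """Unweighted sum of both terms (an assumption, not the paper's scheme)."""
    return l1_data_fidelity(image, measured_sinogram) + anisotropic_tv(image)


# Illustrative 2x2 image and a measurement that differs in one bin.
image = [[1, 1], [1, 3]]
measured = [2, 4, 2, 5]
loss = objective(image, measured)
```

In a real reconstruction the image would be the output of a neural network and the projector a differentiable Radon transform, so that the loss can be minimized by gradient descent.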

Seismic Traveltime Tomography with Label-free Learning

Feb 01, 2024

Abstract: Deep learning techniques have been used to build velocity models (VMs) for seismic traveltime tomography and have shown encouraging performance in recent years. However, they need labeled samples (i.e., pairs of input and label) to train the deep neural network (NN) with end-to-end learning, and the real labels for field data inversion are usually missing or very expensive. Some traditional tomographic methods can be implemented quickly, but their effectiveness is often limited by prior assumptions. To avoid generating labeled samples, we propose a novel method that integrates deep learning and dictionary learning to enhance the low-resolution VMs produced by the traditional least-squares tomography method (LSQR). We first design a shallow and simple NN to reduce computational cost, and then propose a two-step strategy to enhance the low-resolution VMs: (1) Warming up. An initial dictionary is trained from the LSQR estimate through dictionary learning; (2) Dictionary optimization. The initial dictionary obtained in the warming-up step is optimized by the NN and then used to reconstruct high-resolution VMs from the reference slowness and the LSQR estimate. Furthermore, we design a loss function that minimizes the traveltime misfit to ensure that NN training is label-free, and the optimized dictionary can be obtained after each epoch of NN training. We demonstrate the effectiveness of the proposed method through numerical tests.
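The label-free idea rests on comparing predicted and observed traveltimes rather than comparing against a ground-truth velocity model. A minimal sketch of such a misfit follows, assuming straight rays on a discretized grid so that each traveltime is the sum of path length times slowness over the cells the ray crosses. The function names and the mean-squared form are assumptions for illustration, not the loss defined in the paper.

```python
def predict_traveltimes(ray_lengths, slowness):
    """Traveltime of each ray: sum of (path length in cell) * (slowness of cell)."""
    return [sum(length * s for length, s in zip(row, slowness))
            for row in ray_lengths]


def traveltime_misfit(ray_lengths, slowness, observed):
    """Mean squared difference between predicted and observed traveltimes."""
    predicted = predict_traveltimes(ray_lengths, slowness)
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed)


# Illustrative values: two rays crossing a two-cell model.
ray_lengths = [[1.0, 2.0],   # ray 0: path length in cell 0 and cell 1
               [0.0, 3.0]]   # ray 1 only crosses cell 1
slowness = [0.5, 0.25]       # candidate slowness model (s/m)
observed = [1.0, 1.25]       # observed traveltimes (s)
misfit = traveltime_misfit(ray_lengths, slowness, observed)
```

Because the misfit depends only on observed traveltimes and the current slowness estimate, no true velocity model is needed at any point during training.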

A Latent Fingerprint in the Wild Database

Apr 03, 2023

Abstract: Latent fingerprints are among the most important and widely used evidence in crime scenes, digital forensics, and law enforcement worldwide. Despite the advancements reported in recent works, we note that significant open issues, such as independent benchmarking and the lack of large-scale evaluation databases for improving the algorithms, are inadequately addressed. The available databases are mostly semi-public, lack acquisition in wild environments, and lack post-processing pipelines. Moreover, they do not represent a realistic capture scenario similar to real crime scenes with which to benchmark the robustness of the algorithms. Further, existing databases for latent fingerprint recognition do not have a large number of unique subjects or fingerprint instances, or do not provide ground-truth reference fingerprint images for cross-comparison against the latent prints. In this paper, we introduce a new large-scale latent fingerprint database captured in the wild that includes five different acquisition scenarios: reference fingerprints from (1) optical and (2) capacitive sensors, (3) smartphone fingerprints, and latent fingerprints captured from (4) a wall surface, (5) an iPad surface, and (6) an aluminium foil surface. The new database consists of 1,318 unique fingerprint instances captured in all of the above-mentioned settings. In total, this work provides 2,636 reference fingerprints from optical and capacitive sensors, 1,318 fingerphotos from smartphones, and 9,224 latent fingerprints, collected from 132 subjects. The dataset is constructed to cover various age groups and to give equal representation to genders and backgrounds. In addition, we provide an extensive analysis of various subset evaluations to highlight open challenges and future directions in latent fingerprint recognition research.

Adaptive Divergence-based Non-negative Latent Factor Analysis

Mar 30, 2022

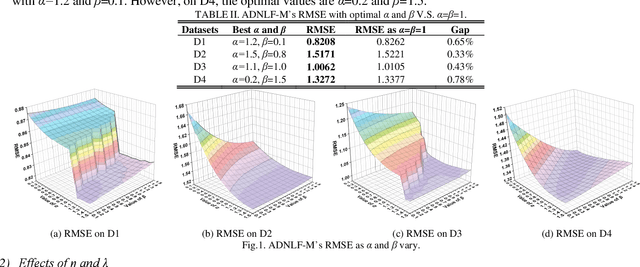

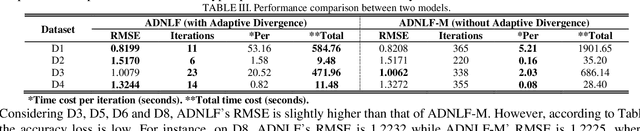

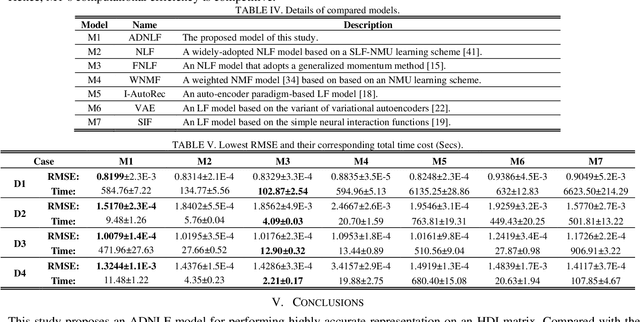

Abstract: High-Dimensional and Incomplete (HDI) data are frequently found in industrial applications with complex interactions among numerous nodes, and they are commonly non-negative, reflecting the inherent non-negativity of node interactions. A Non-negative Latent Factor (NLF) model is able to extract intrinsic features from such data efficiently. However, existing NLF models all adopt a static divergence metric, such as the Euclidean distance or the α-β-divergence, to build their learning objectives, which greatly restricts their ability to accurately represent HDI data from different domains. To address this issue, this study presents an Adaptive Divergence-based Non-negative Latent Factor (ADNLF) model built on three ideas: a) generalizing the objective function with the α-β-divergence to expand its potential for representing various HDI data; b) adopting a non-negative bridging function to connect the optimization variables with the output latent factors so that the non-negativity constraints are always fulfilled; and c) making the divergence parameters adaptive through particle swarm optimization, thereby enabling an adaptive divergence in the learning objective to achieve high scalability. Empirical studies are conducted on four HDI datasets from real applications. The results demonstrate that, in comparison with state-of-the-art NLF models, the ADNLF model achieves significantly higher estimation accuracy for the missing data of an HDI dataset with high computational efficiency.
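To make the role of the two divergence parameters concrete, here is a minimal sketch of the α-β-divergence in its commonly cited general form, valid when α, β, and α+β are all non-zero (the limiting cases are omitted). The function name is hypothetical and the code is not taken from the paper. Setting α = β = 1 recovers half the squared Euclidean distance, which shows how a single parameterized family covers the static metrics mentioned above.

```python
def alpha_beta_divergence(p, q, alpha, beta):
    """AB-divergence between positive sequences p and q.

    Assumes alpha != 0, beta != 0 and alpha + beta != 0;
    the limiting cases (e.g. KL divergence) are not handled here.
    """
    if alpha == 0 or beta == 0 or alpha + beta == 0:
        raise ValueError("this sketch only covers alpha, beta, alpha + beta != 0")
    total = 0.0
    for pi, qi in zip(p, q):
        total += (pi ** alpha * qi ** beta
                  - alpha / (alpha + beta) * pi ** (alpha + beta)
                  - beta / (alpha + beta) * qi ** (alpha + beta))
    return -total / (alpha * beta)


# Illustrative values: observed entries p and model estimates q.
p = [1.0, 2.0]
q = [2.0, 4.0]
d_euclid = alpha_beta_divergence(p, q, 1.0, 1.0)   # equals 0.5 * sum((p - q)^2)
d_other = alpha_beta_divergence(p, q, 2.0, 0.5)    # another member of the family
```

In an adaptive scheme such as the one the abstract describes, `alpha` and `beta` would be tuned during training instead of being fixed in advance.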