Raunak Bhattacharyya

Learning Safe Autonomous Driving Policies Using Predictive Safety Representations

Dec 23, 2025

Abstract:Safe reinforcement learning (SafeRL) is a prominent paradigm for autonomous driving, where agents are required to optimize performance under strict safety requirements. This dual objective creates a fundamental tension, as overly conservative policies limit driving efficiency while aggressive exploration risks safety violations. The Safety Representations for Safer Policy Learning (SRPL) framework addresses this challenge by equipping agents with a predictive model of future constraint violations and has shown promise in controlled environments. This paper investigates whether SRPL extends to real-world autonomous driving scenarios. Systematic experiments on the Waymo Open Motion Dataset (WOMD) and NuPlan demonstrate that SRPL can improve the reward-safety tradeoff, achieving statistically significant improvements in success rate (effect sizes r = 0.65-0.86) and cost reduction (effect sizes r = 0.70-0.83), with p < 0.05 for observed improvements. However, its effectiveness depends on the underlying policy optimizer and the dataset distribution. The results further show that predictive safety representations play a critical role in improving robustness to observation noise. Additionally, in zero-shot cross-dataset evaluation, SRPL-augmented agents demonstrate improved generalization compared to non-SRPL methods. These findings collectively demonstrate the potential of predictive safety representations to strengthen SafeRL for autonomous driving.

A Transparency Paradox? Investigating the Impact of Explanation Specificity and Autonomous Vehicle Perceptual Inaccuracies on Passengers

Aug 16, 2024

Abstract:Transparency in automated systems could be afforded through the provision of intelligible explanations. While transparency is desirable, might it lead to catastrophic outcomes (such as anxiety), that could outweigh its benefits? It's quite unclear how the specificity of explanations (level of transparency) influences recipients, especially in autonomous driving (AD). In this work, we examined the effects of transparency mediated through varying levels of explanation specificity in AD. We first extended a data-driven explainer model by adding a rule-based option for explanation generation in AD, and then conducted a within-subject lab study with 39 participants in an immersive driving simulator to study the effect of the resulting explanations. Specifically, our investigation focused on: (1) how different types of explanations (specific vs. abstract) affect passengers' perceived safety, anxiety, and willingness to take control of the vehicle when the vehicle perception system makes erroneous predictions; and (2) the relationship between passengers' behavioural cues and their feelings during the autonomous drives. Our findings showed that passengers felt safer with specific explanations when the vehicle's perception system had minimal errors, while abstract explanations that hid perception errors led to lower feelings of safety. Anxiety levels increased when specific explanations revealed perception system errors (high transparency). We found no significant link between passengers' visual patterns and their anxiety levels. Our study suggests that passengers prefer clear and specific explanations (high transparency) when they originate from autonomous vehicles (AVs) with optimal perceptual accuracy.

CC-VPSTO: Chance-Constrained Via-Point-based Stochastic Trajectory Optimisation for Safe and Efficient Online Robot Motion Planning

Feb 06, 2024

Abstract:Safety in the face of uncertainty is a key challenge in robotics. We introduce a real-time capable framework to generate safe and task-efficient robot motions for stochastic control problems. We frame this as a chance-constrained optimisation problem constraining the probability of the controlled system to violate a safety constraint to be below a set threshold. To estimate this probability we propose a Monte Carlo approximation. We suggest several ways to construct the problem given a fixed number of uncertainty samples, such that it is a reliable over-approximation of the original problem, i.e. any solution to the sample-based problem adheres to the original chance-constraint with high confidence. To solve the resulting problem, we integrate it into our motion planner VP-STO and name the enhanced framework Chance-Constrained (CC)-VPSTO. The strengths of our approach lie in i) its generality, without assumptions on the underlying uncertainty distribution, system dynamics, cost function, or the form of inequality constraints; and ii) its applicability to MPC-settings. We demonstrate the validity and efficiency of our approach on both simulation and real-world robot experiments.
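The sample-based chance constraint described in this abstract can be illustrated with a minimal sketch. It is not the construction used in CC-VPSTO: the Hoeffding margin below is one generic way to tighten an empirical violation fraction for a single fixed candidate, and every name here (`violation_fraction`, `hoeffding_margin`, `satisfies_chance_constraint`, the 1-D stopping toy problem) is hypothetical.

```python
import math
import random


def violation_fraction(candidate, samples, violates):
    """Monte Carlo estimate: share of uncertainty samples under which the
    candidate violates the safety constraint."""
    return sum(1 for s in samples if violates(candidate, s)) / len(samples)


def hoeffding_margin(n_samples, beta):
    """One-sided Hoeffding margin t such that, for a fixed candidate, the true
    violation probability exceeds (estimate + t) with probability at most beta."""
    return math.sqrt(math.log(1.0 / beta) / (2.0 * n_samples))


def satisfies_chance_constraint(candidate, samples, violates, eta, beta):
    """Conservative check (an assumption of this sketch, not the paper's rule):
    accept only if the estimate plus the margin stays below the threshold eta."""
    p_hat = violation_fraction(candidate, samples, violates)
    return p_hat + hoeffding_margin(len(samples), beta) <= eta


# Hypothetical toy problem: a 1-D robot stops at `stop_position`, and the
# obstacle edge sits at an uncertain position.
def toy_violates(stop_position, obstacle_position):
    return stop_position >= obstacle_position


rng = random.Random(0)
obstacle_samples = [rng.gauss(1.0, 0.1) for _ in range(2000)]

for stop in (0.0, 0.8, 1.0):
    ok = satisfies_chance_constraint(stop, obstacle_samples, toy_violates,
                                     eta=0.05, beta=0.01)
    print(f"stop at {stop:.1f}: accepted = {ok}")
```

The margin shrinks as the number of samples grows, so a larger sample budget makes the check less conservative. The bound holds for a candidate chosen independently of the samples; a candidate optimised against the same samples needs a more careful argument.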

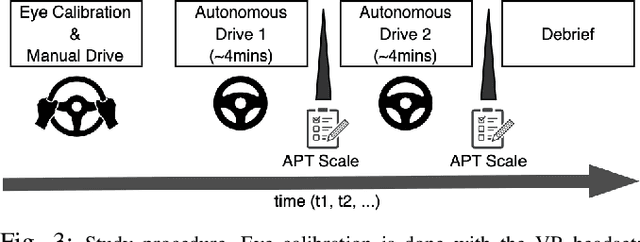

Effects of Explanation Specificity on Passengers in Autonomous Driving

Jul 02, 2023

Abstract:The nature of explanations provided by an explainable AI algorithm has been a topic of interest in the explainable AI and human-computer interaction community. In this paper, we investigate the effects of natural language explanations' specificity on passengers in autonomous driving. We extended an existing data-driven tree-based explainer algorithm by adding a rule-based option for explanation generation. We generated auditory natural language explanations with different levels of specificity (abstract and specific) and tested these explanations in a within-subject user study (N=39) using an immersive physical driving simulation setup. Our results showed that both abstract and specific explanations had similar positive effects on passengers' perceived safety and the feeling of anxiety. However, the specific explanations influenced the desire of passengers to take over driving control from the autonomous vehicle (AV), while the abstract explanations did not. We conclude that natural language auditory explanations are useful for passengers in autonomous driving, and their specificity levels could influence how much in-vehicle participants would wish to be in control of the driving activity.

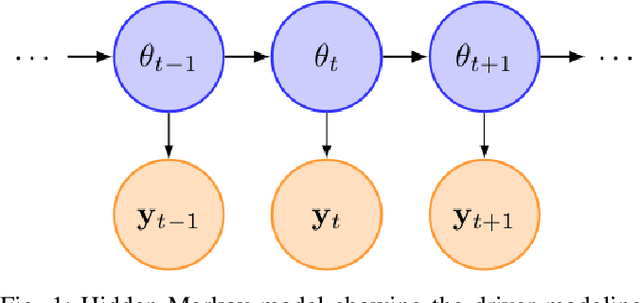

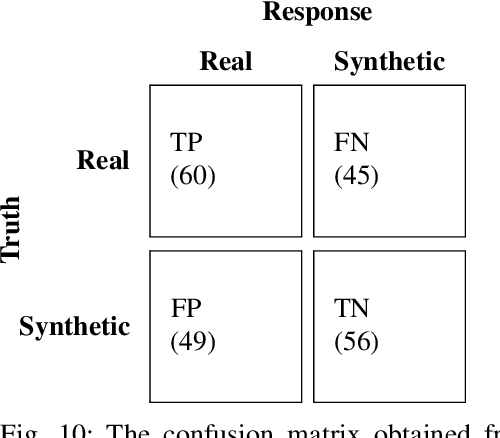

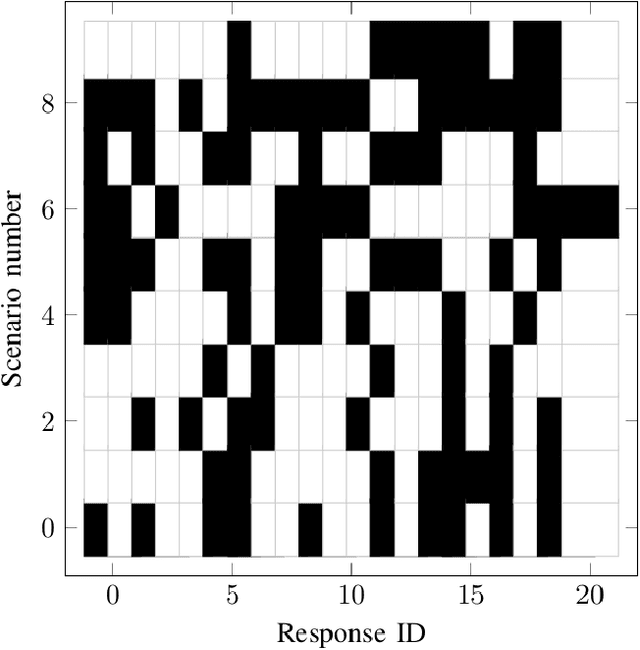

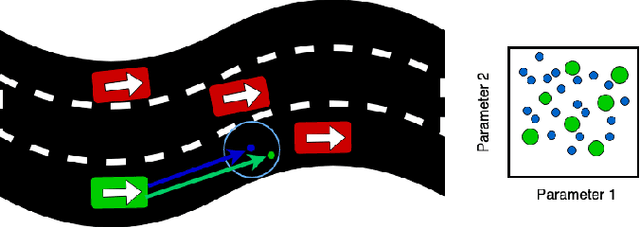

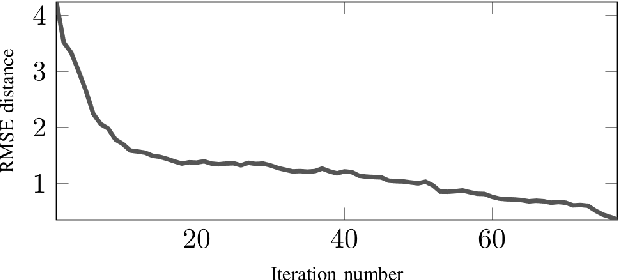

A Hybrid Rule-Based and Data-Driven Approach to Driver Modeling through Particle Filtering

Aug 29, 2021

Abstract:Autonomous vehicles need to model the behavior of surrounding human-driven vehicles to be safe and efficient traffic participants. Existing approaches to modeling human driving behavior have relied on both data-driven and rule-based methods. While data-driven models are more expressive, rule-based models are interpretable, which is an important requirement for safety-critical domains like driving. However, rule-based models are not sufficiently representative of data, and data-driven models cannot yet generate realistic traffic simulations because they produce unrealistic driving behavior such as collisions. In this paper, we propose a methodology that combines rule-based modeling with data-driven learning. While the rules are governed by interpretable parameters of the driver model, these parameters are learned online from driving demonstration data using particle filtering. We perform driver modeling experiments on the task of highway driving and merging using data from three real-world driving demonstration datasets. Our results show that driver models based on our hybrid rule-based and data-driven approach can accurately capture real-world driving behavior. Further, we assess the realism of the driving behavior generated by our model by having humans perform a driving Turing test, where they are asked to distinguish between videos of real driving and those generated using our driver models.

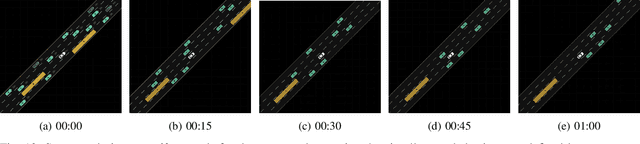

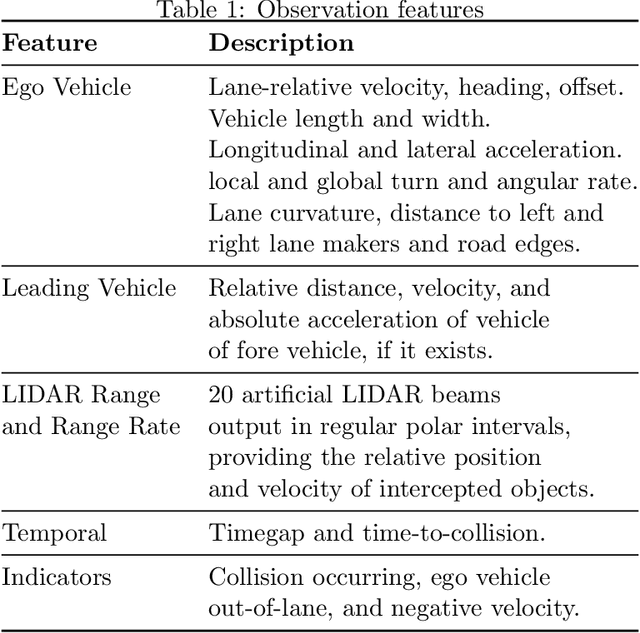

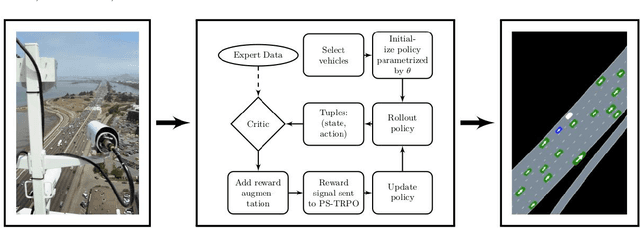

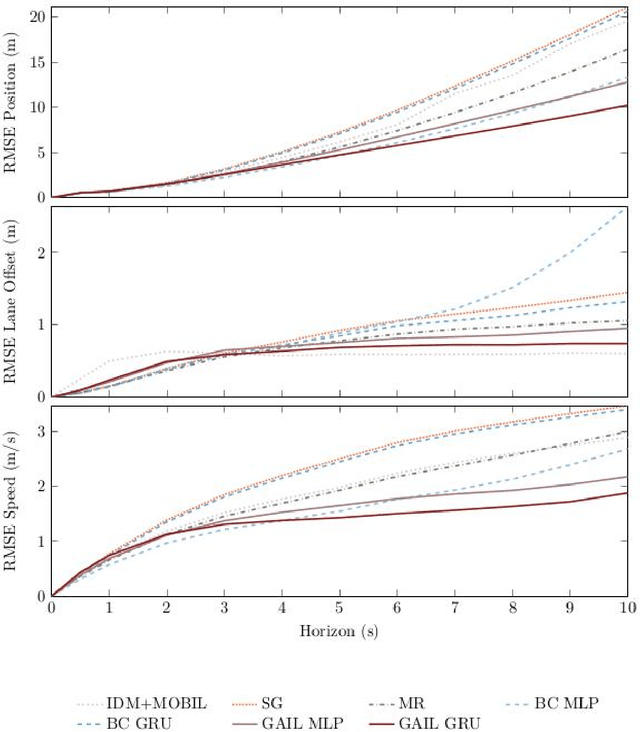

Modeling Human Driving Behavior through Generative Adversarial Imitation Learning

Jun 10, 2020

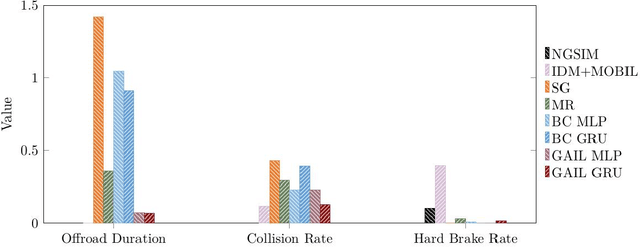

Abstract:Imitation learning is an approach for generating intelligent behavior when the cost function is unknown or difficult to specify. Building upon work in inverse reinforcement learning (IRL), Generative Adversarial Imitation Learning (GAIL) aims to provide effective imitation even for problems with large or continuous state and action spaces. Driver modeling is one example of a problem where the state and action spaces are continuous. Human driving behavior is characterized by non-linearity and stochasticity, and the underlying cost function is unknown. As a result, learning from human driving demonstrations is a promising approach for generating human-like driving behavior. This article describes the use of GAIL for learning-based driver modeling. Because driver modeling is inherently a multi-agent problem, where the interaction between agents needs to be modeled, this paper describes a parameter-sharing extension of GAIL called PS-GAIL to tackle multi-agent driver modeling. In addition, GAIL is domain agnostic, making it difficult to encode specific knowledge relevant to driving in the learning process. This paper describes Reward Augmented Imitation Learning (RAIL), which modifies the reward signal to provide domain-specific knowledge to the agent. Finally, human demonstrations are dependent upon latent factors that may not be captured by GAIL. This paper describes Burn-InfoGAIL, which allows for disentanglement of latent variability in demonstrations. Imitation learning experiments are performed using NGSIM, a real-world highway driving dataset. Experiments show that these modifications to GAIL can successfully model highway driving behavior, accurately replicating human demonstrations and generating realistic, emergent behavior in the traffic flow arising from the interaction between driving agents.

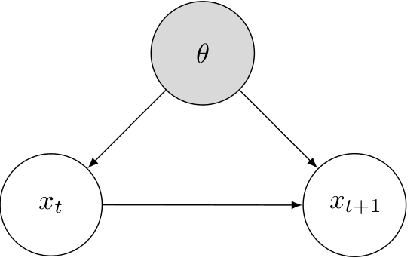

Online Parameter Estimation for Human Driver Behavior Prediction

May 06, 2020

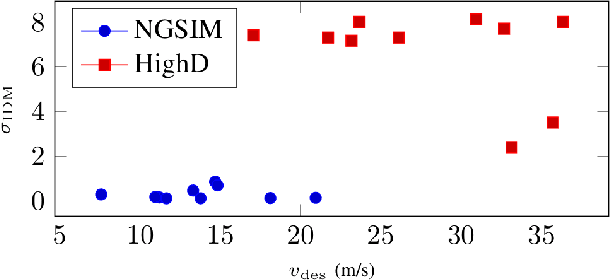

Abstract:Driver models are invaluable for planning in autonomous vehicles as well as validating their safety in simulation. Highly parameterized black-box driver models are very expressive, and can capture nuanced behavior. However, they usually lack interpretability and sometimes exhibit unrealistic, even dangerous, behavior. Rule-based models are interpretable, and can be designed to guarantee "safe" behavior, but are less expressive due to their low number of parameters. In this article, we show that online parameter estimation applied to the Intelligent Driver Model captures nuanced individual driving behavior while providing collision-free trajectories. We solve the online parameter estimation problem using particle filtering, and benchmark performance against rule-based and black-box driver models on two real-world driving data sets. We evaluate the closeness of our driver model to ground truth demonstration data and also assess the safety of the resulting emergent driving behavior.
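A rough sketch of particle filtering over Intelligent Driver Model (IDM) parameters may help make the idea concrete. It uses the standard IDM acceleration formula, but everything else is an assumption made for illustration rather than the paper's setup: only the desired speed `v0` and time headway `T` are estimated, the remaining IDM constants are fixed defaults, the observation is a noisy acceleration, and the function names are hypothetical.

```python
import math
import random


def idm_acceleration(v, v_lead, gap, v0, T, a_max=1.5, b=2.0, s0=2.0, delta=4.0):
    """Standard IDM: free-road term minus an interaction term based on the
    desired gap s_star. Units are metres, seconds, m/s, and m/s^2."""
    dv = v - v_lead  # approach rate toward the lead vehicle
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


def particle_weights(particles, observation, obs_std=0.2):
    """Gaussian likelihood of the observed acceleration under each (v0, T)."""
    v, v_lead, gap, observed_accel = observation
    weights = []
    for v0, T in particles:
        err = observed_accel - idm_acceleration(v, v_lead, gap, v0, T)
        weights.append(math.exp(-0.5 * (err / obs_std) ** 2))
    return weights


def particle_filter_step(particles, observation, rng, jitter=(0.2, 0.02)):
    """One update: weight, resample in proportion to weight, then add small
    noise so the particle set keeps some diversity."""
    weights = particle_weights(particles, observation)
    if sum(weights) == 0.0:  # every particle is implausible: fall back to uniform
        weights = [1.0] * len(particles)
    resampled = rng.choices(particles, weights=weights, k=len(particles))
    return [(max(1.0, v0 + rng.gauss(0.0, jitter[0])),
             max(0.1, T + rng.gauss(0.0, jitter[1])))
            for v0, T in resampled]


# Hypothetical demo: observations generated from a "true" driver (v0=30, T=1.5).
rng = random.Random(0)
particles = [(rng.uniform(20.0, 40.0), rng.uniform(0.5, 3.0)) for _ in range(500)]
states = [(20.0, 20.0, 40.0), (25.0, 25.0, 500.0)]  # (v, v_lead, gap)
for step in range(100):
    v, v_lead, gap = states[step % 2]
    accel = idm_acceleration(v, v_lead, gap, 30.0, 1.5) + rng.gauss(0.0, 0.1)
    particles = particle_filter_step(particles, (v, v_lead, gap, accel), rng)

mean_v0 = sum(p[0] for p in particles) / len(particles)
mean_T = sum(p[1] for p in particles) / len(particles)
print(f"estimated v0 = {mean_v0:.1f} m/s, T = {mean_T:.2f} s")
```

Because each particle is a full rule-based model, the filter keeps the interpretability of IDM while letting the parameters adapt to an individual driver as observations arrive.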
