Jeremy Morton

Modeling Human Driving Behavior through Generative Adversarial Imitation Learning

Jun 10, 2020

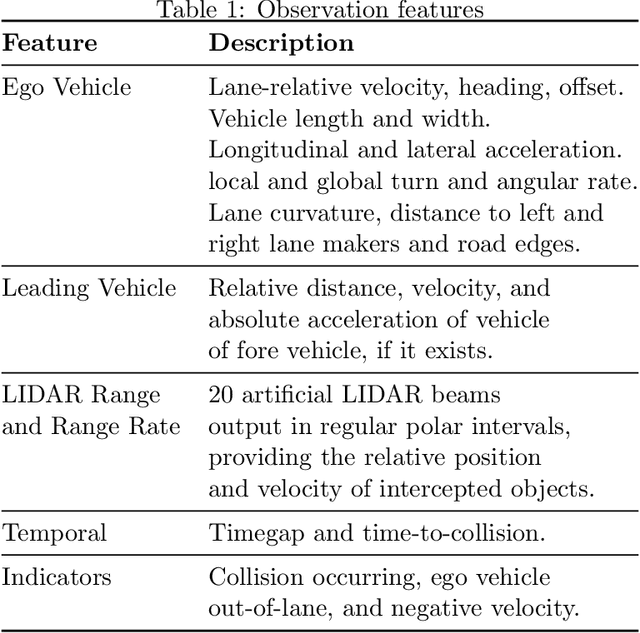

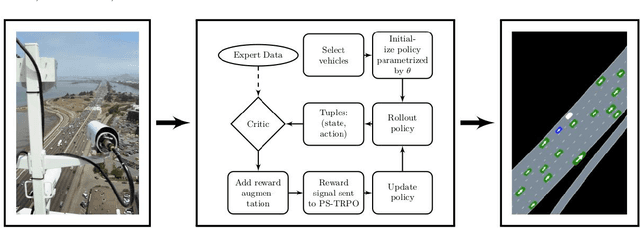

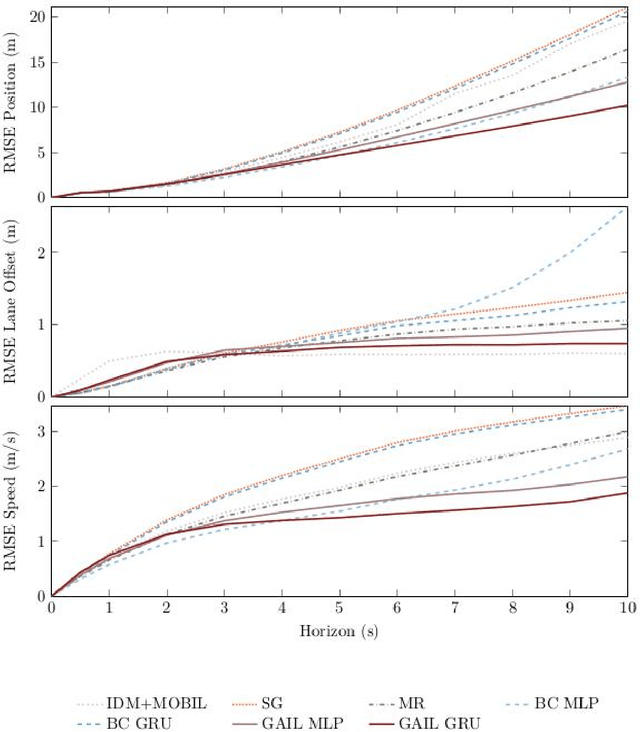

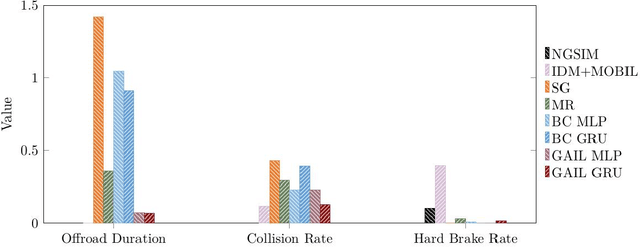

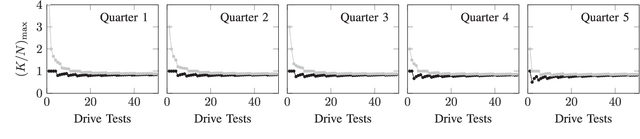

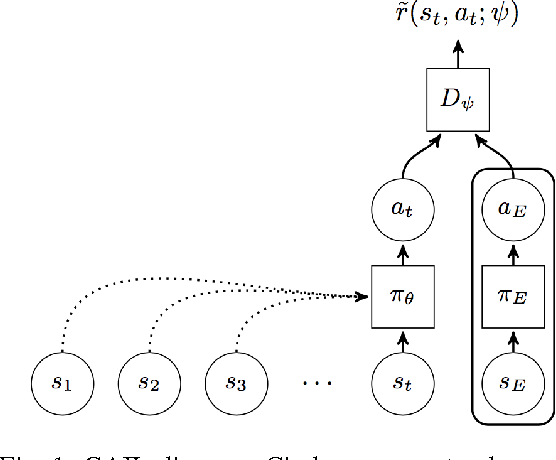

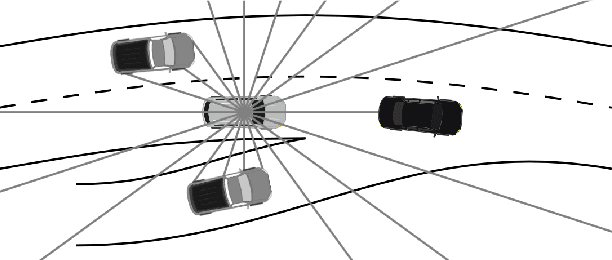

Abstract: Imitation learning is an approach for generating intelligent behavior when the cost function is unknown or difficult to specify. Building upon work in inverse reinforcement learning (IRL), Generative Adversarial Imitation Learning (GAIL) aims to provide effective imitation even for problems with large or continuous state and action spaces. Driver modeling is one example of a problem where the state and action spaces are continuous. Human driving behavior is characterized by non-linearity and stochasticity, and the underlying cost function is unknown. As a result, learning from human driving demonstrations is a promising approach for generating human-like driving behavior. This article describes the use of GAIL for learning-based driver modeling. Because driver modeling is inherently a multi-agent problem, where the interaction between agents needs to be modeled, this paper describes a parameter-sharing extension of GAIL called PS-GAIL to tackle multi-agent driver modeling. In addition, GAIL is domain agnostic, making it difficult to encode specific knowledge relevant to driving in the learning process. This paper describes Reward Augmented Imitation Learning (RAIL), which modifies the reward signal to provide domain-specific knowledge to the agent. Finally, human demonstrations are dependent upon latent factors that may not be captured by GAIL. This paper describes Burn-InfoGAIL, which allows for disentanglement of latent variability in demonstrations. Imitation learning experiments are performed using NGSIM, a real-world highway driving dataset. Experiments show that these modifications to GAIL can successfully model highway driving behavior, accurately replicating human demonstrations and generating realistic, emergent behavior in the traffic flow arising from the interaction between driving agents.

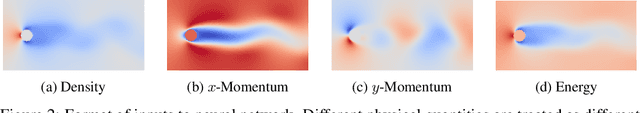

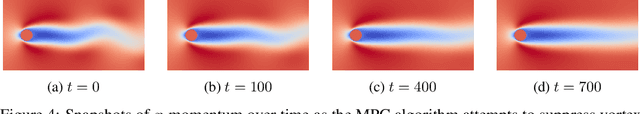

Parameter-Conditioned Sequential Generative Modeling of Fluid Flows

Dec 14, 2019

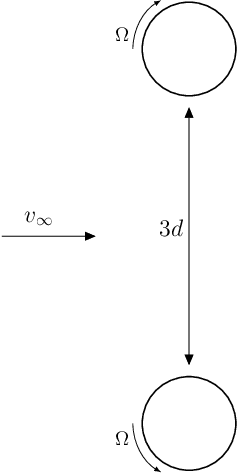

Abstract: The computational cost associated with simulating fluid flows can make it infeasible to run many simulations across multiple flow conditions. Building upon concepts from generative modeling, we introduce a new method for learning neural network models capable of performing efficient parameterized simulations of fluid flows. Evaluated on their ability to simulate both two-dimensional and three-dimensional fluid flows, trained models are shown to capture local and global properties of the flow fields at a wide array of flow conditions. Furthermore, flow simulations generated by the trained models are shown to be orders of magnitude faster than the corresponding computational fluid dynamics simulations.

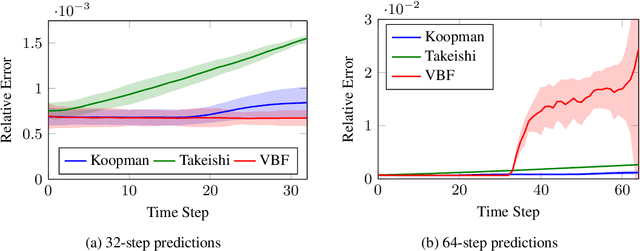

Deep Variational Koopman Models: Inferring Koopman Observations for Uncertainty-Aware Dynamics Modeling and Control

Feb 26, 2019

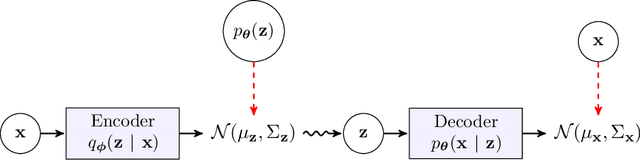

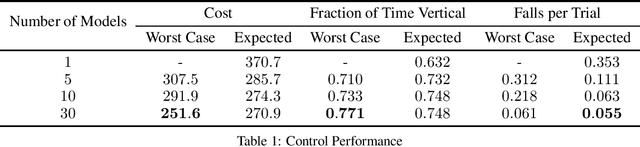

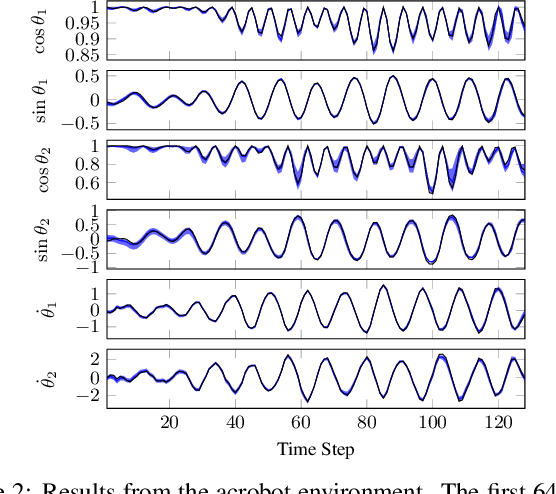

Abstract: Koopman theory asserts that a nonlinear dynamical system can be mapped to a linear system, where the Koopman operator advances observations of the state forward in time. However, the observable functions that map states to observations are generally unknown. We introduce the Deep Variational Koopman (DVK) model, a method for inferring distributions over observations that can be propagated linearly in time. By sampling from the inferred distributions, we obtain a distribution over dynamical models, which in turn provides a distribution over possible outcomes as a modeled system advances in time. Experiments show that the DVK model is effective at long-term prediction for a variety of dynamical systems. Furthermore, we describe how to incorporate the learned models into a control framework, and demonstrate that accounting for the uncertainty present in the distribution over dynamical models enables more effective control.
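The core idea of advancing observations linearly in time can be illustrated with a minimal sketch. This is not the DVK model: it assumes the observable functions are known and hand-picked, whereas the abstract stresses that they are generally unknown and must be inferred. The toy system, the constants `LAM` and `MU`, and the functions `step` and `lift` are all hypothetical choices made for illustration; the linear operator is fit by ordinary least squares.

```python
import numpy as np

LAM, MU = 0.9, 0.5  # hypothetical constants for the toy system


def step(x):
    """One step of a toy nonlinear system (illustrative, not from the paper)."""
    x1, x2 = x
    return np.array([LAM * x1, MU * x2 + (LAM**2 - MU) * x1**2])


def lift(x):
    """Hand-picked observables g(x) = [x1, x2, x1^2] (assumed known here)."""
    return np.array([x[0], x[1], x[0] ** 2])


# Collect pairs of observations (g(x_t), g(x_{t+1})) from random states.
rng = np.random.default_rng(0)
states = rng.uniform(-1.0, 1.0, size=(200, 2))
G_now = np.array([lift(x) for x in states])
G_next = np.array([lift(step(x)) for x in states])

# Fit K by least squares so that G_now @ K.T approximates G_next.
K_T, *_ = np.linalg.lstsq(G_now, G_next, rcond=None)
K = K_T.T

# For this toy system the observables evolve exactly linearly.
K_true = np.array([
    [LAM, 0.0, 0.0],
    [0.0, MU, LAM**2 - MU],
    [0.0, 0.0, LAM**2],
])

# Compare a linear rollout in observation space with the nonlinear simulation.
x = np.array([0.8, -0.3])
g = lift(x)
max_err = 0.0
for _ in range(20):
    x = step(x)   # nonlinear dynamics in state space
    g = K @ g     # linear dynamics in observation space
    max_err = max(max_err, float(np.max(np.abs(g - lift(x)))))
```

In this toy case the nonlinear term `x1**2` becomes one of the observables, so a single matrix `K` reproduces the dynamics. For most systems no such finite set of observables is known in advance, which is the gap that learned observation models aim to fill.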

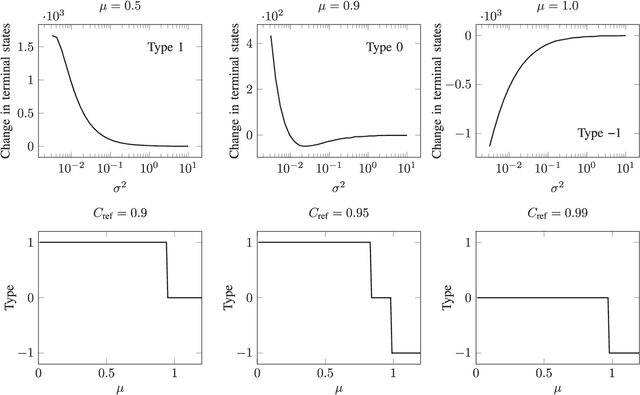

Closed-Loop Policies for Operational Tests of Safety-Critical Systems

May 19, 2018

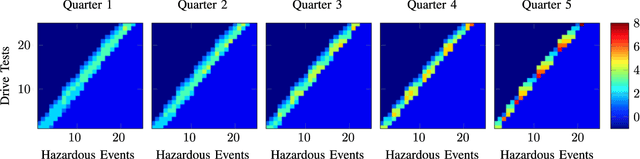

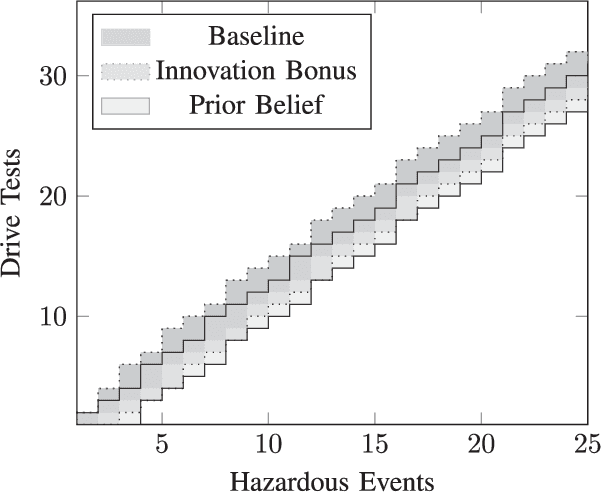

Abstract: Manufacturers of safety-critical systems must make the case that their product is sufficiently safe for public deployment. Much of this case often relies upon critical event outcomes from real-world testing, requiring manufacturers to be strategic about how they allocate testing resources in order to maximize their chances of demonstrating system safety. This work frames the partially observable and belief-dependent problem of test scheduling as a Markov decision process, which can be solved efficiently to yield closed-loop manufacturer testing policies. By solving for policies over a wide range of problem formulations, we are able to provide high-level guidance for manufacturers and regulators on issues relating to the testing of safety-critical systems. This guidance spans an array of topics, including circumstances under which manufacturers should continue testing despite observed incidents, when manufacturers should test aggressively, and when regulators should increase or reduce the real-world testing requirements for an autonomous vehicle.

Deep Dynamical Modeling and Control of Unsteady Fluid Flows

May 18, 2018

Abstract: The design of flow control systems remains a challenge due to the nonlinear nature of the equations that govern fluid flow. However, recent advances in computational fluid dynamics (CFD) have enabled the simulation of complex fluid flows with high accuracy, opening the possibility of using learning-based approaches to facilitate controller design. We present a method for learning the forced and unforced dynamics of airflow over a cylinder directly from CFD data. The proposed approach, grounded in Koopman theory, is shown to produce stable dynamical models that can predict the time evolution of the cylinder system over extended time horizons. Finally, by performing model predictive control with the learned dynamical models, we are able to find a straightforward, interpretable control law for suppressing vortex shedding in the wake of the cylinder.

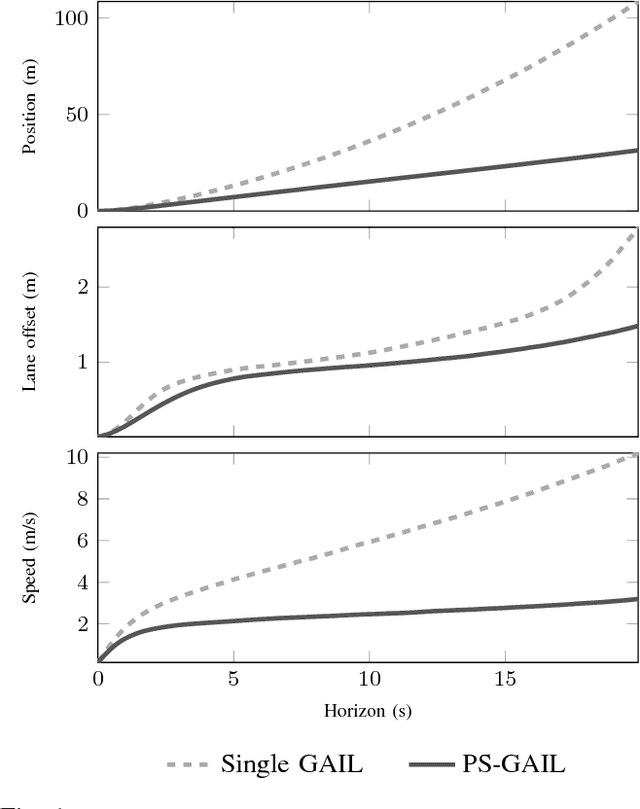

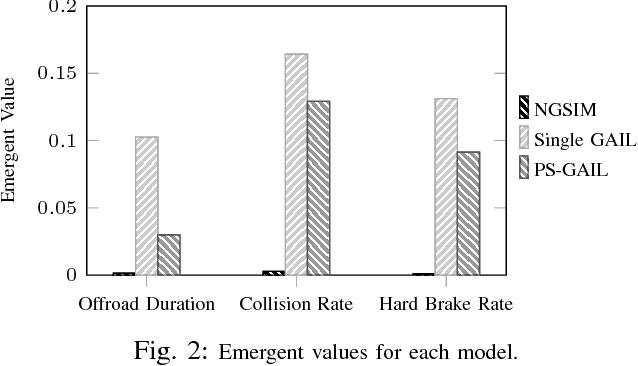

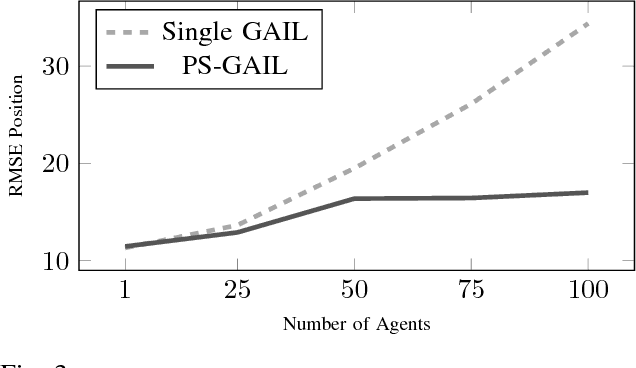

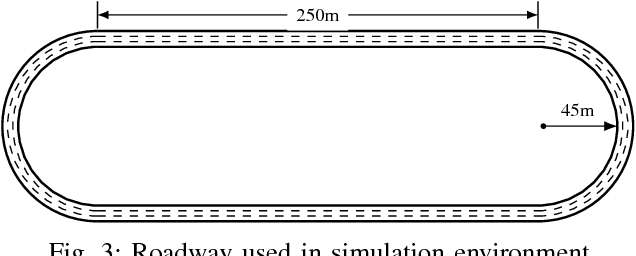

Multi-Agent Imitation Learning for Driving Simulation

Mar 02, 2018

Abstract: Simulation is an appealing option for validating the safety of autonomous vehicles. Generative Adversarial Imitation Learning (GAIL) has recently been shown to learn representative human driver models. These human driver models were learned through training in single-agent environments, but they have difficulty in generalizing to multi-agent driving scenarios. We argue these difficulties arise because observations at training and test time are sampled from different distributions. This difference makes such models unsuitable for the simulation of driving scenes, where multiple agents must interact realistically over long time horizons. We extend GAIL to address these shortcomings through a parameter-sharing approach grounded in curriculum learning. Compared with single-agent GAIL policies, policies generated by our PS-GAIL method prove superior at interacting stably in a multi-agent setting and capturing the emergent behavior of human drivers.

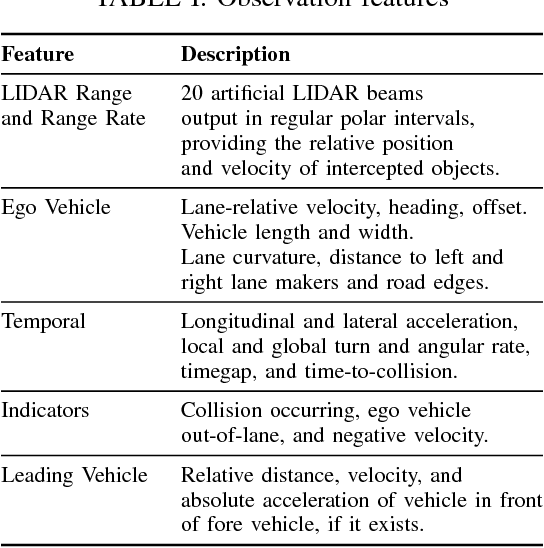

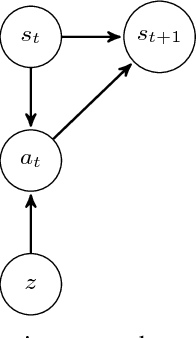

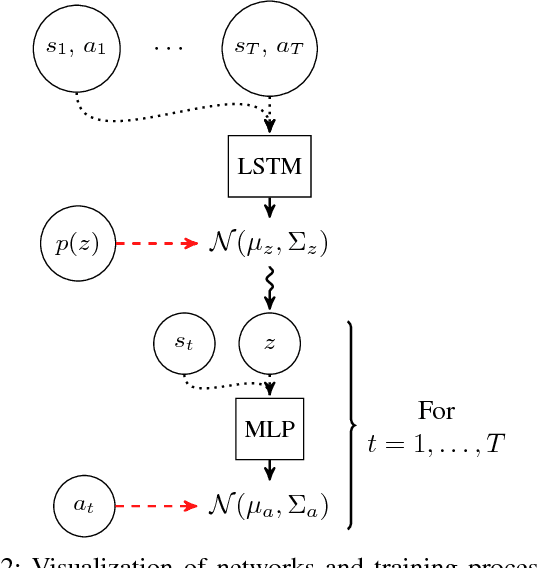

Simultaneous Policy Learning and Latent State Inference for Imitating Driver Behavior

Apr 19, 2017

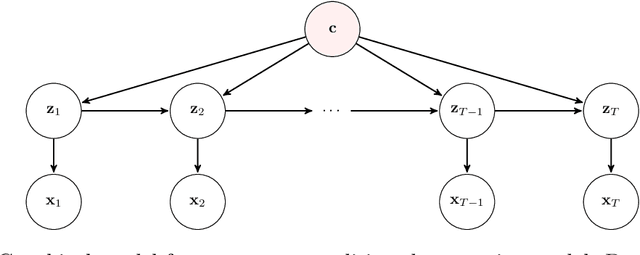

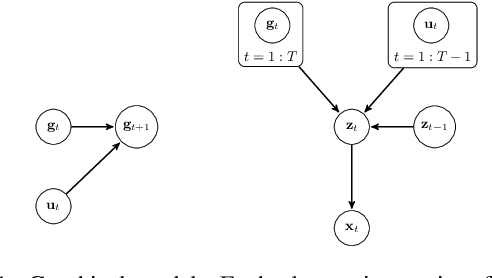

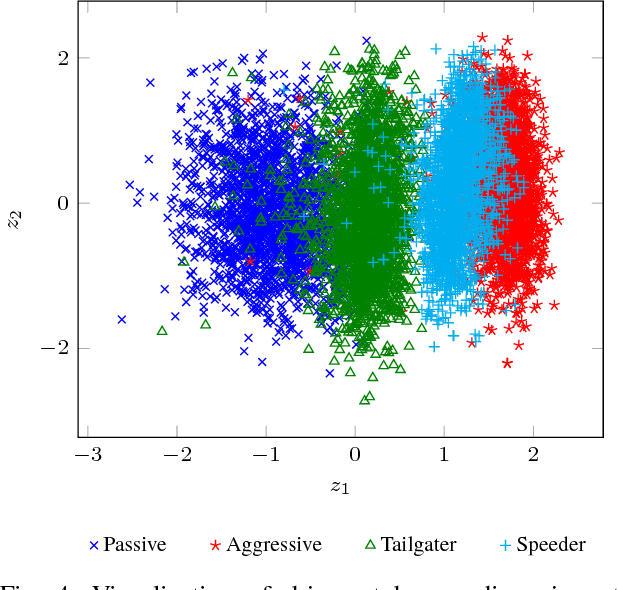

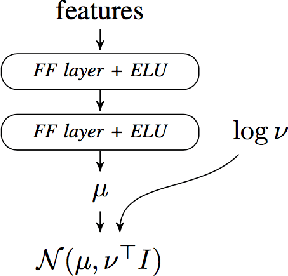

Abstract: In this work, we propose a method for learning driver models that account for variables that cannot be observed directly. When trained on a synthetic dataset, our models are able to learn encodings for vehicle trajectories that distinguish between four distinct classes of driver behavior. Such encodings are learned without any knowledge of the number of driver classes or any objective that directly requires the models to learn encodings for each class. We show that driving policies trained with knowledge of latent variables are more effective than baseline methods at imitating the driver behavior that they are trained to replicate. Furthermore, we demonstrate that the actions chosen by our policy are heavily influenced by the latent variable settings that are provided to it.

Imitating Driver Behavior with Generative Adversarial Networks

Jan 24, 2017

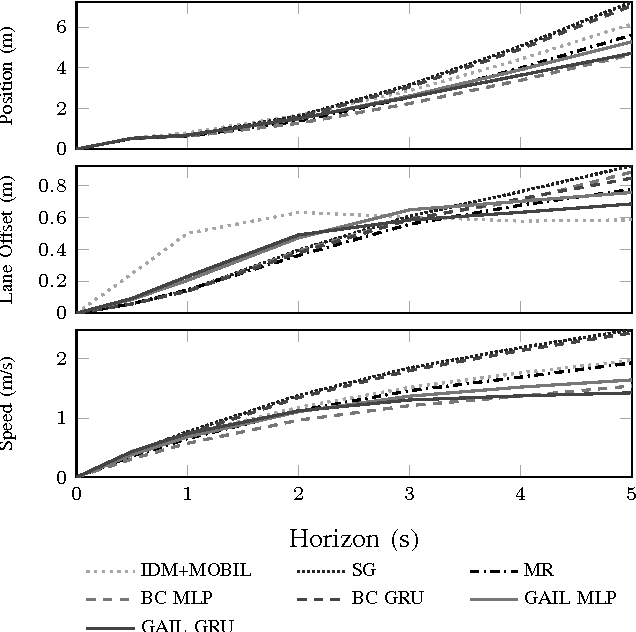

Abstract: The ability to accurately predict and simulate human driving behavior is critical for the development of intelligent transportation systems. Traditional modeling methods have employed simple parametric models and behavioral cloning. This paper adopts a method for overcoming the problem of cascading errors inherent in prior approaches, resulting in realistic behavior that is robust to trajectory perturbations. We extend Generative Adversarial Imitation Learning to the training of recurrent policies, and we demonstrate that our model outperforms rule-based controllers and maximum likelihood models in realistic highway simulations. Our model reproduces emergent behavior of human drivers, such as lane change rate, while maintaining realistic control over long time horizons.