Raphael J. L. Townshend

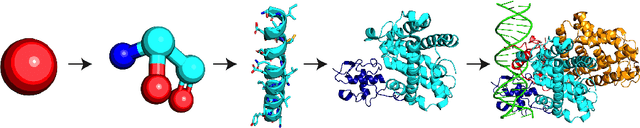

ATOM3D: Tasks On Molecules in Three Dimensions

Dec 07, 2020

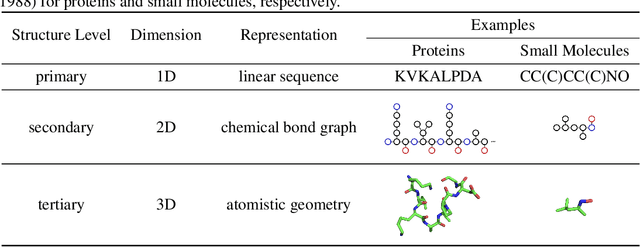

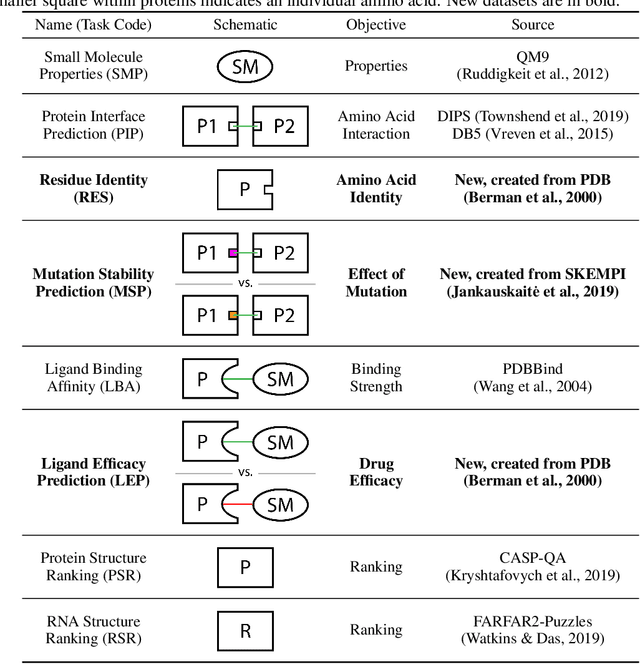

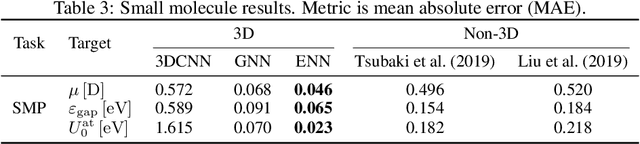

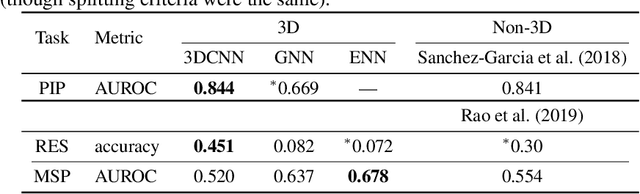

Abstract: Computational methods that operate directly on three-dimensional molecular structure hold large potential to solve important questions in biology and chemistry. In particular, deep neural networks have recently gained significant attention. In this work we present ATOM3D, a collection of both novel and existing datasets spanning several key classes of biomolecules, to systematically assess such learning methods. We develop three-dimensional molecular learning networks for each of these tasks, finding that they consistently improve performance relative to one- and two-dimensional methods. The specific choice of architecture proves to be critical for performance, with three-dimensional convolutional networks excelling at tasks involving complex geometries, while graph networks perform well on systems requiring detailed positional information. Furthermore, equivariant networks show significant promise. Our results indicate many molecular problems stand to gain from three-dimensional molecular learning. All code and datasets can be accessed via https://www.atom3d.ai.

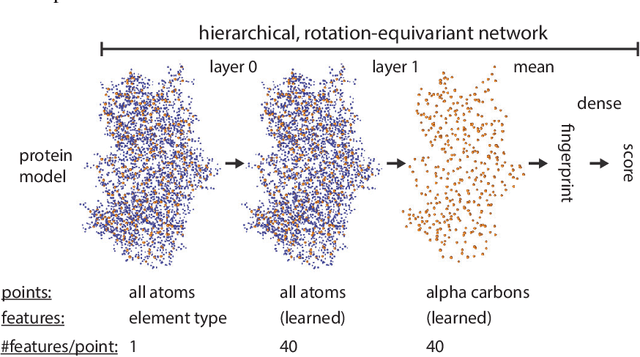

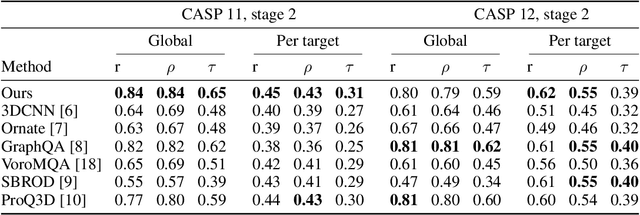

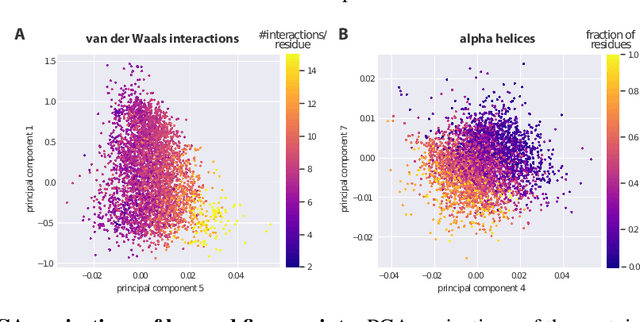

Protein model quality assessment using rotation-equivariant, hierarchical neural networks

Nov 27, 2020

Abstract: Proteins are miniature machines whose function depends on their three-dimensional (3D) structure. Determining this structure computationally remains an unsolved grand challenge. A major bottleneck involves selecting the most accurate structural model among a large pool of candidates, a task addressed in model quality assessment. Here, we present a novel deep learning approach to assess the quality of a protein model. Our network builds on a point-based representation of the atomic structure and rotation-equivariant convolutions at different levels of structural resolution. These combined aspects allow the network to learn end-to-end from entire protein structures. Our method achieves state-of-the-art results in scoring protein models submitted to recent rounds of CASP, a blind prediction community experiment. Particularly striking is that our method does not use physics-inspired energy terms and does not rely on the availability of additional information (beyond the atomic structure of the individual protein model), such as sequence alignments of multiple proteins.

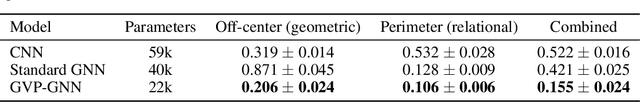

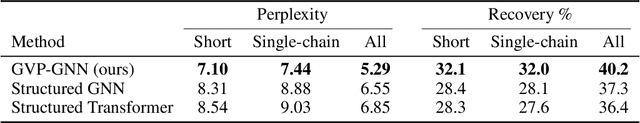

Learning from Protein Structure with Geometric Vector Perceptrons

Sep 03, 2020

Abstract: Learning on 3D structures of large biomolecules is emerging as a distinct area in machine learning, but there has yet to emerge a unifying network architecture that simultaneously leverages the graph-structured and geometric aspects of the problem domain. To address this gap, we introduce geometric vector perceptrons, which extend standard dense layers to operate on collections of Euclidean vectors. Graph neural networks equipped with such layers are able to perform both geometric and relational reasoning on efficient and natural representations of macromolecular structure. We demonstrate our approach on two important problems in learning from protein structure: model quality assessment and computational protein design. Our approach improves over existing classes of architectures, including state-of-the-art graph-based and voxel-based methods.
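
To make the idea of a dense layer that operates on collections of Euclidean vectors more concrete, the following is a minimal sketch in plain Python. It is an illustration only, not the paper's implementation: the function name `gvp_like_layer`, the weight names `W_h`, `W_mu`, `W_m`, `b`, and the choice of ReLU and sigmoid nonlinearities are all assumptions made for this example. The key property it shows is that mixing vector channels linearly and using only vector norms for the scalar path keeps scalar outputs invariant, and vector outputs equivariant, under rotation of the input.

```python
import math


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def row_norms(V):
    """Euclidean norm of each 3D vector (row) in V."""
    return [math.sqrt(sum(x * x for x in v)) for v in V]


def gvp_like_layer(s, V, W_h, W_mu, W_m, b):
    """Hypothetical vector-aware dense layer (illustrative assumption).

    s: list of n scalar features; V: list of v vectors, each of length 3.
    W_h: (h x v), W_mu: (mu x h), W_m: (m x (h + n)), b: list of m biases.
    Returns (scalar outputs, vector outputs).
    """
    V_h = matmul(W_h, V)        # mix vector channels; commutes with rotation
    V_mu = matmul(W_mu, V_h)    # second channel mix, producing output vectors
    feats = row_norms(V_h) + list(s)  # norms are rotation-invariant scalars
    s_out = [
        max(0.0, sum(w * f for w, f in zip(row, feats)) + b_i)  # ReLU
        for row, b_i in zip(W_m, b)
    ]
    # Scale each output vector by a sigmoid of its own norm, so the
    # nonlinearity changes lengths but never directions.
    gates = [1.0 / (1.0 + math.exp(-n)) for n in row_norms(V_mu)]
    V_out = [[g * x for x in v] for g, v in zip(gates, V_mu)]
    return s_out, V_out


def rotation_matrix(axis, theta):
    """Rotation by angle theta about the given axis (Rodrigues' formula)."""
    n = math.sqrt(sum(a * a for a in axis))
    x, y, z = (a / n for a in axis)
    c, s, C = math.cos(theta), math.sin(theta), 1.0 - math.cos(theta)
    return [
        [c + x * x * C, x * y * C - z * s, x * z * C + y * s],
        [y * x * C + z * s, c + y * y * C, y * z * C - x * s],
        [z * x * C - y * s, z * y * C + x * s, c + z * z * C],
    ]


def rotate(V, R):
    """Apply rotation matrix R to every 3D vector (row) in V."""
    return [[sum(R[i][j] * v[j] for j in range(3)) for i in range(3)] for v in V]
```

Under these assumptions, calling `gvp_like_layer` on `rotate(V, R)` should return the same scalars as calling it on `V`, and vectors equal to the rotated original outputs, which is the behavior one would want from a layer that reasons about geometry without depending on the coordinate frame.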

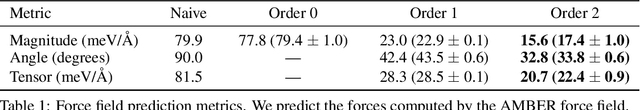

Geometric Prediction: Moving Beyond Scalars

Jun 25, 2020

Abstract: Many quantities we are interested in predicting are geometric tensors; we refer to this class of problems as geometric prediction. Attempts to perform geometric prediction in real-world scenarios have been limited to approximating these tensors through scalar predictions, leading to losses in data efficiency. In this work, we demonstrate that equivariant networks have the capability to predict real-world geometric tensors without the need for such approximations. We show the applicability of this method to the prediction of force fields and then propose a novel formulation of an important task, biomolecular structure refinement, as a geometric prediction problem, improving state-of-the-art structural candidates. In both settings, we find that our equivariant network is able to generalize to unseen systems, despite having been trained on small sets of examples. This novel and data-efficient ability to predict real-world geometric tensors opens the door to addressing many problems through the lens of geometric prediction, in areas such as 3D vision, robotics, and molecular and structural biology.
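
As a rough illustration of what it means to predict a geometric quantity directly rather than through scalars, the toy sketch below outputs one 3D vector per point (for example, a force-like quantity) as a distance-weighted sum of relative position vectors. This is not the network described in the abstract; the function `predict_vectors` and its `radial` weighting function are hypothetical names chosen for this example. Because the output is built only from relative positions and distances, rotating the input rotates the output in the same way, and translating the input leaves the output unchanged.

```python
import math


def predict_vectors(positions, radial=lambda d: math.exp(-d)):
    """Toy equivariant predictor (illustrative assumption, not a trained model).

    For each point i, returns sum over j != i of radial(|r_j - r_i|) * (r_j - r_i).
    `radial` stands in for a learned function of distance.
    """
    out = []
    for i, p in enumerate(positions):
        acc = [0.0, 0.0, 0.0]
        for j, q in enumerate(positions):
            if i == j:
                continue
            rel = [q[k] - p[k] for k in range(3)]     # translation-invariant
            dist = math.sqrt(sum(x * x for x in rel))  # rotation-invariant
            w = radial(dist)
            for k in range(3):
                acc[k] += w * rel[k]
        out.append(acc)
    return out


def rotation_matrix(axis, theta):
    """Rotation by angle theta about the given axis (Rodrigues' formula)."""
    n = math.sqrt(sum(a * a for a in axis))
    x, y, z = (a / n for a in axis)
    c, s, C = math.cos(theta), math.sin(theta), 1.0 - math.cos(theta)
    return [
        [c + x * x * C, x * y * C - z * s, x * z * C + y * s],
        [y * x * C + z * s, c + y * y * C, y * z * C - x * s],
        [z * x * C - y * s, z * y * C + x * s, c + z * z * C],
    ]


def rotate(points, R):
    """Apply rotation matrix R to every 3D point."""
    return [[sum(R[i][j] * p[j] for j in range(3)) for i in range(3)] for p in points]
```

A quick way to check the property is to compare `rotate(predict_vectors(pts), R)` with `predict_vectors(rotate(pts, R))` for some points `pts` and rotation `R`; under the assumptions above the two should agree up to floating-point error.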

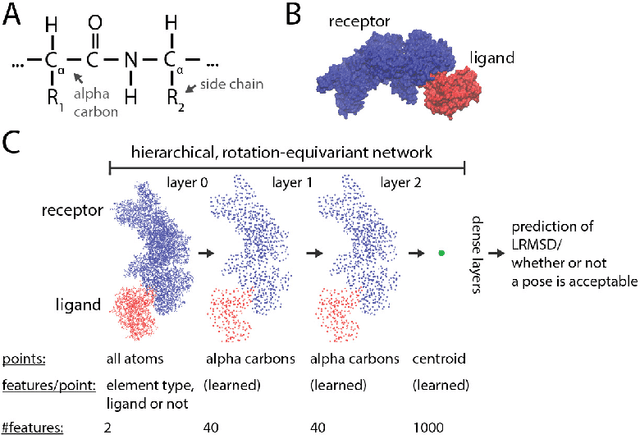

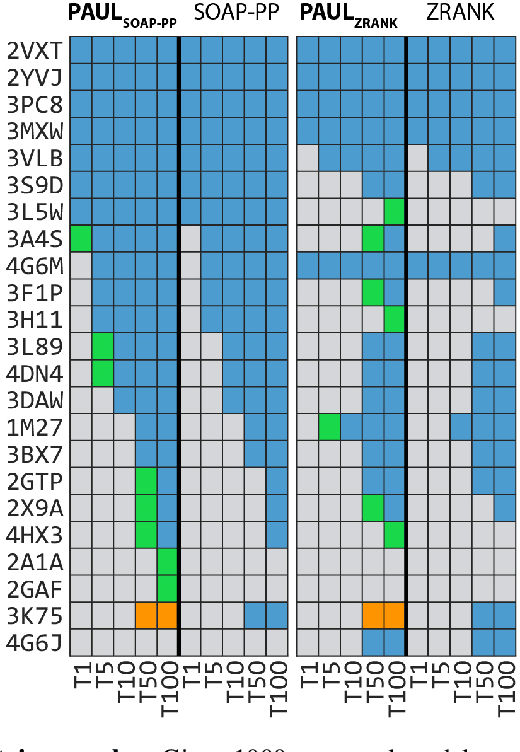

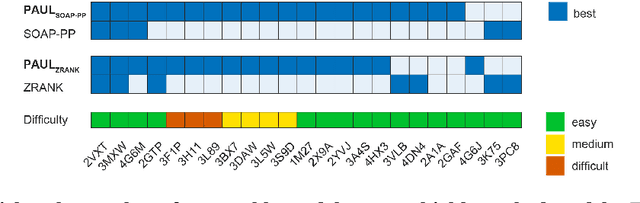

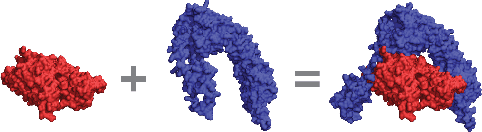

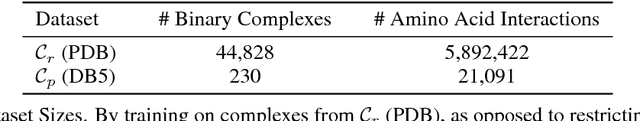

Hierarchical, rotation-equivariant neural networks to predict the structure of protein complexes

Jun 05, 2020

Abstract: Predicting the structure of multi-protein complexes is a grand challenge in biochemistry, with major implications for basic science and drug discovery. Computational structure prediction methods generally leverage pre-defined structural features to distinguish accurate structural models from less accurate ones. This raises the question of whether it is possible to learn characteristics of accurate models directly from atomic coordinates of protein complexes, with no prior assumptions. Here we introduce a machine learning method that learns directly from the 3D positions of all atoms to identify accurate models of protein complexes, without using any pre-computed physics-inspired or statistical terms. Our neural network architecture combines multiple ingredients that together enable end-to-end learning from molecular structures containing tens of thousands of atoms: a point-based representation of atoms, equivariance with respect to rotation and translation, local convolutions, and hierarchical subsampling operations. When used in combination with previously developed scoring functions, our network substantially improves the identification of accurate structural models among a large set of possible models. Our network can also be used to predict the accuracy of a given structural model in absolute terms. The architecture we present is readily applicable to other tasks involving learning on 3D structures of large atomic systems.

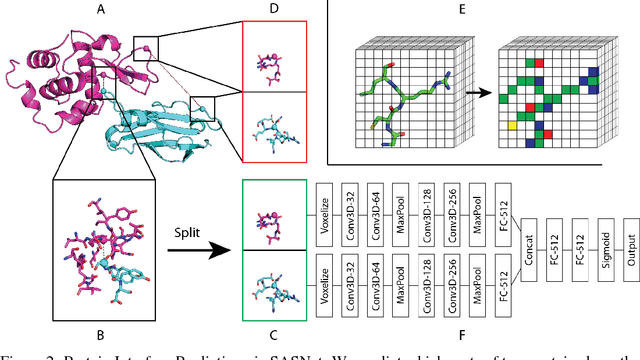

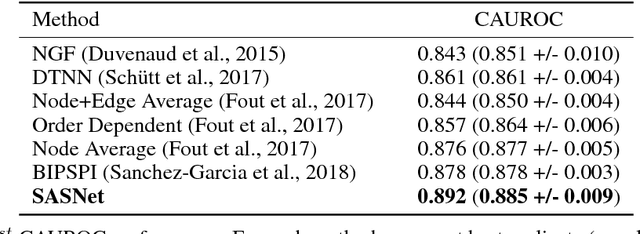

Generalizable Protein Interface Prediction with End-to-End Learning

Sep 21, 2018

Abstract: Predicting how proteins interact with one another - that is, which surfaces of one protein bind to which surfaces of another protein - is a central problem in biology. Here we present Siamese Atomic Surfacelet Network (SASNet), the first end-to-end learning method for protein interface prediction. Despite using only spatial coordinates and identities of atoms as inputs, SASNet outperforms state-of-the-art methods that rely on complex, hand-selected features. These results are particularly striking because we train the method entirely on a significantly biased data set that does not account for the fact that proteins deform when binding to one another. Nonetheless, our network maintains high performance, without retraining, when tested on real cases in which proteins do deform. This suggests that it has learned fundamental properties of protein structure and dynamics, which has important implications for a variety of key problems related to biomolecular structure.
