Qiuying Li

Exploring Few-Shot Defect Segmentation in General Industrial Scenarios with Metric Learning and Vision Foundation Models

Feb 03, 2025

Abstract: Industrial defect segmentation is critical for manufacturing quality control. Due to the scarcity of training defect samples, few-shot semantic segmentation (FSS) holds significant value in this field. However, existing studies mostly apply FSS to tackle defects on simple textures, without considering more diverse scenarios. This paper aims to address this gap by exploring FSS in broader industrial products with various defect types. To this end, we contribute a new real-world dataset and reorganize some existing datasets to build a more comprehensive few-shot defect segmentation (FDS) benchmark. On this benchmark, we thoroughly investigate metric learning-based FSS methods, including those based on meta-learning and those based on Vision Foundation Models (VFMs). We observe that existing meta-learning-based methods are generally not well-suited for this task, while VFMs hold great potential. We further systematically study the applicability of various VFMs in this task, involving two paradigms: feature matching and the use of Segment Anything (SAM) models. We propose a novel efficient FDS method based on feature matching. Meanwhile, we find that SAM2 is particularly effective for addressing FDS through its video track mode. The contributed dataset and code will be available at: https://github.com/liutongkun/GFDS.
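As a rough illustration of the feature-matching paradigm mentioned in this abstract, the sketch below shows generic prototype matching: the support features under the defect mask are averaged into a prototype, and each query location is labeled by its cosine similarity to that prototype. This is not the method proposed in the paper. The function names, the toy 2-dimensional feature vectors, and the 0.8 threshold are all assumptions made for illustration; in practice the features would come from a frozen vision backbone.

```python
import math

def masked_average(features, mask):
    """Average the support feature vectors at locations where mask == 1."""
    dim = len(features[0][0])
    total, count = [0.0] * dim, 0
    for feat_row, mask_row in zip(features, mask):
        for vec, m in zip(feat_row, mask_row):
            if m:
                total = [t + v for t, v in zip(total, vec)]
                count += 1
    if count == 0:
        raise ValueError("support mask contains no defect locations")
    return [t / count for t in total]

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def match_query(query_features, prototype, threshold=0.8):
    """Label each query location 1 (defect) if it is similar enough to the prototype."""
    return [
        [1 if cosine(vec, prototype) >= threshold else 0 for vec in row]
        for row in query_features
    ]

# Toy example: a 2x2 support feature map with its defect mask (hypothetical values).
support = [[[1.0, 0.0], [0.0, 1.0]],
           [[1.0, 0.1], [0.0, 1.0]]]
support_mask = [[1, 0],
                [1, 0]]
prototype = masked_average(support, support_mask)

# A 1x2 query feature map: the first location resembles the defect prototype.
query = [[[0.9, 0.1], [0.1, 0.9]]]
print(match_query(query, prototype))  # [[1, 0]]
```

A single averaged prototype is the simplest choice; it discards spatial detail, which is one reason practical methods often match against many support features instead.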

3D Brainformer: 3D Fusion Transformer for Brain Tumor Segmentation

Apr 28, 2023

Abstract: Magnetic resonance imaging (MRI) is critically important for brain mapping in both scientific research and clinical studies. Precise segmentation of brain tumors facilitates clinical diagnosis, evaluation, and surgical planning. Deep learning has recently been applied to brain tumor segmentation and has achieved impressive results. Convolutional architectures are widely used to implement these neural networks. Because of their limited receptive fields, however, these architectures struggle to represent long-range spatial dependencies of the voxel intensities in MRI images. Transformers have recently been leveraged to address this limitation of convolutional networks. Unfortunately, the majority of current Transformer-based segmentation methods operate on 2D MRI slices instead of 3D volumes. Moreover, it is difficult to incorporate the structures between layers because each head is calculated independently in the Multi-Head Self-Attention (MHSA) mechanism. In this work, we proposed a 3D Transformer-based segmentation approach. We developed a Fusion-Head Self-Attention (FHSA) mechanism to combine the attention heads through attention logic and weight mapping, for the exploration of the long-range spatial dependencies in 3D MRI images. We implemented a plug-and-play self-attention module, named the Infinite Deformable Fusion Transformer Module (IDFTM), to extract features on any deformable feature maps. We applied our approach to the task of brain tumor segmentation and assessed it on the public BRATS datasets. The experimental results demonstrated that our proposed approach achieved superior performance in comparison to several state-of-the-art segmentation methods.

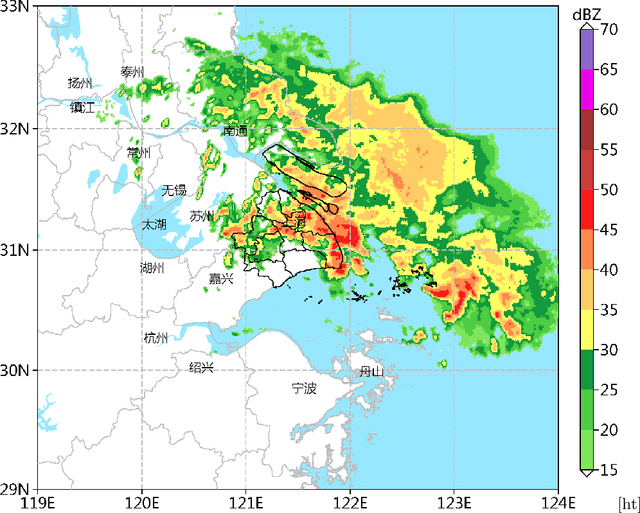

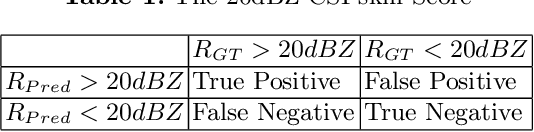

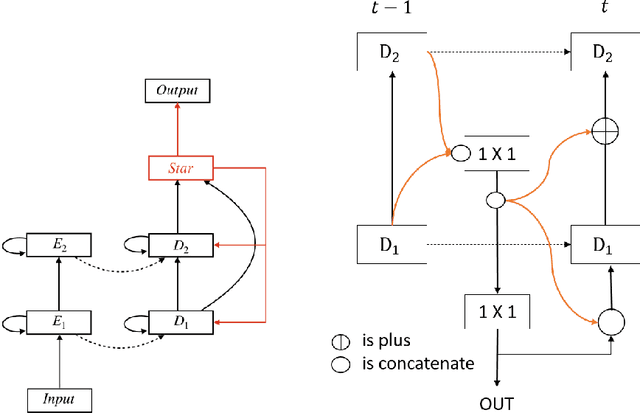

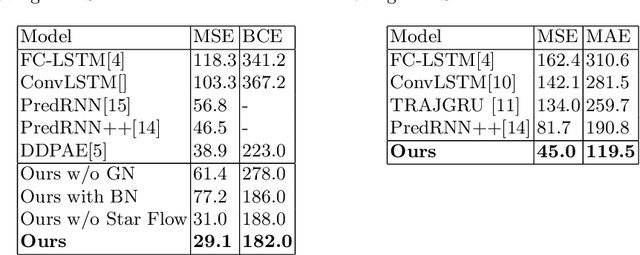

Video Prediction for Precipitation Nowcasting

Jul 18, 2019

Abstract: Video prediction aims to synthesize new consecutive frames subsequent to an existing video. However, its performance suffers from the uncertainty of the future. As a potential weather application of video prediction, short-term precipitation nowcasting is more challenging than other tasks because its uncertainty is strongly influenced by temperature, atmospheric conditions, wind, humidity, and similar factors. To address this issue, we propose a star-bridge neural network (StarBriNet). Specifically, we first construct a simple yet effective star-shaped information bridge for RNNs to transfer features across time steps. We also propose a novel loss function designed for the precipitation nowcasting task. Furthermore, we utilize group normalization to refine the predictive performance of our network. Experiments on a Moving-Digital dataset and a weather prediction dataset demonstrate that our model outperforms state-of-the-art algorithms for video prediction and precipitation nowcasting, achieving satisfactory weather forecasting performance.

Transfer-Learning Oriented Class Imbalance Learning for Cross-Project Defect Prediction

Jan 24, 2019

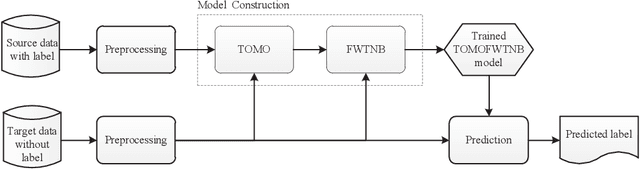

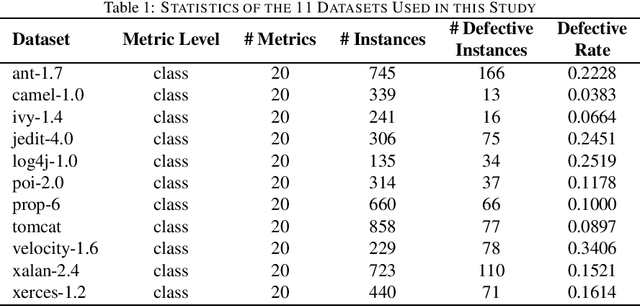

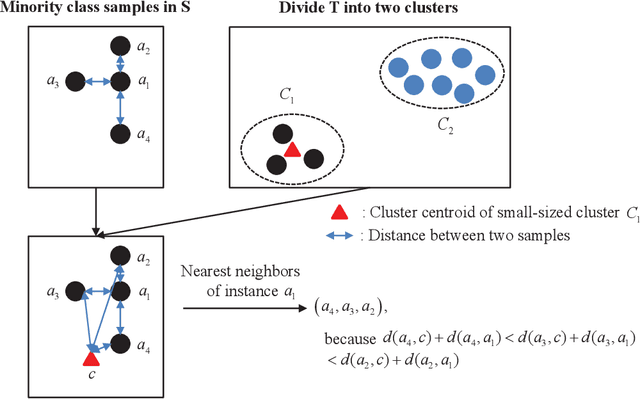

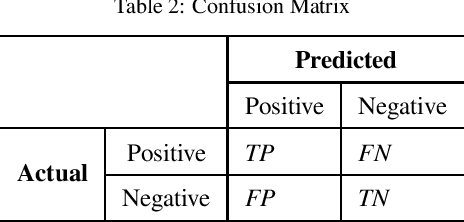

Abstract: Cross-project defect prediction (CPDP) aims to predict defects in projects lacking training data by using prediction models trained on historical defect data from other projects. However, because of the distribution differences between datasets from different projects, it is still a challenge to build high-quality CPDP models. Moreover, the class-imbalanced nature of software defect datasets further increases the difficulty. In this paper, we propose a transfer-learning oriented minority over-sampling technique (TOMO) based feature weighting transfer naive Bayes (FWTNB) approach (TOMOFWTNB) for CPDP that considers both the class-imbalance and feature-importance problems. Unlike traditional over-sampling techniques, TOMO not only balances the data but also reduces the distribution difference. FWTNB is then used to further increase the similarity of the two distributions. Experiments are performed on 11 public defect datasets. The experimental results show that (1) TOMO improves the average G-Measure by 23.7% to 41.8% and the average MCC by 54.2% to 77.8%; (2) the feature weighting (FW) strategy improves the average G-Measure by 11% and the average MCC by 29.2%; (3) TOMOFWTNB improves the average G-Measure by at least 27.8% and the average MCC by at least 71.5% compared with existing state-of-the-art CPDP approaches. It can be concluded that (1) TOMO is very effective for addressing the class-imbalance problem in the CPDP scenario; (2) our FW strategy is helpful for CPDP; (3) TOMOFWTNB outperforms previous state-of-the-art CPDP approaches.
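For readers unfamiliar with the two metrics reported in this abstract, the sketch below computes them from a binary confusion matrix. It assumes the definition of G-Measure common in the defect prediction literature, the harmonic mean of recall (pd) and specificity (1 - pf); the abstract itself does not state the formula, so treat that as an assumption. MCC follows its standard definition. The function names and the example counts are hypothetical.

```python
import math

def g_measure(tp, fp, tn, fn):
    """Harmonic mean of recall (pd) and specificity (1 - pf); assumed definition."""
    pd = tp / (tp + fn) if (tp + fn) else 0.0    # probability of detection
    spec = tn / (tn + fp) if (tn + fp) else 0.0  # 1 - probability of false alarm
    return 2 * pd * spec / (pd + spec) if (pd + spec) else 0.0

def mcc(tp, fp, tn, fn):
    """Matthews correlation coefficient; returns 0.0 when the denominator is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def accuracy(tp, fp, tn, fn):
    """Fraction of all modules classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)

# Hypothetical imbalanced project: 10 defective modules, 90 clean ones.
always_clean = dict(tp=0, fp=0, tn=90, fn=10)  # predicts "clean" for every module
useful_model = dict(tp=8, fp=18, tn=72, fn=2)  # finds most defects, some false alarms

for name, counts in [("always clean", always_clean), ("useful model", useful_model)]:
    print(name, accuracy(**counts), g_measure(**counts), round(mcc(**counts), 3))
```

In this toy case the trivial "always clean" predictor reaches higher accuracy (0.9 versus 0.8) yet scores 0 on both G-Measure and MCC, which illustrates why these metrics are preferred over accuracy on class-imbalanced defect data.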
