Qiang Heng

Exploring Vision-Language Models for Imbalanced Learning

Apr 04, 2023

Abstract:Vision-Language models (VLMs) that use contrastive language-image pre-training have shown promising zero-shot classification performance. However, they perform relatively poorly on imbalanced datasets, where the class distribution of the training data is skewed and minority classes are predicted poorly. For instance, CLIP achieved only 5% accuracy on the iNaturalist18 dataset. We propose adding a lightweight decoder to VLMs to avoid the out-of-memory (OOM) problem caused by a large number of classes and to capture nuanced features for tail classes. We then explore improving VLMs through prompt tuning, fine-tuning, and imbalanced learning algorithms such as Focal Loss, Balanced SoftMax, and Distribution Alignment. Experiments demonstrate that the performance of VLMs can be further boosted when they are used with the decoder and imbalanced methods. Specifically, our improved VLMs significantly outperform zero-shot classification, by an average accuracy of 6.58%, 69.82%, and 6.17% on ImageNet-LT, iNaturalist18, and Places-LT, respectively. We further analyze the influence of pre-training data size, backbones, and training cost. Our study highlights the significance of imbalanced learning algorithms in the face of VLMs pre-trained on huge data. We release our code at https://github.com/Imbalance-VLM/Imbalance-VLM.
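Two of the imbalanced learning losses named in the abstract can be illustrated for a single sample. The sketch below is not taken from the paper's repository; it follows the commonly published forms of Balanced Softmax (shift each logit by the log of its class prior before cross-entropy) and Focal Loss (down-weight well-classified samples), and the function names and the `class_counts` argument are hypothetical choices made for this example.

```python
import math

def log_softmax(logits):
    # Numerically stable log-softmax over a list of floats.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def balanced_softmax_loss(logits, label, class_counts):
    # Assumed form: add log(class prior) to each logit, then apply
    # standard cross-entropy. Head classes get a larger additive shift,
    # so the model must produce a larger margin to predict a tail class
    # during training.
    total = sum(class_counts)
    adjusted = [z + math.log(n / total) for z, n in zip(logits, class_counts)]
    return -log_softmax(adjusted)[label]

def focal_loss(logits, label, gamma=2.0):
    # Assumed form: cross-entropy scaled by (1 - p_true) ** gamma, which
    # shrinks the loss of samples the model already classifies confidently.
    log_p = log_softmax(logits)[label]
    p = math.exp(log_p)
    return -((1.0 - p) ** gamma) * log_p

# A tail-class sample (1 training example vs. 99) with uninformative logits
# is penalized more heavily than it would be under a balanced prior.
tail = balanced_softmax_loss([0.0, 0.0], label=1, class_counts=[99, 1])
even = balanced_softmax_loss([0.0, 0.0], label=1, class_counts=[50, 50])
print(tail > even)
```

With equal class counts the balanced variant reduces to ordinary cross-entropy, and with `gamma=0` the focal variant does the same, which makes both easy to check against a baseline.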

FreeMatch: Self-adaptive Thresholding for Semi-supervised Learning

May 15, 2022

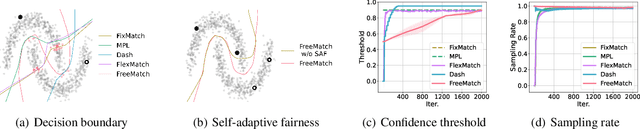

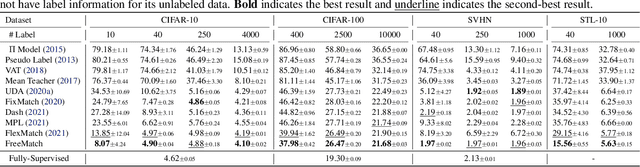

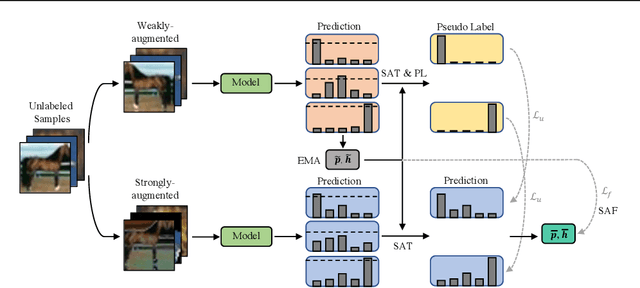

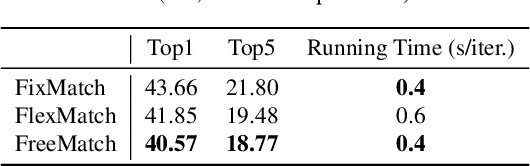

Abstract:Pseudo labeling and consistency regularization approaches with confidence-based thresholding have made great progress in semi-supervised learning (SSL). In this paper, we theoretically and empirically analyze the relationship between the unlabeled data distribution and the desirable confidence threshold. Our analysis shows that previous methods might fail to define a favorable threshold, since they require either a pre-defined (fixed) threshold or an ad-hoc threshold adjusting scheme that does not reflect the learning effect well, resulting in inferior performance and slow convergence, especially for complicated unlabeled data distributions. We hence propose FreeMatch to define and adjust the confidence threshold in a self-adaptive manner according to the model's learning status. To handle complicated unlabeled data distributions more effectively, we further propose a self-adaptive class fairness regularization method that encourages the model to produce diverse predictions during training. Extensive experimental results indicate the superiority of FreeMatch, especially when labeled data are extremely rare. FreeMatch achieves 5.78%, 13.59%, and 1.28% error rate reduction over the latest state-of-the-art method FlexMatch on CIFAR-10 with 1 label per class, STL-10 with 4 labels per class, and ImageNet with 100k labels, respectively.
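The idea of a confidence threshold that follows the model's learning status can be sketched in a few lines. This is a simplified illustration, not FreeMatch's actual rule, which the abstract does not spell out: it assumes the threshold is an exponential moving average (EMA) of the model's mean top-1 confidence on unlabeled batches, and the class name `AdaptiveThreshold`, the `momentum` parameter, and the `1 / num_classes` starting value are all hypothetical choices for this example.

```python
class AdaptiveThreshold:
    """EMA of mean top-1 confidence, used as a pseudo-label threshold.

    Assumption for illustration only: the threshold starts at chance
    level (1 / num_classes) and rises as the model grows more confident.
    """

    def __init__(self, num_classes, momentum=0.999):
        self.momentum = momentum
        self.value = 1.0 / num_classes

    def update(self, batch_probs):
        # batch_probs: list of per-sample class probability lists.
        mean_conf = sum(max(p) for p in batch_probs) / len(batch_probs)
        self.value = (self.momentum * self.value
                      + (1.0 - self.momentum) * mean_conf)
        return self.value


def pseudo_labels(batch_probs, threshold):
    # Keep the argmax class for confident samples; None means the sample
    # is masked out of the unsupervised loss for this step.
    labels = []
    for p in batch_probs:
        conf = max(p)
        labels.append(p.index(conf) if conf >= threshold else None)
    return labels


thr = AdaptiveThreshold(num_classes=2, momentum=0.5)
tau = thr.update([[0.9, 0.1], [0.7, 0.3]])
print(pseudo_labels([[0.9, 0.1], [0.6, 0.4]], tau))
```

Compared with a fixed threshold such as 0.95, a threshold of this kind admits more pseudo labels early in training, when the model is still unconfident, and becomes stricter later.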
