Poramate Manoonpong

Growable and Interpretable Neural Control with Online Continual Learning for Autonomous Lifelong Locomotion Learning Machines

May 17, 2025

Abstract:Continual locomotion learning faces four challenges: incomprehensibility, sample inefficiency, lack of knowledge exploitation, and catastrophic forgetting. Thus, this work introduces Growable Online Locomotion Learning Under Multicondition (GOLLUM), which exploits interpretability to address these challenges. GOLLUM has two dimensions of interpretability: layer-wise interpretability for neural control function encoding and column-wise interpretability for robot skill encoding. With this interpretable control structure, GOLLUM utilizes neurogenesis to add columns (ring-like networks) incrementally and without supervision; each column is trained separately to encode and maintain a specific primary robot skill. GOLLUM also transfers the parameters to new skills and supplements the learned combination of acquired skills through an additional neural mapping layer (added layer-wise) with online supplementary learning. On a physical hexapod robot, GOLLUM successfully acquired multiple locomotion skills (e.g., walking, slope climbing, and bouncing) autonomously and continuously within an hour using a simple reward function. Furthermore, it demonstrated the capability of combining previously learned skills to facilitate the learning of new skills while preventing catastrophic forgetting. Compared to state-of-the-art locomotion learning approaches, GOLLUM is the only approach that addresses the four aforementioned challenges without human intervention. It also emphasizes the potential exploitation of interpretability to achieve autonomous lifelong learning machines.

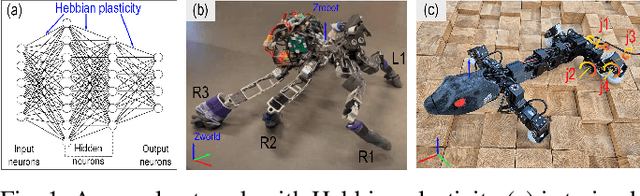

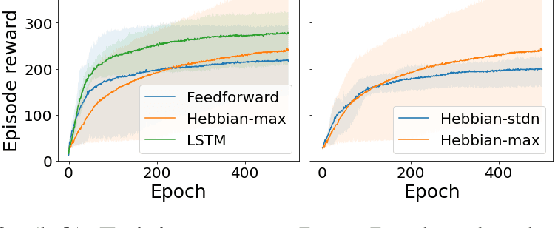

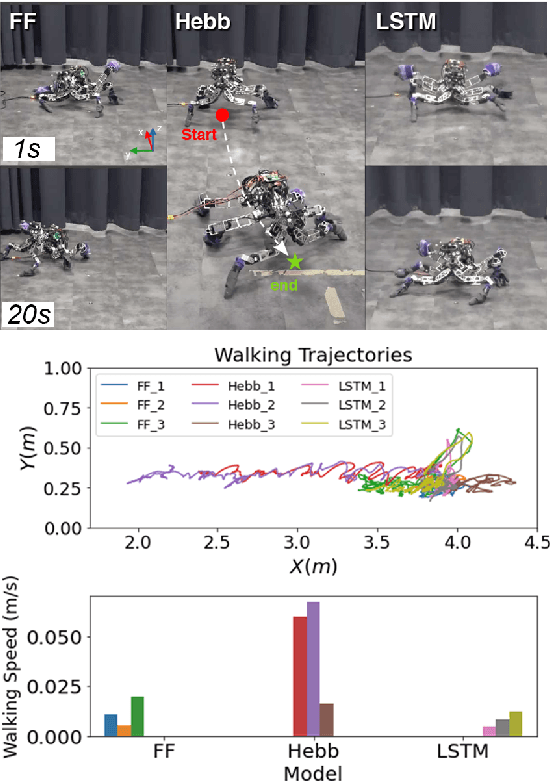

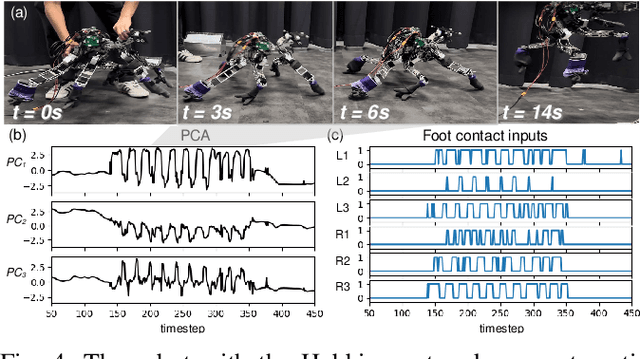

Bio-Inspired Plastic Neural Networks for Zero-Shot Out-of-Distribution Generalization in Complex Animal-Inspired Robots

Mar 16, 2025

Abstract:Artificial neural networks can be used to solve a variety of robotic tasks. However, they risk failing catastrophically when faced with out-of-distribution (OOD) situations. Several approaches have employed a type of synaptic plasticity known as Hebbian learning that can dynamically adjust weights based on local neural activities. Research has shown that synaptic plasticity can make policies more robust and help them adapt to unforeseen changes in the environment. However, augmenting networks with Hebbian learning can lead to weight divergence, resulting in network instability. Furthermore, such Hebbian networks have not yet been applied to solve legged locomotion in complex real robots with many degrees of freedom. In this work, we improve the Hebbian network with a weight normalization mechanism for preventing weight divergence, analyze the principal components of the Hebbian network's weights, and perform a thorough evaluation of network performance in locomotion control for real 18-DOF dung beetle-like and 16-DOF gecko-like robots. We find that the Hebbian-based plastic network can achieve zero-shot sim-to-real locomotion adaptation and generalize to unseen conditions, such as uneven terrain and morphological damage.
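To make the weight-divergence issue concrete, the following is a minimal sketch of a plain Hebbian update followed by a normalization step. The abstract does not state the exact plasticity rule or normalization used in the paper, so the rule here (a product of pre- and post-synaptic activity, then L2 normalization of each neuron's incoming weights) is an assumption chosen for illustration, and the names `hebbian_step`, `forward`, and `lr` are hypothetical.

```python
import math


def forward(weights, pre):
    """Compute post-synaptic activities with a tanh nonlinearity."""
    return [math.tanh(sum(w * x for w, x in zip(row, pre))) for row in weights]


def hebbian_step(weights, pre, post, lr=0.1):
    """Apply one Hebbian update, then rescale each row to unit L2 norm.

    Assumption: the normalization is a per-neuron L2 rescaling of incoming
    weights. The paper's actual mechanism may differ.
    """
    updated = []
    for j, row in enumerate(weights):
        # Plain Hebbian term: weight change proportional to pre * post activity.
        new_row = [w + lr * post[j] * pre[i] for i, w in enumerate(row)]
        norm = math.sqrt(sum(w * w for w in new_row))
        if norm > 0.0:
            # Without this rescaling, repeated updates can grow without bound.
            new_row = [w / norm for w in new_row]
        updated.append(new_row)
    return updated


# Toy usage: two neurons, two inputs, repeated presentation of one pattern.
weights = [[0.5, -0.2], [0.1, 0.3]]
inputs = [1.0, 0.5]
for _ in range(100):
    activities = forward(weights, inputs)
    weights = hebbian_step(weights, inputs, activities)
```

With the rescaling in place, each row keeps unit norm no matter how many updates are applied, which is the kind of bounded behavior a normalization mechanism is meant to provide.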

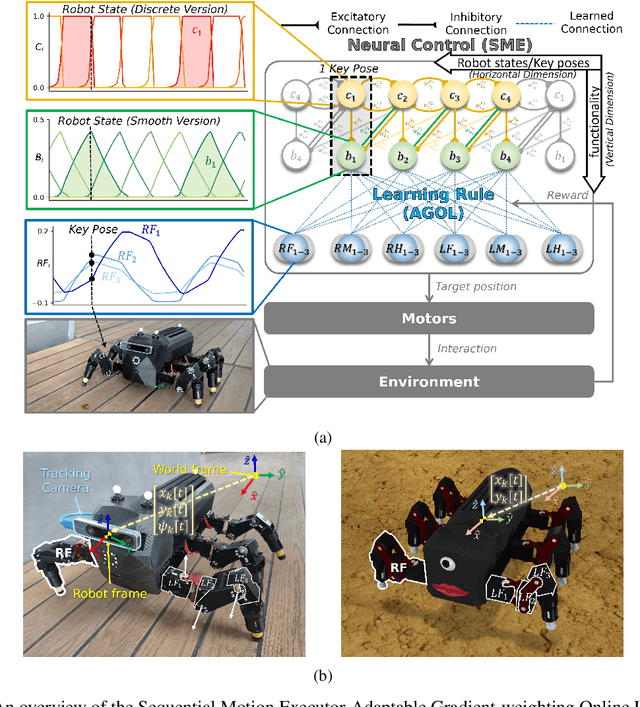

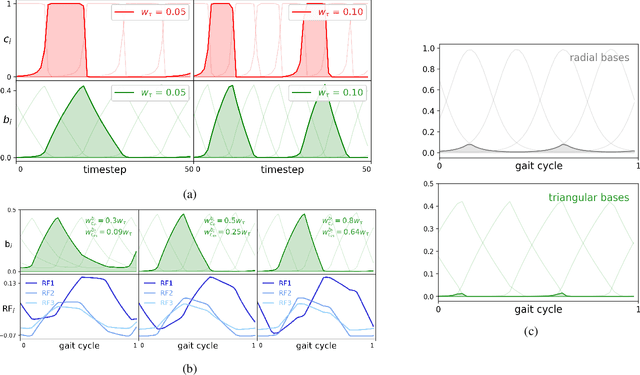

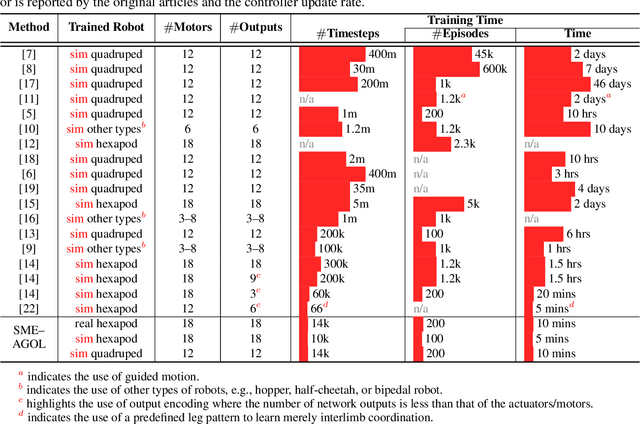

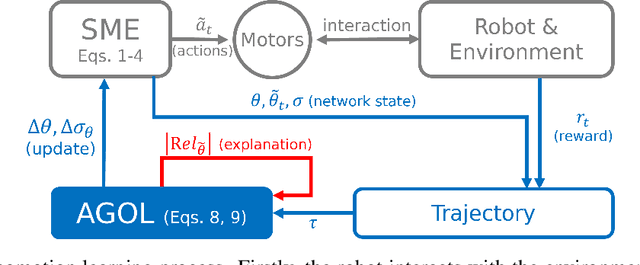

An Interpretable Neural Control Network with Adaptable Online Learning for Sample Efficient Robot Locomotion Learning

Jan 18, 2025

Abstract:Robot locomotion learning using reinforcement learning suffers from training sample inefficiency and exhibits a non-understandable, black-box nature. Thus, this work presents a novel SME-AGOL to address such problems. Firstly, the Sequential Motion Executor (SME) is a three-layer interpretable neural network, where the first layer produces the sequentially propagating hidden states, the second constructs the corresponding triangular bases with minor non-neighbor interference, and the third maps the bases to the motor commands. Secondly, the Adaptable Gradient-weighting Online Learning (AGOL) algorithm prioritizes the update of the parameters with high relevance scores, allowing the learning to focus more on the highly relevant ones. Thus, these two components lead to an analyzable framework, where each sequential hidden state/basis represents a learned key pose/robot configuration. Compared to state-of-the-art methods, the SME-AGOL requires 40% fewer samples and receives 150% higher final reward/locomotion performance on a simulated hexapod robot, while taking merely 10 minutes of learning time from scratch on a physical hexapod robot. Taken together, this work not only proposes the SME-AGOL for sample efficient and understandable locomotion learning but also emphasizes the potential exploitation of interpretability for improving sample efficiency and learning performance.

Nature's All-in-One: Multitasking Robots Inspired by Dung Beetles

Nov 05, 2024

Abstract:Dung beetles impressively coordinate their six legs simultaneously to effectively roll large dung balls. They are also capable of rolling dung balls of varying weight on different terrains. The mechanisms underlying how their motor commands are adapted to walk and simultaneously roll balls (multitasking behavior) under different conditions remain unknown. Therefore, this study unravels the mechanisms of how dung beetles roll dung balls and adapt their leg movements to stably roll balls over different terrains for multitasking robots. We synthesize a modular neural-based loco-manipulation control inspired by and based on ethological observations of the ball-rolling behavior of dung beetles. The proposed neural-based control contains various neural modules, including a central pattern generator (CPG) module, a pattern formation network (PFN) module, and a robot orientation control (ROC) module. The integrated neural control mechanisms can successfully control a dung beetle-like robot (ALPHA) with biomechanical feet to perform adaptive, robust (multitasking) loco-manipulation (walking and ball-rolling) on various terrains (flat and uneven). It can also deal with different ball weights (2.0 and 4.6 kg) and ball types (soft and rigid). The control mechanisms can serve as guiding principles for solving complex sensory-motor coordination for multitasking robots. Furthermore, this study contributes to biological research by enhancing our scientific understanding of sensory-motor coordination for complex adaptive (multitasking) loco-manipulation behavior in animals.

Learning to Cooperate with Unseen Agent via Meta-Reinforcement Learning

Nov 05, 2021

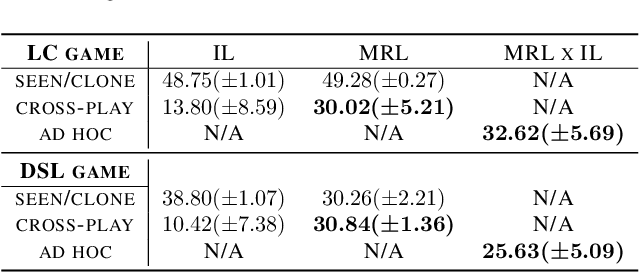

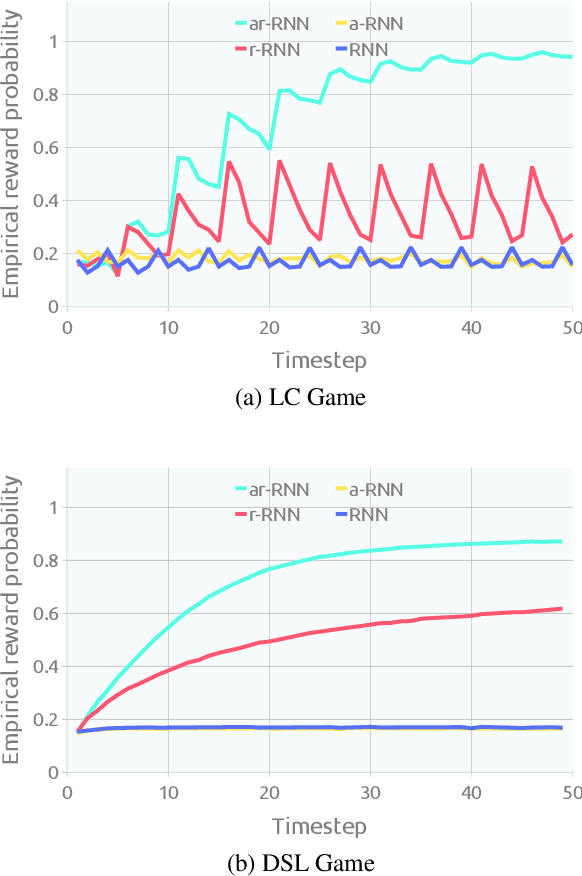

Abstract:The ad hoc teamwork problem describes situations where an agent has to cooperate with previously unseen agents to achieve a common goal. For an agent to be successful in these scenarios, it has to have a suitable cooperative skill. One could implement cooperative skills into an agent by using domain knowledge to design the agent's behavior. However, in complex domains, domain knowledge might not be available. Therefore, it is worthwhile to explore how to directly learn cooperative skills from data. In this work, we apply a meta-reinforcement learning (meta-RL) formulation in the context of the ad hoc teamwork problem. Our empirical results show that such a method could produce robust cooperative agents in two cooperative environments with different cooperative circumstances: social compliance and language interpretation. (This is a full paper of the extended abstract version.)

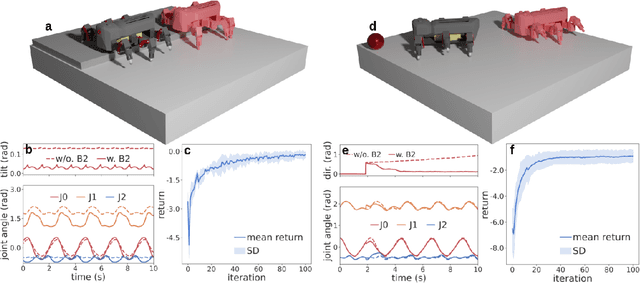

Versatile modular neural locomotion control with fast learning

Jul 16, 2021

Abstract:Legged robots have significant potential to operate in highly unstructured environments. The design of locomotion control is, however, still challenging. Currently, controllers must be either manually designed for specific robots and tasks, or automatically designed via machine learning methods that require long training times and yield large opaque controllers. Drawing inspiration from animal locomotion, we propose a simple yet versatile modular neural control structure with fast learning. The key advantages of our approach are that behavior-specific control modules can be added incrementally to obtain increasingly complex emergent locomotion behaviors, and that neural connections interfacing with existing modules can be quickly and automatically learned. In a series of experiments, we show how eight modules can be quickly learned and added to a base control module to obtain emergent adaptive behaviors allowing a hexapod robot to navigate in complex environments. We also show that modules can be added and removed during operation without affecting the functionality of the remaining controller. Finally, the control approach was successfully demonstrated on a physical hexapod robot. Taken together, our study reveals a significant step towards fast automatic design of versatile neural locomotion control for complex robotic systems.

An Explicit Local and Global Representation Disentanglement Framework with Applications in Deep Clustering and Unsupervised Object Detection

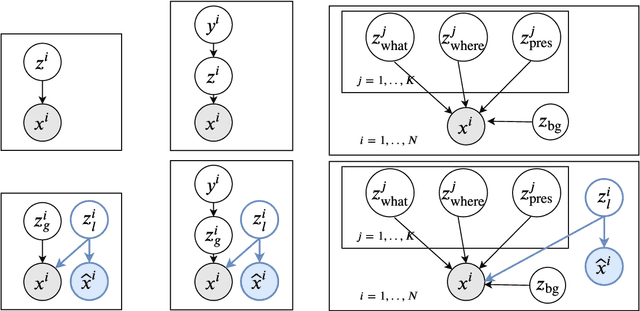

Feb 24, 2020

Abstract:Visual data can be understood at different levels of granularity, where global features correspond to semantic-level information and local features correspond to texture patterns. In this work, we propose a framework, called SPLIT, which allows us to disentangle local and global information into two separate sets of latent variables within the variational autoencoder (VAE) framework. Our framework adds a generative assumption to the VAE by requiring a subset of the latent variables to generate an auxiliary set of observable data. This additional generative assumption primes these latent variables to represent local information and encourages the other latent variables to represent global information. We examine three different flavours of VAEs with different generative assumptions. We show that the framework can effectively disentangle local and global information within these models, which leads to improved representations, with better results on clustering and unsupervised object detection benchmarks. Finally, we establish connections between SPLIT and recent research in cognitive neuroscience regarding the disentanglement in human visual perception. The code for our experiments is at https://github.com/51616/split-vae .

Distributed Recurrent Neural Forward Models with Synaptic Adaptation for Complex Behaviors of Walking Robots

Jun 11, 2015

Abstract:Walking animals, like stick insects, cockroaches or ants, demonstrate a fascinating range of locomotive abilities and complex behaviors. The locomotive behaviors can consist of a variety of walking patterns along with adaptation that allow the animals to deal with changes in environmental conditions, like uneven terrains, gaps, obstacles etc. Biological study has revealed that such complex behaviors are a result of a combination of biomechanics and neural mechanism thus representing the true nature of embodied interactions. While the biomechanics helps maintain flexibility and sustain a variety of movements, the neural mechanisms generate movements while making appropriate predictions crucial for achieving adaptation. Such predictions or planning ahead can be achieved by way of internal models that are grounded in the overall behavior of the animal. Inspired by these findings, we present here, an artificial bio-inspired walking system which effectively combines biomechanics (in terms of the body and leg structures) with the underlying neural mechanisms. The neural mechanisms consist of 1) central pattern generator based control for generating basic rhythmic patterns and coordinated movements, 2) distributed (at each leg) recurrent neural network based adaptive forward models with efference copies as internal models for sensory predictions and instantaneous state estimations, and 3) searching and elevation control for adapting the movement of an individual leg to deal with different environmental conditions. Using simulations we show that this bio-inspired approach with adaptive internal models allows the walking robot to perform complex locomotive behaviors as observed in insects, including walking on undulated terrains, crossing large gaps as well as climbing over high obstacles...

Multiple chaotic central pattern generators with learning for legged locomotion and malfunction compensation

Jul 11, 2014

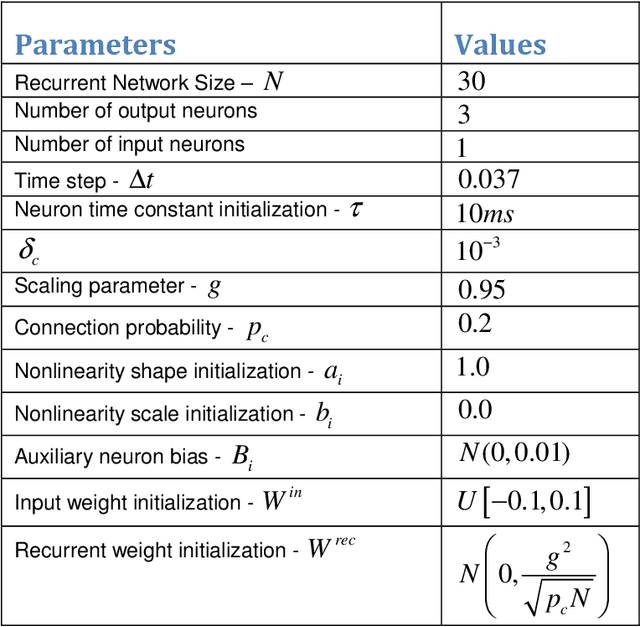

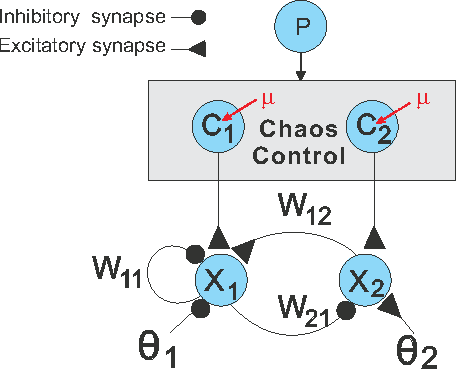

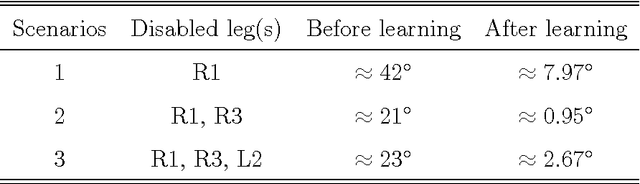

Abstract:An originally chaotic system can be controlled into various periodic dynamics. When it is implemented into a legged robot's locomotion control as a central pattern generator (CPG), sophisticated gait patterns arise so that the robot can perform various walking behaviors. However, such a single chaotic CPG controller has difficulties dealing with leg malfunction. Specifically, in the scenarios presented here, its movement permanently deviates from the desired trajectory. To address this problem, we extend the single chaotic CPG to multiple CPGs with learning. The learning mechanism is based on a simulated annealing algorithm. In a normal situation, the CPGs synchronize and their dynamics are identical. With leg malfunction or disability, the CPGs lose synchronization leading to independent dynamics. In this case, the learning mechanism is applied to automatically adjust the remaining legs' oscillation frequencies so that the robot adapts its locomotion to deal with the malfunction. As a consequence, the trajectory produced by the multiple chaotic CPGs resembles the original trajectory far better than the one produced by only a single CPG. The performance of the system is evaluated first in a physical simulation of a quadruped as well as a hexapod robot and finally in a real six-legged walking machine called AMOSII. The experimental results presented here reveal that using multiple CPGs with learning is an effective approach for adaptive locomotion generation where, for instance, different body parts have to perform independent movements for malfunction compensation.
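The frequency-adjustment idea can be illustrated with a generic simulated annealing loop. This is only a sketch under stated assumptions: the abstract does not give the paper's cost function, cooling schedule, or CPG dynamics, so `drift_cost` is a hypothetical toy objective (a hexapod with one disabled right-side leg, where trajectory drift is modelled as the imbalance between left and right leg frequencies), and all parameter values are illustrative.

```python
import math
import random


def drift_cost(freqs):
    """Toy objective (hypothetical): squared left/right frequency imbalance
    plus a small penalty for straying from a nominal frequency of 1.0.
    The first three entries are left legs, the last two are the working right legs.
    """
    left, right = freqs[:3], freqs[3:]
    drift = sum(left) - sum(right)
    deviation = sum((f - 1.0) ** 2 for f in freqs)
    return drift ** 2 + 0.1 * deviation


def simulated_annealing(cost, x0, steps=500, temp0=1.0, cooling=0.99,
                        step_size=0.1, seed=0):
    """Minimize `cost` from `x0` with Gaussian proposals and geometric cooling."""
    rng = random.Random(seed)
    x, fx = list(x0), cost(x0)
    best, fbest = list(x), fx
    temp = temp0
    for _ in range(steps):
        cand = [v + rng.gauss(0.0, step_size) for v in x]
        fc = cost(cand)
        # Always accept improvements; accept worse candidates with a
        # probability that shrinks as the temperature decreases.
        if fc < fx or rng.random() < math.exp(-(fc - fx) / temp):
            x, fx = cand, fc
            if fx < fbest:
                best, fbest = list(x), fx
        temp *= cooling
    return best, fbest


# Start from identical (synchronized) frequencies for the five working legs.
best_freqs, best_cost = simulated_annealing(drift_cost, [1.0] * 5)
print(best_freqs, best_cost)
```

Tracking the best candidate separately from the current one means the returned cost is never worse than that of the starting frequencies, even though the search itself occasionally accepts worse moves.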

Self-organized adaptation of a simple neural circuit enables complex robot behaviour

May 06, 2011

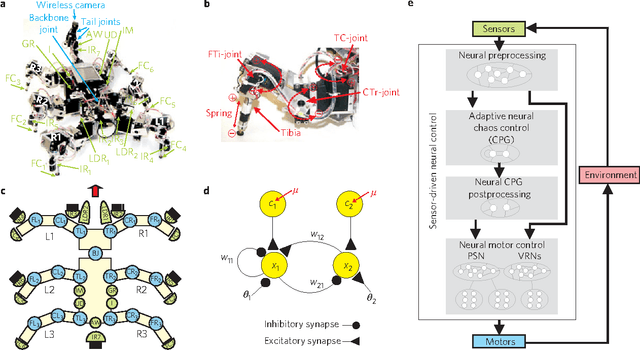

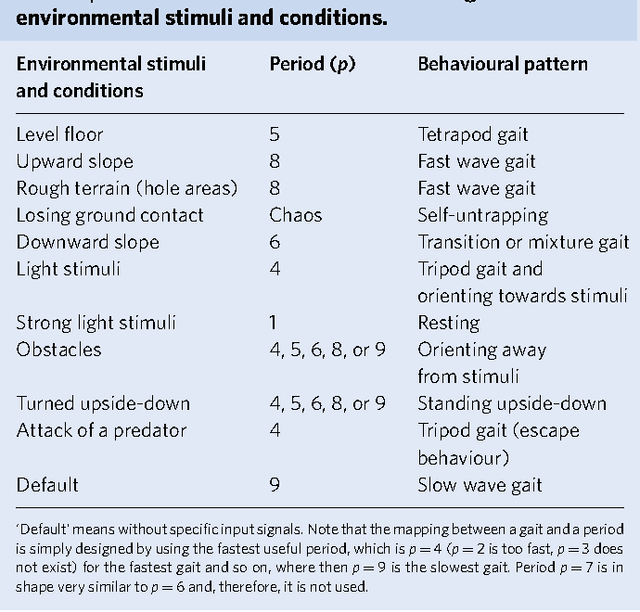

Abstract:Controlling sensori-motor systems in higher animals or complex robots is a challenging combinatorial problem, because many sensory signals need to be simultaneously coordinated into a broad behavioural spectrum. To rapidly interact with the environment, this control needs to be fast and adaptive. Current robotic solutions operate with limited autonomy and are mostly restricted to few behavioural patterns. Here we introduce chaos control as a new strategy to generate complex behaviour of an autonomous robot. In the presented system, 18 sensors drive 18 motors via a simple neural control circuit, thereby generating 11 basic behavioural patterns (e.g., orienting, taxis, self-protection, various gaits) and their combinations. The control signal quickly and reversibly adapts to new situations and additionally enables learning and synaptic long-term storage of behaviourally useful motor responses. Thus, such neural control provides a powerful yet simple way to self-organize versatile behaviours in autonomous agents with many degrees of freedom.

* 16 pages, non-final version, for final see Nature Physics homepage
