Po-Chen Wu

D-STAR: Dual Simultaneously Transmitting and Reflecting Reconfigurable Intelligent Surfaces for Joint Uplink/Downlink Transmission

Jul 30, 2023

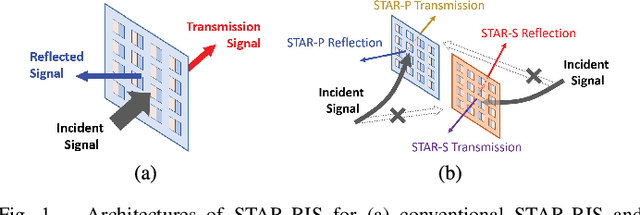

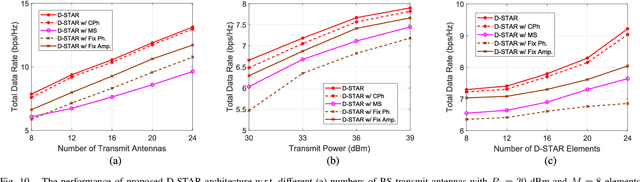

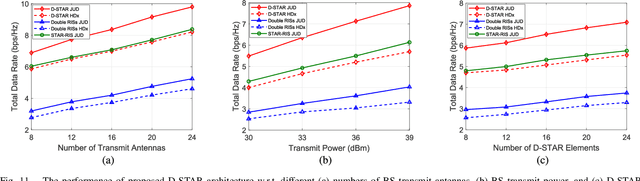

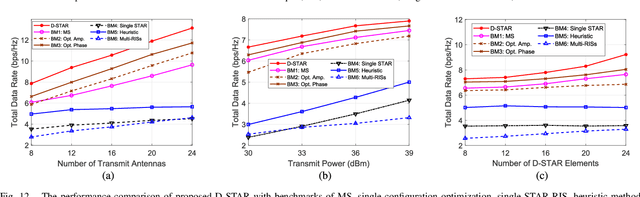

Abstract: The joint uplink/downlink (JUD) design of simultaneously transmitting and reflecting reconfigurable intelligent surfaces (STAR-RIS) is conceived in support of both uplink (UL) and downlink (DL) users. Furthermore, the dual STAR-RIS (D-STAR) concept is conceived as a promising architecture for 360-degree full-plane service coverage, including users located between the base station (BS) and the D-STAR as well as those beyond it. The corresponding regions are termed the primary (P) and secondary (S) regions. The primary STAR-RIS (STAR-P) plays an important role in tackling the P-region inter-user interference, as well as the self-interference (SI) imposed on the DL receiver by the BS and by the reflective and refractive UL users. By contrast, the secondary STAR-RIS (STAR-S) aims to mitigate the S-region interference. The non-linear and non-convex rate-maximization problem formulated is solved by alternating optimization among the decomposed convex sub-problems of the BS beamformer and of the D-STAR amplitude and phase-shift configurations. We also propose a D-STAR based active beamforming and passive STAR-RIS amplitude/phase (DBAP) optimization scheme to solve the respective sub-problems by the Lagrange dual with Dinkelbach transformation, the alternating direction method of multipliers (ADMM) with successive convex approximation (SCA), and the penalty convex-concave procedure (PCCP). Our simulation results reveal that the proposed D-STAR architecture outperforms the conventional single RIS, single STAR-RIS, and half-duplex networks. The proposed DBAP in D-STAR outperforms the state-of-the-art solutions in the open literature.

Neural Correspondence Field for Object Pose Estimation

Jul 30, 2022

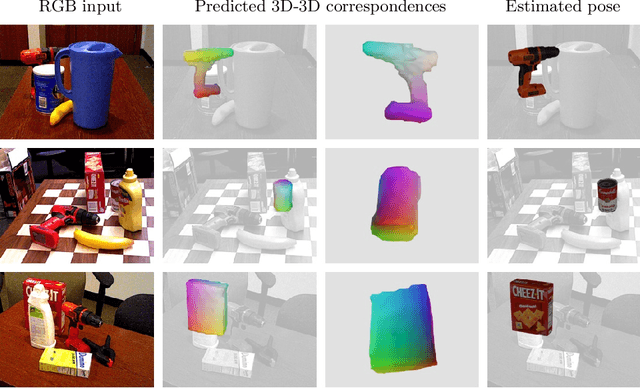

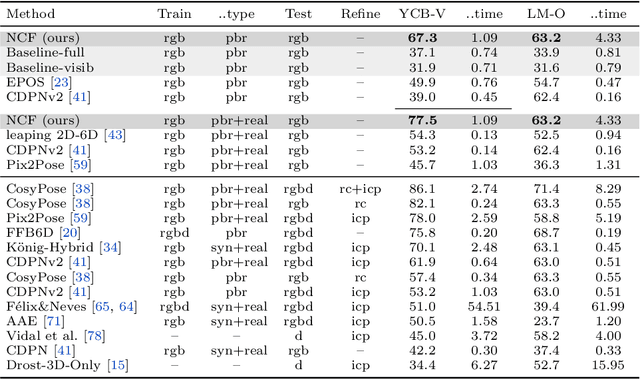

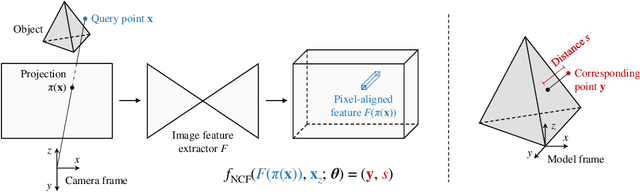

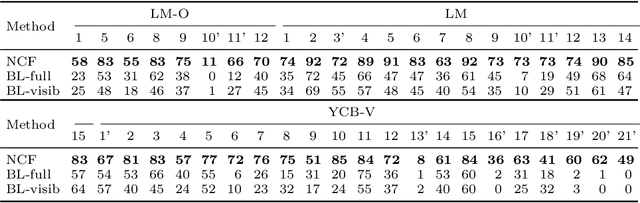

Abstract: We propose a method for estimating the 6DoF pose of a rigid object with an available 3D model from a single RGB image. Unlike classical correspondence-based methods, which predict 3D object coordinates at pixels of the input image, the proposed method predicts 3D object coordinates at 3D query points sampled in the camera frustum. The move from pixels to 3D points, which is inspired by recent PIFu-style methods for 3D reconstruction, enables reasoning about the whole object, including its (self-)occluded parts. For a 3D query point associated with a pixel-aligned image feature, we train a fully-connected neural network to predict: (i) the corresponding 3D object coordinates, and (ii) the signed distance to the object surface, with the first defined only for query points in the vicinity of the surface. We call the mapping realized by this network the Neural Correspondence Field. The object pose is then robustly estimated from the predicted 3D-3D correspondences by the Kabsch-RANSAC algorithm. The proposed method achieves state-of-the-art results on three BOP datasets and is shown to be superior especially in challenging cases with occlusion. The project website is at: linhuang17.github.io/NCF.
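The final pose-fitting step named in the abstract can be illustrated with a minimal sketch of a generic Kabsch-RANSAC loop in NumPy. This is not the authors' implementation: the function names `kabsch` and `kabsch_ransac`, the iteration count, the inlier threshold, and the convention that `src` holds predicted 3D object coordinates while `dst` holds the matching query points in the camera frame are all assumptions made for illustration.

```python
import numpy as np


def kabsch(src, dst):
    """Least-squares rigid transform (R, t) such that dst ~= src @ R.T + t."""
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def kabsch_ransac(src, dst, n_iters=200, inlier_thresh=0.01, seed=0):
    """Robust rigid fit: sample minimal sets, keep the largest consensus set.

    n_iters, inlier_thresh and seed are hypothetical defaults, not values
    taken from the paper.
    """
    rng = np.random.default_rng(seed)
    n = src.shape[0]
    best_inliers = np.zeros(n, dtype=bool)
    for _ in range(n_iters):
        # Three 3D-3D correspondences are the minimal set for a rigid pose.
        idx = rng.choice(n, size=3, replace=False)
        R, t = kabsch(src[idx], dst[idx])
        residuals = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = residuals < inlier_thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 3:
        raise ValueError("RANSAC did not find a consensus set")
    # Refit on all inliers of the best hypothesis.
    R, t = kabsch(src[best_inliers], dst[best_inliers])
    return R, t, best_inliers
```

Calling `kabsch_ransac(src, dst)` on two `(N, 3)` arrays returns a rotation matrix, a translation vector, and a boolean inlier mask. The inlier threshold is expressed in the units of the point coordinates, so it would need to be chosen to match the scale of the object model.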
