Philip J. B. Jackson

ToS: A Team of Specialists ensemble framework for Stereo Sound Event Localization and Detection with distance estimation in Video

Jan 24, 2026

Abstract:Sound event localization and detection with distance estimation (3D SELD) in video involves identifying active sound events at each time frame while estimating their spatial coordinates. This multimodal task requires joint reasoning across semantic, spatial, and temporal dimensions, a challenge that single models often struggle to address effectively. To tackle this, we introduce the Team of Specialists (ToS) ensemble framework, which integrates three complementary sub-networks: a spatio-linguistic model, a spatio-temporal model, and a tempo-linguistic model. Each sub-network specializes in a unique pair of dimensions, contributing distinct insights to the final prediction, akin to a collaborative team with diverse expertise. ToS has been benchmarked against state-of-the-art audio-visual models for 3D SELD on the DCASE2025 Task 3 Stereo SELD development set, consistently outperforming existing methods across key metrics. Future work will extend this proof of concept by strengthening the specialists with appropriate tasks, training, and pre-training curricula.

PAL: Probing Audio Encoders via LLMs -- A Study of Information Transfer from Audio Encoders to LLMs

Jun 12, 2025

Abstract:The integration of audio perception capabilities into Large Language Models (LLMs) has enabled significant advances in Audio-LLMs. Although application-focused developments, particularly in curating training data for specific capabilities (e.g., audio reasoning), have progressed rapidly, the underlying mechanisms that govern efficient transfer of rich semantic representations from audio encoders to LLMs remain under-explored. We conceptualize effective audio-LLM interaction as the LLM's ability to proficiently probe the audio encoder representations to satisfy textual queries. This paper presents a systematic investigation of how architectural design choices affect that ability. Beginning with a standard Pengi/LLaVA-style audio-LLM architecture, we propose and evaluate several modifications guided by hypotheses derived from mechanistic interpretability studies and LLM operational principles. Our experiments demonstrate that: (1) delaying audio integration until the LLM's initial layers have established textual context enhances its ability to probe the audio representations for relevant information; (2) the LLM can proficiently probe audio representations exclusively through the LLM layer's attention submodule, without requiring propagation to its Feed-Forward Network (FFN) submodule; (3) an efficiently integrated ensemble of diverse audio encoders provides richer, complementary representations, thereby broadening the LLM's capacity to probe a wider spectrum of audio information. All hypotheses are evaluated using an identical three-stage training curriculum on a dataset of 5.6 million audio-text pairs, ensuring controlled comparisons. Our final architecture, which incorporates all proposed modifications, achieves relative improvements from 10% to 60% over the baseline, validating our approach to optimizing cross-modal information transfer in audio-LLMs. Project page: https://ta012.github.io/PAL/

Reverberation-based Features for Sound Event Localization and Detection with Distance Estimation

Apr 11, 2025

Abstract:Sound event localization and detection (SELD) involves predicting active sound event classes over time while estimating their positions. The localization subtask in SELD is usually treated as a direction of arrival estimation problem, ignoring source distance. Only recently, SELD was extended to 3D by incorporating distance estimation, enabling the prediction of sound event positions in 3D space (3D SELD). However, existing methods lack input features designed for distance estimation. We argue that reverberation encodes valuable information for this task. This paper introduces two novel feature formats for 3D SELD based on reverberation: one using direct-to-reverberant ratio (DRR) and another leveraging signal autocorrelation to provide the model with insights into early reflections. Pre-training on synthetic data improves relative distance error (RDE) and overall SELD score, with autocorrelation-based features reducing RDE by over 3 percentage points on the STARSS23 dataset. The code to extract the features is available at github.com/dberghi/SELD-distance-features.
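
To illustrate why signal autocorrelation can carry information about early reflections, the following is a minimal sketch and not the feature extractor from the paper. It assumes a toy signal made of white noise plus a single attenuated, delayed copy (a hypothetical "reflection"); the function names, the delay of 40 samples, and the gain of 0.6 are all illustrative choices. The normalised autocorrelation of such a signal shows a secondary peak at the lag of the delayed copy.

```python
import random


def autocorrelation(x, max_lag):
    """Normalised autocorrelation r[k] for lags 0..max_lag, with r[0] == 1."""
    energy = sum(v * v for v in x)
    return [
        sum(x[n] * x[n + k] for n in range(len(x) - k)) / energy
        for k in range(max_lag + 1)
    ]


def strongest_reflection_lag(x, max_lag, min_lag=1):
    """Return the non-zero lag with the largest autocorrelation value."""
    r = autocorrelation(x, max_lag)
    return max(range(min_lag, max_lag + 1), key=lambda k: r[k])


# Toy data (assumption): white noise as the direct sound, plus one
# attenuated copy delayed by `delay` samples standing in for a reflection.
random.seed(0)
direct = [random.gauss(0.0, 1.0) for _ in range(2000)]
delay, gain = 40, 0.6
observed = [
    direct[n] + (gain * direct[n - delay] if n >= delay else 0.0)
    for n in range(len(direct))
]

lag = strongest_reflection_lag(observed, max_lag=100)
print("strongest non-zero lag:", lag)
```

In this toy setting the peak lag matches the delay of the added copy. Real recordings contain many overlapping reflections and non-white sources, so any practical feature would need more care than this sketch.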

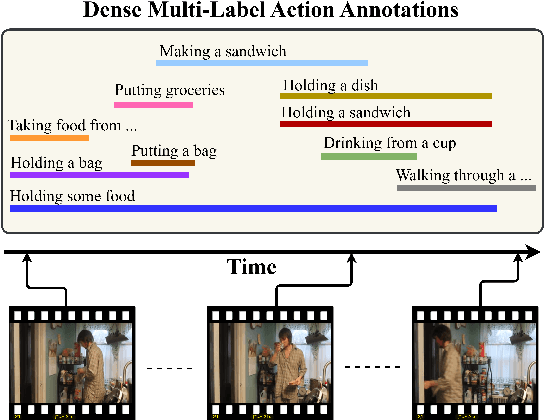

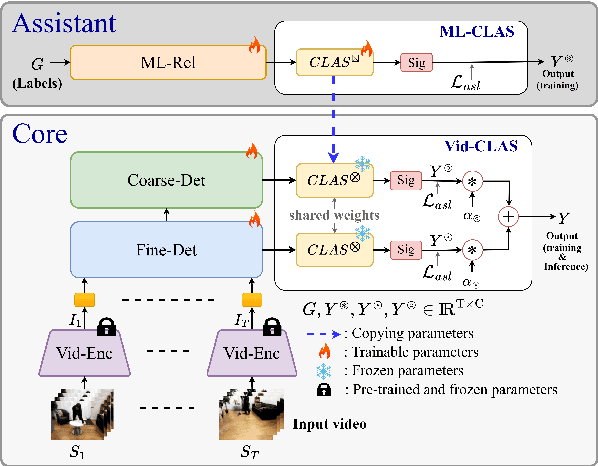

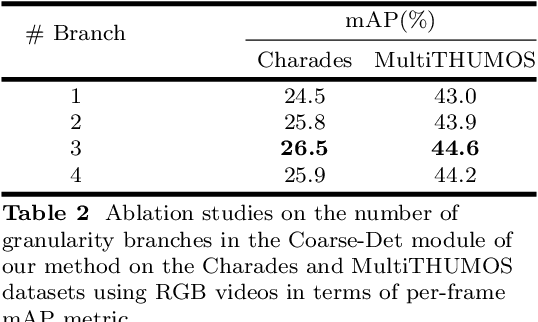

Deconstruct Complexity (DeComplex): A Novel Perspective on Tackling Dense Action Detection

Jan 30, 2025

Abstract:Dense action detection involves detecting multiple co-occurring actions in an untrimmed video while action classes are often ambiguous and represent overlapping concepts. To address this challenging task, we introduce a novel perspective inspired by how humans tackle complex tasks by breaking them into manageable sub-tasks. Instead of relying on a single network to address the entire problem, as in current approaches, we propose decomposing the problem into detecting key concepts present in action classes, specifically, detecting dense static concepts and detecting dense dynamic concepts, and assigning them to distinct, specialized networks. Furthermore, simultaneous actions in a video often exhibit interrelationships, and exploiting these relationships can improve performance. However, we argue that current networks fail to effectively learn these relationships due to their reliance on binary cross-entropy optimization, which treats each class independently. To address this limitation, we propose providing explicit supervision on co-occurring concepts during network optimization through a novel language-guided contrastive learning loss. Our extensive experiments demonstrate the superiority of our approach over state-of-the-art methods, achieving substantial relative improvements of 23.4% and 2.5% mAP on the challenging benchmark datasets, Charades and MultiTHUMOS.
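
The remark that binary cross-entropy treats each class independently can be made concrete with a small sketch. This shows the standard multi-label binary cross-entropy, not the language-guided contrastive loss proposed in the paper; the probabilities and labels are hypothetical. Each class contributes a term that depends only on that class's own prediction and label, so changing the label of one class leaves the loss terms of the other classes untouched.

```python
import math


def bce_per_class(probs, labels):
    """Per-class binary cross-entropy terms for one multi-label sample."""
    return [
        -(y * math.log(p) + (1 - y) * math.log(1 - p))
        for p, y in zip(probs, labels)
    ]


# Hypothetical predicted probabilities for three action classes.
probs = [0.9, 0.2, 0.7]

labels_a = [1, 0, 1]  # class 1 absent
labels_b = [1, 1, 1]  # class 1 now co-occurs with classes 0 and 2

terms_a = bce_per_class(probs, labels_a)
terms_b = bce_per_class(probs, labels_b)

# Only the term of the class whose label changed differs.
for c, (ta, tb) in enumerate(zip(terms_a, terms_b)):
    print(f"class {c}: {ta:.4f} -> {tb:.4f}")
```

Because the terms for classes 0 and 2 are identical in both cases, this loss on its own gives no direct signal about which classes tend to occur together.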

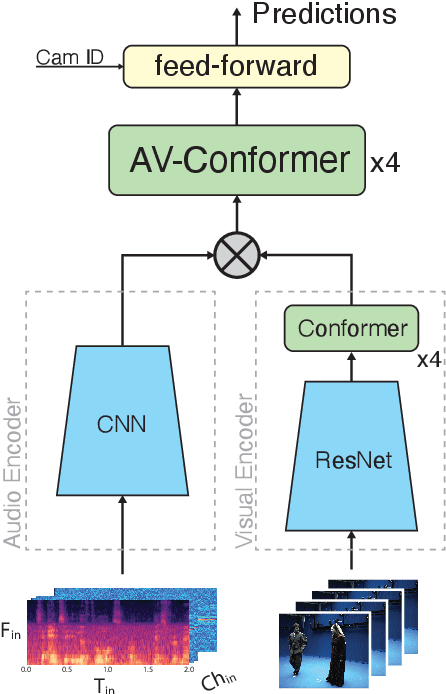

Leveraging Reverberation and Visual Depth Cues for Sound Event Localization and Detection with Distance Estimation

Oct 29, 2024

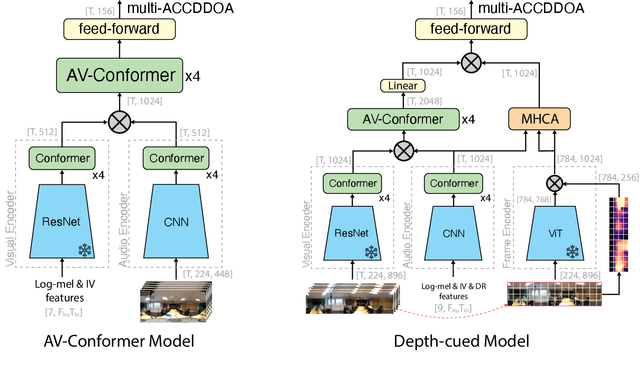

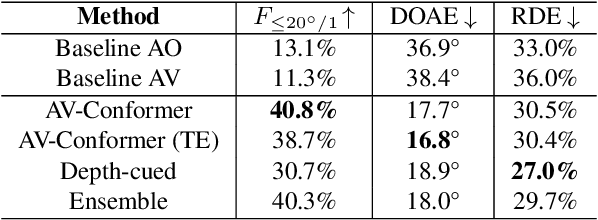

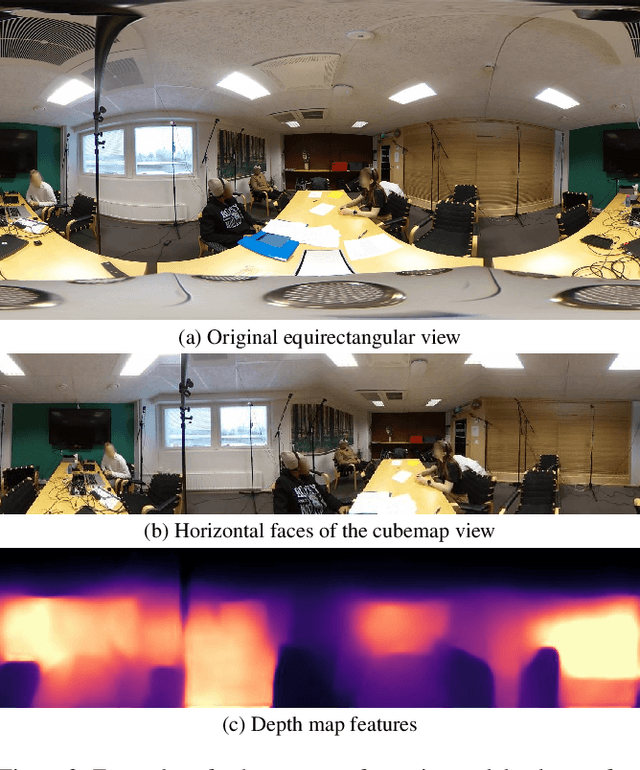

Abstract:This report describes our systems submitted for the DCASE2024 Task 3 challenge: Audio and Audiovisual Sound Event Localization and Detection with Source Distance Estimation (Track B). Our main model is based on the audio-visual (AV) Conformer, which processes video and audio embeddings extracted with ResNet50 and with an audio encoder pre-trained on SELD, respectively. This model outperformed the audio-visual baseline on the development set of the STARSS23 dataset by a wide margin, halving its DOAE and improving the F1 score by more than 3x. Our second system performs a temporal ensemble from the outputs of the AV-Conformer. We then extended the model with features for distance estimation, such as direct and reverberant signal components extracted from the omnidirectional audio channel, and depth maps extracted from the video frames. While the new system improved the RDE of our previous model by about 3 percentage points, it achieved a lower F1 score. This may be caused by sound classes that rarely appear in the training set and that the more complex system does not detect, a hypothesis that further analysis could test. To overcome this problem, our fourth and final system consists of an ensemble strategy combining the predictions of the other three. Many opportunities to refine the system and training strategy can be tested in future ablation experiments, and are likely to yield incremental performance gains for this audio-visual task.
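
As a reading aid for the RDE figures quoted above, the sketch below assumes that relative distance error is the absolute difference between estimated and reference distance divided by the reference distance, averaged over matched events. That definition is an assumption here, not something stated in the abstract, and the distances are made-up values.

```python
def relative_distance_error(estimated_m, reference_m):
    """Absolute distance error divided by the reference distance (assumed definition)."""
    if reference_m <= 0:
        raise ValueError("reference distance must be positive")
    return abs(estimated_m - reference_m) / reference_m


# Hypothetical (estimated, reference) source distances in metres.
pairs = [(2.5, 2.0), (0.9, 1.0), (4.0, 5.0)]

rde_values = [relative_distance_error(est, ref) for est, ref in pairs]
mean_rde_percent = 100.0 * sum(rde_values) / len(rde_values)
print(f"mean RDE: {mean_rde_percent:.2f}%")
```

Under this assumed definition, an improvement of about 3 percentage points means the mean value drops by roughly 3 on this percentage scale, for example from 30% to 27%.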

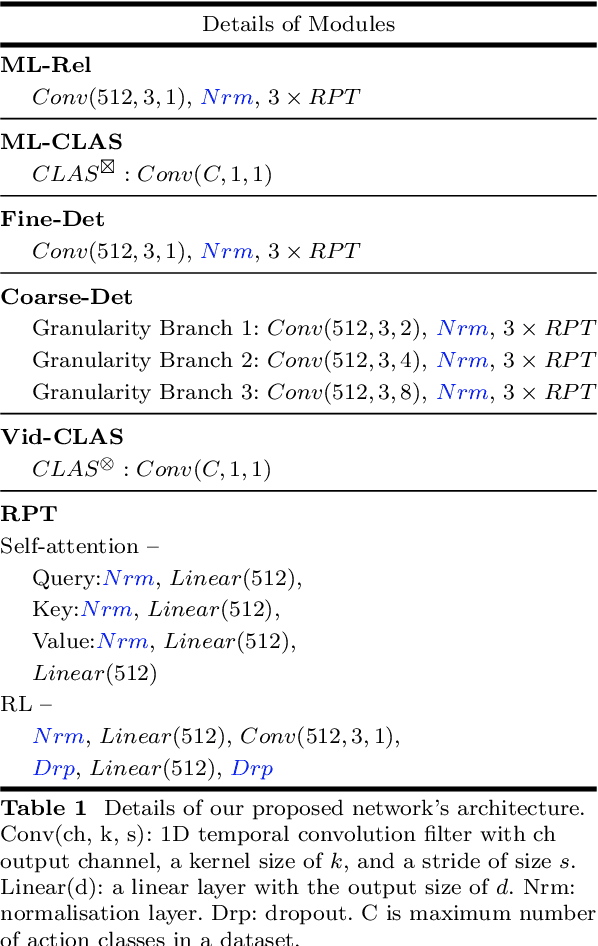

An Effective-Efficient Approach for Dense Multi-Label Action Detection

Jun 10, 2024

Abstract:Unlike the sparse label action detection task, where a single action occurs at each timestamp of a video, in a dense multi-label scenario, actions can overlap. To address this challenging task, it is necessary to simultaneously learn (i) temporal dependencies and (ii) co-occurrence action relationships. Recent approaches model temporal information by extracting multi-scale features through hierarchical transformer-based networks. However, the self-attention mechanism in transformers inherently loses temporal positional information. We argue that combining this with multiple sub-sampling processes in hierarchical designs can lead to further loss of positional information. Preserving this information is essential for accurate action detection. In this paper, we address this issue by proposing a novel transformer-based network that (a) employs a non-hierarchical structure when modelling different ranges of temporal dependencies and (b) embeds relative positional encoding in its transformer layers. Furthermore, to model co-occurrence action relationships, current methods explicitly embed class relations into the transformer network. However, these approaches are not computationally efficient, as the network needs to compute all possible pairwise action class relations. We also overcome this challenge by introducing a novel learning paradigm that allows the network to benefit from explicitly modelling temporal co-occurrence action dependencies without imposing their additional computational costs during inference. We evaluate the performance of our proposed approach on two challenging dense multi-label benchmark datasets and show that our method improves the current state-of-the-art results.

Audio-Visual Talker Localization in Video for Spatial Sound Reproduction

Jun 01, 2024

Abstract:Object-based audio production requires the positional metadata to be defined for each point-source object, including the key elements in the foreground of the sound scene. In many media production use cases, both cameras and microphones are employed to make recordings, and the human voice is often a key element. In this research, we detect and locate the active speaker in the video, facilitating the automatic extraction of the positional metadata of the talker relative to the camera's reference frame. With the integration of the visual modality, this study expands upon our previous investigation focused solely on audio-based active speaker detection and localization. Our experiments compare conventional audio-visual approaches for active speaker detection that leverage monaural audio, our previous audio-only method that leverages multichannel recordings from a microphone array, and a novel audio-visual approach integrating vision and multichannel audio. We found that the two modalities play complementary roles. Multichannel audio, overcoming the problem of visual occlusions, provides a double-digit reduction in detection error compared to audio-visual methods with single-channel audio. The combination of multichannel audio and vision further enhances spatial accuracy, leading to a four-percentage-point increase in F1 score on the Tragic Talkers dataset. Future investigations will assess the robustness of the model in noisy and highly reverberant environments, as well as tackle the problem of off-screen speakers.

CoLeaF: A Contrastive-Collaborative Learning Framework for Weakly Supervised Audio-Visual Video Parsing

May 17, 2024

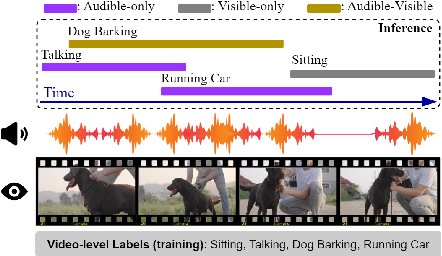

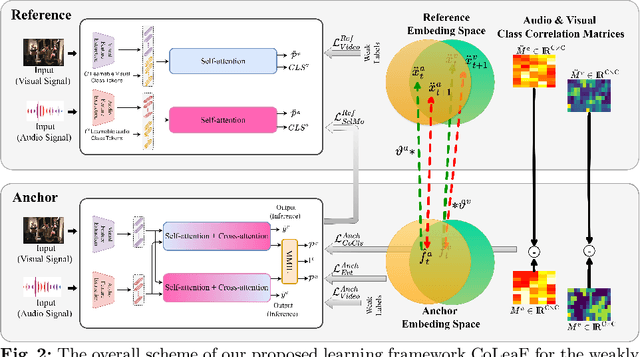

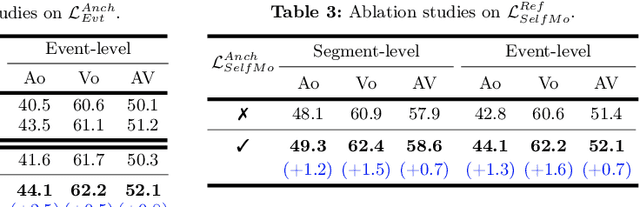

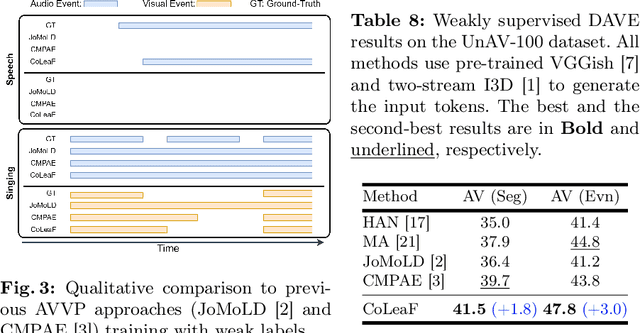

Abstract:Weakly supervised audio-visual video parsing (AVVP) methods aim to detect audible-only, visible-only, and audible-visible events using only video-level labels. Existing approaches tackle this by leveraging unimodal and cross-modal contexts. However, we argue that while cross-modal learning is beneficial for detecting audible-visible events, in the weakly supervised scenario, it negatively impacts unaligned audible or visible events by introducing irrelevant modality information. In this paper, we propose CoLeaF, a novel learning framework that optimizes the integration of cross-modal context in the embedding space such that the network explicitly learns to combine cross-modal information for audible-visible events while filtering it out for unaligned events. Additionally, as videos often involve complex class relationships, modelling them improves performance. However, this introduces extra computational costs into the network. Our framework is designed to leverage cross-class relationships during training without incurring additional computations at inference. Furthermore, we propose new metrics to better evaluate a method's capabilities in performing AVVP. Our extensive experiments demonstrate that CoLeaF significantly improves the state-of-the-art results by an average of 1.9% and 2.4% F-score on the LLP and UnAV-100 datasets, respectively.

Leveraging Visual Supervision for Array-based Active Speaker Detection and Localization

Dec 21, 2023

Abstract:Conventional audio-visual approaches for active speaker detection (ASD) typically rely on visually pre-extracted face tracks and the corresponding single-channel audio to find the speaker in a video. Therefore, they tend to fail whenever the face of the speaker is not visible. We demonstrate that a simple audio convolutional recurrent neural network (CRNN) trained with spatial input features extracted from multichannel audio can perform simultaneous horizontal active speaker detection and localization (ASDL), independently of the visual modality. To address the time and cost of generating ground truth labels to train such a system, we propose a new self-supervised training pipeline that embraces a "student-teacher" learning approach. A conventional pre-trained active speaker detector is adopted as a "teacher" network to provide the position of the speakers as pseudo-labels. The multichannel audio "student" network is trained to generate the same results. At inference, the student network can generalize and also locate the occluded speakers that the teacher network is not able to detect visually, yielding considerable improvements in recall rate. Experiments on the TragicTalkers dataset show that an audio network trained with the proposed self-supervised learning approach can exceed the performance of the typical audio-visual methods and produce results competitive with the costly conventional supervised training. We demonstrate that improvements can be achieved when minimal manual supervision is introduced in the learning pipeline. Further gains may be sought with larger training sets and integrating vision with the multichannel audio system.

Fusion of Audio and Visual Embeddings for Sound Event Localization and Detection

Dec 14, 2023

Abstract:Sound event localization and detection (SELD) combines two subtasks: sound event detection (SED) and direction of arrival (DOA) estimation. SELD is usually tackled as an audio-only problem, but visual information has been recently included. Few audio-visual (AV)-SELD works have been published and most employ vision via face/object bounding boxes, or human pose keypoints. In contrast, we explore the integration of audio and visual feature embeddings extracted with pre-trained deep networks. For the visual modality, we tested ResNet50 and Inflated 3D ConvNet (I3D). Our comparison of AV fusion methods includes the AV-Conformer and Cross-Modal Attentive Fusion (CMAF) model. Our best models outperform the DCASE 2023 Task 3 audio-only and AV baselines by a wide margin on the development set of the STARSS23 dataset, making them competitive amongst state-of-the-art results of the AV challenge, without model ensembling, heavy data augmentation, or prediction post-processing. Such techniques and further pre-training could be applied as next steps to improve performance.
