Petr Mitrichev

Modeling Text with Decision Forests using Categorical-Set Splits

Sep 28, 2020

Abstract: Decision forest algorithms model data by learning a binary tree structure recursively where every node splits the feature space into two regions, sending examples into the left or right branches. This "decision" is the result of the evaluation of a condition. For example, a node may split input data by applying a threshold to a numerical feature value. Such decisions are learned using (often greedy) algorithms that attempt to optimize a local loss function. Crucially, whether an algorithm exists to find and evaluate splits for a feature type (e.g., text) determines whether a decision forest algorithm can model that feature type at all. In this work, we set out to devise such an algorithm for textual features, thereby equipping decision forests with the ability to directly model text without the need for feature transformation. Our algorithm is efficient during training and the resulting splits are fast to evaluate with our extension of the QuickScorer inference algorithm. Experiments on benchmark text classification datasets demonstrate the utility and effectiveness of our proposal.
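
To make the idea of a node "condition" concrete, here is a minimal Python sketch, not the paper's implementation. It contrasts a numerical threshold split with one plausible form of a split on a set of tokens, which sends an example right when its token set intersects a mask of tokens stored at the node. The class names, the `goes_right` method, the whitespace tokenizer, and the intersection rule itself are assumptions made for illustration; how such a mask would be learned is not shown.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set


@dataclass(frozen=True)
class NumericalSplit:
    """Hypothetical node condition: compare one numerical feature to a threshold."""
    feature: str
    threshold: float

    def goes_right(self, example: dict) -> bool:
        return example[self.feature] >= self.threshold


@dataclass(frozen=True)
class CategoricalSetSplit:
    """Hypothetical node condition on a set-valued feature (e.g. the tokens of a text).

    Assumption: the example goes right when its token set shares at least
    one token with the mask stored at the node.
    """
    feature: str
    mask: FrozenSet[str]

    def goes_right(self, example: dict) -> bool:
        return not self.mask.isdisjoint(example[self.feature])


def tokenize(text: str) -> Set[str]:
    """Deliberately naive tokenizer: lowercase and split on whitespace."""
    return set(text.lower().split())


numerical = NumericalSplit(feature="length", threshold=5.0)
textual = CategoricalSetSplit(feature="tokens", mask=frozenset({"refund", "broken"}))

complaint = {"length": 7.0, "tokens": tokenize("The screen arrived broken")}
praise = {"length": 3.0, "tokens": tokenize("Works great")}

print(numerical.goes_right(complaint), textual.goes_right(complaint))
print(numerical.goes_right(praise), textual.goes_right(praise))
```

Both conditions expose the same boolean interface, so under this assumption a tree could mix numerical and text-based nodes without transforming the text into numerical features first.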

Interpretable Learning-to-Rank with Generalized Additive Models

May 14, 2020

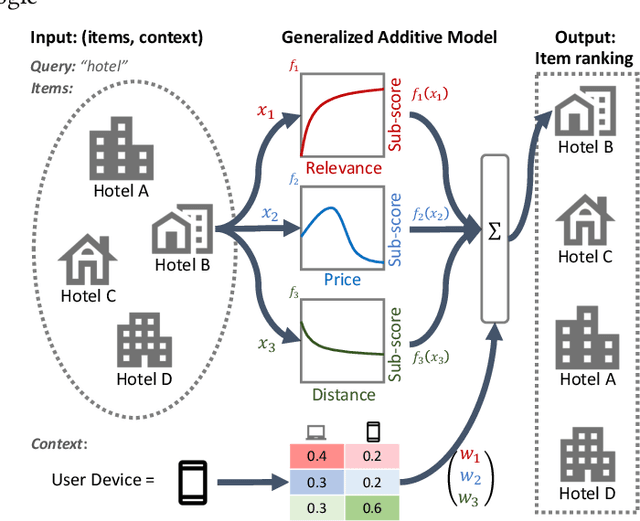

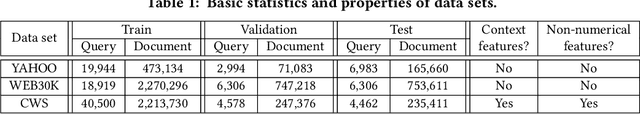

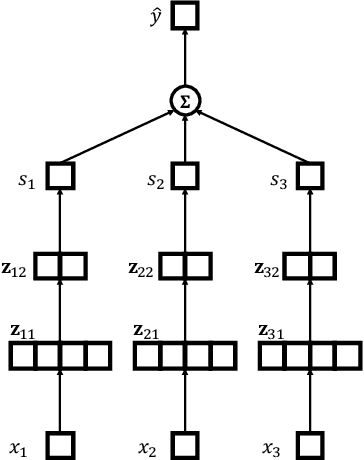

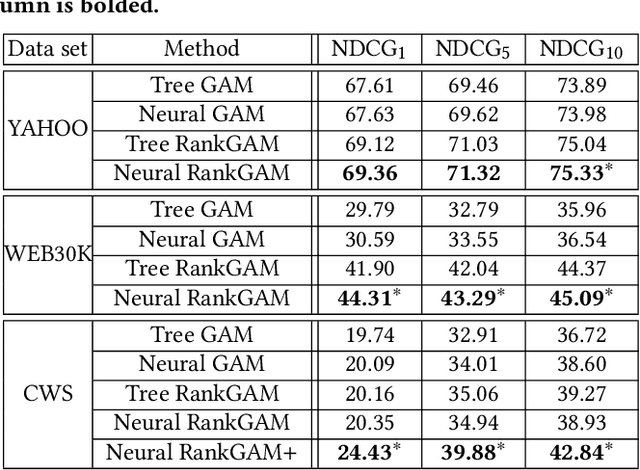

Abstract: Interpretability of learning-to-rank models is a crucial yet relatively under-examined research area. Recent progress on interpretable ranking models largely focuses on generating post-hoc explanations for existing black-box ranking models, whereas the alternative option of building an intrinsically interpretable ranking model with transparent and self-explainable structure remains unexplored. Developing fully understandable ranking models is necessary in some scenarios (e.g., due to legal or policy constraints) where post-hoc methods cannot provide sufficiently accurate explanations. In this paper, we lay the groundwork for intrinsically interpretable learning-to-rank by introducing generalized additive models (GAMs) into ranking tasks. GAMs are intrinsically interpretable machine learning models and have been extensively studied on regression and classification tasks. We study how to extend GAMs into ranking models which can handle both item-level and list-level features and propose a novel formulation of ranking GAMs. To instantiate ranking GAMs, we employ neural networks instead of traditional splines or regression trees. We also show that our neural ranking GAMs can be distilled into a set of simple and compact piecewise linear functions that are much more efficient to evaluate with little accuracy loss. We conduct experiments on three data sets and show that our proposed neural ranking GAMs can achieve significantly better performance than other traditional GAM baselines while maintaining similar interpretability.
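
The additive structure can be illustrated with a minimal Python sketch, under stated assumptions and not the paper's formulation. Each item-level feature gets its own one-dimensional piecewise linear function, an item's score is the sum of those per-feature contributions, and items are ranked by score. The feature names, knot values, and helper names are hypothetical, and list-level features are left out entirely.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple

# A piecewise linear function given as (x, y) knots sorted by x.
Knots = Sequence[Tuple[float, float]]


def piecewise_linear(knots: Knots, x: float) -> float:
    """Interpolate linearly between knots; clamp outside the knot range."""
    xs = [k[0] for k in knots]
    if x <= xs[0]:
        return knots[0][1]
    if x >= xs[-1]:
        return knots[-1][1]
    i = bisect_right(xs, x)  # index of the first knot strictly to the right of x
    (x0, y0), (x1, y1) = knots[i - 1], knots[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def score_item(sub_models: Dict[str, Knots], item: Dict[str, float]) -> float:
    """Additive score: one independent contribution per feature, then summed."""
    return sum(piecewise_linear(knots, item[name]) for name, knots in sub_models.items())


def rank(sub_models: Dict[str, Knots], items: Dict[str, Dict[str, float]]) -> List[str]:
    """Return item ids ordered from highest to lowest additive score."""
    return sorted(items, key=lambda item_id: score_item(sub_models, items[item_id]), reverse=True)


# Hypothetical sub-models: higher relevance helps, higher price hurts.
sub_models = {
    "relevance": [(0.0, 0.0), (1.0, 2.0)],
    "price": [(0.0, 0.0), (100.0, -1.0)],
}
items = {
    "a": {"relevance": 0.5, "price": 50.0},
    "b": {"relevance": 0.9, "price": 100.0},
    "c": {"relevance": 0.2, "price": 0.0},
}

ranking = rank(sub_models, items)
print(ranking)
```

Because each feature contributes through its own one-dimensional function, the effect of a single feature on the score can be read off, or plotted, in isolation, which is the sense in which such a model is interpretable.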

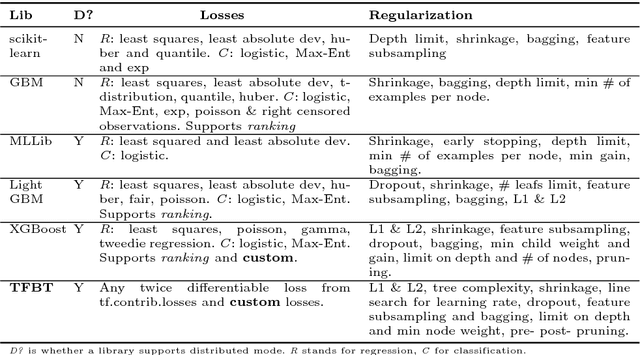

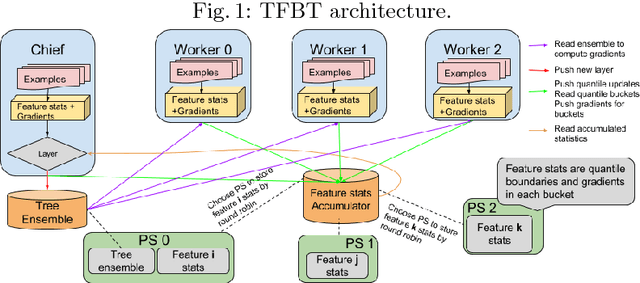

TF Boosted Trees: A scalable TensorFlow based framework for gradient boosting

Oct 31, 2017

Abstract: TF Boosted Trees (TFBT) is a new open-sourced framework for the distributed training of gradient boosted trees. It is based on TensorFlow, and its distinguishing features include a novel architecture, automatic loss differentiation, layer-by-layer boosting that results in smaller ensembles and faster prediction, principled multi-class handling, and a number of regularization techniques to prevent overfitting.
