Pengcheng Zou

Community Trend Prediction on Heterogeneous Graph in E-commerce

Feb 24, 2022

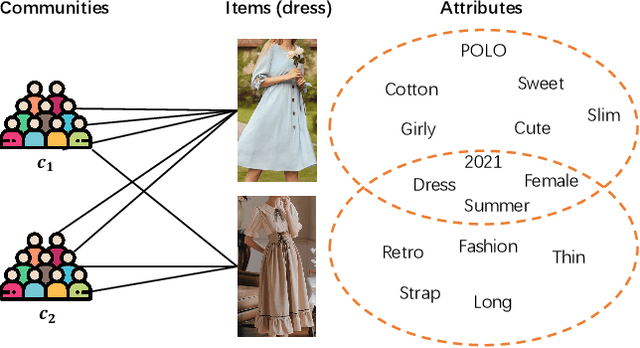

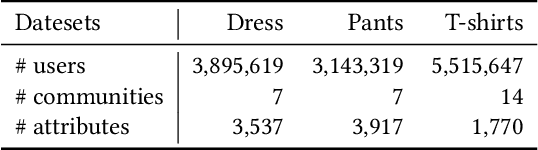

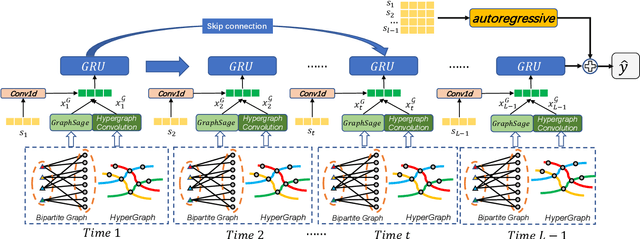

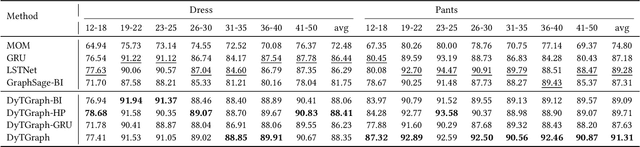

Abstract: In online shopping, ever-changing fashion trends require merchants to prepare more differentiated products to meet diversified demands, and e-commerce platforms need to anticipate market trends. For trend prediction, attribute tags, as the essential description of items, genuinely reflect the basis of consumers' decisions. However, few existing works explore attribute trends within specific communities in e-commerce. In this paper, we focus on community trend prediction on item attributes and propose a unified framework that combines the dynamic evolution of two graph patterns to predict the attribute trend in a specific community. Specifically, we first design a community-attribute bipartite graph at each time step to learn the collaboration of different communities. Next, we transform the bipartite graph into a hypergraph to exploit the associations of different attribute tags in one community. Lastly, we introduce a dynamic evolution component based on recurrent neural networks to capture the fashion trend of attribute tags. Extensive experiments on three real-world datasets from a large e-commerce platform show the superiority of the proposed approach over several strong alternatives and demonstrate its ability to discover community trends in advance.
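The bipartite-to-hypergraph step mentioned in the abstract can be pictured with a small sketch. The abstract does not spell out the construction, so this assumes the simplest reading: each community becomes one hyperedge that joins all attribute tags linked to it at a given time step. The function names, the example communities, and the tags are all hypothetical.

```python
from collections import defaultdict


def bipartite_to_hypergraph(edges):
    """Group attribute tags by community (assumed: one hyperedge per community)."""
    hyperedges = defaultdict(set)
    for community, attribute in edges:
        hyperedges[community].add(attribute)
    return dict(hyperedges)


def incidence_matrix(hyperedges):
    """Build a tag-by-community 0/1 incidence matrix with sorted row/column labels."""
    attributes = sorted({a for tags in hyperedges.values() for a in tags})
    communities = sorted(hyperedges)
    matrix = [
        [1 if a in hyperedges[c] else 0 for c in communities]
        for a in attributes
    ]
    return attributes, communities, matrix


# Hypothetical community-attribute edges observed at one time step.
edges_t = [
    ("young_women", "floral"),
    ("young_women", "v-neck"),
    ("students", "floral"),
    ("students", "hooded"),
]

hyperedges_t = bipartite_to_hypergraph(edges_t)
attributes, communities, H = incidence_matrix(hyperedges_t)
```

Under this reading, tags that share a column in `H` co-occur in one community, which is the kind of within-community association the abstract refers to.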

Distant Supervision for E-commerce Query Segmentation via Attention Network

Nov 09, 2020

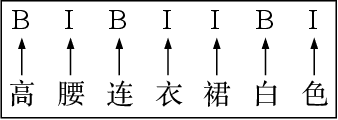

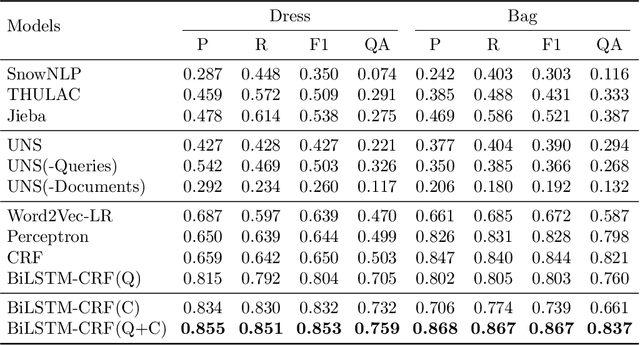

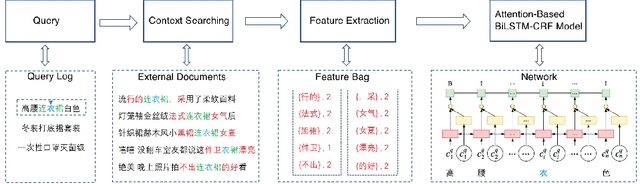

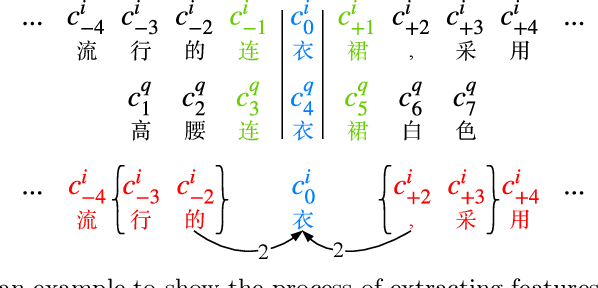

Abstract: Booming online e-commerce platforms demand highly accurate approaches to segmenting queries that carry the product requirements of consumers. Recent works have shown that supervised methods, especially those based on deep learning, are attractive for achieving better performance on query segmentation. However, the lack of labeled data is still a big challenge for training a deep segmentation network, and the Out-of-Vocabulary (OOV) problem also adversely impacts segmentation performance. Unlike query segmentation in an open domain, the e-commerce scenario can provide external documents that are closely related to these queries. Thus, to deal with the two challenges, we employ the idea of distant supervision and design a novel method to find contexts in external documents and extract features from these contexts. In this work, we propose a BiLSTM-CRF based model with an attention module to encode external features, so that external context information can be utilized naturally and effectively to help query segmentation. Experiments on two datasets show the effectiveness of our approach compared with several kinds of baselines.
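As a rough illustration of how an attention module can fold external context features into a token representation, the sketch below uses plain dot-product attention. The abstract does not state which attention form the model uses, so the scoring function, the `attend` helper, and the toy vectors are assumptions, not the paper's design.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, contexts):
    """Weight context vectors by dot-product similarity to the query vector."""
    scores = [sum(q * c for q, c in zip(query, ctx)) for ctx in contexts]
    weights = softmax(scores)
    summary = [
        sum(w * ctx[i] for w, ctx in zip(weights, contexts))
        for i in range(len(query))
    ]
    return weights, summary


# Hypothetical token state and two context feature vectors from external documents.
token_state = [1.0, 0.0]
context_features = [[1.0, 0.0], [0.0, 1.0]]

weights, summary = attend(token_state, context_features)
```

In a full model the `summary` vector would typically be combined with the BiLSTM output before the CRF layer; that wiring is not shown here.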

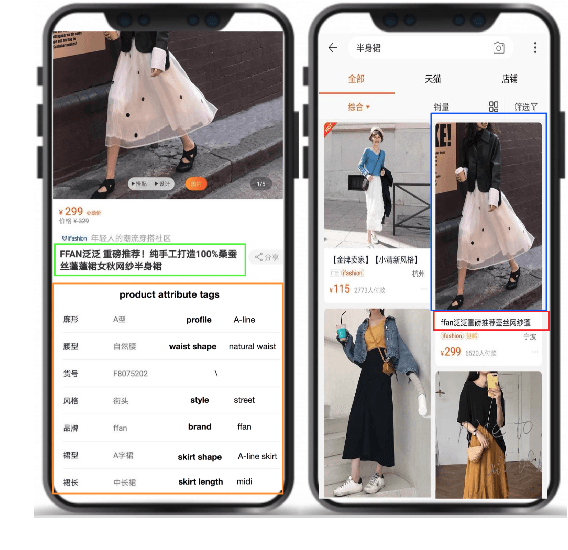

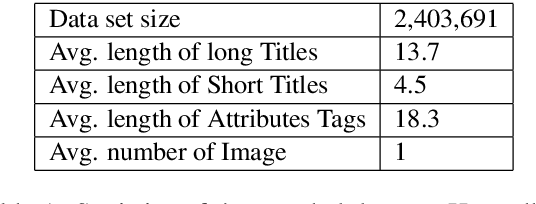

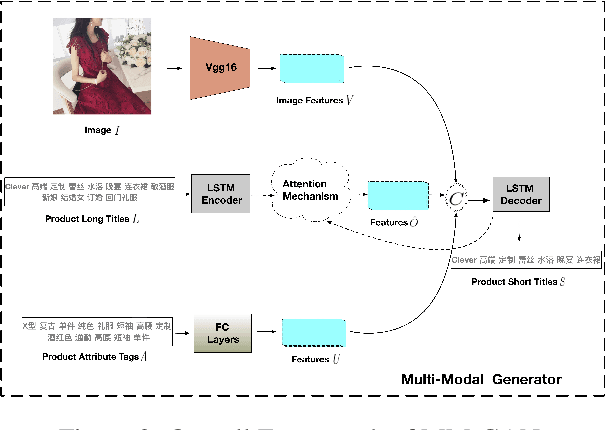

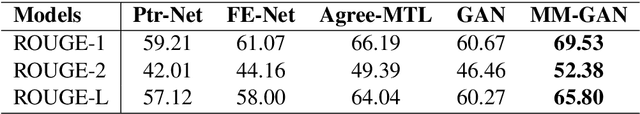

Multi-Modal Generative Adversarial Network for Short Product Title Generation in Mobile E-Commerce

Apr 03, 2019

Abstract: Nowadays, more and more customers prefer to browse and purchase products using mobile E-Commerce apps such as Taobao and Amazon. Since merchants are usually inclined to write redundant and over-informative product titles to attract customers' attention, it is important to display concise short product titles on the limited screen of mobile phones. To address this discrepancy, previous studies mainly consider the textual information of long product titles and lack a human-like view during the training and evaluation process. In this paper, we propose a Multi-Modal Generative Adversarial Network (MM-GAN) for short product title generation in E-Commerce, which innovatively incorporates image information and attribute tags from the product, as well as textual information from the original long titles. MM-GAN poses short title generation as a reinforcement learning process, where the generated titles are evaluated by the discriminator in a human-like view. Extensive experiments on a large-scale E-Commerce dataset demonstrate that our algorithm outperforms other state-of-the-art methods. Moreover, we deploy our model in a real-world online E-Commerce environment and boost click-through rate and click conversion rate by 1.66% and 1.87%, respectively.
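The idea of treating generation as reinforcement learning with a discriminator score as the reward can be sketched with a single REINFORCE-style update on a toy categorical policy. This is a generic policy-gradient illustration, not MM-GAN's actual training procedure: the three-way choice, the reward value, and the `reinforce_step` helper are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, action, reward, lr=0.5):
    """One policy-gradient step: grad of log pi(action) w.r.t. logits is onehot - probs."""
    probs = softmax(logits)
    return [
        l + lr * reward * ((1.0 if i == action else 0.0) - p)
        for i, (l, p) in enumerate(zip(logits, probs))
    ]


# Toy policy over three candidate words; the reward stands in for a discriminator score.
logits = [0.0, 0.0, 0.0]
before = softmax(logits)[1]
logits = reinforce_step(logits, action=1, reward=0.9)
after = softmax(logits)[1]
```

A higher discriminator score for a sampled choice raises its probability under the policy, which is the mechanism the abstract alludes to when it says generated titles are evaluated by the discriminator.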

Product Title Refinement via Multi-Modal Generative Adversarial Learning

Nov 11, 2018

Abstract: Nowadays, an increasing number of customers prefer to use E-commerce apps to browse and purchase products. Since merchants are usually inclined to employ redundant and over-informative product titles to attract customers' attention, it is of great importance to display concise short product titles on the limited screen of cell phones. Previous research mainly considers the textual information of long product titles and lacks a human-like view during the training and evaluation procedure. In this paper, we propose a Multi-Modal Generative Adversarial Network (MM-GAN) for short product title generation, which innovatively incorporates image information, attribute tags from the product, and the textual information from the original long titles. MM-GAN treats short title generation as a reinforcement learning process, where the generated titles are evaluated by the discriminator in a human-like view.
