Pedro Machado

SteganoSNN: SNN-Based Audio-in-Image Steganography with Encryption

Nov 09, 2025

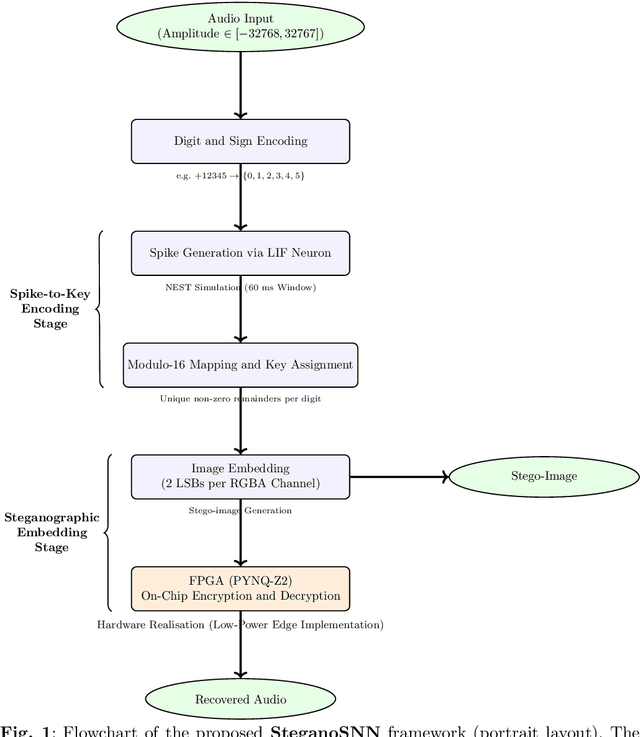

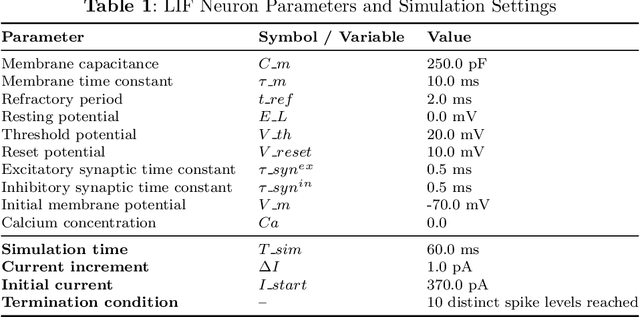

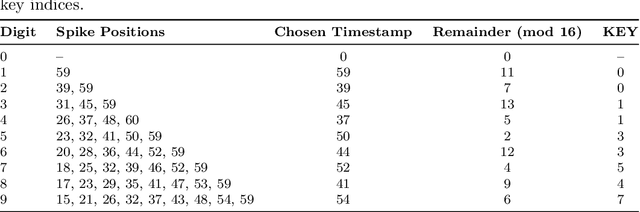

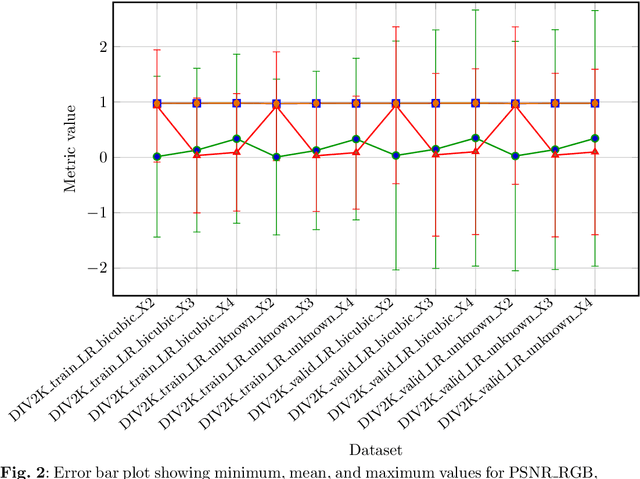

Abstract:Secure data hiding remains a fundamental challenge in digital communication, requiring a careful balance between computational efficiency and perceptual transparency. The balance between security and performance is increasingly fragile with the emergence of generative AI systems capable of autonomously generating and optimising sophisticated cryptanalysis and steganalysis algorithms, thereby accelerating the exposure of vulnerabilities in conventional data-hiding schemes. This work introduces SteganoSNN, a neuromorphic steganographic framework that exploits spiking neural networks (SNNs) to achieve secure, low-power, and high-capacity multimedia data hiding. Digitised audio samples are converted into spike trains using leaky integrate-and-fire (LIF) neurons, encrypted via a modulo-based mapping scheme, and embedded into the least significant bits of RGBA image channels using a dithering mechanism to minimise perceptual distortion. Implemented in Python using NEST and realised on a PYNQ-Z2 FPGA, SteganoSNN attains real-time operation with an embedding capacity of 8 bits per pixel. Experimental evaluations on the DIV2K 2017 dataset demonstrate image fidelity between 40.4 dB and 41.35 dB in PSNR and SSIM values consistently above 0.97, surpassing SteganoGAN in computational efficiency and robustness. SteganoSNN establishes a foundation for neuromorphic steganography, enabling secure, energy-efficient communication for Edge-AI, IoT, and biomedical applications.
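The embedding step described in this abstract can be illustrated with a minimal Python sketch. It assumes the 8 bits per pixel come from writing 2 payload bits into the least significant bits of each of the four RGBA channels; the abstract states only the overall capacity, so this split is an assumption. The spike encoding, modulo-based encryption, and dithering stages are omitted, and the names `embed_bytes`, `extract_bytes`, and `psnr` are hypothetical.

```python
import math


def embed_bytes(pixels, payload, bits_per_channel=2):
    """Hide payload bytes in the low bits of RGBA channels.

    pixels: list of (R, G, B, A) tuples with 8-bit values.
    bits_per_channel must divide 8 (assumed 2 here, giving 8 bits per pixel).
    """
    mask = (1 << bits_per_channel) - 1
    # Split every payload byte into small chunks, most significant first.
    chunks = []
    for byte in payload:
        for shift in range(8 - bits_per_channel, -1, -bits_per_channel):
            chunks.append((byte >> shift) & mask)
    flat = [channel for px in pixels for channel in px]
    if len(chunks) > len(flat):
        raise ValueError("payload too large for cover image")
    # Overwrite only the low bits of each channel value.
    for i, chunk in enumerate(chunks):
        flat[i] = (flat[i] & ~mask & 0xFF) | chunk
    return [tuple(flat[i:i + 4]) for i in range(0, len(flat), 4)]


def extract_bytes(pixels, n_bytes, bits_per_channel=2):
    """Recover n_bytes previously written by embed_bytes."""
    mask = (1 << bits_per_channel) - 1
    per_byte = 8 // bits_per_channel
    flat = [channel for px in pixels for channel in px]
    out = bytearray()
    for b in range(n_bytes):
        value = 0
        for channel in flat[b * per_byte:(b + 1) * per_byte]:
            value = (value << bits_per_channel) | (channel & mask)
        out.append(value)
    return bytes(out)


def psnr(original, modified, peak=255):
    """Peak signal-to-noise ratio in dB between two RGBA pixel lists."""
    a = [c for px in original for c in px]
    b = [c for px in modified for c in px]
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)
```

With 2 bits per channel, no channel value changes by more than 3, which bounds the PSNR from below at roughly 38.6 dB before any dithering is applied.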

Bearded Dragon Activity Recognition Pipeline: An AI-Based Approach to Behavioural Monitoring

Jul 23, 2025

Abstract:Traditional monitoring of bearded dragon (Pogona vitticeps) behaviour is time-consuming and prone to errors. This project introduces an automated system for real-time video analysis, using You Only Look Once (YOLO) object detection models to identify two key behaviours: basking and hunting. We trained five YOLO variants (v5, v7, v8, v11, v12) on a custom, publicly available dataset of 1200 images, encompassing bearded dragons (600), heating lamps (500), and crickets (100). YOLOv8s was selected as the optimal model due to its superior balance of accuracy (mAP@0.5:0.95 = 0.855) and speed. The system processes video footage by extracting per-frame object coordinates, applying temporal interpolation for continuity, and using rule-based logic to classify specific behaviours. Basking detection proved reliable. However, hunting detection was less accurate, primarily due to weak cricket detection (mAP@0.5 = 0.392). Future improvements will focus on enhancing cricket detection through expanded datasets or specialised small-object detectors. This automated system offers a scalable solution for monitoring reptile behaviour in controlled environments, significantly improving research efficiency and data quality.
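The post-processing described in this abstract (temporal interpolation followed by rule-based classification) might look like the following Python sketch. The abstract does not give the actual rules, so the linear interpolation, the centre-distance rule in `is_basking`, and the `max_distance` threshold are all illustrative assumptions, and every function name is hypothetical.

```python
import math


def interpolate_boxes(track):
    """Fill missing detections by linear interpolation.

    track: list with one entry per frame, either a box (x1, y1, x2, y2)
    or None when the detector missed the object. Gaps before the first
    or after the last detection are left as None.
    """
    filled = list(track)
    known = [i for i, box in enumerate(track) if box is not None]
    for start, end in zip(known, known[1:]):
        for i in range(start + 1, end):
            t = (i - start) / (end - start)
            filled[i] = tuple(
                p + t * (q - p) for p, q in zip(track[start], track[end])
            )
    return filled


def centre(box):
    """Centre point of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def is_basking(dragon_box, lamp_box, max_distance):
    """Assumed rule: the dragon is basking when its centre is close to the lamp's."""
    (dx, dy), (lx, ly) = centre(dragon_box), centre(lamp_box)
    return math.hypot(dx - lx, dy - ly) <= max_distance
```

A hunting rule could follow the same pattern with cricket boxes in place of the lamp, although the weak cricket detection reported above would limit its reliability.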

Enhancing Pollinator Conservation towards Agriculture 4.0: Monitoring of Bees through Object Recognition

May 24, 2024

Abstract:In an era of rapid climate change and its adverse effects on food production, technological intervention to monitor pollinators is of paramount importance for environmental conservation and global food security. The survival of the human species depends on the conservation of pollinators. This article explores the use of Computer Vision and Object Recognition to autonomously track and report bee behaviour from images. A novel dataset of 9664 images containing bees is extracted from video streams and annotated with bounding boxes. With training, validation and testing sets (6722, 1915, and 997 images, respectively), the results of fine-tuning COCO-pretrained YOLO models show that YOLOv5m is the most effective approach in terms of recognition accuracy. However, YOLOv5s proved the best choice for real-time bee detection, with an average processing and inference time of 5.1 ms per video frame at the cost of slightly lower detection ability. The trained model is then packaged within an explainable AI interface, which converts detection events into timestamped reports and charts, with the aim of facilitating use by non-technical users such as expert stakeholders from the apiculture industry, towards informing responsible consumption and production.
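As a rough illustration of how detection events could be turned into a timestamped report, the Python sketch below maps per-frame bee counts to video timestamps. The input format, the `fps` parameter, and the function name `detections_to_report` are assumptions made for this example; the abstract does not describe the interface's internals.

```python
from datetime import timedelta


def detections_to_report(frame_counts, fps):
    """Build (timestamp, bee_count) rows for frames with detections.

    frame_counts: number of bees detected in each frame, in frame order.
    fps: frame rate of the source video (assumed known).
    """
    rows = []
    for index, count in enumerate(frame_counts):
        if count > 0:
            # Frame index divided by frame rate gives seconds into the video.
            timestamp = timedelta(seconds=index / fps)
            rows.append((str(timestamp), count))
    return rows
```

The resulting rows could then be written to a CSV file or fed into a charting library.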

WeedScout: Real-Time Autonomous blackgrass Classification and Mapping using dedicated hardware

May 12, 2024

Abstract:Blackgrass (Alopecurus myosuroides) is a competitive weed that has wide-ranging impacts on food security by reducing crop yields and increasing cultivation costs. In addition to the financial burden on agriculture, the application of herbicides as a preventive measure against blackgrass can negatively affect access to clean water and sanitation. The WeedScout project introduces Real-Time Autonomous Black-Grass Classification and Mapping (RT-ABGCM), a solution tailored for real-time detection of blackgrass in precision weed management. Leveraging Artificial Intelligence (AI) algorithms, the system processes live image feeds, infers blackgrass density, and covers two stages of maturation. The research investigates the deployment of You Only Look Once (YOLO) models, specifically the streamlined YOLOv8 and YOLO-NAS, accelerated at the edge with the NVIDIA Jetson Nano (NJN). By optimising inference speed and model performance, the project advances the integration of AI into agricultural practices, offering potential solutions to challenges such as herbicide resistance and environmental impact. Additionally, two datasets and model weights are made available to the research community, facilitating further advancements in weed detection and precision farming technologies.

UDEEP: Edge-based Computer Vision for In-Situ Underwater Crayfish and Plastic Detection

Dec 21, 2023

Abstract:Invasive signal crayfish have a detrimental impact on ecosystems. They spread the fungal-type crayfish plague disease (Aphanomyces astaci) that is lethal to the native white-clawed crayfish, the only native crayfish species in Britain. Invasive signal crayfish burrow extensively, causing habitat destruction, erosion of river banks and adverse changes in water quality, while also competing with native species for resources and leading to declines in native populations. Moreover, pollution exacerbates the vulnerability of white-clawed crayfish, with their populations declining by over 90% in certain English counties, making them highly susceptible to extinction. To safeguard aquatic ecosystems, it is imperative to address the challenges posed by invasive species and discarded plastics in the United Kingdom's river ecosystems. The UDEEP platform can play a crucial role in environmental monitoring by performing on-the-fly classification of signal crayfish and plastic debris while leveraging the efficacy of AI, IoT devices and the power of edge computing (i.e., the NVIDIA Jetson Nano, NJN). By providing accurate data on the presence, spread and abundance of these species, the UDEEP platform can contribute to monitoring efforts and aid in mitigating the spread of invasive species.

Mitigating Adversarial Attacks in Deepfake Detection: An Exploration of Perturbation and AI Techniques

Feb 22, 2023

Abstract:Deep learning (DL) is a crucial aspect of machine learning, but it also makes these techniques vulnerable to adversarial examples, which can be seen in a variety of applications. These examples can even be targeted at humans, leading to the creation of false media, such as deepfakes, which are often used to shape public opinion and damage the reputation of public figures. This article explores the concept of adversarial examples, which consist of perturbations added to clean images or videos, and their ability to deceive DL algorithms. The proposed approach achieved a precision value of accuracy of 76.2% on the DFDC dataset.

Dynamic Training of Liquid State Machines

Feb 06, 2023

Abstract:Spiking Neural Networks (SNNs) emerged as a promising solution in the field of Artificial Neural Networks (ANNs), attracting the attention of researchers due to their ability to mimic the human brain and process complex information with remarkable speed and accuracy. This research aimed to optimise the training process of Liquid State Machines (LSMs), a recurrent architecture of SNNs, by identifying the most effective weight range to assign in the SNN so as to minimise the difference between the desired and actual output. The experimental results showed that, by using spike metrics and a range of weights, the difference between the desired and actual output of spiking neurons could be effectively reduced, leading to improved performance of SNNs. The results were tested and confirmed using three different weight initialisation approaches, with the best results obtained using the Barabasi-Albert random graph method.
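For readers unfamiliar with the Barabasi-Albert model mentioned in this abstract, the Python sketch below generates such a graph by preferential attachment and then draws a weight for each connection from a chosen range. How the paper maps the graph onto LSM weights is not stated in the abstract, so `init_weights`, the uniform distribution, and the weight bounds are assumptions for illustration only.

```python
import random


def barabasi_albert_edges(n, m, seed=None):
    """Return the edges of a Barabasi-Albert graph with n nodes.

    Each new node attaches to m existing nodes, chosen with probability
    proportional to their current degree (preferential attachment).
    """
    if not 1 <= m < n:
        raise ValueError("require 1 <= m < n")
    rng = random.Random(seed)
    targets = list(range(m))  # the first new node links to the m seed nodes
    degree_pool = []          # each node appears once per incident edge
    edges = []
    for source in range(m, n):
        edges.extend((source, target) for target in targets)
        degree_pool.extend(targets)
        degree_pool.extend([source] * m)
        # Sample m distinct targets for the next node, weighted by degree.
        chosen = set()
        while len(chosen) < m:
            chosen.add(rng.choice(degree_pool))
        targets = list(chosen)
    return edges


def init_weights(edges, w_min, w_max, seed=None):
    """Assumed scheme: one uniform random weight in [w_min, w_max] per edge."""
    rng = random.Random(seed)
    return {edge: rng.uniform(w_min, w_max) for edge in edges}
```

Sweeping `w_min` and `w_max` while scoring the output with a spike metric would then mirror the weight-range search the abstract describes, at least in outline.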

Multi-Objective Optimization Approach Using Deep Reinforcement Learning for Energy Efficiency in Heterogeneous Computing System

Feb 01, 2023

Abstract:The growing demand for optimal and low-power energy consumption paradigms for Internet of Things (IoT) devices has garnered significant attention due to their cost-effectiveness, simplicity, and intelligibility. We propose an Artificial Intelligence (AI) hardware energy-efficient framework to achieve optimal energy savings in heterogeneous computing through appropriate power consumption management. A deep reinforcement learning framework is employed, utilizing the Actor-Critic architecture to provide a simple and precise method for power saving. The results of the study demonstrate the proposed approach's suitability for different hardware configurations, achieving notable energy consumption control while adhering to strict performance requirements. The evaluation of the proposed power-saving framework shows that it is more stable, and has achieved more than 23% efficiency improvement, outperforming other methods by more than 5%.

Secure Video Streaming Using Dedicated Hardware

Jan 15, 2023

Abstract:Purpose: The purpose of this article is to present a system that enhances the security, efficiency, and reconfigurability of an Internet-of-Things (IoT) system used for surveillance and monitoring. Methods: A Multi-Processor System-On-Chip (MPSoC) composed of a Central Processing Unit (CPU) and a Field-Programmable Gate Array (FPGA) is proposed for increasing the security and the frame rate of a smart IoT edge device. The private encryption key is safely embedded in the FPGA unit to avoid being exposed in the Random Access Memory (RAM). This allows the edge device to securely store and authenticate the key, protecting the data transmitted from the same Integrated Circuit (IC). Additionally, the edge device can simultaneously publish and route a camera stream using a lightweight communication protocol, achieving a frame rate of 14 frames per second (fps). The performance of the MPSoC is compared to an NVIDIA Jetson Nano (NJN) and a Raspberry Pi 4 (RPI4). The RPI4 is the most cost-effective solution but has a lower frame rate; the NJN is the fastest, achieving the highest frame rate, but it is not secure; and the MPSoC is the optimal solution because it offers a balanced frame rate and never exposes the secure key in memory. Results: The proposed system successfully addresses the challenges of security, scalability, and efficiency in an IoT system used for surveillance and monitoring. The encryption key is securely stored and authenticated, and the edge device is able to simultaneously publish and route a high-definition camera stream at 14 fps.

Benchmarking Edge Computing Devices for Grape Bunches and Trunks Detection using Accelerated Object Detection Single Shot MultiBox Deep Learning Models

Nov 21, 2022

Abstract:Purpose: Visual perception enables robots to perceive the environment. Visual data is processed using computer vision algorithms that are usually time-expensive and require powerful devices to process the visual data in real-time, which is infeasible for open-field robots with limited energy. This work benchmarks the real-time object detection performance of three heterogeneous architectures: embedded GPU -- Graphical Processing Units (such as NVIDIA Jetson Nano 2 GB and 4 GB, and NVIDIA Jetson TX2), TPU -- Tensor Processing Unit (such as Coral Dev Board TPU), and DPU -- Deep Learning Processor Unit (such as in AMD-Xilinx ZCU104 Development Board, and AMD-Xilinx Kria KV260 Starter Kit). Method: The authors used the RetinaNet ResNet-50 fine-tuned using the natural VineSet dataset. The trained model was then converted and compiled for target-specific hardware formats to improve the execution efficiency. Conclusions and Results: The platforms were assessed in terms of performance of the evaluation metrics and efficiency (time of inference). Graphical Processing Units (GPUs) were the slowest devices, running at 3 FPS to 5 FPS, and Field Programmable Gate Arrays (FPGAs) were the fastest devices, running at 14 FPS to 25 FPS. The Tensor Processing Unit (TPU) offers no notable efficiency gain and performs similarly to the NVIDIA Jetson TX2. TPU and GPU are the most power-efficient, consuming about 5 W. Differences in the evaluation metrics across devices are negligible, with an F1 of about 70% and a mean Average Precision (mAP) of about 60%.
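The quantities compared in this benchmark relate to one another through a few standard formulas, sketched below in Python. The helper names are hypothetical, and the energy-per-frame figure is a derived quantity added here for illustration; the abstract reports only power draw and frame rate.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def fps_from_latency(seconds_per_frame):
    """Frames per second from the mean inference time of one frame."""
    return 1.0 / seconds_per_frame


def energy_per_frame(power_watts, fps):
    """Energy in joules spent per processed frame at a given power draw."""
    return power_watts / fps
```

For example, a device drawing about 5 W while running at 5 FPS spends roughly 1 J per frame, which is one way to compare platforms that differ in both speed and power.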
