T. M. McGinnity

Exploiting High Quality Tactile Sensors for Simplified Grasping

Jul 25, 2022

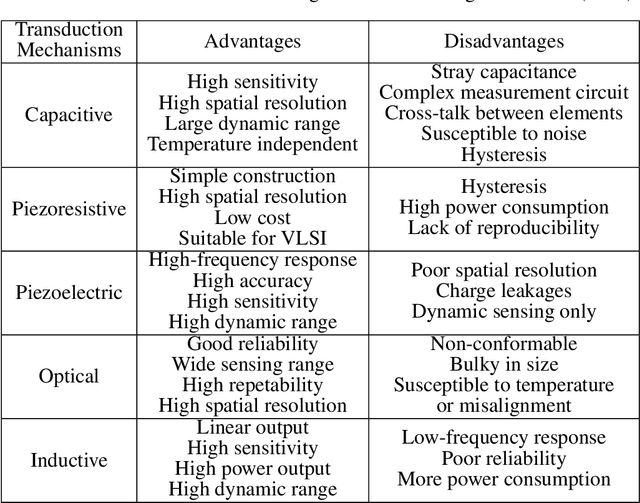

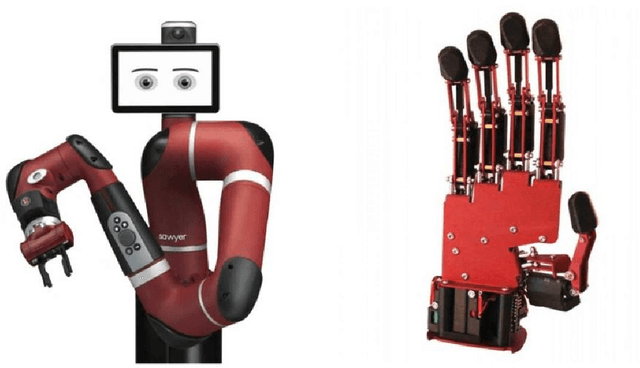

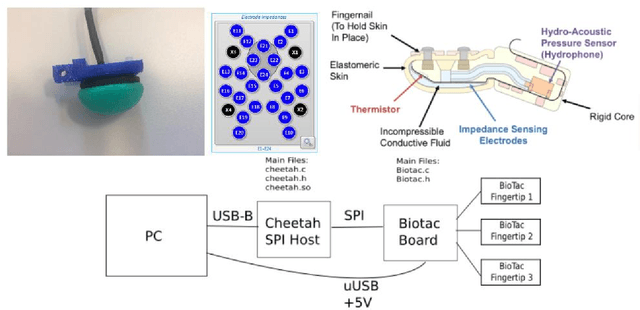

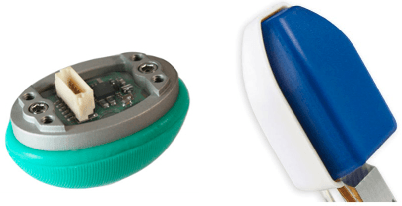

Abstract: Robots are expected to grasp a wide range of objects varying in shape, weight or material type. Providing robots with tactile capabilities similar to those of humans is thus essential for applications involving human-to-robot or robot-to-robot interactions, particularly in situations where a robot is expected to grasp and manipulate complex objects it has not previously encountered. A critical aspect of successful object grasping and manipulation is the use of high-quality fingertips equipped with multiple high-performance sensors, distributed appropriately across a specific contact surface. In this paper, we present a detailed analysis of the use of two different types of commercially available robotic fingertips (BioTac and WTS-FT), each of which is equipped with multiple sensors distributed across the fingertip's contact surface. We further demonstrate that, due to the high performance of the fingertips, a complex adaptive grasping algorithm is not required for grasping everyday objects. We conclude that a simple algorithm based on a proportional controller will suffice for many grasping applications, provided the relevant fingertips exhibit high sensitivity. In a quantified assessment, we also demonstrate that, due in part to the sensor distribution, the BioTac-based fingertip performs better than the WTS-FT device, enabling the lifting of loads of up to 850 g, and that the simple proportional controller can adapt the grasp even when the object is exposed to significant external vibrational challenges.
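The abstract does not give the controller's equations. As a minimal sketch, assuming a single finger whose closing velocity is proportional to the error between a target contact force and the force reported by the fingertip sensor, a proportional grasp loop could look like the following. The spring-like contact model, the gains and limits, and the function names `finger_velocity` and `simulate_grasp` are hypothetical stand-ins, not the BioTac or WTS-FT interfaces.

```python
def finger_velocity(target_force: float, measured_force: float,
                    kp: float, v_max: float) -> float:
    """Proportional law: closing velocity is proportional to the force error."""
    error = target_force - measured_force      # N; positive means squeeze harder
    velocity = kp * error                       # (m/s) per N of error
    return max(-v_max, min(v_max, velocity))    # saturate at actuator limits


def simulate_grasp(target_force: float = 2.0, kp: float = 0.01,
                   v_max: float = 0.02, stiffness: float = 500.0,
                   contact_pos: float = 0.01, dt: float = 0.001,
                   steps: int = 5000) -> float:
    """Toy 1-DoF fingertip closing on a spring-like object (all values hypothetical)."""
    pos = 0.0
    force = 0.0
    for _ in range(steps):
        # Simulated tactile reading: linear spring once the finger touches the object.
        force = stiffness * max(0.0, pos - contact_pos)
        # Integrate the commanded velocity over one control period.
        pos += finger_velocity(target_force, force, kp, v_max) * dt
    return force
```

With these illustrative values the loop settles at the target force. In this discrete-time model the force error shrinks by a factor of `1 - kp * stiffness * dt` per step, so a larger `kp` converges faster but makes the loop oscillate once that product exceeds 1 and diverge beyond 2.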

Object recognition for robotics from tactile time series data utilising different neural network architectures

Sep 09, 2021

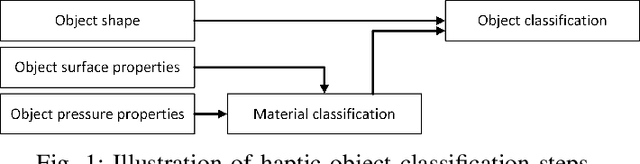

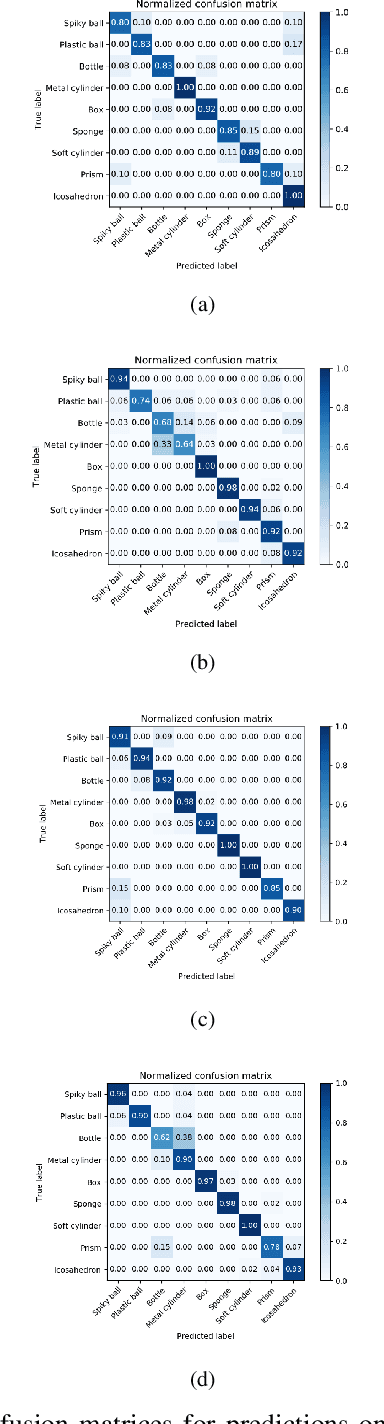

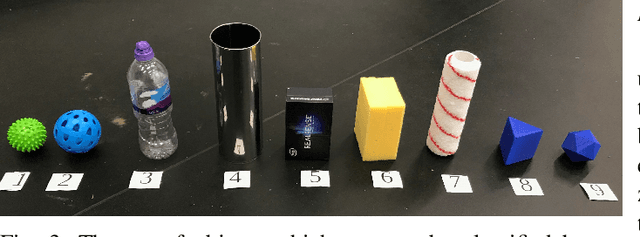

Abstract: Robots need to exploit high-quality information on grasped objects to interact with the physical environment. Haptic data can therefore be used to supplement the visual modality. This paper investigates the use of Convolutional Neural Network (CNN) and Long Short-Term Memory (LSTM) architectures for object classification on spatio-temporal tactile grasping data. Furthermore, we compare these methods using data from two different fingertip sensors (namely the BioTac SP and WTS-FT) in the same physical setup, allowing for a realistic comparison across methods and sensors on the same tactile object classification dataset. Additionally, we propose a way to create more training examples from the recorded data. The results show that the proposed method improves the maximum accuracy from 82.4% (BioTac SP fingertips) and 90.7% (WTS-FT fingertips) with complete time-series data to about 94% for both sensor types.
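The abstract does not say how the extra training examples are generated. One common approach, shown here only as an assumption and not as the paper's method, is to slice each labelled grasp recording into overlapping windows that inherit the recording's object label. `sliding_windows`, `window` and `stride` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Frame = Sequence[float]  # one time step of tactile readings (e.g. all taxel values)


def sliding_windows(series: Sequence[Frame], label: int,
                    window: int, stride: int) -> List[Tuple[List[Frame], int]]:
    """Cut one labelled recording into overlapping, equally labelled sub-sequences."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    examples = []
    # Each start index yields a full-length window; a trailing partial window is dropped.
    for start in range(0, len(series) - window + 1, stride):
        examples.append((list(series[start:start + window]), label))
    return examples
```

For example, a 100-frame recording with `window=50` and `stride=10` yields six training examples instead of one. Whether such windows preserve enough of the grasp dynamics for a given sensor is an empirical question.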

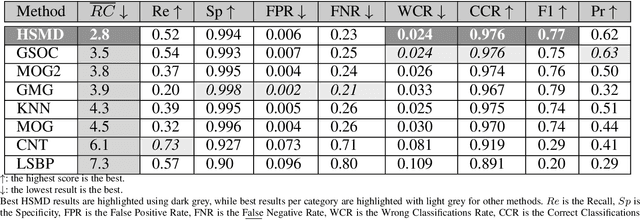

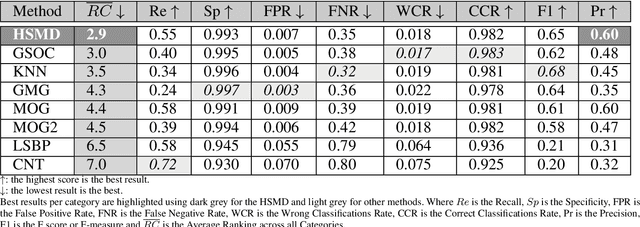

HSMD: An object motion detection algorithm using a Hybrid Spiking Neural Network Architecture

Sep 09, 2021

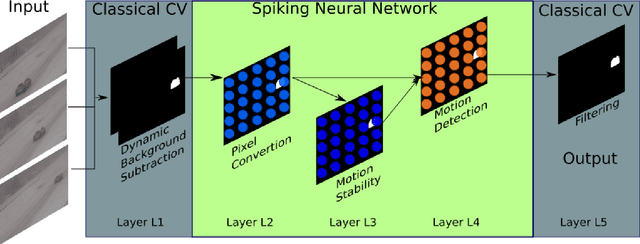

Abstract: The detection of moving objects is a trivial task for vertebrate retinas, yet a complex task in computer vision. Object-motion-sensitive ganglion cells (OMS-GC) are specialised cells in the retina that sense moving objects. OMS-GC take continuous signals as input and produce spike patterns as output, which are transmitted to the visual cortex via the optic nerve. The Hybrid Sensitive Motion Detector (HSMD) algorithm proposed in this work enhances the GSOC dynamic background subtraction (DBS) algorithm with a customised 3-layer spiking neural network (SNN) that outputs spiking responses akin to those of the OMS-GC. The algorithm was compared against existing background subtraction (BS) approaches available in the OpenCV library, specifically on the 2012 change detection (CDnet2012) and 2014 change detection (CDnet2014) benchmark datasets. The results show that HSMD ranked first overall among the competing approaches and outperformed all the other algorithms in four of the categories across all eight test metrics. Furthermore, the HSMD proposed in this paper is the first to use an SNN to enhance an existing state-of-the-art DBS algorithm (GSOC), and the results demonstrate that the SNN provides near real-time performance in realistic applications.
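The abstract does not specify the neuron model or the layout of HSMD's three SNN layers. As a minimal sketch, assuming leaky integrate-and-fire (LIF) neurons with one neuron per pixel, a single layer that turns a per-pixel foreground signal from a background subtractor such as GSOC into spikes could look like this. `LIFLayer`, `tau` and `threshold` are hypothetical, and the background-subtraction stage itself is not shown.

```python
from typing import List

Grid = List[List[float]]


class LIFLayer:
    """Grid of leaky integrate-and-fire neurons, one per pixel (hypothetical parameters)."""

    def __init__(self, height: int, width: int, tau: float = 0.9,
                 threshold: float = 1.0) -> None:
        self.tau = tau              # membrane leak factor per frame (0 < tau < 1)
        self.threshold = threshold  # potential at which a neuron fires
        self.v = [[0.0] * width for _ in range(height)]  # membrane potentials

    def step(self, current: Grid) -> List[List[int]]:
        """Integrate one frame of input current and return a binary spike map."""
        spikes = []
        for r, row in enumerate(current):
            out_row = []
            for c, i_in in enumerate(row):
                # Leak the previous potential, then add this frame's input.
                v = self.tau * self.v[r][c] + i_in
                if v >= self.threshold:
                    out_row.append(1)
                    v = 0.0  # reset the membrane after a spike
                else:
                    out_row.append(0)
                self.v[r][c] = v
            spikes.append(out_row)
        return spikes
```

With `tau=0.9`, `threshold=1.0` and an input of 0.6 for foreground pixels, a pixel that stays in the foreground fires every second frame, while a pixel that is foreground for a single frame never reaches threshold. This is one way a spiking stage can suppress transient noise in a foreground mask.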

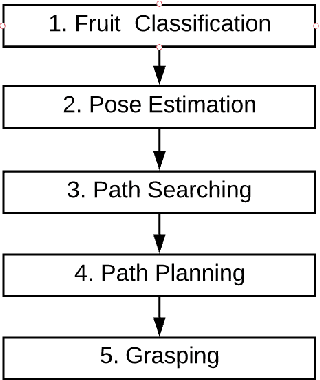

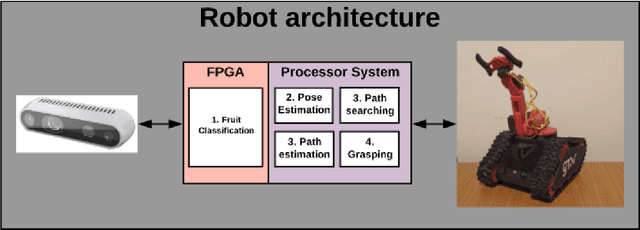

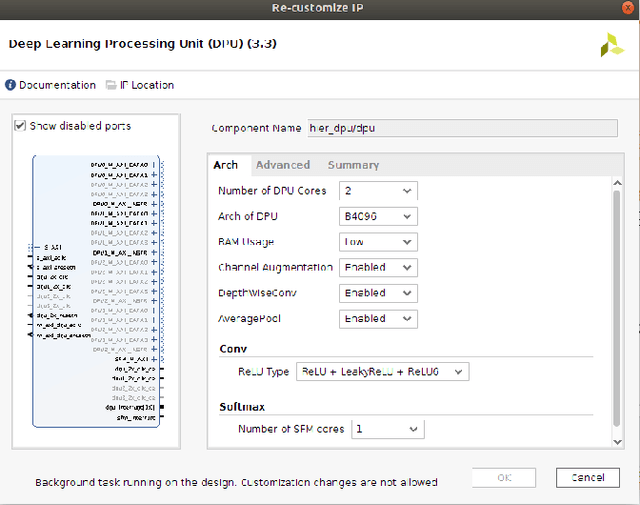

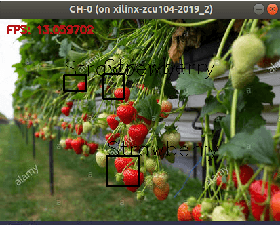

Strawberry Detection Using a Heterogeneous Multi-Processor Platform

Nov 07, 2020

Abstract: Over the last few years, the number of precision farming projects has increased, particularly in harvesting robotics, and many of these projects have made continued progress from identifying crops to grasping the desired fruit or vegetable. One of the most common issues in precision farming projects is that successful application depends heavily not just on identifying the fruit but also on localisation that is accurate enough for navigation. These issues become significant when the robot is not operating in a prearranged environment, or when vegetation becomes so thick that it covers the crop. Moreover, running a state-of-the-art deep learning algorithm on an embedded platform is very challenging and often results in low frame rates. This paper proposes using the You Only Look Once version 3 (YOLOv3) Convolutional Neural Network (CNN), combined with image processing techniques, for strawberry detection in precision farming robots, accelerated on a heterogeneous multi-processor platform. The results show a five-fold speed-up when the algorithm is implemented on a Field-Programmable Gate Array (FPGA) compared with the same algorithm running on the processor side, with an accuracy of 78.3% on a test set of 146 images.
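YOLOv3 produces many overlapping candidate boxes, which are normally filtered by score thresholding and non-maximum suppression (NMS) before detections are counted. The abstract does not describe how this stage is split between the processor and the FPGA, so the following is only a plain-Python illustration of greedy NMS under assumed thresholds (`score_thresh=0.5`, `iou_thresh=0.45`), not the paper's accelerated implementation.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        score_thresh: float = 0.5,
                        iou_thresh: float = 0.45) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    # Discard low-confidence candidates, then visit the rest from best to worst.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thresh),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) < iou_thresh for k in keep):
            keep.append(i)
    return keep
```

Two boxes around the same strawberry that overlap by more than `iou_thresh` collapse to the higher-scoring one, while well-separated fruit are kept as separate detections.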

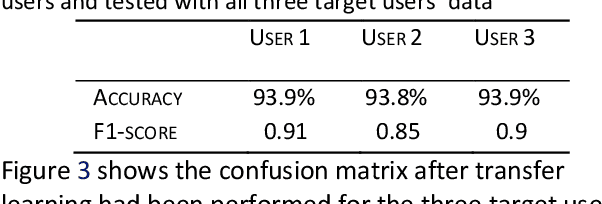

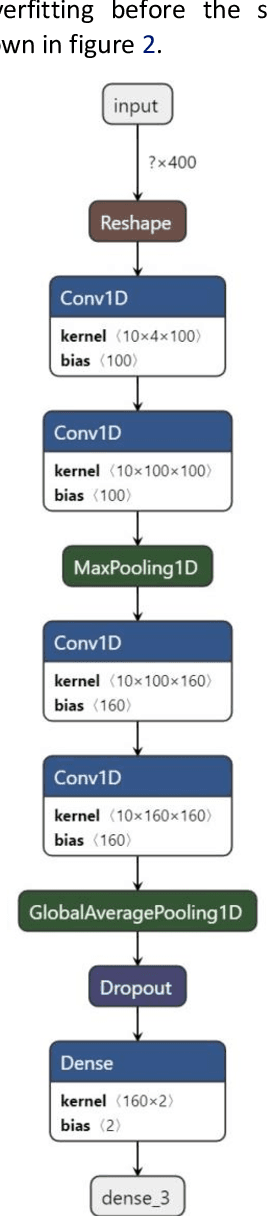

On-Device Transfer Learning for Personalising Psychological Stress Modelling using a Convolutional Neural Network

Apr 03, 2020

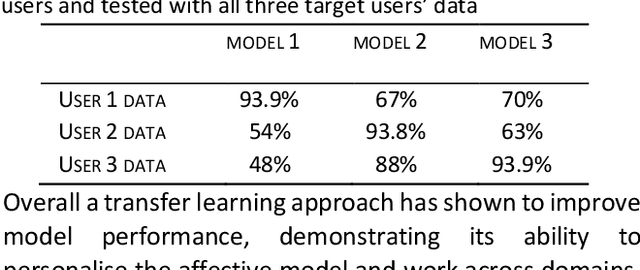

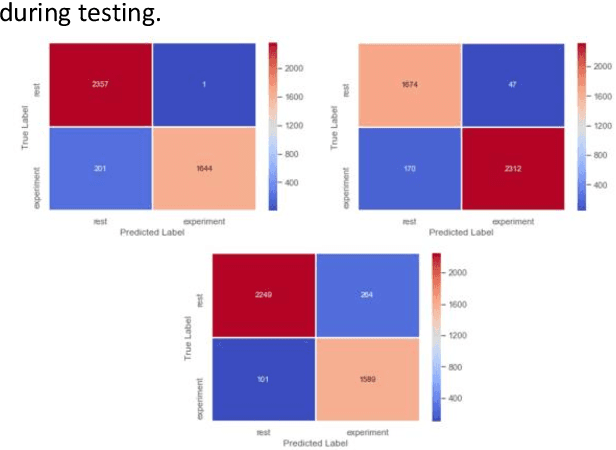

Abstract: Stress is a growing concern in modern society, adversely impacting the wider population more than ever before. Accurate inference of stress may make personalised interventions possible. However, individual differences between people limit the generalisability of machine learning models that infer emotions, as people's physiology varies widely when they experience the same emotions. In addition, collecting large datasets of individuals' emotions is time-consuming and extremely challenging, as it relies on users labelling sensor data in real time for extended periods. We propose the development of a personalised, cross-domain 1D CNN that uses transfer learning from an initial base model trained on data from 20 participants completing a controlled stressor experiment. By using physiological sensors (HR, HRV, EDA) embedded within edge computing interfaces that also contain a labelling technique, it is possible to collect a small real-world personal dataset that can be used for on-device transfer learning to improve model personalisation and cross-domain performance.
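The abstract describes transfer learning from a base 1D CNN to a small personal dataset but not which layers are retrained. A minimal sketch, assuming the convolutional base is frozen and only a new logistic output layer is fitted on-device, is shown below. The fixed kernels stand in for pre-trained filters, and the class names, signals and hyperparameters are hypothetical; the real base model was trained on data from 20 participants.

```python
import math
from typing import List, Sequence


def conv1d_relu(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """'Valid' 1D convolution (cross-correlation, as in CNN layers) followed by ReLU."""
    k = len(kernel)
    return [max(0.0, sum(signal[t + j] * kernel[j] for j in range(k)))
            for t in range(len(signal) - k + 1)]


class FrozenBase:
    """Stand-in for the pre-trained convolutional base; its weights are never updated."""

    def __init__(self, kernels: List[List[float]]) -> None:
        self.kernels = kernels

    def features(self, signal: Sequence[float]) -> List[float]:
        # One feature per filter: ReLU activations averaged over time (global average pooling).
        feats = []
        for kernel in self.kernels:
            act = conv1d_relu(signal, kernel)
            feats.append(sum(act) / len(act))
        return feats


class LogisticHead:
    """New output layer (binary: calm vs. stressed) trained on the personal data only."""

    def __init__(self, n_features: int) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0

    def prob(self, x: Sequence[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, X: List[List[float]], y: List[int],
            lr: float = 0.5, epochs: int = 300) -> None:
        # Plain SGD on binary cross-entropy; (p - t) is the gradient w.r.t. the logit.
        for _ in range(epochs):
            for x, t in zip(X, y):
                g = self.prob(x) - t
                self.w = [wi - lr * g * xi for wi, xi in zip(self.w, x)]
                self.b -= lr * g


def personalise(base: FrozenBase, head: LogisticHead,
                signals: List[List[float]], labels: List[int]) -> LogisticHead:
    """Transfer learning: reuse frozen base features, fit only the head on-device."""
    X = [base.features(s) for s in signals]
    head.fit(X, labels)
    return head
```

Freezing the base keeps the on-device update small (only `len(kernels) + 1` parameters here), which suits a handful of user-labelled windows; retraining deeper layers would need more personal data and compute.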
