Eiman Kanjo

MultiCore+TPU Accelerated Multi-Modal TinyML for Livestock Behaviour Recognition

Apr 10, 2025

Abstract: The advancement of technology has revolutionised the agricultural industry, transitioning it from labour-intensive farming practices to automated, AI-powered management systems. In recent years, more intelligent livestock monitoring solutions have been proposed to enhance farming efficiency and productivity. This work presents a novel approach to animal activity recognition and movement tracking, leveraging tiny machine learning (TinyML) techniques, a wireless communication framework, and microcontroller platforms to develop an efficient, cost-effective livestock sensing system. It collects and fuses accelerometer data and vision inputs to build a multi-modal network for three tasks: image classification, object detection, and behaviour recognition. The system is deployed and evaluated on commercial microcontrollers for real-time inference using embedded applications, demonstrating up to 270$\times$ model size reduction, less than 80ms response latency, and performance on par with existing methods. The incorporation of the TinyML technique allows for seamless data transmission between devices, benefiting use cases in remote locations with poor Internet connectivity. This work delivers a robust, scalable IoT-edge livestock monitoring solution adaptable to diverse farming needs, offering flexibility for future extensions.

Decentralised Resource Sharing in TinyML: Wireless Bilayer Gossip Parallel SGD for Collaborative Learning

Jan 08, 2025

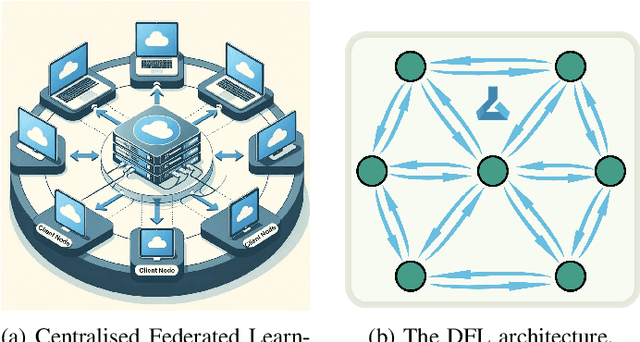

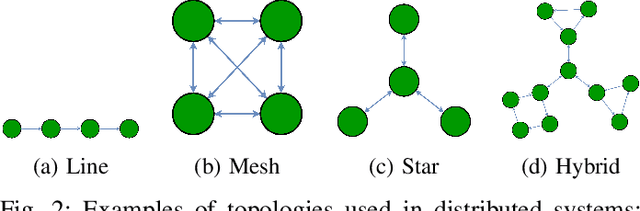

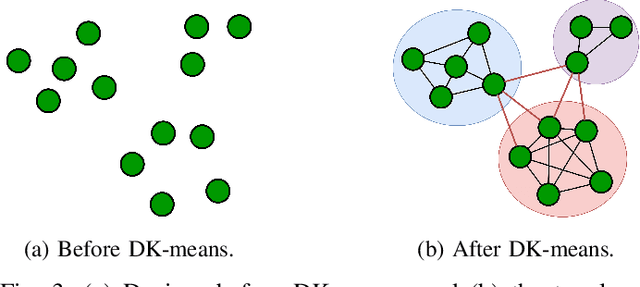

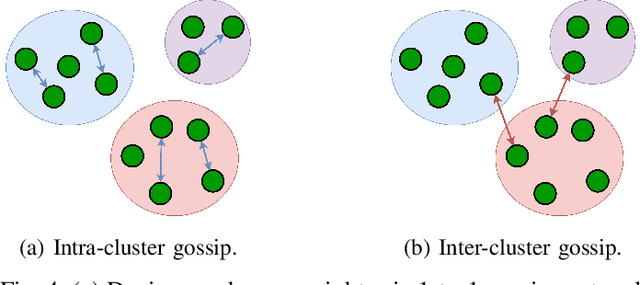

Abstract: With the growing computational capabilities of microcontroller units (MCUs), edge devices can now support machine learning models. However, deploying decentralised federated learning (DFL) on such devices presents key challenges, including intermittent connectivity, limited communication range, and dynamic network topologies. This paper proposes a novel framework, bilayer Gossip Decentralised Parallel Stochastic Gradient Descent (GD PSGD), designed to address these issues in resource-constrained environments. The framework incorporates a hierarchical communication structure using Distributed Kmeans (DKmeans) clustering for geographic grouping and a gossip protocol for efficient model aggregation across two layers: intra-cluster and inter-cluster. We evaluate the framework's performance against the Centralised Federated Learning (CFL) baseline using the MCUNet model on the CIFAR-10 dataset under IID and Non-IID conditions. Results demonstrate that the proposed method achieves comparable accuracy to CFL on IID datasets, requiring only 1.8 additional rounds for convergence. On Non-IID datasets, the accuracy loss remains under 8% for moderate data imbalance. These findings highlight the framework's potential to support scalable and privacy-preserving learning on edge devices with minimal performance trade-offs.
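The two-layer aggregation described in this abstract can be illustrated with a minimal sketch of pairwise gossip averaging. This is not the authors' implementation: each node holds a single scalar in place of model weights, the clusters are given rather than computed by DKmeans, no SGD step is included, and the function names, the choice of the first cluster member as representative, and the step counts are all hypothetical.

```python
import random


def gossip_round(values, members, rng):
    """One gossip step: two random members replace their values with the pair's mean."""
    i, j = rng.sample(members, 2)
    mean = (values[i] + values[j]) / 2.0
    values[i] = values[j] = mean


def bilayer_gossip(values, clusters, intra_steps, inter_steps, rng):
    """Run one bilayer round in place: gossip inside each cluster, then across clusters."""
    # Layer 1 (intra-cluster): nodes within the same cluster average pairwise.
    for members in clusters:
        if len(members) >= 2:
            for _ in range(intra_steps):
                gossip_round(values, members, rng)
    # Layer 2 (inter-cluster): one representative per cluster gossips with the others.
    # Picking the first member as representative is an assumption made for illustration.
    heads = [members[0] for members in clusters]
    if len(heads) >= 2:
        for _ in range(inter_steps):
            gossip_round(values, heads, rng)
    return values
```

Because every step replaces two values with their mean, the global average is preserved while the spread between nodes never grows, which is the property that lets gossip stand in for a central aggregator.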

NeuroHSMD: Neuromorphic Hybrid Spiking Motion Detector

Dec 12, 2021

Abstract: Vertebrate retinas are highly efficient at processing seemingly trivial visual tasks, such as detecting moving objects, that remain complex for modern computers. The detection of object motion is done by specialised retinal ganglion cells named Object-motion-sensitive ganglion cells (OMS-GC). OMS-GC process continuous signals and generate spike patterns that are post-processed by the Visual Cortex. The Neuromorphic Hybrid Spiking Motion Detector (NeuroHSMD) proposed in this work accelerates the HSMD algorithm using Field-Programmable Gate Arrays (FPGAs). The Hybrid Spiking Motion Detector (HSMD) algorithm was the first hybrid algorithm to enhance dynamic background subtraction (DBS) algorithms with a customised 3-layer spiking neural network (SNN) that generates OMS-GC spiking-like responses. The NeuroHSMD algorithm was compared against the HSMD algorithm, using the same 2012 change detection (CDnet2012) and 2014 change detection (CDnet2014) benchmark datasets. The results show that the NeuroHSMD produces the same results as the HSMD algorithm in real-time without degradation of quality. Moreover, the NeuroHSMD proposed in this paper was completely implemented in Open Computing Language (OpenCL) and is therefore easily replicated on other devices such as Graphics Processing Units (GPUs) and clusters of Central Processing Units (CPUs).

DigitalExposome: Quantifying the Urban Environment Influence on Wellbeing based on Real-Time Multi-Sensor Fusion and Deep Belief Network

Jan 29, 2021

Abstract: In this paper, we define the term 'DigitalExposome' as a conceptual framework that takes us closer towards understanding the relationship between environment, personal characteristics, behaviour and wellbeing using multimodal mobile sensing technology. Specifically, we simultaneously collected (for the first time) multi-sensor data including urban environmental factors (e.g. air pollution including: PM1, PM2.5, PM10, Oxidised, Reduced, NH3 and Noise, People Count in the vicinity), body reaction (physiological reactions including: EDA, HR, HRV, Body Temperature, BVP and movement) and individuals' perceived responses (e.g. self-reported valence) in urban settings. Our users followed a pre-specified urban path and collected the data using comprehensive sensing edge devices. The data is instantly fused, time-stamped and geo-tagged at the point of collection. A range of multivariate statistical analysis techniques have been applied, including Principal Component Analysis, Regression and spatial visualisations, to unravel the relationship between the variables. Results showed that EDA and Heart Rate Variability (HRV) are noticeably impacted by the level of Particulate Matter (PM) in the environment. Furthermore, we adopted a Deep Belief Network to extract features from the multimodal data feed, which outperformed a Convolutional Neural Network and achieved up to (a=80.8%, σ=0.001) accuracy.

Combining Deep Transfer Learning with Signal-image Encoding for Multi-Modal Mental Wellbeing Classification

Nov 20, 2020

Abstract: The quantification of emotional states is an important step to understanding wellbeing. Time series data from multiple modalities such as physiological and motion sensor data have proven to be integral for measuring and quantifying emotions. Monitoring emotional trajectories over long periods of time has some critical limitations in relation to the size of the training data. This shortcoming may hinder the development of reliable and accurate machine learning models. To address this problem, this paper proposes a framework to tackle the limitation in performing emotional state recognition on multiple multimodal datasets: 1) encoding multivariate time series data into coloured images; 2) leveraging pre-trained object recognition models to apply a Transfer Learning (TL) approach using the images from step 1; 3) utilising a 1D Convolutional Neural Network (CNN) to perform emotion classification from physiological data; 4) concatenating the pre-trained TL model with the 1D CNN. Furthermore, the possibility of performing TL to infer stress from physiological data is explored by initially training a 1D CNN using a large physical activity dataset and then applying the learned knowledge to the target dataset. We demonstrate that model performance when inferring real-world wellbeing rated on a 5-point Likert scale can be enhanced using our framework, resulting in up to 98.5% accuracy, outperforming a conventional CNN by 4.5%. Subject-independent models using the same approach resulted in an average of 72.3% accuracy (SD 0.038). The proposed CNN-TL-based methodology may overcome problems with small training datasets, thus improving on the performance of conventional deep learning methods.
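Step 1 of this framework, encoding multivariate time series as coloured images, can be sketched as follows. The abstract does not say which encoding is used, so this example assumes a Gramian Angular Summation Field (GASF), one common signal-to-image transform, and maps three sensor channels onto the red, green and blue planes. The function names and the channel-to-colour mapping are hypothetical.

```python
import math


def rescale(series):
    """Linearly rescale a series to [-1, 1]; a constant series maps to all zeros."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]
    return [2.0 * (x - lo) / (hi - lo) - 1.0 for x in series]


def gasf(series):
    """Gramian Angular Summation Field: G[i][j] = cos(phi_i + phi_j), phi = arccos(x)."""
    # Clamp guards against rounding pushing a value just outside acos's domain.
    scaled = [max(-1.0, min(1.0, x)) for x in rescale(series)]
    phi = [math.acos(x) for x in scaled]
    return [[math.cos(a + b) for b in phi] for a in phi]


def encode_rgb(channels):
    """Turn three equal-length series into an n x n x 3 image with values in 0..255."""
    fields = [gasf(c) for c in channels]
    n = len(fields[0])
    return [
        [[int(round((f[i][j] + 1.0) * 127.5)) for f in fields] for j in range(n)]
        for i in range(n)
    ]
```

An image produced this way has the three-channel layout that pre-trained object recognition models expect, which is what makes the transfer learning in step 2 possible.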

On-Device Transfer Learning for Personalising Psychological Stress Modelling using a Convolutional Neural Network

Apr 03, 2020

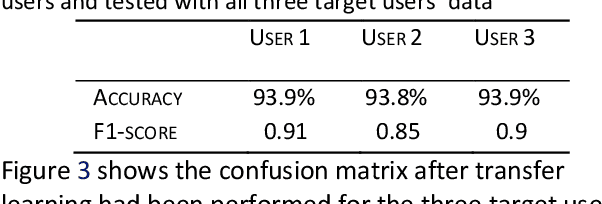

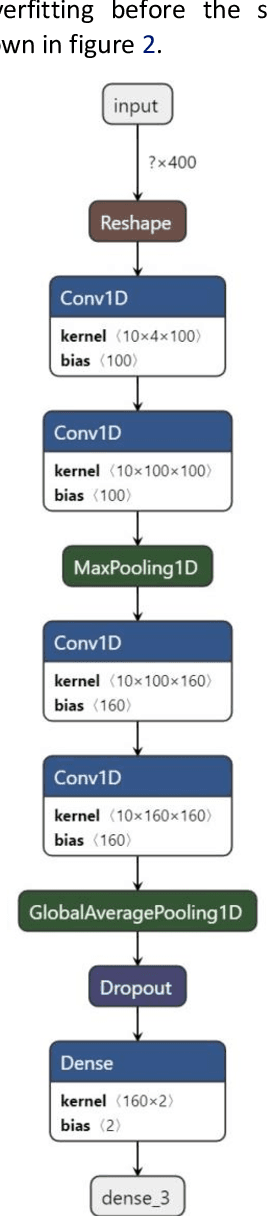

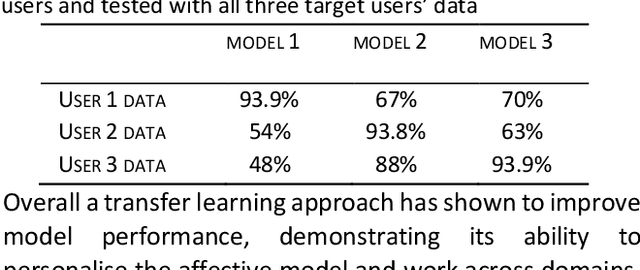

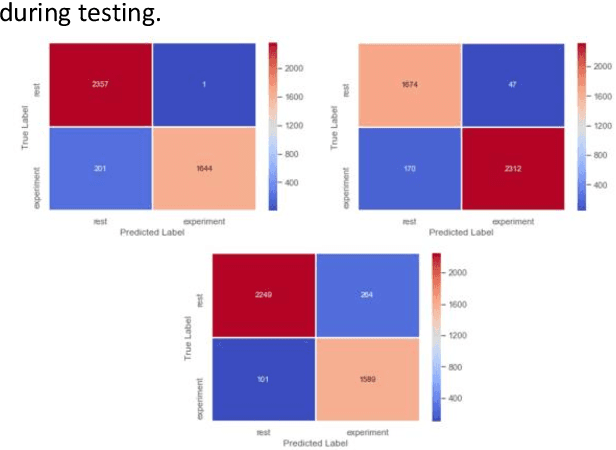

Abstract: Stress is a growing concern in modern society, adversely impacting the wider population more than ever before. The accurate inference of stress may result in the possibility for personalised interventions. However, individual differences between people limit the generalisability of machine learning models to infer emotions, because people's physiology varies widely when experiencing the same emotions. In addition, it is time-consuming and extremely challenging to collect large datasets of individuals' emotions, as it relies on users labelling sensor data in real-time for extended periods. We propose the development of a personalised, cross-domain 1D CNN by utilising transfer learning from an initial base model trained using data from 20 participants completing a controlled stressor experiment. By utilising physiological sensors (HR, HRV, EDA) embedded within edge computing interfaces that additionally contain a labelling technique, it is possible to collect a small real-world personal dataset that can be used for on-device transfer learning to improve model personalisation and cross-domain performance.

LabelSens: Enabling Real-time Sensor Data Labelling at the point of Collection on Edge Computing

Oct 04, 2019

Abstract: In recent years, machine learning has made leaps and bounds, enabling applications with high recognition accuracy for speech and images. However, other types of data to which these models can be applied have not yet been explored as thoroughly. In particular, it can be relatively challenging to accurately classify single or multi-modal, real-time sensor data. Labelling is an indispensable stage of data pre-processing that can be even more challenging in real-time sensor data collection. Currently, real-time sensor data labelling is an unwieldy process with limited tools available and poor performance characteristics that can lead to the performance of the machine learning models being compromised. In this paper, we introduce new techniques for labelling at the point of collection coupled with a systematic performance comparison of two popular types of Deep Neural Networks running on five custom-built edge devices. These state-of-the-art edge devices are designed to enable real-time labelling with various buttons, slide potentiometer and force sensors. This research provides results and insights that can help researchers utilising edge devices for real-time data collection select appropriate labelling techniques. We also identify common bottlenecks in each architecture and provide field-tested guidelines to assist developers building adaptive, high-performance edge solutions.
