Strawberry Detection Using a Heterogeneous Multi-Processor Platform

Paper and Code

Nov 07, 2020

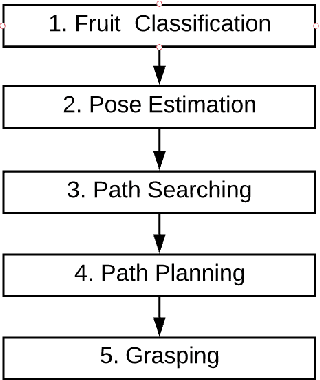

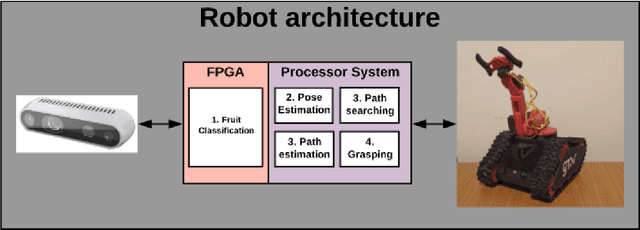

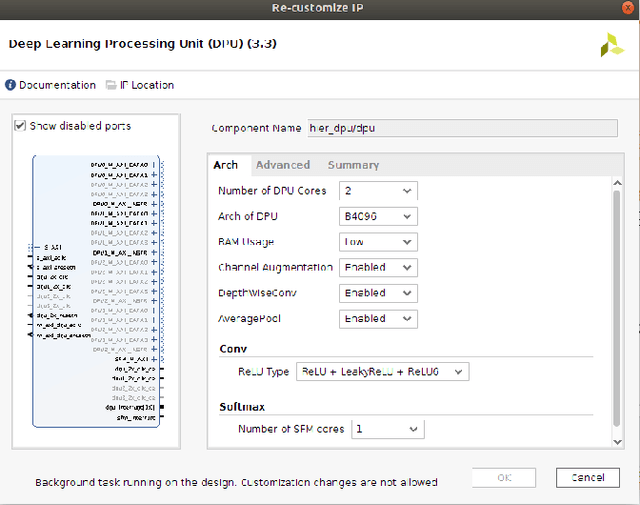

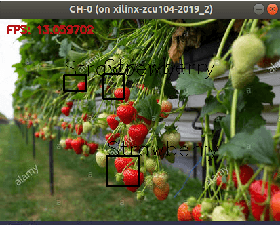

Over the last few years, the number of precision farming projects has increased, particularly in harvesting robots, many of which have progressed from identifying crops to grasping the desired fruit or vegetable. A common issue in these projects is that successful application depends heavily not just on identifying the fruit but also on ensuring that localisation allows for accurate navigation. These issues become significant when the robot is not operating in a prearranged environment, or when vegetation becomes thick enough to cover the crop. Moreover, running a state-of-the-art deep learning algorithm on an embedded platform is challenging and most of the time results in low frame rates. This paper proposes using the You Only Look Once version 3 (YOLOv3) Convolutional Neural Network (CNN) in combination with image processing techniques for precision farming robots targeting strawberry detection, accelerated on a heterogeneous multiprocessor platform. The results show a fivefold speed-up when the algorithm is implemented on a Field-Programmable Gate Array (FPGA) compared with the same algorithm running on the processor side, with an accuracy of 78.3% over a test set of 146 images.
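YOLO-style detectors typically emit many overlapping candidate boxes per object, which are usually pruned with greedy non-maximum suppression (NMS) before detections are counted. The abstract does not describe the paper's post-processing, so the following is only a minimal, dependency-free sketch of that generic step. The function names, the `[x1, y1, x2, y2]` box layout, and both threshold values are illustrative assumptions, not taken from the paper.

```python
def iou(a, b):
    """Intersection over union of two boxes given as [x1, y1, x2, y2]."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, score_thresh=0.5, iou_thresh=0.45):
    """Greedy NMS; returns indices of kept boxes, highest score first.

    score_thresh and iou_thresh are assumed example values, not the paper's.
    """
    # Drop low-confidence candidates, then visit the rest in descending score order.
    order = sorted(
        (i for i, s in enumerate(scores) if s >= score_thresh),
        key=lambda i: scores[i],
        reverse=True,
    )
    kept = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in kept):
            kept.append(i)
    return kept


# Hypothetical candidate detections for a single image.
boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30], [0, 0, 5, 5]]
scores = [0.9, 0.8, 0.7, 0.3]
kept = non_max_suppression(boxes, scores)
```

Here the second box overlaps the first heavily and is suppressed, the fourth falls below the score threshold, and the first and third survive. On an embedded target this step is cheap next to the convolutional layers, so it is commonly left on the processor while the network itself is offloaded.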
