Pavlos Protopapas

PTL-PINNs: Perturbation-Guided Transfer Learning with Physics-Informed Neural Networks for Nonlinear Systems

Jan 17, 2026

Abstract: Accurately and efficiently solving nonlinear differential equations is crucial for modeling dynamic behavior across science and engineering. Physics-Informed Neural Networks (PINNs) have emerged as a powerful solution that embeds physical laws in training by enforcing equation residuals. However, PINNs struggle to model nonlinear dynamics, suffering from limited generalization across problems and long training times. To address these limitations, we propose a perturbation-guided transfer learning framework for PINNs (PTL-PINN), which integrates perturbation theory with transfer learning to efficiently solve nonlinear equations. Unlike gradient-based transfer learning, PTL-PINNs solve an approximate linear perturbative system using closed-form expressions, enabling rapid generalization with the time complexity of matrix-vector multiplication. We show that PTL-PINNs achieve accuracy comparable to various Runge-Kutta methods, with computational speeds up to one order of magnitude faster. To benchmark performance, we solve a broad set of problems, including nonlinear oscillators across various damping regimes, the equilibrium-centered Lotka-Volterra system, the KPP-Fisher equation, and the wave equation. Since perturbation theory sets the accuracy bound of PTL-PINNs, we systematically evaluate its practical applicability. This work connects long-standing perturbation methods with PINNs, demonstrating how perturbation theory can guide foundational models to solve nonlinear systems with speeds comparable to those of classical solvers.
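The closed-form transfer step mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a toy linear ODE, u'(t) + u(t) = f(t) with u(0) = u0, and uses fixed random tanh features as a hypothetical stand-in for the latent layer of a trained network. The names `features`, `solve`, and `ic_weight` are illustrative. The pseudo-inverse is computed once, so each new forcing term or initial condition costs only a matrix-vector product.

```python
import numpy as np

# Fixed random tanh features: a hypothetical stand-in for a trained latent layer.
rng = np.random.default_rng(0)
n_features = 40
w = rng.normal(size=n_features)
b = rng.normal(size=n_features)

def features(t):
    """Return the feature matrix H(t) and its time derivative dH/dt."""
    h = np.tanh(np.outer(t, w) + b)
    dh = w * (1.0 - h**2)
    return h, dh

# Collocation points and the linear system for u' + u = f, u(0) = u0,
# with the ansatz u(t) = H(t) @ W.
t = np.linspace(0.0, 2.0, 200)
H, dH = features(t)
H0, _ = features(np.array([0.0]))
ic_weight = 10.0  # assumed weight on the initial-condition row
A = np.vstack([dH + H, ic_weight * H0])

# Computed once; reused for every new problem instance.
A_pinv = np.linalg.pinv(A, 1e-10)

def solve(f_values, u0):
    """Closed-form least-squares head weights for a new forcing and initial condition."""
    rhs = np.concatenate([f_values, [ic_weight * u0]])
    W = A_pinv @ rhs  # matrix-vector product only
    return H @ W

# Instance 1: f = 0, u0 = 1, exact solution exp(-t).
u_decay = solve(np.zeros_like(t), 1.0)
err_decay = np.max(np.abs(u_decay - np.exp(-t)))

# Instance 2: f = cos(t), u0 = 0, exact solution (cos t + sin t - exp(-t)) / 2.
u_forced = solve(np.cos(t), 0.0)
err_forced = np.max(np.abs(u_forced - 0.5 * (np.cos(t) + np.sin(t) - np.exp(-t))))
```

Both instances reuse the same `A_pinv`, which is the point of the sketch: changing the forcing or the initial condition changes only the right-hand side, not the operator.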

One-Shot Transfer Learning for Nonlinear PDEs with Perturbative PINNs

Nov 14, 2025

Abstract: We propose a framework for solving nonlinear partial differential equations (PDEs) by combining perturbation theory with one-shot transfer learning in Physics-Informed Neural Networks (PINNs). Nonlinear PDEs with polynomial terms are decomposed into a sequence of linear subproblems, which are efficiently solved using a Multi-Head PINN. Once the latent representation of the linear operator is learned, solutions to new PDE instances with varying perturbations, forcing terms, or boundary/initial conditions can be obtained in closed form without retraining. We validate the method on KPP-Fisher and wave equations, achieving errors on the order of 1e-3 while adapting to new problem instances in under 0.2 seconds, with accuracy comparable to classical solvers but faster transfer. Sensitivity analyses show predictable error growth with epsilon and polynomial degree, clarifying the method's effective regime. Our contributions are: (i) extending one-shot transfer learning from nonlinear ODEs to PDEs, (ii) deriving a closed-form solution for adapting to new PDE instances, and (iii) demonstrating accuracy and efficiency on canonical nonlinear PDEs. We conclude by outlining extensions to derivative-dependent nonlinearities and higher-dimensional PDEs.
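As a worked illustration of how a polynomial nonlinearity splits into linear subproblems, consider a generic equation with a quadratic term. The operator $\mathcal{L}$, the forcing $f$, and the quadratic form are assumptions chosen for illustration, not the specific equations of the paper. Substituting the series and collecting powers of $\epsilon$ gives one linear problem per order, each forced by lower-order solutions:

```latex
\begin{align*}
  \mathcal{L}u + \epsilon\, u^{2} &= f,
  \qquad u = \sum_{k \ge 0} \epsilon^{k} u_{k}, \\
  \mathcal{O}(\epsilon^{0}):\quad \mathcal{L}u_{0} &= f, \\
  \mathcal{O}(\epsilon^{1}):\quad \mathcal{L}u_{1} &= -u_{0}^{2}, \\
  \mathcal{O}(\epsilon^{2}):\quad \mathcal{L}u_{2} &= -2\,u_{0}u_{1}, \\
  \mathcal{O}(\epsilon^{3}):\quad \mathcal{L}u_{3} &= -\left(u_{1}^{2} + 2\,u_{0}u_{2}\right).
\end{align*}
```

In the standard setup, $u_{0}$ carries the original boundary and initial conditions and the higher-order terms satisfy homogeneous ones. Every order involves the same linear operator $\mathcal{L}$, so a representation learned once for $\mathcal{L}$ can be reused at each order. The truncation error grows with $\epsilon$, consistent with the sensitivity analysis the abstract describes.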

The Denario project: Deep knowledge AI agents for scientific discovery

Oct 30, 2025

Abstract: We present Denario, an AI multi-agent system designed to serve as a scientific research assistant. Denario can perform many different tasks, such as generating ideas, checking the literature, developing research plans, writing and executing code, making plots, and drafting and reviewing a scientific paper. The system has a modular architecture, allowing it to handle specific tasks, such as generating an idea, or carrying out end-to-end scientific analysis using Cmbagent as a deep-research backend. In this work, we describe Denario and its modules in detail, and illustrate its capabilities by presenting multiple papers generated by it in many different scientific disciplines such as astrophysics, biology, biophysics, biomedical informatics, chemistry, material science, mathematical physics, medicine, neuroscience and planetary science. Denario also excels at combining ideas from different disciplines, and we illustrate this by showing a paper that applies methods from quantum physics and machine learning to astrophysical data. We report the evaluations performed on these papers by domain experts, who provided both numerical scores and review-like feedback. We then highlight the strengths, weaknesses, and limitations of the current system. Finally, we discuss the ethical implications of AI-driven research and reflect on how such technology relates to the philosophy of science. We publicly release the code at https://github.com/AstroPilot-AI/Denario. A Denario demo can also be run directly on the web at https://huggingface.co/spaces/astropilot-ai/Denario, and the full app will be deployed on the cloud.

Image-Based Multi-Survey Classification of Light Curves with a Pre-Trained Vision Transformer

Jul 15, 2025

Abstract: We explore the use of Swin Transformer V2, a pre-trained vision Transformer, for photometric classification in a multi-survey setting by leveraging light curves from the Zwicky Transient Facility (ZTF) and the Asteroid Terrestrial-impact Last Alert System (ATLAS). We evaluate different strategies for integrating data from these surveys and find that a multi-survey architecture which processes them jointly achieves the best performance. These results highlight the importance of modeling survey-specific characteristics and cross-survey interactions, and provide guidance for building scalable classifiers for future time-domain astronomy.

Improved Uncertainty Quantification in Physics-Informed Neural Networks Using Error Bounds and Solution Bundles

May 09, 2025

Abstract: Physics-Informed Neural Networks (PINNs) have been widely used to obtain solutions to various physical phenomena modeled as Differential Equations. As PINNs are not naturally equipped with mechanisms for Uncertainty Quantification, some work has been done to quantify the different uncertainties that arise when dealing with PINNs. In this paper, we use a two-step procedure to train Bayesian Neural Networks that provide uncertainties over the solutions to differential equation systems provided by PINNs. We use available error bounds over PINNs to formulate a heteroscedastic variance that improves the uncertainty estimation. Furthermore, we solve forward problems and utilize the obtained uncertainties when doing parameter estimation in inverse problems in cosmology.

Astromer 2

Feb 04, 2025

Abstract: Foundational models have emerged as a powerful paradigm in the deep learning field, leveraging their capacity to learn robust representations from large-scale datasets and apply them effectively to diverse downstream applications such as classification. In this paper, we present Astromer 2, a foundational model specifically designed for extracting light curve embeddings. We introduce Astromer 2 as an enhanced iteration of our self-supervised model for light curve analysis. This paper highlights the advantages of its pre-trained embeddings, compares its performance with that of its predecessor, Astromer 1, and provides a detailed empirical analysis of its capabilities, offering deeper insights into the model's representations. Astromer 2 is pre-trained on 1.5 million single-band light curves from the MACHO survey using a self-supervised learning task that predicts randomly masked observations within sequences. Fine-tuning on a smaller labeled dataset allows us to assess its performance in classification tasks. The quality of the embeddings is measured by the F1 score of an MLP classifier trained on Astromer-generated embeddings. Our results demonstrate that Astromer 2 significantly outperforms Astromer 1 across all evaluated scenarios, including limited datasets of 20, 100, and 500 samples per class. The use of weighted per-sample embeddings, which integrate intermediate representations from Astromer's attention blocks, is particularly impactful. Notably, Astromer 2 achieves a 15% improvement in F1 score on the ATLAS dataset compared to prior models, showcasing robust generalization to new datasets. This enhanced performance, especially with minimal labeled data, underscores the potential of Astromer 2 for more efficient and scalable light curve analysis.

Stiff Transfer Learning for Physics-Informed Neural Networks

Jan 28, 2025

Abstract: Stiff differential equations are prevalent in various scientific domains, posing significant challenges due to the disparate time scales of their components. As computational power grows, physics-informed neural networks (PINNs) have led to significant improvements in modeling physical processes described by differential equations. Despite their promising outcomes, vanilla PINNs face limitations when dealing with stiff systems, known as failure modes. In response, we propose a novel approach, stiff transfer learning for physics-informed neural networks (STL-PINNs), to effectively tackle stiff ordinary differential equations (ODEs) and partial differential equations (PDEs). Our methodology involves training a Multi-Head-PINN in a low-stiffness regime, and obtaining the final solution in a high-stiffness regime by transfer learning. This addresses the failure modes related to stiffness in PINNs while maintaining computational efficiency by computing "one-shot" solutions. The proposed approach demonstrates superior accuracy and speed compared to PINN-based methods, as well as comparable computational efficiency with implicit numerical methods in solving stiff-parameterized linear and polynomial nonlinear ODEs and PDEs under stiff conditions. Furthermore, we demonstrate the scalability of such an approach and the superior speed it offers for simulations involving initial conditions and forcing function reparametrization.

Efficient PINNs: Multi-Head Unimodular Regularization of the Solutions Space

Jan 21, 2025

Abstract: We present a machine learning framework to facilitate the solution of nonlinear multiscale differential equations and, especially, inverse problems using Physics-Informed Neural Networks (PINNs). This framework is based on what is called multihead (MH) training, which involves training the network to learn a general space of all solutions for a given set of equations with certain variability, rather than learning a specific solution of the system. This setup is used with a second novel technique that we call Unimodular Regularization (UR) of the latent space of solutions. We show that the multihead approach, combined with the regularization, significantly improves the efficiency of PINNs by facilitating the transfer learning process, thereby enabling the finding of solutions for nonlinear, coupled, and multiscale differential equations.

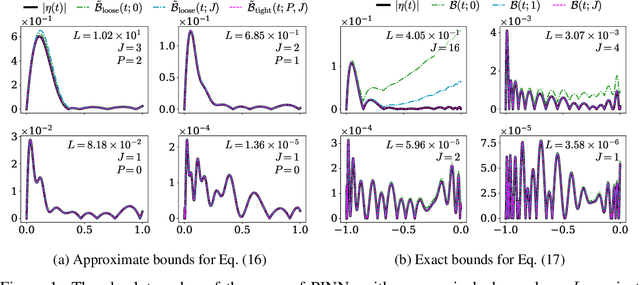

Exact and approximate error bounds for physics-informed neural networks

Nov 21, 2024

Abstract: The use of neural networks to solve differential equations, as an alternative to traditional numerical solvers, has increased recently. However, error bounds for the obtained solutions have only been developed for certain equations. In this work, we report important progress in calculating error bounds of physics-informed neural network (PINN) solutions of nonlinear first-order ODEs. We give a general expression that describes the error of the solution that the PINN-based method provides for a nonlinear first-order ODE. In addition, we propose a technique to calculate an approximate bound for the general case and an exact bound for a particular case. The error bounds are computed using only the residual information and the equation structure. We apply the proposed methods to particular cases and show that they can successfully provide error bounds without relying on the numerical solution.

Gravitational Duals from Equations of State

Mar 21, 2024

Abstract: Holography relates gravitational theories in five dimensions to four-dimensional quantum field theories in flat space. Under this map, the equation of state of the field theory is encoded in the black hole solutions of the gravitational theory. Solving the five-dimensional Einstein equations to determine the equation of state is an algorithmic, direct problem. Determining the gravitational theory that gives rise to a prescribed equation of state is a much more challenging, inverse problem. We present a novel approach to solve this problem based on physics-informed neural networks. The resulting algorithm is not only data-driven but also informed by the physics of the Einstein equations. We successfully apply it to theories with crossovers, first- and second-order phase transitions.
