Paola Ruiz Puentes

Eye Tracking for Tele-robotic Surgery: A Comparative Evaluation of Head-worn Solutions

Oct 18, 2023

Abstract: Purpose: Metrics derived from eye-gaze-tracking and pupillometry show promise for cognitive load assessment, potentially enhancing training and patient safety through user-specific feedback in tele-robotic surgery. However, the effectiveness of current eye-tracking solutions in tele-robotic surgery is uncertain compared to everyday situations, because close-range interactions cause extreme pupil angles and occlusions. To assess the effectiveness of modern eye-gaze-tracking solutions in tele-robotic surgery, we compare the Tobii Pro 3 Glasses and Pupil Labs Core, evaluating their pupil diameter and gaze stability when integrated with the da Vinci Research Kit (dVRK). Methods: The study protocol includes a nine-point gaze calibration followed by a pick-and-place task using the dVRK; this sequence is repeated three times. After a final calibration, users view a 3x3 grid of AprilTags, focusing on each marker for 10 seconds, to evaluate gaze stability across dVRK-screen positions with the L2-norm. Different gaze calibrations assess the calibration's temporal deterioration due to head movements. Pupil diameter stability is evaluated using the FFT of the pupil diameter during the pick-and-place tasks. Users perform this routine with both head-worn eye-tracking systems. Results: Data collected from ten users indicate comparable pupil diameter stability. FFTs of pupil diameters show similar amplitudes in high-frequency components. Tobii Glasses show more temporal gaze stability compared to Pupil Labs, though both eye trackers yield a similar 4 cm error in gaze estimation when the calibration is not outdated. Conclusion: Both eye trackers demonstrate similar stability of the pupil diameter and gaze when the calibration is not outdated, indicating comparable eye-tracking and pupillometry performance in tele-robotic surgery settings.
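The two stability measures named in the abstract above can be illustrated with a minimal sketch. This is not the authors' pipeline: the function names, the sample values, the centimeter and millimeter units, and the use of a naive DFT in place of an optimized FFT are all assumptions made for illustration.

```python
import cmath
import math


def mean_l2_error(gaze_points, target):
    """Mean Euclidean (L2) distance between gaze samples and a target point."""
    tx, ty = target
    return sum(math.hypot(x - tx, y - ty) for x, y in gaze_points) / len(gaze_points)


def dft_amplitudes(signal):
    """Amplitude spectrum of a real signal via a naive O(n^2) DFT.

    The mean is removed first, and only non-negative frequency bins are returned.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]
    return [
        abs(sum(c * cmath.exp(-2j * math.pi * k * t / n) for t, c in enumerate(centered))) / n
        for k in range(n // 2 + 1)
    ]


# Hypothetical gaze samples (cm on the screen) while fixating a marker at (10, 10).
gaze = [(10.0, 13.0), (14.0, 10.0), (10.0, 7.0), (6.0, 10.0)]
error_cm = mean_l2_error(gaze, (10.0, 10.0))

# Hypothetical pupil diameter trace (mm): 4 mm baseline plus a slow oscillation.
pupil = [4.0 + 0.5 * math.sin(2 * math.pi * 2 * t / 32) for t in range(32)]
amps = dft_amplitudes(pupil)
```

In this sketch, a lower `error_cm` means more stable gaze on a marker, and small values in the upper bins of `amps` mean little high-frequency fluctuation in the pupil diameter signal.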

Towards Holistic Surgical Scene Understanding

Dec 13, 2022

Abstract: Most benchmarks for studying surgical interventions focus on a specific challenge instead of leveraging the intrinsic complementarity among different tasks. In this work, we present a new experimental framework towards holistic surgical scene understanding. First, we introduce the Phase, Step, Instrument, and Atomic Visual Action recognition (PSI-AVA) Dataset. PSI-AVA includes annotations for both long-term (Phase and Step recognition) and short-term reasoning (Instrument detection and novel Atomic Action recognition) in robot-assisted radical prostatectomy videos. Second, we present Transformers for Action, Phase, Instrument, and steps Recognition (TAPIR) as a strong baseline for surgical scene understanding. TAPIR leverages our dataset's multi-level annotations as it benefits from the learned representation on the instrument detection task to improve its classification capacity. Our experimental results in both PSI-AVA and other publicly available databases demonstrate the adequacy of our framework to spur future research on holistic surgical scene understanding.

Ego4D: Around the World in 3,000 Hours of Egocentric Video

Oct 13, 2021

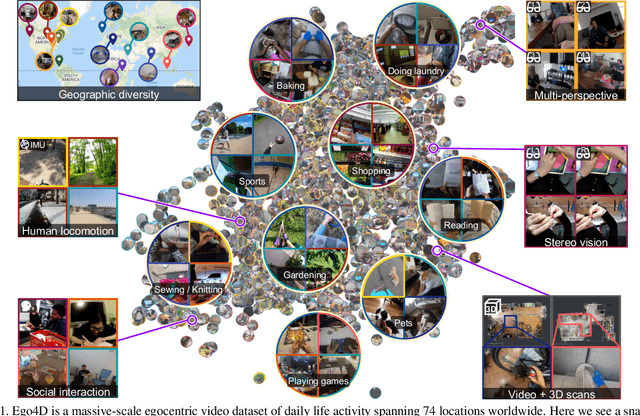

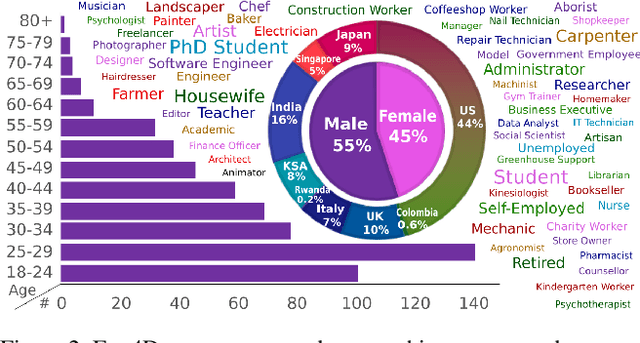

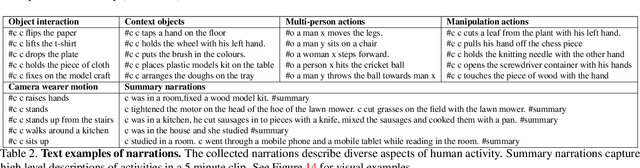

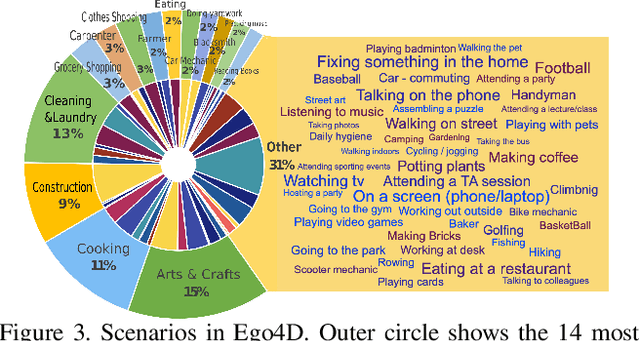

Abstract: We introduce Ego4D, a massive-scale egocentric video dataset and benchmark suite. It offers 3,025 hours of daily-life activity video spanning hundreds of scenarios (household, outdoor, workplace, leisure, etc.) captured by 855 unique camera wearers from 74 worldwide locations and 9 different countries. The approach to collection is designed to uphold rigorous privacy and ethics standards with consenting participants and robust de-identification procedures where relevant. Ego4D dramatically expands the volume of diverse egocentric video footage publicly available to the research community. Portions of the video are accompanied by audio, 3D meshes of the environment, eye gaze, stereo, and/or synchronized videos from multiple egocentric cameras at the same event. Furthermore, we present a host of new benchmark challenges centered around understanding the first-person visual experience in the past (querying an episodic memory), present (analyzing hand-object manipulation, audio-visual conversation, and social interactions), and future (forecasting activities). By publicly sharing this massive annotated dataset and benchmark suite, we aim to push the frontier of first-person perception. Project page: https://ego4d-data.org/
