Oliver Scheel

Urban Driver: Learning to Drive from Real-world Demonstrations Using Policy Gradients

Sep 27, 2021

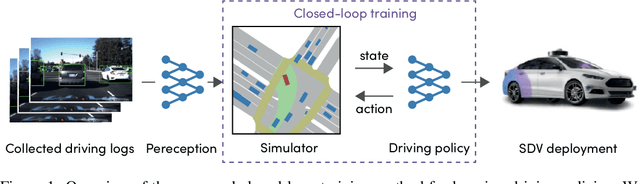

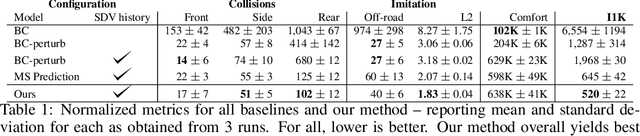

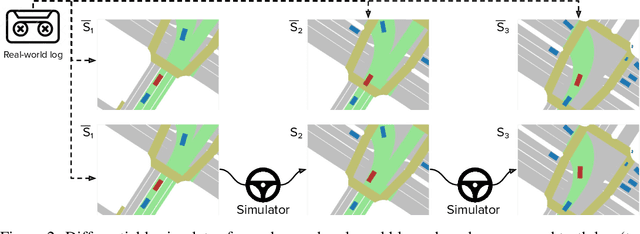

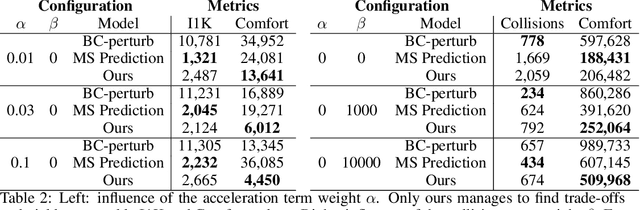

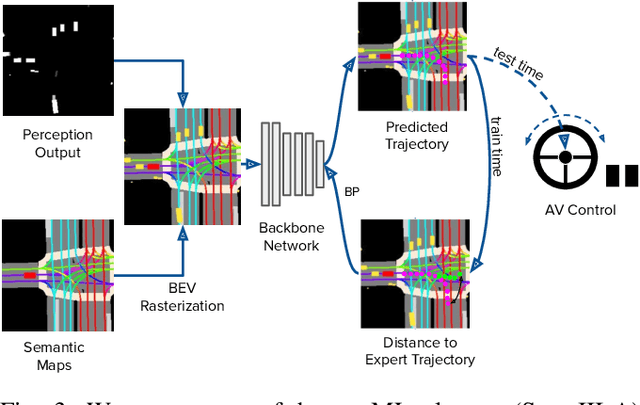

Abstract: In this work we are the first to present an offline policy gradient method for learning imitative policies for complex urban driving from a large corpus of real-world demonstrations. This is achieved by building a differentiable data-driven simulator on top of perception outputs and high-fidelity HD maps of the area. It allows us to synthesize new driving experiences from existing demonstrations using mid-level representations. Using this simulator, we then train a policy network in closed loop employing policy gradients. We train our proposed method on 100 hours of expert demonstrations on urban roads and show that it learns complex driving policies that generalize well and can perform a variety of driving maneuvers. We demonstrate this in simulation and also deploy our model to self-driving vehicles in the real world. Our method outperforms the previously demonstrated state of the art for urban driving scenarios, all without the need for complex state perturbations or collecting additional on-policy data during training. We make code and data publicly available.

What data do we need for training an AV motion planner?

May 26, 2021

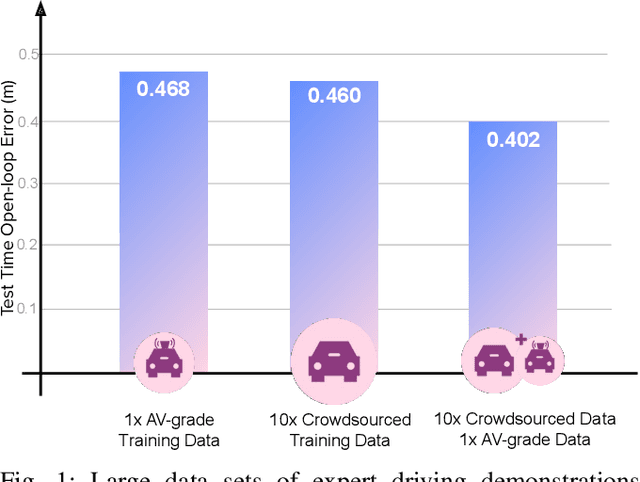

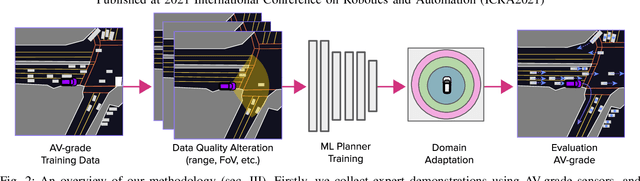

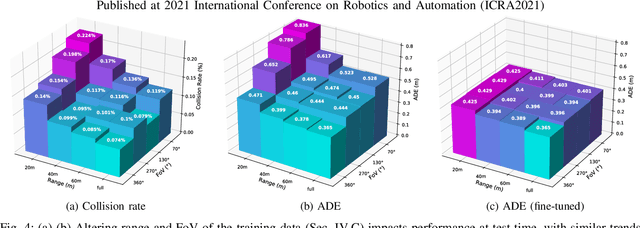

Abstract: We investigate what grade of sensor data is required for training an imitation-learning-based AV planner on human expert demonstration. Machine-learned planners are very hungry for training data, which is usually collected using vehicles equipped with the same sensors used for autonomous operation. This is costly and non-scalable. If cheaper sensors could be used for collection instead, data availability would go up, which is crucial in a field where data volume requirements are large and availability is small. We present experiments using up to 1000 hours' worth of expert demonstration and find that training with 10x lower-quality data outperforms 1x AV-grade data in terms of planner performance. The important implication of this is that cheaper sensors can indeed be used. This serves to improve data access and democratize the field of imitation-based motion planning. Alongside this, we perform a sensitivity analysis of planner performance as a function of perception range, field-of-view, accuracy, and data volume, and examine why lower-quality data still provide good planning results.

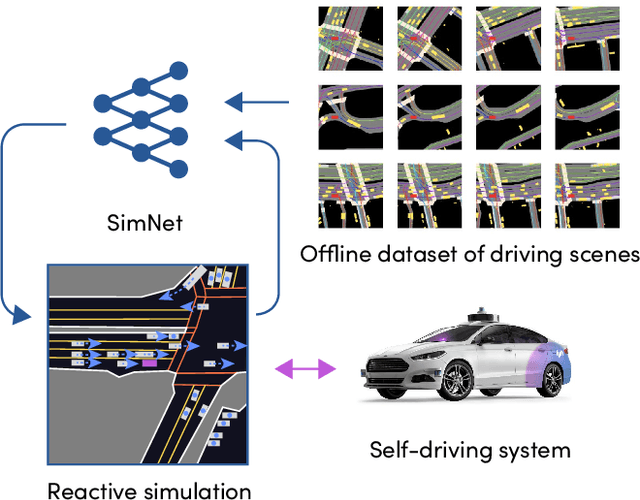

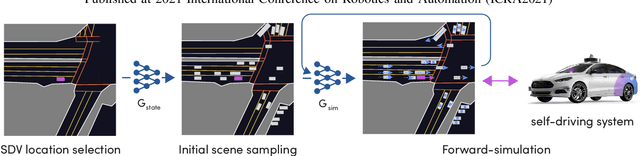

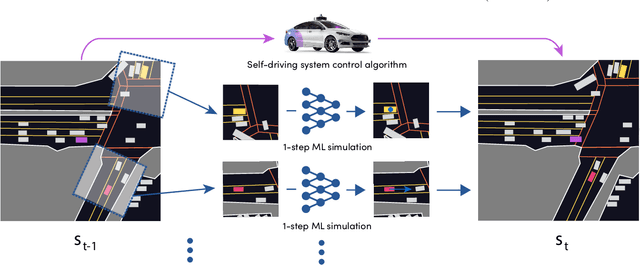

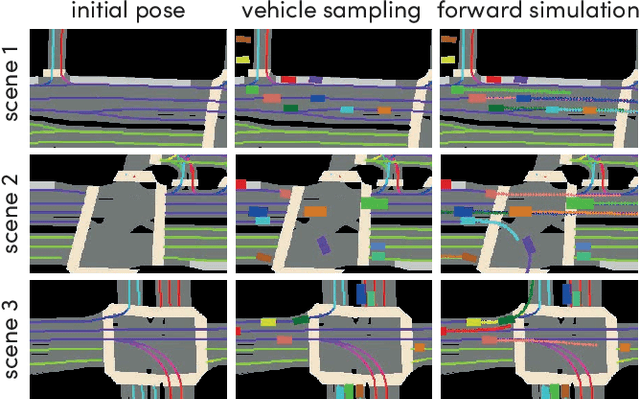

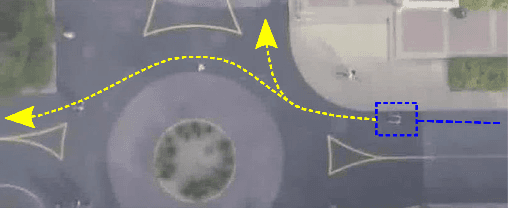

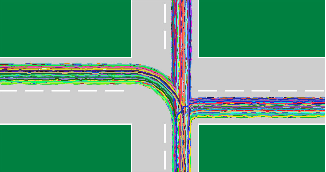

SimNet: Learning Reactive Self-driving Simulations from Real-world Observations

May 26, 2021

Abstract: In this work, we present a simple end-to-end trainable machine learning system capable of realistically simulating driving experiences. This can be used for the verification of self-driving system performance without relying on expensive and time-consuming road testing. In particular, we frame the simulation problem as a Markov Process, leveraging deep neural networks to model both state distribution and transition function. These are trainable directly from the existing raw observations without the need for any handcrafting in the form of plant or kinematic models. All that is needed is a dataset of historical traffic episodes. Our formulation allows the system to construct never-before-seen scenes that unfold realistically, reacting to the self-driving car's behaviour. We train our system directly from 1,000 hours of driving logs and measure both realism and reactivity, the two key properties of the simulation. At the same time, we apply the method to evaluate the performance of a recently proposed state-of-the-art ML planning system trained from human driving logs. We discover this planning system is prone to previously unreported causal confusion issues that are difficult to test by non-reactive simulation. To the best of our knowledge, this is the first work that directly merges highly realistic data-driven simulations with a closed-loop evaluation for self-driving vehicles. We make the data, code, and pre-trained models publicly available to further stimulate simulation development.

Explicit Domain Adaptation with Loosely Coupled Samples

Apr 24, 2020

Abstract: Transfer learning is an important field of machine learning in general, and particularly in the context of fully autonomous driving, which needs to be solved simultaneously for many different domains, such as changing weather conditions and country-specific driving behaviors. Traditional transfer learning methods often focus on image data and are black-box models. In this work we propose a transfer learning framework, the core of which is learning an explicit mapping between domains. Due to its interpretability, this is beneficial for safety-critical applications, like autonomous driving. We show its general applicability by considering image classification problems and then move on to time-series data, particularly predicting lane changes. In our evaluation we adapt a pre-trained model to a dataset exhibiting different driving and sensory characteristics.

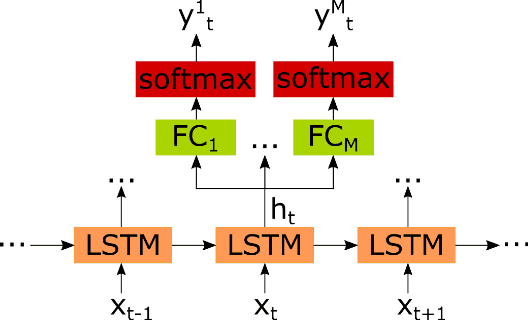

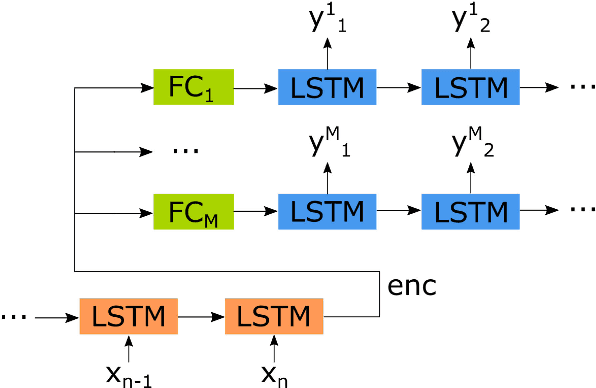

Ambiguity in Sequential Data: Predicting Uncertain Futures with Recurrent Models

Mar 10, 2020

Abstract: Ambiguity is inherently present in many machine learning tasks, but it is seldom accounted for, especially in sequential models, as most only output a single prediction. In this work we propose an extension of the Multiple Hypothesis Prediction (MHP) model to handle ambiguous predictions with sequential data, which is of special importance, as often multiple futures are equally likely. Our approach can be applied to the most common recurrent architectures and can be used with any loss function. Additionally, we introduce a novel metric for ambiguous problems, which is better suited to account for uncertainties and coincides with our intuitive understanding of correctness in the presence of multiple labels. We test our method on several experiments and across diverse tasks dealing with time series data, such as trajectory forecasting and maneuver prediction, achieving promising results.
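
To make the multiple-hypothesis idea concrete, here is a minimal sketch of a relaxed winner-takes-all loss of the kind commonly used for MHP-style training: each hypothesis is scored with a base loss, the best one receives most of the weight, and the rest share a small remainder. It is an illustration under stated assumptions, not the paper's sequential extension. The names `relaxed_wta_loss` and `mse`, the `epsilon` default, and the plain-list representation of sequences are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def relaxed_wta_loss(
    hypotheses: List[Sequence[float]],
    target: Sequence[float],
    base_loss: Callable[[Sequence[float], Sequence[float]], float] = mse,
    epsilon: float = 0.05,  # assumed value, chosen only for illustration
) -> Tuple[float, int]:
    """Return (weighted loss, index of the best hypothesis).

    The best hypothesis gets weight 1 - epsilon; the remaining
    hypotheses share epsilon equally.
    """
    m = len(hypotheses)
    if m < 2:
        raise ValueError("need at least two hypotheses")
    # Score every hypothesis with the chosen base loss.
    losses = [base_loss(h, target) for h in hypotheses]
    # The winner is the hypothesis closest to the target.
    best = min(range(m), key=losses.__getitem__)
    weights = [epsilon / (m - 1)] * m
    weights[best] = 1.0 - epsilon
    total = sum(w * l for w, l in zip(weights, losses))
    return total, best


# Two candidate futures for the same target sequence.
loss, best = relaxed_wta_loss([[0.0, 0.0], [1.0, 1.0]], [1.0, 1.0])
```

Because `base_loss` is a parameter, any per-hypothesis loss can be swapped in, which mirrors the abstract's point that the approach is not tied to one loss function.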

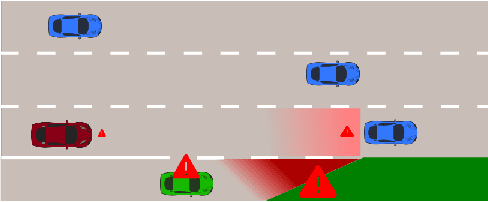

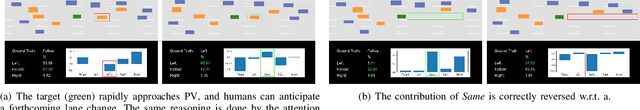

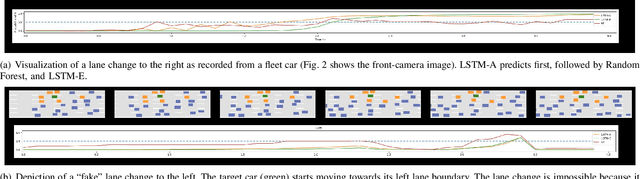

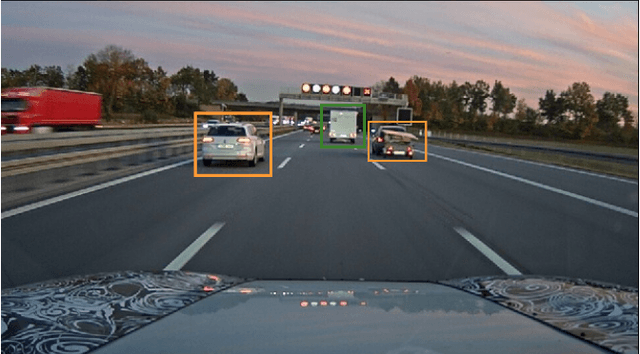

Attention-based Lane Change Prediction

Mar 07, 2019

Abstract: Lane change prediction of surrounding vehicles is a key building block of path planning. The focus has been on increasing the accuracy of prediction by posing it purely as a function estimation problem at the cost of model understandability. However, the efficacy of any lane change prediction model can be improved when both corner and failure cases are humanly understandable. We propose an attention-based recurrent model to tackle both understandability and prediction quality. We also propose metrics which reflect the discomfort felt by the driver. We show encouraging results on a publicly available dataset and proprietary fleet data.

Situation Assessment for Planning Lane Changes: Combining Recurrent Models and Prediction

May 17, 2018

Abstract: One of the greatest challenges towards fully autonomous cars is the understanding of complex and dynamic scenes. Such understanding is needed for planning of maneuvers, especially those that are particularly frequent such as lane changes. While in recent years advanced driver-assistance systems have made driving safer and more comfortable, these have mostly focused on car following scenarios, and less on maneuvers involving lane changes. In this work we propose a situation assessment algorithm for classifying driving situations with respect to their suitability for lane changing. For this, we propose a deep learning architecture based on a Bidirectional Recurrent Neural Network, which uses Long Short-Term Memory units, and integrates a prediction component in the form of the Intelligent Driver Model. We prove the feasibility of our algorithm on the publicly available NGSIM datasets, where we outperform existing methods.
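
The Intelligent Driver Model named in the abstract above is a standard car-following model that yields a vehicle's acceleration from its own speed, the leader's speed, and the gap between them. A minimal sketch of its acceleration equation follows. The function name and all default parameter values are illustrative assumptions, not the settings used in the paper, and the sketch does not show how the model is integrated into the recurrent network.

```python
import math


def idm_acceleration(
    v: float,              # own speed [m/s]
    v_lead: float,         # speed of the leading vehicle [m/s]
    gap: float,            # bumper-to-bumper distance to the leader [m]
    v0: float = 30.0,      # desired speed [m/s] (assumed)
    T: float = 1.5,        # desired time headway [s] (assumed)
    a_max: float = 1.0,    # maximum acceleration [m/s^2] (assumed)
    b: float = 2.0,        # comfortable deceleration [m/s^2] (assumed)
    s0: float = 2.0,       # minimum standstill gap [m] (assumed)
    delta: float = 4.0,    # acceleration exponent (assumed)
) -> float:
    """Acceleration of the following vehicle according to the IDM."""
    if gap <= 0.0:
        raise ValueError("gap must be positive")
    dv = v - v_lead  # approach rate; positive when closing in on the leader
    # Desired dynamic gap: standstill gap plus headway and braking terms.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term minus interaction term.
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


# Closing fast on a slower leader at short range: the model brakes.
braking = idm_acceleration(v=20.0, v_lead=10.0, gap=10.0)
```

On an empty road (a very large `gap`) the interaction term vanishes, so the vehicle accelerates towards `v0` and settles there.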
