Novel Certad

Evaluating Pedestrian Risks in Shared Spaces Through Autonomous Vehicle Experiments on a Fixed Track

Apr 11, 2025

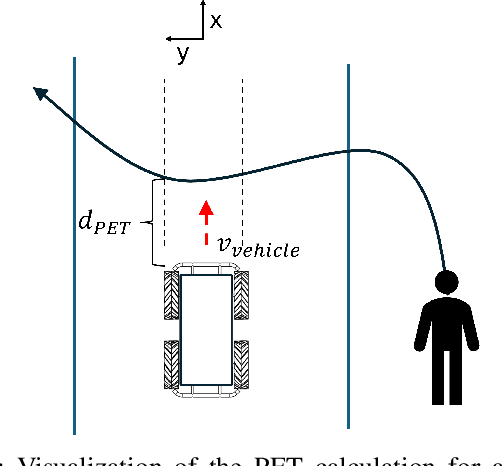

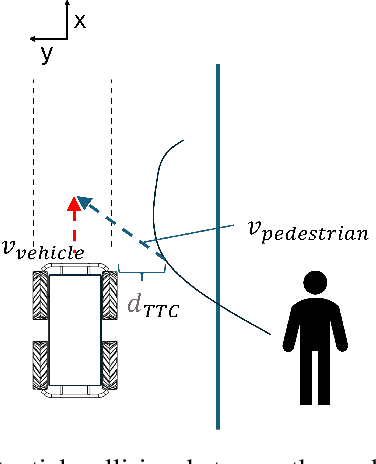

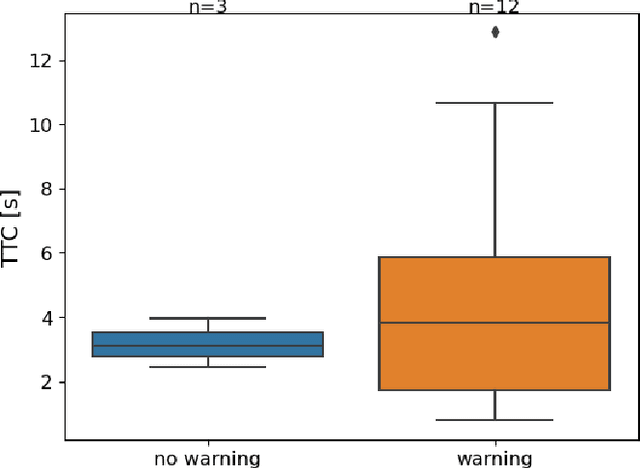

Abstract: The majority of research on safety in autonomous vehicles has been conducted in structured and controlled environments. However, there is a scarcity of research on safety in unregulated pedestrian areas, especially when interacting with public transport vehicles like trams. This study investigates pedestrian responses to an alert system in this context by replicating this real-world scenario in an environment using an autonomous vehicle. The results show that safety measures from other contexts can be adapted to shared spaces with trams, where fixed tracks heighten risks in unregulated crossings.

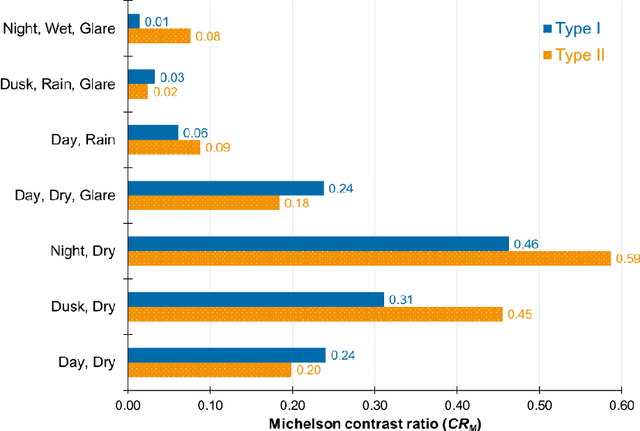

Inadequate contrast ratio of road markings as an indicator for ADAS failure

Oct 17, 2024

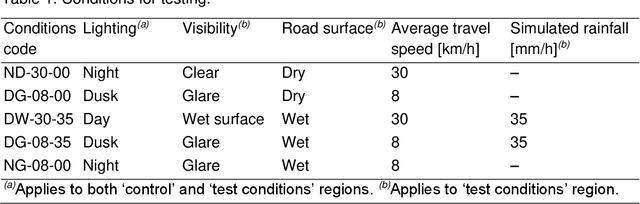

Abstract: Road markings were reported as critical road safety features, equally needed for both human drivers and for machine vision technologies utilised by advanced driver assistance systems (ADAS) and in driving automation. Visibility of road markings is achieved because their colour contrasts with the roadway surface. During recent testing of an open-source camera-based ADAS under several visibility conditions (day, night, rain, glare), significant failures in trajectory planning were recorded and quantified. Consistently, better ADAS reliability under poor visibility conditions was achieved with Type II road markings (i.e. structured markings, facilitating moisture drainage) as compared to Type I road markings (i.e. flat lines). To further understand these failures, an analysis was performed of the contrast ratio of the road markings that the tested ADAS was detecting for traffic lane recognition. The highest contrast ratio (greater than 0.5, calculated per the Michelson equation) was measured at night in the absence of confounding factors, with a statistically significant difference of 0.1 in favour of Type II road markings over Type I. Under daylight conditions, the contrast ratio was reduced, with slightly higher values measured with Type I. The presence of rain or wet roads caused the contrast ratio to deteriorate, with Type II road markings exhibiting a significantly higher contrast ratio than Type I, even though the values were low (less than 0.1). These findings matched the output of the ADAS related to traffic lane detection and underlined the importance of road marking visibility. Inadequate lane recognition by ADAS was indeed associated with a very low contrast ratio of road markings. Importantly, a specific minimum contrast ratio value could not be found, which was due to the complexity of ADAS algorithms...
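
For readers unfamiliar with the Michelson equation mentioned in the abstract, the following is a minimal sketch of the standard definition, (L_max - L_min) / (L_max + L_min). The function name and the example luminance values are illustrative assumptions; they are not taken from the paper or its measurement procedure.

```python
def michelson_contrast(marking_luminance: float, road_luminance: float) -> float:
    """Michelson contrast between two non-negative luminance values.

    Computes (L_max - L_min) / (L_max + L_min), which lies in [0, 1].
    The argument names are hypothetical, chosen for this illustration.
    """
    if marking_luminance < 0 or road_luminance < 0:
        raise ValueError("luminance values must be non-negative")
    l_max = max(marking_luminance, road_luminance)
    l_min = min(marking_luminance, road_luminance)
    if l_max + l_min == 0:
        # Both surfaces are completely dark: no measurable contrast.
        return 0.0
    return (l_max - l_min) / (l_max + l_min)


# Illustrative values only (arbitrary units, not measured data).
high = michelson_contrast(150.0, 50.0)   # bright marking on a dark road
low = michelson_contrast(110.0, 90.0)    # marking barely brighter than the road
```

With these made-up inputs, the first pair gives a contrast of 0.5 and the second gives 0.1, which are the orders of magnitude the abstract reports for dry night-time and wet conditions respectively.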

IAMCV Multi-Scenario Vehicle Interaction Dataset

Mar 13, 2024

Abstract: The acquisition and analysis of high-quality sensor data constitute an essential requirement in shaping the development of fully autonomous driving systems. This process is indispensable for enhancing road safety and ensuring the effectiveness of the technological advancements in the automotive industry. This study introduces the Interaction of Autonomous and Manually-Controlled Vehicles (IAMCV) dataset, a novel and extensive dataset focused on inter-vehicle interactions. The dataset, enriched with a sophisticated array of sensors such as Light Detection and Ranging, cameras, Inertial Measurement Unit/Global Positioning System, and vehicle bus data acquisition, provides a comprehensive representation of real-world driving scenarios that include roundabouts, intersections, country roads, and highways, recorded across diverse locations in Germany. Furthermore, the study shows the versatility of the IAMCV dataset through several proof-of-concept use cases. Firstly, an unsupervised trajectory clustering algorithm illustrates the dataset's capability in categorizing vehicle movements without the need for labeled training data. Secondly, we compare an online camera calibration method with the Robot Operating System-based standard, using images captured in the dataset. Finally, a preliminary test employing the YOLOv8 object-detection model is conducted, augmented by reflections on the transferability of object detection across various LIDAR resolutions. These use cases underscore the practical utility of the collected dataset, emphasizing its potential to advance research and innovation in the area of intelligent vehicles.

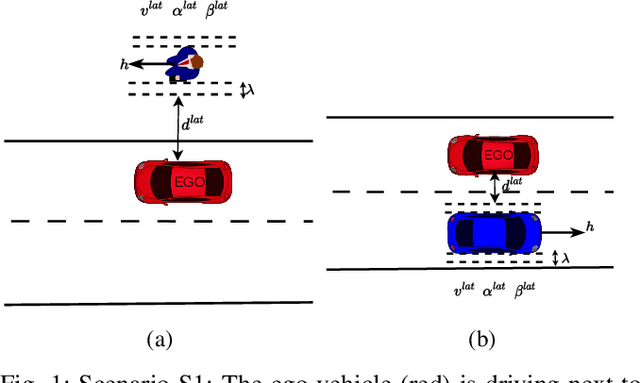

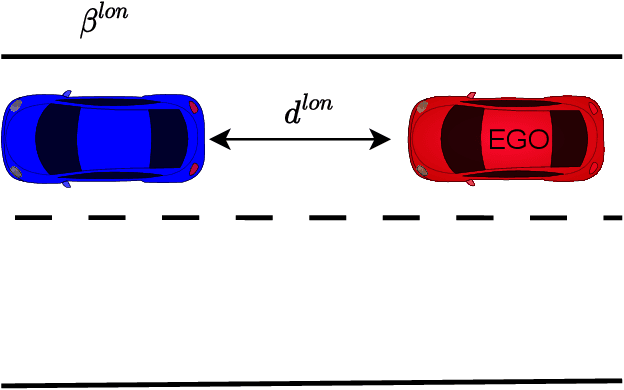

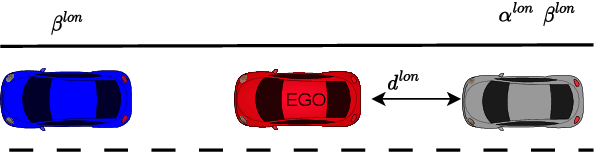

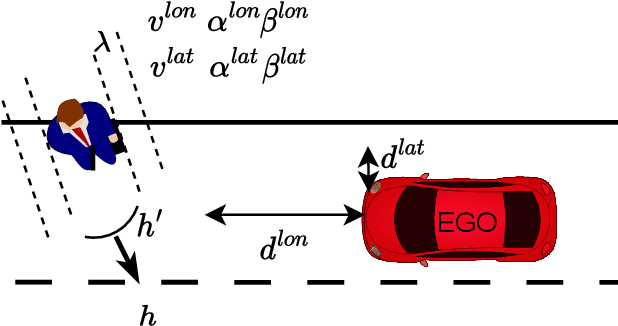

Extraction of Road Users' Behavior From Realistic Data According to Assumptions in Safety-Related Models for Automated Driving Systems

Jul 31, 2023

Abstract: In this work, we utilized the methodology outlined in the IEEE Standard 2846-2022 for "Assumptions in Safety-Related Models for Automated Driving Systems" to extract information on the behavior of other road users in driving scenarios. This method includes defining high-level scenarios, determining kinematic characteristics, evaluating safety relevance, and making assumptions on reasonably predictable behaviors. The assumptions were expressed as kinematic bounds. The numerical values for these bounds were extracted using Python scripts to process realistic data from the UniD dataset. The resulting information enables Automated Driving Systems designers to specify the parameters and limits of a road user's state in a specific scenario. This information can be utilized to establish starting conditions for testing a vehicle that is equipped with an Automated Driving System in simulations or on actual roads.
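
To make the idea of expressing assumptions as kinematic bounds more concrete, here is a minimal sketch of how such bounds might be computed from one road user's trajectory. It is not the authors' script: the `TrackSample` record, its field names, and the finite-difference acceleration estimate are all assumptions made for illustration, and they do not reflect the actual UniD file schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TrackSample:
    """One hypothetical trajectory sample of a road user."""
    time_s: float      # timestamp in seconds
    speed_mps: float   # longitudinal speed in metres per second


def kinematic_bounds(samples: List[TrackSample]) -> Dict[str, float]:
    """Return min/max speed and min/max acceleration for one track.

    Acceleration is estimated by finite differences between consecutive
    samples, which is a simplifying assumption of this sketch.
    """
    if len(samples) < 2:
        raise ValueError("at least two samples are needed")
    ordered = sorted(samples, key=lambda s: s.time_s)
    speeds = [s.speed_mps for s in ordered]
    accels = [
        (b.speed_mps - a.speed_mps) / (b.time_s - a.time_s)
        for a, b in zip(ordered, ordered[1:])
        if b.time_s > a.time_s  # skip duplicated timestamps
    ]
    if not accels:
        raise ValueError("samples must span more than one timestamp")
    return {
        "min_speed_mps": min(speeds),
        "max_speed_mps": max(speeds),
        "min_accel_mps2": min(accels),  # strongest braking (most negative)
        "max_accel_mps2": max(accels),  # strongest acceleration
    }


# Made-up example track, not data from any dataset.
track = [TrackSample(0.0, 10.0), TrackSample(1.0, 12.0), TrackSample(2.0, 11.0)]
bounds = kinematic_bounds(track)
```

In practice, bounds of this kind would be aggregated over many tracks per scenario, possibly with percentiles rather than raw extremes to reduce sensitivity to outliers; that choice is not specified in the abstract.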

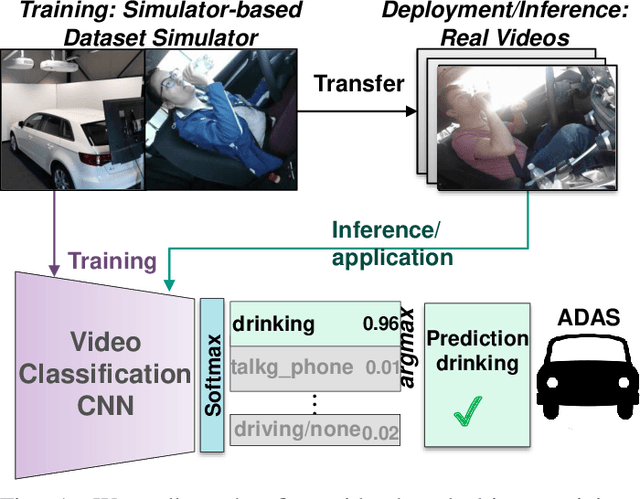

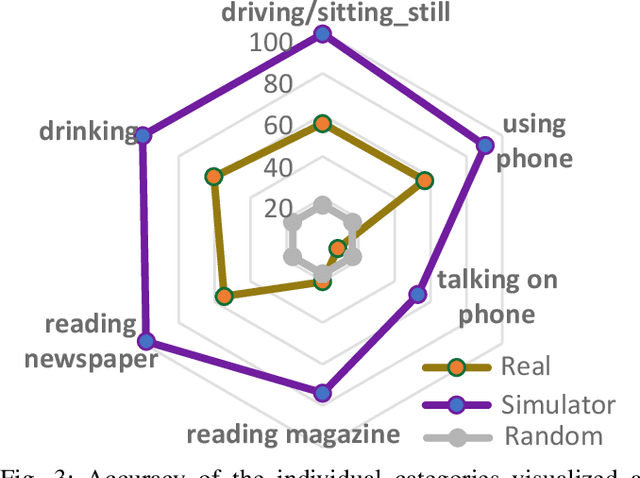

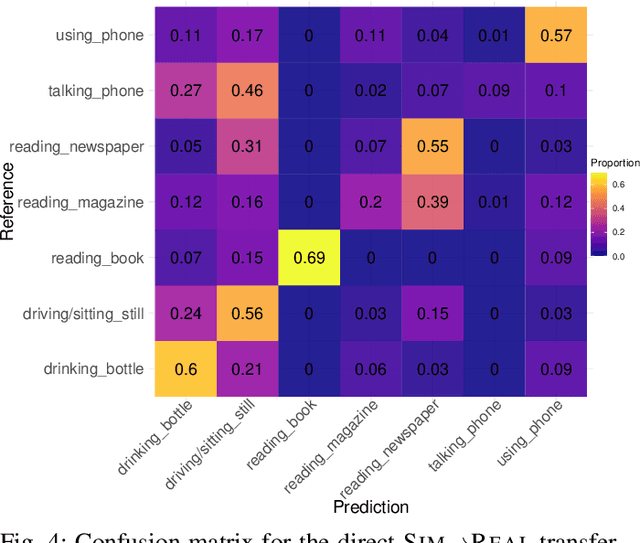

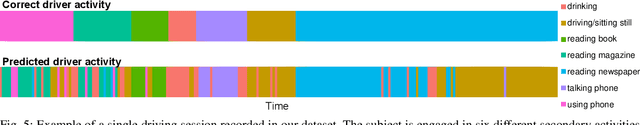

On Transferability of Driver Observation Models from Simulated to Real Environments in Autonomous Cars

Jul 31, 2023

Abstract: For driver observation frameworks, clean datasets collected in controlled simulated environments often serve as the initial training ground. Yet, when deployed under real driving conditions, such simulator-trained models quickly face the problem of distributional shifts brought about by changing illumination, car model, variations in subject appearances, sensor discrepancies, and other environmental alterations. This paper investigates the viability of transferring video-based driver observation models from simulation to real-world scenarios in autonomous vehicles, given the frequent use of simulation data in this domain due to safety issues. To achieve this, we record a dataset featuring actual autonomous driving conditions and involving seven participants engaged in highly distracting secondary activities. To enable direct SIM to REAL transfer, our dataset was designed in accordance with an existing large-scale simulator dataset used as the training source. We utilize the Inflated 3D ConvNet (I3D) model, a popular choice for driver observation, with Gradient-weighted Class Activation Mapping (Grad-CAM) for detailed analysis of model decision-making. Though the simulator-based model clearly surpasses the random baseline, its recognition quality diminishes, with average accuracy dropping from 85.7% to 46.6%. We also observe strong variations across different behavior classes. This underscores the challenges of model transferability, facilitating our research of more robust driver observation systems capable of dealing with real driving conditions.

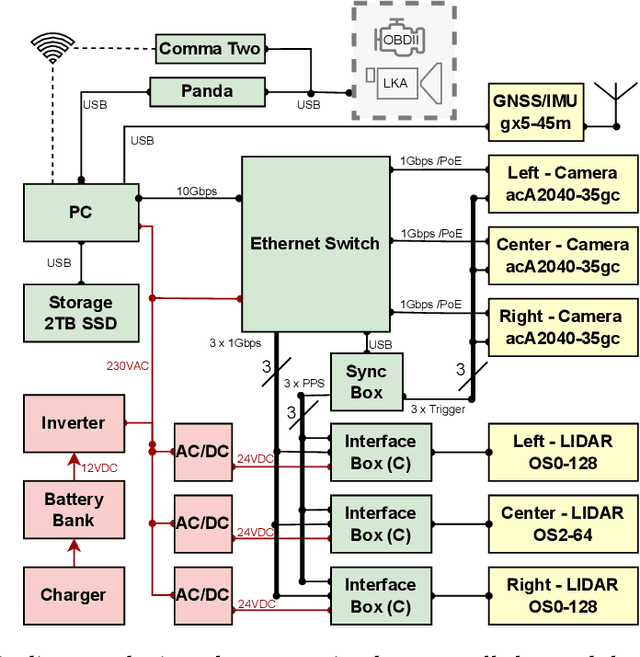

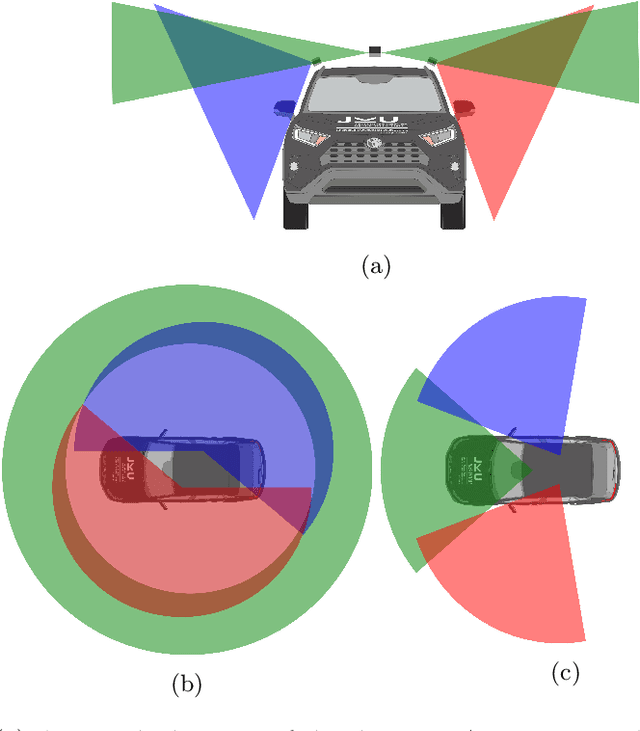

JKU-ITS Automobile for Research on Autonomous Vehicles

Jan 16, 2023

Abstract: In this paper, we present our brand-new platform for Automated Driving research. The chosen vehicle is a RAV4 hybrid SUV from TOYOTA equipped with exteroceptive sensors such as a multilayer LIDAR, a monocular camera, Radar and GPS; and proprioceptive sensors such as encoders and a 9-DOF IMU. These sensors are integrated in the vehicle via a main computer running ROS1 under Linux 20.04. Additionally, we installed an open-source ADAS called Comma Two, which runs Openpilot to control the vehicle. The platform is currently being used for research on autonomous vehicles, interaction between humans and autonomous vehicles, human factors, and energy consumption.

Road Markings Segmentation from LIDAR Point Clouds using Reflectivity Information

Nov 02, 2022

Abstract: Lane detection algorithms are crucial for the development of autonomous vehicle technologies. The most widespread approach is to use cameras as sensors. However, LIDAR sensors can cope with weather and light conditions that cameras cannot. In this paper, we introduce a method to extract road markings from the reflectivity data of a 64-layer LIDAR sensor. First, a plane segmentation method along with region-growing clustering was used to extract the road plane. Then we applied adaptive thresholding based on Otsu's method and, finally, we fitted line models to filter out the remaining outliers. The algorithm was tested on a test track at 60 km/h and on a highway at 100 km/h. Results showed the algorithm was reliable and precise. There was a clear improvement when using reflectivity data compared with the raw intensity data, both of which are provided by the LIDAR sensor.
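
The thresholding step can be illustrated with a minimal sketch of plain (global) Otsu thresholding, which picks the threshold that maximizes the between-class variance of a histogram. This is a simplification of the adaptive variant the abstract describes, and it assumes reflectivity values are integers in the range 0 to 255; the function name and the sample values are hypothetical.

```python
from typing import List


def otsu_threshold(values: List[int], levels: int = 256) -> int:
    """Return the threshold t maximizing between-class variance.

    Values <= t form the background class (road surface) and values > t
    form the foreground class (candidate road markings).
    Assumes integer values in [0, levels - 1].
    """
    if not values:
        raise ValueError("values must not be empty")
    hist = [0] * levels
    for v in values:
        hist[v] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of points in the background class
    sum0 = 0    # sum of values in the background class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue            # background class still empty
        w1 = total - w0
        if w1 == 0:
            break               # foreground class empty from here on
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (m0 - m1) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


# Made-up reflectivity values: many dull asphalt points, few bright markings.
reflectivity = [10] * 50 + [12] * 50 + [200] * 10 + [210] * 10
t = otsu_threshold(reflectivity)
marking_points = [v for v in reflectivity if v > t]
```

On this synthetic input the threshold separates the 100 low-reflectivity points from the 20 high-reflectivity ones. An adaptive scheme would typically recompute such a threshold per region or per LIDAR layer, a detail the abstract does not spell out.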
