Cristina Olaverri-Monreal

Senior Member, IEEE

ClearLines - Camera Calibration from Straight Lines

May 01, 2025

Abstract: The problem of calibration from straight lines is fundamental in geometric computer vision, with well-established theoretical foundations. However, its practical applicability remains limited, particularly in real-world outdoor scenarios. These environments pose significant challenges due to diverse and cluttered scenes, interrupted reprojections of straight 3D lines, and varying lighting conditions, making the task notoriously difficult. Furthermore, the field lacks a dedicated dataset that would encourage the development of corresponding detection algorithms. In this study, we present a small dataset named "ClearLines" and, by detailing its creation process, provide practical insights that can serve as a guide for developing and refining straight 3D line detection algorithms.
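The principle behind calibration from straight lines (the plumb-line idea) can be sketched as follows: straight 3D lines must project to straight image lines once lens distortion is removed, so the distortion parameter that best straightens the detected line points is taken as the estimate. This is a minimal sketch, not the ClearLines method. It assumes a one-parameter division distortion model centred at the origin of normalised image coordinates, and the function names (`undistort`, `distort`, `line_residual`, `estimate_k`) and the grid search over candidate values are hypothetical choices for illustration.

```python
import numpy as np


def undistort(points: np.ndarray, k: float) -> np.ndarray:
    """Assumed division model: x_u = x_d / (1 + k * r_d^2), centre at the origin."""
    r2 = np.sum(points**2, axis=1, keepdims=True)
    return points / (1.0 + k * r2)


def distort(points: np.ndarray, k: float) -> np.ndarray:
    """Inverse of `undistort` for k != 0, used here only to build synthetic data.

    Solves k * r_u * r_d^2 - r_d + r_u = 0 for the root r_d that tends to r_u
    as k -> 0. Points must not lie exactly at the origin.
    """
    r_u = np.linalg.norm(points, axis=1, keepdims=True)
    r_d = (1.0 - np.sqrt(1.0 - 4.0 * k * r_u**2)) / (2.0 * k * r_u)
    return points * (r_d / r_u)


def line_residual(points: np.ndarray) -> float:
    """RMS orthogonal distance of points to their total-least-squares line."""
    centred = points - points.mean(axis=0)
    # The smallest singular value captures the spread orthogonal to the best-fit line.
    smallest = np.linalg.svd(centred, compute_uv=False)[-1]
    return float(smallest / np.sqrt(len(points)))


def estimate_k(lines: list[np.ndarray], candidates: np.ndarray) -> float:
    """Pick the candidate k that makes all detected lines straightest (grid search)."""
    costs = [sum(line_residual(undistort(line, k)) for line in lines) for k in candidates]
    return float(candidates[int(np.argmin(costs))])


# Synthetic usage: two straight lines distorted with a known k, then recovered.
xs = np.linspace(-0.8, 0.8, 50)
line_a = np.stack([xs, np.full_like(xs, 0.5)], axis=1)
line_b = np.stack([np.full_like(xs, -0.4), xs], axis=1)
k_true = -0.2
observed = [distort(line_a, k_true), distort(line_b, k_true)]
k_est = estimate_k(observed, np.round(np.arange(-0.5, 0.501, 0.05), 4))
print(f"estimated k = {k_est:.2f}")
```

In real outdoor images the hard part is the one the abstract stresses: finding enough long, uninterrupted line segments to feed into a cost like `line_residual`, which is what a dataset of annotated straight lines supports.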

Shadow Erosion and Nighttime Adaptability for Camera-Based Automated Driving Applications

Apr 11, 2025

Abstract: Enhancement of images from RGB cameras is of particular interest due to its wide and ever-growing range of applications, such as medical imaging, satellite imaging, and automated driving. In autonomous driving, various techniques are used to enhance image quality under challenging lighting conditions. These include artificial augmentation to improve visibility in poor nighttime conditions, illumination-invariant imaging to reduce the impact of lighting variations, and shadow mitigation to ensure consistent image clarity in bright daylight. This paper proposes a pipeline for Shadow Erosion and Nighttime Adaptability in images for automated driving applications that preserves color and texture details. The Shadow Erosion and Nighttime Adaptability pipeline is compared to the widely used CLAHE (Contrast Limited Adaptive Histogram Equalization) technique and evaluated using illumination uniformity and visual perception quality metrics. The results also demonstrate a significant improvement over CLAHE when the pipeline is used with a YOLO-based drivable area segmentation algorithm.

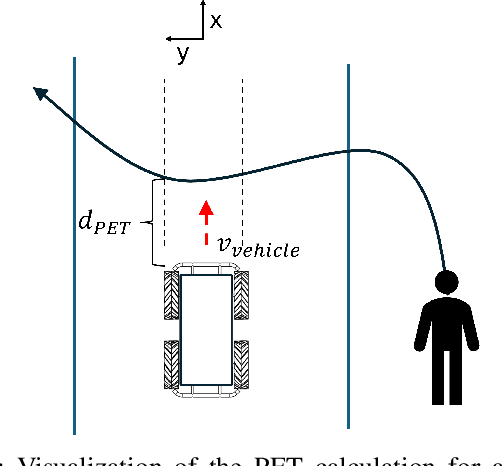

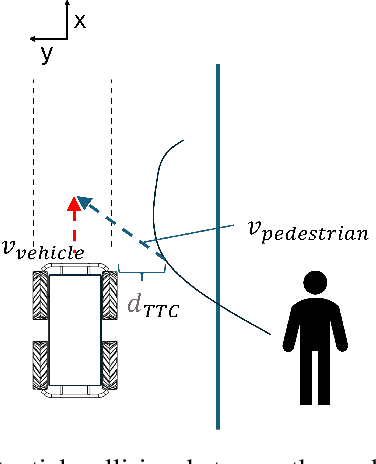

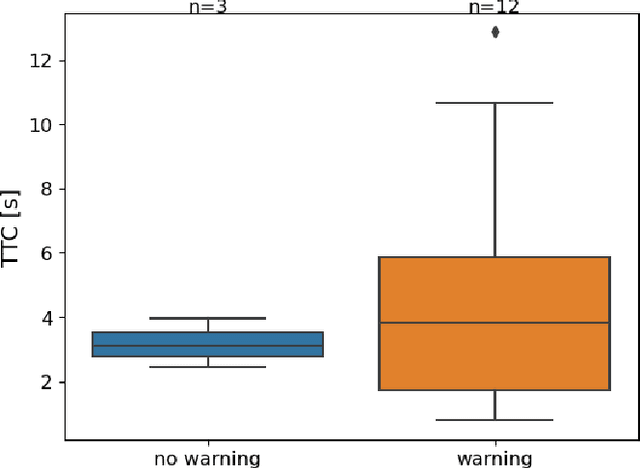

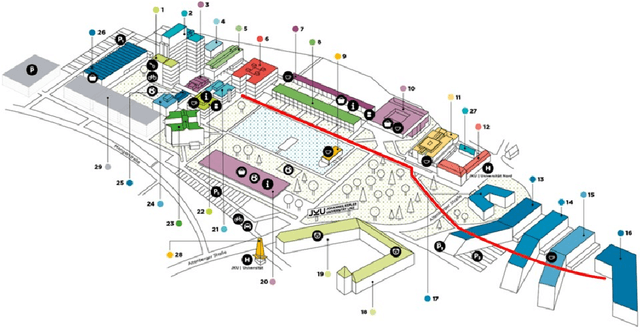

Evaluating Pedestrian Risks in Shared Spaces Through Autonomous Vehicle Experiments on a Fixed Track

Apr 11, 2025

Abstract: The majority of research on safety in autonomous vehicles has been conducted in structured and controlled environments. However, research on safety in unregulated pedestrian areas is scarce, especially regarding interactions with public transport vehicles such as trams. This study investigates pedestrian responses to an alert system in this context by replicating the real-world scenario with an autonomous vehicle on a fixed track. The results show that safety measures from other contexts can be adapted to shared spaces with trams, where fixed tracks heighten the risks at unregulated crossings.

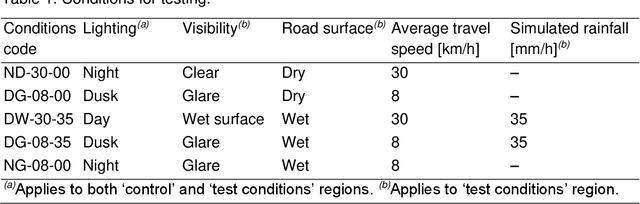

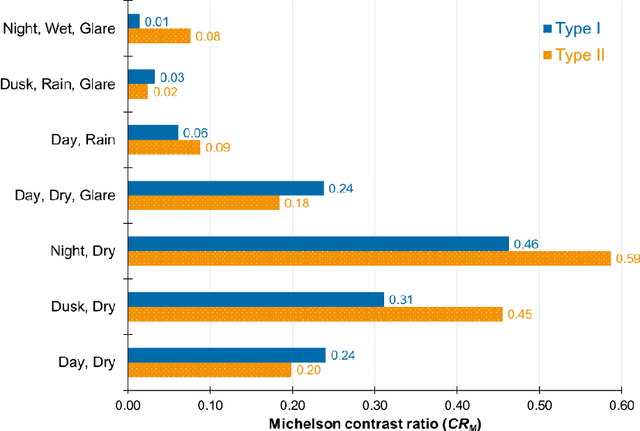

Inadequate contrast ratio of road markings as an indicator for ADAS failure

Oct 17, 2024

Abstract: Road markings have been reported as critical road safety features, equally needed by human drivers and by the machine vision technologies utilised by advanced driver assistance systems (ADAS) and in driving automation. Road markings are visible because their colour contrasts with the roadway surface. During recent testing of an open-source camera-based ADAS under several visibility conditions (day, night, rain, glare), significant failures in trajectory planning were recorded and quantified. Under poor visibility conditions, the ADAS was consistently more reliable with Type II road markings (i.e. structured markings that facilitate moisture drainage) than with Type I road markings (i.e. flat lines). To better understand these failures, the contrast ratio of the road markings that the tested ADAS was detecting for traffic lane recognition was analysed. The highest contrast ratio (greater than 0.5, calculated with the Michelson equation) was measured at night in the absence of confounding factors, with a statistically significant difference of 0.1 in favour of Type II road markings over Type I. Under daylight conditions, the contrast ratio was reduced, with slightly higher values measured for Type I. Rain or wet roads caused the contrast ratio to deteriorate, with Type II road markings exhibiting a significantly higher contrast ratio than Type I, even though the values were low (less than 0.1). These findings matched the output of the ADAS related to traffic lane detection and underlined the importance of road marking visibility. Inadequate lane recognition by the ADAS was indeed associated with a very low contrast ratio of road markings. Importantly, a specific minimum contrast ratio value could not be determined, owing to the complexity of ADAS algorithms...
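For reference, the Michelson contrast ratio mentioned above is commonly written as C = (Lmax - Lmin) / (Lmax + Lmin), where Lmax and Lmin are the higher and lower of the two luminances being compared (here, road marking and road surface). A minimal sketch, assuming both inputs are non-negative mean luminances in the same unit (for example cd/m²); the function name and the example values are hypothetical and are not measurements from the study:

```python
def michelson_contrast(marking_luminance: float, road_luminance: float) -> float:
    """Michelson contrast between a road marking and the adjacent road surface.

    Inputs are assumed to be non-negative luminances in the same unit.
    """
    l_max = max(marking_luminance, road_luminance)
    l_min = min(marking_luminance, road_luminance)
    if l_max + l_min == 0:
        raise ValueError("both luminances are zero; contrast is undefined")
    return (l_max - l_min) / (l_max + l_min)


# Hypothetical luminances: a marking three times as bright as the surface gives C = 0.5.
print(michelson_contrast(30.0, 10.0))
```

Because C lies between 0 and 1 for non-negative inputs and does not depend on which surface is brighter, values such as "greater than 0.5" at night and "less than 0.1" in rain can be compared directly across conditions.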

Want a Ride? Attitudes Towards Autonomous Driving and Behavior in Autonomous Vehicles

Sep 04, 2024

Abstract: Previous research has focused on either attitudes towards autonomous driving or behaviors associated with it. In this paper, we bridge these two dimensions by exploring how attitudes towards autonomous driving influence behavior in an autonomous car. We conducted a field experiment with twelve participants engaged in non-driving-related tasks. Our findings indicate that attitudes towards autonomous driving do not affect participants' interventions in vehicle control or their eye glance behavior. Therefore, studies of autonomous driving technology that lack field tests might be unreliable for assessing the potential behaviors, attitudes, and acceptance of autonomous vehicles.

Integrating Naturalistic Insights in Objective Multi-Vehicle Safety Framework

Aug 19, 2024

Abstract: As autonomous vehicle technology advances, the precise assessment of safety in complex traffic scenarios becomes crucial, especially in mixed-vehicle environments where human perception of safety must be taken into account. This paper presents a framework for assessing traffic safety in multi-vehicle situations that allows diverse objective safety metrics to be used simultaneously. Additionally, it allows the subjective perception of safety to be integrated by adjusting model parameters. The framework was applied to evaluate various model configurations in highway car-following scenarios using naturalistic driving datasets. The evaluation showed outstanding model performance, particularly when multiple objective safety measures were integrated. Furthermore, performance improved significantly when all surrounding vehicles were considered.
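To illustrate what combining objective metrics over several vehicles can look like, the sketch below computes two widely used car-following measures, time-to-collision (TTC) and time headway (THW), for every vehicle ahead of the ego vehicle and keeps the most critical value of each. This is a minimal sketch under stated assumptions, not the paper's framework: the abstract does not name its metrics, so the choice of TTC and THW, the minimum-over-vehicles aggregation, and all names (`Neighbour`, `time_to_collision`, `time_headway`, `most_critical`) are hypothetical. Gaps are bumper-to-bumper distances in metres and speeds are in m/s.

```python
import math
from dataclasses import dataclass


@dataclass
class Neighbour:
    gap_m: float      # bumper-to-bumper distance from ego to this leading vehicle
    speed_mps: float  # longitudinal speed of this leading vehicle


def time_to_collision(gap_m: float, ego_speed: float, lead_speed: float) -> float:
    """TTC = gap / closing speed; infinite when the ego is not closing in."""
    closing = ego_speed - lead_speed
    return gap_m / closing if closing > 0 else math.inf


def time_headway(gap_m: float, ego_speed: float) -> float:
    """THW = gap / ego speed; infinite when the ego is stationary."""
    return gap_m / ego_speed if ego_speed > 0 else math.inf


def most_critical(ego_speed: float, leaders: list[Neighbour]) -> dict[str, float]:
    """Smallest TTC and THW over all leading vehicles (hypothetical aggregation)."""
    ttcs = [time_to_collision(n.gap_m, ego_speed, n.speed_mps) for n in leaders]
    thws = [time_headway(n.gap_m, ego_speed) for n in leaders]
    return {"ttc_s": min(ttcs, default=math.inf), "thw_s": min(thws, default=math.inf)}


# Ego at 25 m/s behind two vehicles: TTCs of 2.0 s and 10.0 s, THWs of 0.8 s and 2.0 s.
print(most_critical(25.0, [Neighbour(20.0, 15.0), Neighbour(50.0, 20.0)]))
```

Taking the minimum is the simplest way to let the most critical surrounding vehicle dominate; a framework that weights metrics or vehicles differently, or tunes thresholds to match perceived safety, would replace `most_critical` with its own combination rule.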

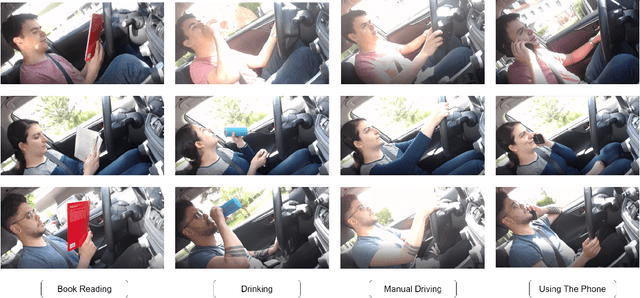

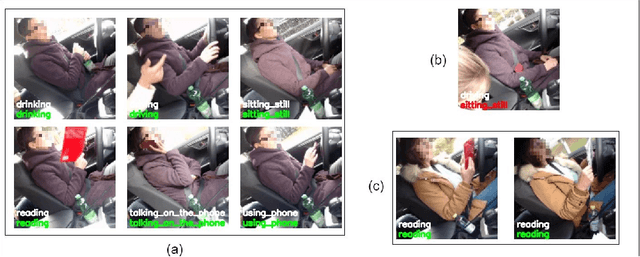

Automated Vehicle Driver Monitoring Dataset from Real-World Scenarios

Aug 19, 2024

Abstract: From SAE Level 3 automation onwards, drivers are allowed to engage in activities that are not directly related to driving during their journey. However, at Level 3, a misunderstanding of the system's capabilities might lead drivers to engage in secondary tasks that could impair their ability to react to challenging traffic situations. Anticipating driver activity allows risky behaviors to be detected early, helping to prevent accidents. To predict driver activity, a deep learning network needs to be trained on a dataset. However, training on simulation-based datasets and then transferring to real-world data for prediction has proven suboptimal. Hence, this paper presents a real-world driver activity dataset, openly accessible on IEEE Dataport, which encompasses various activities that occur in autonomous driving scenarios under various illumination and weather conditions. Results from the training process showed that the dataset provides an excellent benchmark for implementing models for driver activity recognition.

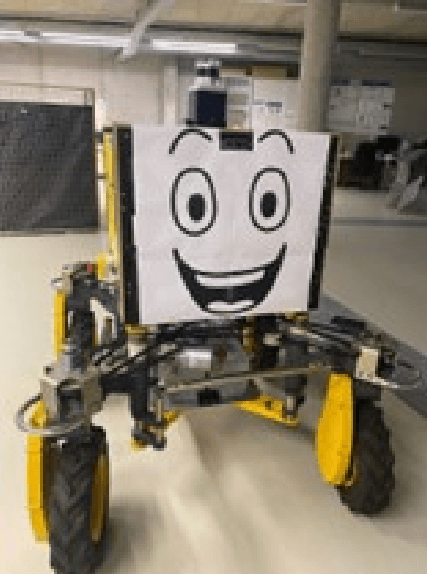

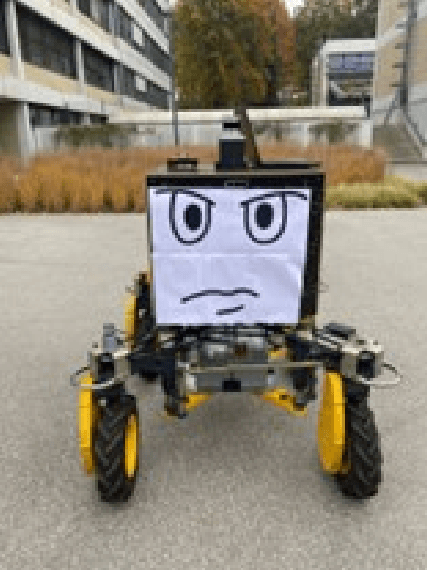

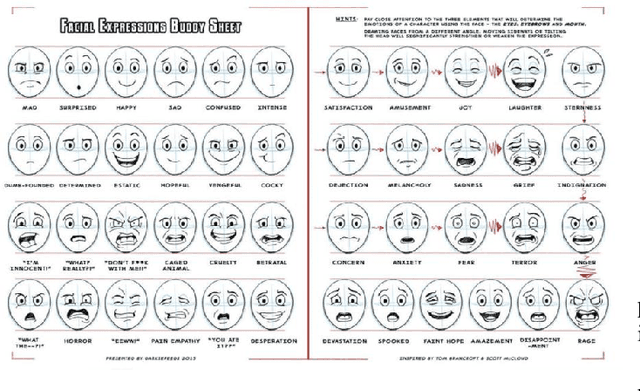

Facial Features Integration in Last Mile Delivery Robots

Apr 15, 2024

Abstract: Delivery services have undergone technological advancements, with robots now delivering packages directly to recipients. While these robots are designed for efficient functionality, they have not been specifically designed for interaction with humans. Building on the premise that incorporating human-like characteristics into a robot can positively affect technology acceptance, this study explores human reactions to a robot equipped with facial expressions. The findings indicate a correlation between anthropomorphic features and the observed responses.

IAMCV Multi-Scenario Vehicle Interaction Dataset

Mar 13, 2024

Abstract: The acquisition and analysis of high-quality sensor data are an essential requirement in shaping the development of fully autonomous driving systems. This process is indispensable for enhancing road safety and ensuring the effectiveness of technological advancements in the automotive industry. This study introduces the Interaction of Autonomous and Manually-Controlled Vehicles (IAMCV) dataset, a novel and extensive dataset focused on inter-vehicle interactions. The dataset, captured with a sophisticated array of sensors including Light Detection and Ranging (LiDAR), cameras, an Inertial Measurement Unit/Global Positioning System, and vehicle bus data acquisition, provides a comprehensive representation of real-world driving scenarios that include roundabouts, intersections, country roads, and highways, recorded across diverse locations in Germany. Furthermore, the study shows the versatility of the IAMCV dataset through several proof-of-concept use cases. First, an unsupervised trajectory clustering algorithm illustrates the dataset's capability for categorizing vehicle movements without the need for labeled training data. Second, we compare an online camera calibration method with the Robot Operating System-based standard, using images captured in the dataset. Finally, a preliminary test employing the YOLOv8 object-detection model is conducted, accompanied by reflections on the transferability of object detection across various LiDAR resolutions. These use cases underscore the practical utility of the collected dataset, emphasizing its potential to advance research and innovation in the area of intelligent vehicles.

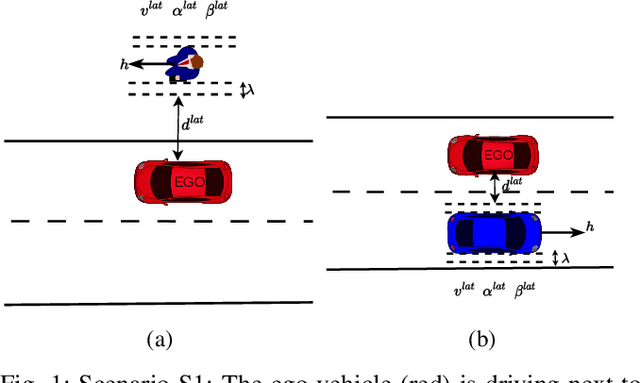

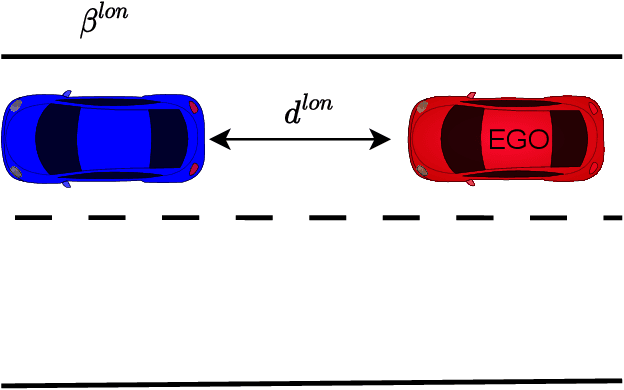

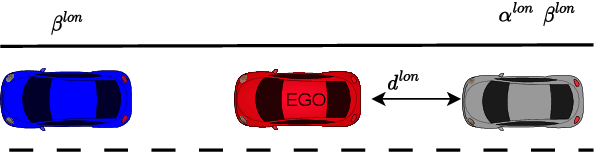

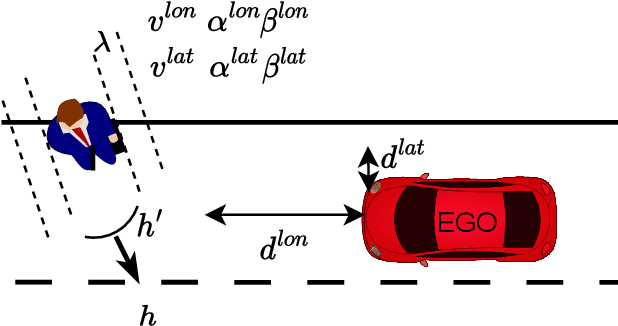

Extraction of Road Users' Behavior From Realistic Data According to Assumptions in Safety-Related Models for Automated Driving Systems

Jul 31, 2023

Abstract: In this work, we used the methodology outlined in IEEE Standard 2846-2022, "Assumptions in Safety-Related Models for Automated Driving Systems", to extract information on the behavior of other road users in driving scenarios. This method includes defining high-level scenarios, determining kinematic characteristics, evaluating safety relevance, and making assumptions about reasonably predictable behaviors. The assumptions were expressed as kinematic bounds. The numerical values for these bounds were extracted using Python scripts that process realistic data from the UniD dataset. The resulting information enables Automated Driving System designers to specify the parameters and limits of a road user's state in a specific scenario. This information can be used to establish starting conditions for testing a vehicle equipped with an Automated Driving System in simulation or on real roads.
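The extraction of kinematic bounds from recorded trajectories can be sketched as follows: accelerations are obtained by finite differences of sampled speeds, and lower and upper percentiles of the pooled values serve as bounds on reasonably predictable behavior. This is a minimal sketch, not the authors' scripts. It assumes each trajectory is already available as a 1-D array of longitudinal speed sampled at a fixed interval `dt`, and the function name `acceleration_bounds` and the 1st/99th percentile defaults are hypothetical choices rather than values taken from IEEE 2846-2022 or the paper.

```python
import numpy as np


def acceleration_bounds(speed_tracks, dt, lower_pct=1.0, upper_pct=99.0):
    """Return (lower, upper) acceleration bounds in m/s^2 from speed traces in m/s.

    speed_tracks: iterable of 1-D arrays, one per road user, sampled every dt seconds.
    Percentile defaults are illustrative assumptions, not values from any standard.
    """
    # Finite-difference acceleration per track; tracks with fewer than 2 samples are skipped.
    accels = [np.diff(np.asarray(v, dtype=float)) / dt for v in speed_tracks if len(v) > 1]
    if not accels:
        raise ValueError("need at least one track with two or more samples")
    pooled = np.concatenate(accels)
    return float(np.percentile(pooled, lower_pct)), float(np.percentile(pooled, upper_pct))


# Hypothetical tracks: one road user speeding up at 1 m/s^2, one braking at 1 m/s^2.
dt = 0.1
tracks = [np.arange(5) * 0.1, 1.0 - np.arange(5) * 0.1]
print(acceleration_bounds(tracks, dt, lower_pct=0.0, upper_pct=100.0))
```

Using percentiles instead of the raw minimum and maximum keeps a single noisy sample from defining the bound; in practice the speed signal would usually be smoothed before differencing, and bounds would be computed separately for each high-level scenario and road-user type.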