Gregory Schröder

IAMCV Multi-Scenario Vehicle Interaction Dataset

Mar 13, 2024

Abstract: The acquisition and analysis of high-quality sensor data constitute an essential requirement in shaping the development of fully autonomous driving systems. This process is indispensable for enhancing road safety and ensuring the effectiveness of the technological advancements in the automotive industry. This study introduces the Interaction of Autonomous and Manually-Controlled Vehicles (IAMCV) dataset, a novel and extensive dataset focused on inter-vehicle interactions. The dataset, enriched with a sophisticated array of sensors such as Light Detection and Ranging, cameras, Inertial Measurement Unit/Global Positioning System, and vehicle bus data acquisition, provides a comprehensive representation of real-world driving scenarios that include roundabouts, intersections, country roads, and highways, recorded across diverse locations in Germany. Furthermore, the study shows the versatility of the IAMCV dataset through several proof-of-concept use cases. Firstly, an unsupervised trajectory clustering algorithm illustrates the dataset's capability in categorizing vehicle movements without the need for labeled training data. Secondly, we compare an online camera calibration method with the Robot Operating System-based standard, using images captured in the dataset. Finally, a preliminary test employing the YOLOv8 object-detection model is conducted, augmented by reflections on the transferability of object detection across various LIDAR resolutions. These use cases underscore the practical utility of the collected dataset, emphasizing its potential to advance research and innovation in the area of intelligent vehicles.

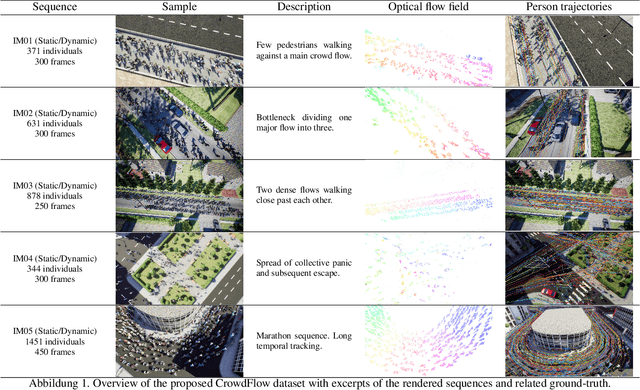

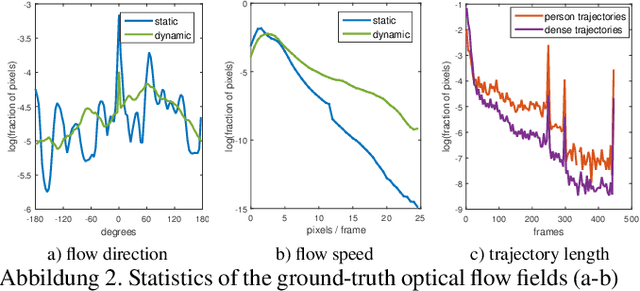

Optical Flow Dataset and Benchmark for Visual Crowd Analysis

Nov 17, 2018

Abstract: The performance of optical flow algorithms depends greatly on the specifics of the content and the application for which they are used. Existing, well-established optical flow datasets are limited to rather particular content, none of which is close to crowd behavior analysis, even though such applications rely heavily on optical flow. We introduce a new optical flow dataset that exploits the possibilities of a recent video engine to generate sequences with ground-truth optical flow for large crowds in different scenarios. We break with the trend of the last decade of introducing ever-increasing displacements to pose new difficulties. Instead, we focus on real-world surveillance scenarios in which numerous small, partly independent, non-rigidly moving objects observed over a long temporal range pose a challenge. By evaluating different optical flow algorithms, we find that results on established datasets cannot be transferred to these new challenges. In exhaustive experiments, we are able to provide new insight into optical flow for crowd analysis. Finally, the results have been validated on the real-world UCF crowd tracking benchmark, achieving competitive results compared to more sophisticated state-of-the-art crowd tracking approaches.
