Nikolaos N. Vlassis

A Large Language Model and Denoising Diffusion Framework for Targeted Design of Microstructures with Commands in Natural Language

Sep 22, 2024

Abstract:Microstructure plays a critical role in determining the macroscopic properties of materials, with applications spanning alloy design, MEMS devices, and tissue engineering, among many others. Computational frameworks have been developed to capture the complex relationship between microstructure and material behavior. However, despite these advancements, the steep learning curve associated with domain-specific knowledge and complex algorithms restricts the broader application of these tools. To lower this barrier, we propose a framework that integrates Natural Language Processing (NLP), Large Language Models (LLMs), and Denoising Diffusion Probabilistic Models (DDPMs) to enable microstructure design using intuitive natural language commands. Our framework employs contextual data augmentation, driven by a pretrained LLM, to generate and expand a diverse dataset of microstructure descriptors. A retrained NER model extracts relevant microstructure descriptors from user-provided natural language inputs, which are then used by the DDPM to generate microstructures with targeted mechanical properties and topological features. The NLP and DDPM components of the framework are modular, allowing for separate training and validation, which ensures flexibility in adapting the framework to different datasets and use cases. A surrogate model system is employed to rank and filter generated samples based on their alignment with target properties. Demonstrated on a database of nonlinear hyperelastic microstructures, this framework serves as a prototype for accessible inverse design of microstructures, starting from intuitive natural language commands.

A review on data-driven constitutive laws for solids

May 06, 2024

Abstract:This review article highlights state-of-the-art data-driven techniques to discover, encode, surrogate, or emulate constitutive laws that describe the path-independent and path-dependent response of solids. Our objective is to provide an organized taxonomy to a large spectrum of methodologies developed in the past decades and to discuss the benefits and drawbacks of the various techniques for interpreting and forecasting mechanics behavior across different scales. Distinguishing between machine-learning-based and model-free methods, we further categorize approaches based on their interpretability and on their learning process/type of required data, while discussing the key problems of generalization and trustworthiness. We attempt to provide a road map of how these can be reconciled in a data-availability-aware context. We also touch upon relevant aspects such as data sampling techniques, design of experiments, verification, and validation.

Synthesizing realistic sand assemblies with denoising diffusion in latent space

Jun 07, 2023

Abstract:The shapes and morphological features of grains in sand assemblies have far-reaching implications in many engineering applications, such as geotechnical engineering, computer animations, petroleum engineering, and concentrated solar power. Yet, our understanding of the influence of grain geometries on macroscopic response is often only qualitative, due to the limited availability of high-quality 3D grain geometry data. In this paper, we introduce a denoising diffusion algorithm that uses a set of point clouds collected from the surface of individual sand grains to generate grains in the latent space. By employing a point cloud autoencoder, the three-dimensional point cloud structures of sand grains are first encoded into a lower-dimensional latent space. A generative denoising diffusion probabilistic model is trained to produce synthetic sand that maximizes the log-likelihood of the generated samples belonging to the original data distribution measured by a Kullback-Leibler divergence. Numerical experiments suggest that the proposed method is capable of generating realistic grains with morphology, shapes and sizes consistent with the training data inferred from an F50 sand database. We then use a rigid contact dynamic simulator to pour the synthetic sand in a confined volume to form granular assemblies in a static equilibrium state with targeted distribution properties. To ensure third-party validation, 50,000 synthetic sand grains and the 1,542 real synchrotron microcomputed tomography (SMT) scans of the F50 sand, as well as the granular assemblies composed of synthetic sand grains are made available in an open-source repository.

Denoising diffusion algorithm for inverse design of microstructures with fine-tuned nonlinear material properties

Feb 24, 2023

Abstract:In this paper, we introduce a denoising diffusion algorithm to discover microstructures with nonlinear fine-tuned properties. Denoising diffusion probabilistic models are generative models that use diffusion-based dynamics to gradually denoise images and generate realistic synthetic samples. By learning the reverse of a Markov diffusion process, we design an artificial intelligence to efficiently manipulate the topology of microstructures to generate a massive number of prototypes that exhibit constitutive responses sufficiently close to designated nonlinear constitutive responses. To identify the subset of microstructures with sufficiently precise fine-tuned properties, a convolutional neural network surrogate is trained to replace high-fidelity finite element simulations to filter out prototypes outside the admissible range. The results of this study indicate that the denoising diffusion process is capable of creating microstructures of fine-tuned nonlinear material properties within the latent space of the training data. More importantly, the resulting algorithm can be easily extended to incorporate additional topological and geometric modifications by introducing high-dimensional structures embedded in the latent space. The algorithm is tested on the open-source mechanical MNIST data set. Consequently, this algorithm is not only capable of performing inverse design of nonlinear effective media but also learns the nonlinear structure-property map to quantitatively understand the multiscale interplay among the geometry and topology and their effective macroscopic properties.
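To illustrate the Markov diffusion process this abstract refers to, the sketch below implements the standard closed-form forward (noising) step of a denoising diffusion probabilistic model, $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$. The linear noise schedule, the step count, the toy input, and all function names are illustrative assumptions rather than details taken from the paper, and the learned reverse (denoising) network is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Assumed linear noise schedule (hypothetical values, not from the paper)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5, 0.0]  # toy "image" flattened to four pixels
x_mid = forward_diffuse(x0, 499, alpha_bars, rng)
x_end = forward_diffuse(x0, 999, alpha_bars, rng)
```

Under this assumed schedule the retained signal fraction `alpha_bars[t]` decays monotonically, so `x_end` is close to pure Gaussian noise. A generative model of this type is trained to invert these steps one at a time.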

Design of experiments for the calibration of history-dependent models via deep reinforcement learning and an enhanced Kalman filter

Sep 27, 2022

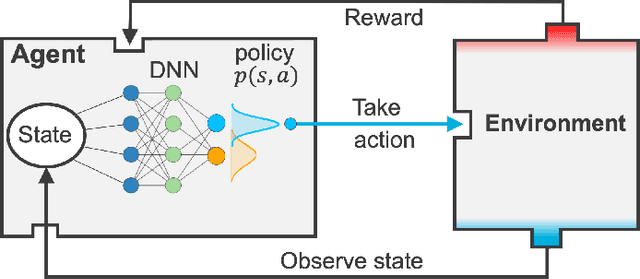

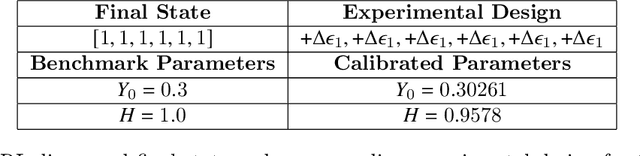

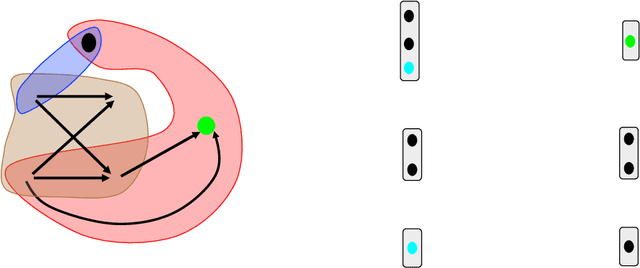

Abstract:Experimental data is costly to obtain, which makes it difficult to calibrate complex models. For many models an experimental design that produces the best calibration given a limited experimental budget is not obvious. This paper introduces a deep reinforcement learning (RL) algorithm for design of experiments that maximizes the information gain measured by Kullback-Leibler (KL) divergence obtained via the Kalman filter (KF). This combination enables experimental design for rapid online experiments where traditional methods are too costly. We formulate possible configurations of experiments as a decision tree and a Markov decision process (MDP), where a finite choice of actions is available at each incremental step. Once an action is taken, a variety of measurements are used to update the state of the experiment. This new data leads to a Bayesian update of the parameters by the KF, which is used to enhance the state representation. In contrast to the Nash-Sutcliffe efficiency (NSE) index, which requires additional sampling to test hypotheses for forward predictions, the KF can lower the cost of experiments by directly estimating the values of new data acquired through additional actions. In this work our applications focus on mechanical testing of materials. Numerical experiments with complex, history-dependent models are used to verify the implementation and benchmark the performance of the RL-designed experiments.
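The information-gain measure described in this abstract can be illustrated with a scalar toy problem: a Kalman update of a Gaussian parameter estimate, followed by the Kullback-Leibler divergence between the posterior and the prior. The linear measurement model $y = h\,\theta + \text{noise}$, the choice of KL direction (posterior relative to prior), and every name and number below are assumptions made for illustration, not the paper's formulation.

```python
import math


def kalman_update(mean, var, y, h, noise_var):
    """Scalar Kalman update for the assumed model y = h * theta + noise."""
    gain = var * h / (h * h * var + noise_var)
    post_mean = mean + gain * (y - h * mean)
    post_var = (1.0 - gain * h) * var
    return post_mean, post_var


def kl_gaussian(mean_p, var_p, mean_q, var_q):
    """KL(p || q) for univariate Gaussians p and q."""
    return 0.5 * (math.log(var_q / var_p)
                  + (var_p + (mean_p - mean_q) ** 2) / var_q - 1.0)


def information_gain(mean, var, y, h, noise_var):
    """KL divergence of the Kalman posterior from the prior (assumed direction)."""
    post_mean, post_var = kalman_update(mean, var, y, h, noise_var)
    return kl_gaussian(post_mean, post_var, mean, var)


prior_mean, prior_var = 0.0, 1.0
# Hypothetical experiments: one sensitive to the parameter, one nearly insensitive.
gain_sensitive = information_gain(prior_mean, prior_var, y=1.0, h=1.0, noise_var=1.0)
gain_insensitive = information_gain(prior_mean, prior_var, y=0.1, h=0.1, noise_var=1.0)
```

In this toy setting the experiment with the larger sensitivity `h` yields the larger information gain. A quantity of this kind could serve as the reward an RL agent accumulates when choosing which experiment to run next.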

Geometric deep learning for computational mechanics Part II: Graph embedding for interpretable multiscale plasticity

Jul 30, 2022

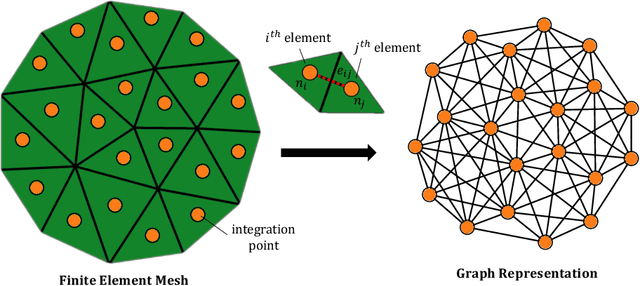

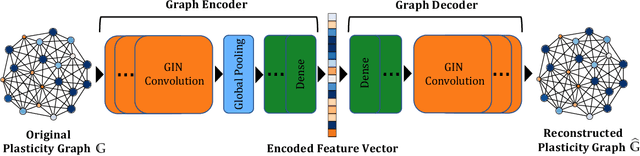

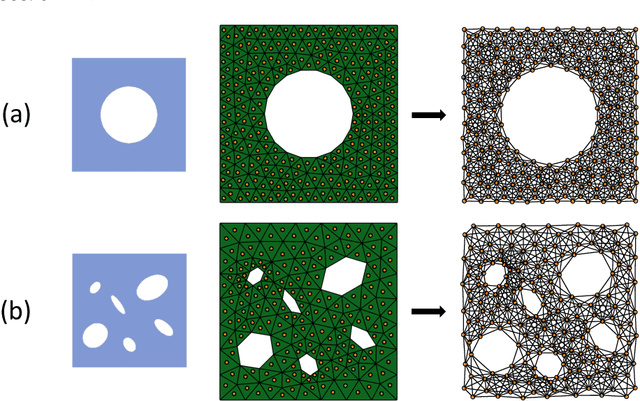

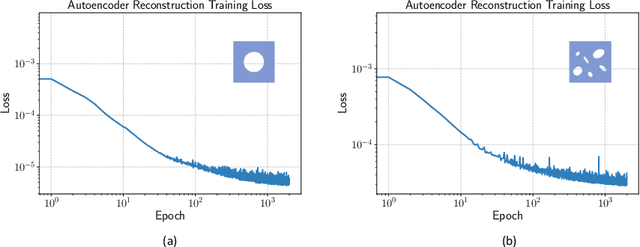

Abstract:The history-dependent behaviors of classical plasticity models are often driven by internal variables evolved according to phenomenological laws. The difficulty of interpreting how these internal variables represent a history of deformation, the lack of direct measurement of these internal variables for calibration and validation, and the weak physical underpinning of those phenomenological laws have long been criticized as barriers to creating realistic models. In this work, geometric machine learning on graph data (e.g. finite element solutions) is used as a means to establish a connection between nonlinear dimensional reduction techniques and plasticity models. Geometric learning-based encoding on graphs allows the embedding of rich time-history data onto a low-dimensional Euclidean space such that the evolution of plastic deformation can be predicted in the embedded feature space. A corresponding decoder can then convert these low-dimensional internal variables back into a weighted graph such that the dominating topological features of plastic deformation can be observed and analyzed.

MD-inferred neural network monoclinic finite-strain hyperelasticity models for $\beta$-HMX: Sobolev training and validation against physical constraints

Nov 29, 2021

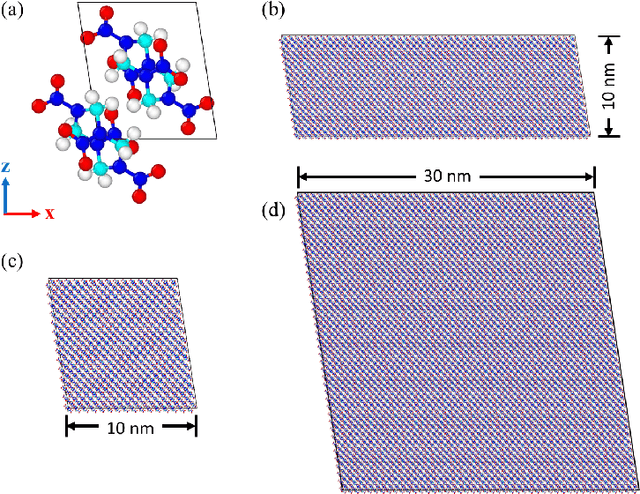

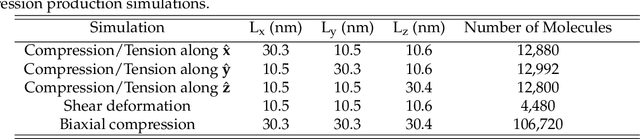

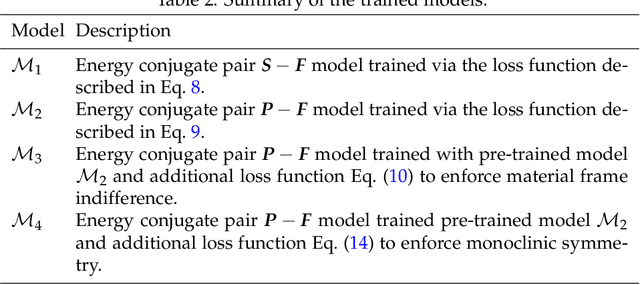

Abstract:We present a machine learning framework to train and validate neural networks to predict the anisotropic elastic response of the monoclinic organic molecular crystal $\beta$-HMX in the geometrically nonlinear regime. A filtered molecular dynamics (MD) simulation database is used to train the neural networks with a Sobolev norm that uses the stress measure and a reference configuration to deduce the elastic stored energy functional. To improve the accuracy of the elasticity tangent predictions originating from the learned stored energy, a transfer learning technique is used to introduce additional tangential constraints from the data while necessary conditions (e.g. strong ellipticity, crystallographic symmetry) for the correctness of the model are either introduced as additional physical constraints or incorporated in the validation tests. Assessment of the neural networks is based on (1) the accuracy with which they reproduce the bottom-line constitutive responses predicted by MD, (2) detailed examination of their stability and uniqueness, and (3) admissibility of the predicted responses with respect to continuum mechanics theory in the finite-deformation regime. We compare the neural networks' training efficiency under different Sobolev constraints and assess the models' accuracy and robustness against MD benchmarks for $\beta$-HMX.

Data-driven discovery of interpretable causal relations for deep learning material laws with uncertainty propagation

May 20, 2021

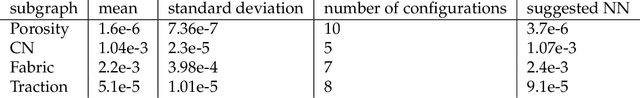

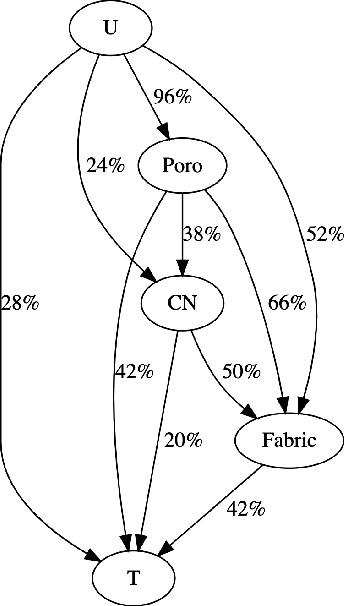

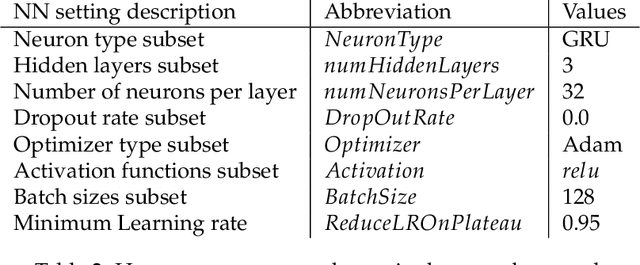

Abstract:This paper presents a computational framework that generates ensemble predictive mechanics models with uncertainty quantification (UQ). We first develop a causal discovery algorithm to infer causal relations among time-history data measured during each representative volume element (RVE) simulation through a directed acyclic graph (DAG). With multiple plausible sets of causal relationships estimated from multiple RVE simulations, the predictions are propagated in the derived causal graph while using a deep neural network equipped with dropout layers as a Bayesian approximation for uncertainty quantification. We select two representative numerical examples (traction-separation laws for frictional interfaces, elastoplasticity models for granular assemblies) to examine the accuracy and robustness of the proposed causal discovery method for the common material law predictions in civil engineering applications.

Sobolev training of thermodynamic-informed neural networks for smoothed elasto-plasticity models with level set hardening

Oct 15, 2020

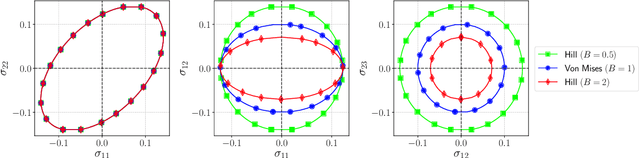

Abstract:We introduce a deep learning framework designed to train smoothed elastoplasticity models with interpretable components, such as a smoothed stored elastic energy function, a yield surface, and a plastic flow that are evolved based on a set of deep neural network predictions. By recasting the yield function as an evolving level set, we introduce a machine learning approach to predict the solutions of the Hamilton-Jacobi equation that governs the hardening mechanism. This machine learning hardening law may recover classical hardening models and discover new mechanisms that are otherwise very difficult to anticipate and hand-craft. This treatment enables us to use supervised machine learning to generate models that are thermodynamically consistent and interpretable, yet also exhibit excellent learning capacity. Using a 3D FFT solver to create a polycrystal database, numerical experiments are conducted and the implementations of each component of the models are individually verified. Our numerical experiments reveal that this new approach provides more robust and accurate forward predictions of cyclic stress paths than those obtained from black-box deep neural network models such as a recurrent GRU neural network, a 1D convolutional neural network, and a multi-step feedforward model.
