Natalie Voets

Modality-Agnostic Input Channels Enable Segmentation of Brain lesions in Multimodal MRI with Sequences Unavailable During Training

Sep 11, 2025

Abstract: Segmentation models are important tools for the detection and analysis of lesions in brain MRI. Depending on the type of brain pathology that is imaged, MRI scanners can acquire multiple, different image modalities (contrasts). Most segmentation models for multimodal brain MRI are restricted to fixed modalities and cannot effectively process new ones at inference. Some models generalize to unseen modalities but may lose discriminative modality-specific information. This work aims to develop a model that can perform inference on data that contain image modalities unseen during training, previously seen modalities, and heterogeneous combinations of both, thus allowing a user to utilize any available imaging modalities. We demonstrate this is possible with a simple and thus practical alteration to the U-net architecture: integrating a modality-agnostic input channel or pathway alongside modality-specific input channels. To train this modality-agnostic component, we develop an image augmentation scheme that synthesizes artificial MRI modalities. Augmentations differentially alter the appearance of pathological and healthy brain tissue to create artificial contrasts between them while maintaining realistic anatomical integrity. We evaluate the method using 8 MRI databases that include 5 types of pathologies (stroke, tumours, traumatic brain injury, multiple sclerosis and white matter hyperintensities) and 8 modalities (T1, T1+contrast, T2, PD, SWI, DWI, ADC and FLAIR). The results demonstrate that the approach preserves the ability to effectively process MRI modalities encountered during training, while being able to process new, unseen modalities to improve its segmentation. Project code: https://github.com/Anthony-P-Addison/AGN-MOD-SEG
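
The two ideas in this abstract (an extra modality-agnostic input channel, and augmentations that alter lesion and healthy tissue differently) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `synthesize_modality` and `assemble_input`, the gamma/inversion/offset transforms, their parameter ranges, and the zero-filling of absent modalities are all assumptions chosen for illustration.

```python
import numpy as np


def synthesize_modality(image, lesion_mask, rng):
    """Return an artificial contrast built from one image scaled to [0, 1].

    Hypothetical augmentation: the specific transforms are assumptions.
    """
    # A random gamma curve changes the overall tissue contrast.
    gamma = rng.uniform(0.5, 2.0)
    out = np.clip(image, 0.0, 1.0) ** gamma
    # Randomly invert intensities so bright tissue may become dark.
    if rng.random() < 0.5:
        out = 1.0 - out
    # Shift lesion voxels by a separate random offset so that pathology
    # and healthy tissue are altered differently.
    shift = rng.uniform(0.2, 0.5) * rng.choice([-1.0, 1.0])
    out = np.where(lesion_mask, out + shift, out)
    return np.clip(out, 0.0, 1.0)


def assemble_input(known, extra, known_order):
    """Stack modality-specific channels, then one modality-agnostic channel.

    known: dict mapping modality name to array; absent modalities are
    zero-filled (an assumption). extra: array for the agnostic channel.
    """
    shape = extra.shape
    channels = [known.get(name, np.zeros(shape)) for name in known_order]
    channels.append(extra)  # last channel accepts any (unseen) modality
    return np.stack(channels, axis=0)
```

During training, the agnostic channel would receive a synthetic image from `synthesize_modality`; at inference it could receive a real modality that has no dedicated channel.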

The 2025 PNPL Competition: Speech Detection and Phoneme Classification in the LibriBrain Dataset

Jun 11, 2025

Abstract: The advance of speech decoding from non-invasive brain data holds the potential for profound societal impact. Among its most promising applications is the restoration of communication to paralysed individuals affected by speech deficits such as dysarthria, without the need for high-risk surgical interventions. The ultimate aim of the 2025 PNPL competition is to produce the conditions for an "ImageNet moment" or breakthrough in non-invasive neural decoding, by harnessing the collective power of the machine learning community. To facilitate this vision we present the largest within-subject MEG dataset recorded to date (LibriBrain) together with a user-friendly Python library (pnpl) for easy data access and integration with deep learning frameworks. For the competition we define two foundational tasks (i.e. Speech Detection and Phoneme Classification from brain data), complete with standardised data splits and evaluation metrics, illustrative benchmark models, online tutorial code, a community discussion board, and public leaderboard for submissions. To promote accessibility and participation the competition features a Standard track that emphasises algorithmic innovation, as well as an Extended track that is expected to reward larger-scale computing, accelerating progress toward a non-invasive brain-computer interface for speech.

IterMask3D: Unsupervised Anomaly Detection and Segmentation with Test-Time Iterative Mask Refinement in 3D Brain MR

Apr 07, 2025

Abstract: Unsupervised anomaly detection and segmentation methods train a model to learn the training distribution as 'normal'. In the testing phase, they identify patterns that deviate from this normal distribution as 'anomalies'. To learn the 'normal' distribution, prevailing methods corrupt the images and train a model to reconstruct them. During testing, the model attempts to reconstruct corrupted inputs based on the learned 'normal' distribution. Deviations from this distribution lead to high reconstruction errors, which indicate potential anomalies. However, corrupting an input image inevitably causes information loss even in normal regions, leading to suboptimal reconstruction and an increased risk of false positives. To alleviate this, we propose IterMask3D, an iterative spatial mask-refining strategy designed for 3D brain MRI. We iteratively spatially mask areas of the image as corruption and reconstruct them, then shrink the mask based on reconstruction error. This process iteratively unmasks 'normal' areas to the model, whose information further guides the accurate reconstruction of 'normal' patterns under the remaining mask, reducing false positives. In addition, to achieve better reconstruction performance, we also propose using high-frequency image content as additional structural information to guide the reconstruction of the masked area. Extensive experiments on the detection of both synthetic and real-world imaging artifacts, as well as segmentation of various pathological lesions across multiple MRI sequences, consistently demonstrate the effectiveness of our proposed method.
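
The mask-shrinking loop described in this abstract can be sketched as follows. This is a toy illustration under stated assumptions, not the IterMask3D code: the `reconstruct` callable stands in for a trained model, the fixed `threshold` and stopping rule are hypothetical, and the high-frequency guidance mentioned in the abstract is omitted.

```python
import numpy as np


def iterative_mask_refinement(image, reconstruct, threshold, max_iters=10):
    """Shrink a mask until only poorly reconstructed voxels remain."""
    mask = np.ones(image.shape, dtype=bool)  # start with everything masked
    for _ in range(max_iters):
        # Hide the masked voxels, then let the model fill them in.
        masked_input = np.where(mask, 0.0, image)
        recon = reconstruct(masked_input, mask)
        error = np.abs(recon - image)
        # Unmask voxels that were reconstructed well; the mask never grows.
        new_mask = mask & (error > threshold)
        if new_mask.sum() == mask.sum():
            break  # no further shrinkage
        mask = new_mask
    return mask


def toy_reconstruct(masked_input, mask):
    """Stand-in model (assumption): predicts the 'normal' value 0.5 under the mask."""
    return np.where(mask, 0.5, masked_input)


# Toy image: 'normal' tissue at 0.5 with a small bright anomaly.
image = np.full((8, 8), 0.5)
image[2:4, 2:4] = 1.0
final_mask = iterative_mask_refinement(image, toy_reconstruct, threshold=0.2)
```

In this toy setting the final mask covers only the bright patch, because the stand-in model can reproduce the 'normal' value but not the anomaly.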

Feasibility of Federated Learning from Client Databases with Different Brain Diseases and MRI Modalities

Jun 17, 2024

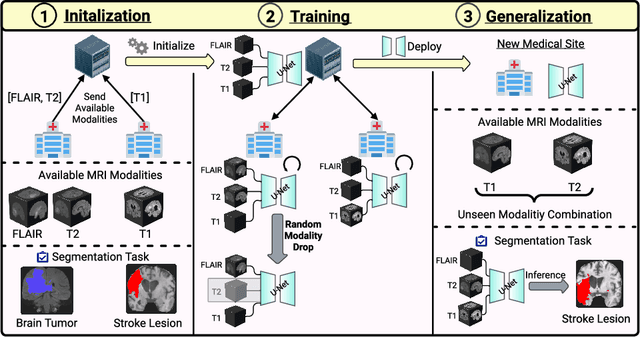

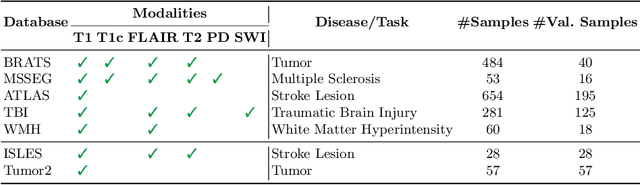

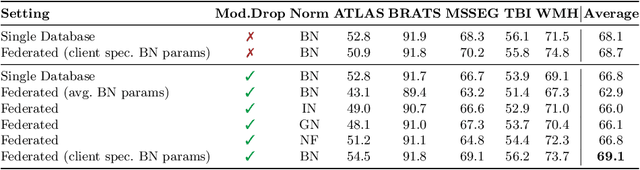

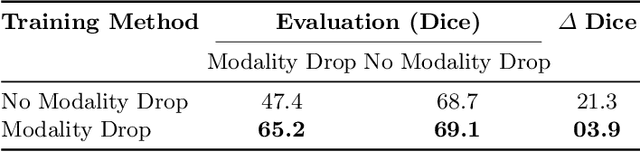

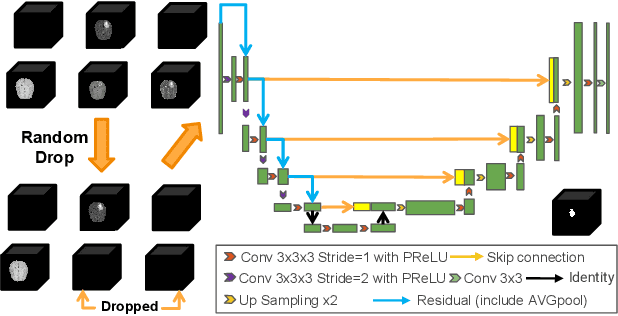

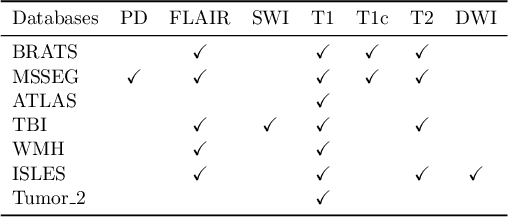

Abstract: Segmentation models for brain lesions in MRI are commonly developed for a specific disease and trained on data with a predefined set of MRI modalities. Each such model cannot segment the disease using data with a different set of MRI modalities, nor can it segment any other type of disease. Moreover, this training paradigm does not allow a model to benefit from learning from heterogeneous databases that may contain scans and segmentation labels for different types of brain pathologies and diverse sets of MRI modalities. Is it feasible to use Federated Learning (FL) for training a single model on client databases that contain scans and labels of different brain pathologies and diverse sets of MRI modalities? We demonstrate promising results by combining appropriate, simple, and practical modifications to the model and training strategy: designing a model with input channels that cover the whole set of modalities available across clients, training with random modality drop, and exploring the effects of feature normalization methods. Evaluation on 7 brain MRI databases with 5 different diseases shows that such an FL framework can train a single model that is very promising in segmenting all disease types seen during training. Importantly, it is able to segment these diseases in new databases that contain sets of modalities different from those in training clients. These results demonstrate, for the first time, the feasibility and effectiveness of using FL to train a single segmentation model on decentralised data with diverse brain diseases and MRI modalities, a necessary step towards leveraging heterogeneous real-world databases. Code will be made available at: https://github.com/FelixWag/FL-MultiDisease-MRI
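
Random modality drop, as named in this abstract, can be sketched as zeroing a random subset of the available input channels during training. The sketch below is an assumption about one reasonable form of the technique, not the authors' code: the function name `random_modality_drop`, the drop probability `p_drop`, the zero-filling, and the rule of always keeping at least one modality are hypothetical, and at least one entry of `available` must be true.

```python
import numpy as np


def random_modality_drop(x, available, rng, p_drop=0.5):
    """Zero out a random subset of the available modality channels.

    x: array of shape (C, ...) with one channel per modality in the full set.
    available: boolean array of shape (C,), true where the client has data.
    """
    # Keep each available channel with probability 1 - p_drop.
    keep = available & (rng.random(available.shape) >= p_drop)
    if not keep.any():
        # Always retain at least one available modality.
        keep[rng.choice(np.flatnonzero(available))] = True
    # Broadcast the per-channel decision over the spatial dimensions.
    gate = keep.reshape(-1, *([1] * (x.ndim - 1)))
    return x * gate, keep
```

For a client that holds only some of the modalities, the channels for the missing ones stay at zero throughout, so the same network input layout can be shared by all clients.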

Feasibility and benefits of joint learning from MRI databases with different brain diseases and modalities for segmentation

May 28, 2024

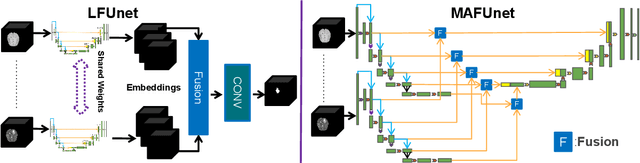

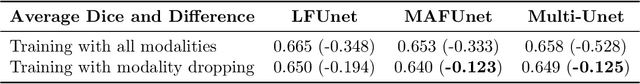

Abstract: Models for segmentation of brain lesions in multi-modal MRI are commonly trained for a specific pathology using a single database with a predefined set of MRI modalities, determined by a protocol for the specific disease. This work explores the following open questions: Is it feasible to train a model using multiple databases that contain varying sets of MRI modalities and annotations for different brain pathologies? Will this joint learning benefit performance on the sets of modalities and pathologies available during training? Will it enable analysis of new databases with different sets of modalities and pathologies? We develop and compare different methods and show that promising results can be achieved with appropriate, simple and practical alterations to the model and training framework. We experiment with 7 databases containing 5 types of brain pathologies and different sets of MRI modalities. Results demonstrate, for the first time, that joint training on multi-modal MRI databases with different brain pathologies and sets of modalities is feasible and offers practical benefits. It enables a single model to segment pathologies encountered during training in diverse sets of modalities, while facilitating segmentation of new types of pathologies, for example via follow-up fine-tuning. The insights this study provides into the potential and limitations of this paradigm should prove useful for guiding future advances in this direction. Code and pretrained models: https://github.com/WenTXuL/MultiUnet

* Accepted to MIDL 2024
