Nassim Sehad

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract: This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

Generative AI for Immersive Communication: The Next Frontier in Internet-of-Senses Through 6G

Apr 02, 2024

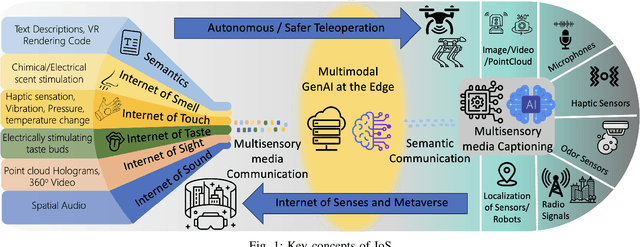

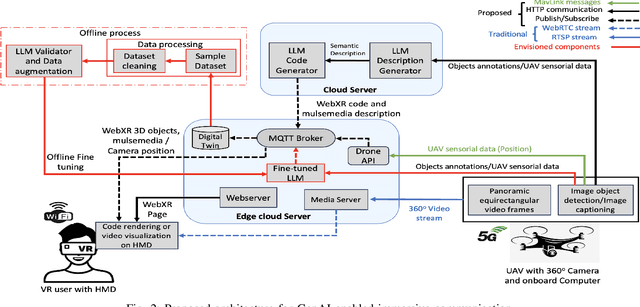

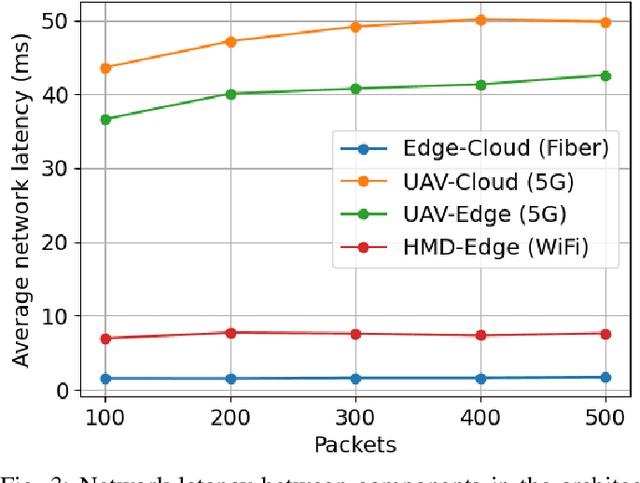

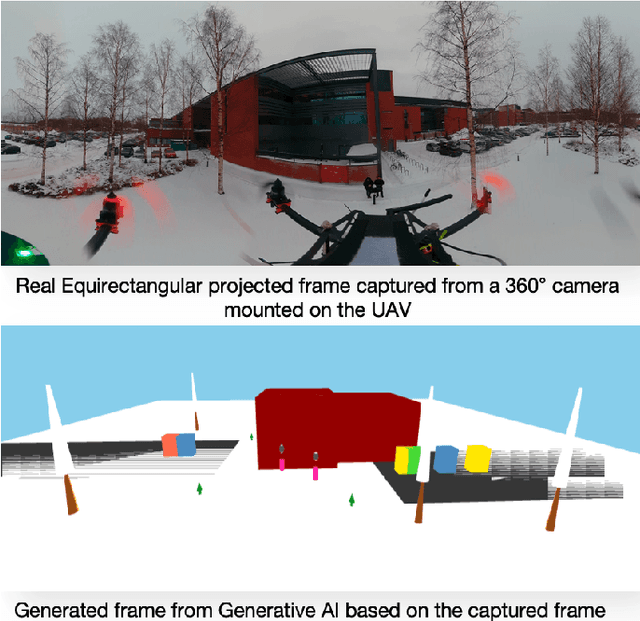

Abstract: Over the past two decades, the Internet-of-Things (IoT) has been a transformative concept, and as we approach 2030, a new paradigm known as the Internet of Senses (IoS) is emerging. Unlike conventional Virtual Reality (VR), IoS seeks to provide multi-sensory experiences, acknowledging that in our physical reality, perception extends far beyond sight and sound to encompass a range of senses. This article explores existing technologies driving immersive multi-sensory media, delving into their capabilities and potential applications. The exploration includes a comparative analysis between conventional immersive media streaming and a proposed use case that leverages semantic communication empowered by generative Artificial Intelligence (AI). The focal point of this analysis is a 99.93% reduction in bandwidth consumption under the proposed scheme. Through this comparison, we aim to underscore the practical applications of generative AI for immersive media while addressing the challenges and outlining future trajectories.

UAV Immersive Video Streaming: A Comprehensive Survey, Benchmarking, and Open Challenges

Oct 31, 2023

Abstract: Over the past decade, the utilization of UAVs has witnessed significant growth, owing to their agility, rapid deployment, and maneuverability. In particular, the use of UAV-mounted 360-degree cameras to capture omnidirectional videos has enabled truly immersive viewing experiences with up to 6DoF. However, achieving this immersive experience necessitates encoding omnidirectional videos in high resolution, leading to increased bitrates. Consequently, new challenges arise in terms of latency, throughput, perceived quality, and energy consumption for real-time streaming of such content. This paper presents a comprehensive survey of research efforts in UAV-based immersive video streaming, benchmarks popular video encoding schemes, and identifies open research challenges. Initially, we review the literature on 360-degree video coding, packaging, and streaming, with a particular focus on standardization efforts to ensure interoperability of immersive video streaming devices and services. Subsequently, we provide a comprehensive review of research efforts focused on optimizing video streaming for time-varying UAV wireless channels. Additionally, we introduce a high-resolution 360-degree video dataset captured from UAVs under different flying conditions. This dataset facilitates the evaluation of complexity and coding efficiency of software and hardware video encoders based on popular video coding standards and formats, including AVC/H.264, HEVC/H.265, VVC/H.266, VP9, and AV1. Our results demonstrate that HEVC achieves the best trade-off between coding efficiency and complexity through its hardware implementation, while the AV1 format excels in coding efficiency through its software implementation, specifically using the libsvt-av1 encoder. Furthermore, we present a real testbed showcasing 360-degree video streaming over a UAV, enabling remote control of the drone via a 5G cellular network.
