Ibrahim Farhat

ALRM: Agentic LLM for Robotic Manipulation

Jan 27, 2026

Abstract:Large Language Models (LLMs) have recently empowered agentic frameworks to exhibit advanced reasoning and planning capabilities. However, their integration in robotic control pipelines remains limited in two aspects: (1) prior LLM-based approaches often lack modular, agentic execution mechanisms, limiting their ability to plan, reflect on outcomes, and revise actions in a closed-loop manner; and (2) existing benchmarks for manipulation tasks focus on low-level control and do not systematically evaluate multistep reasoning and linguistic variation. In this paper, we propose Agentic LLM for Robot Manipulation (ALRM), an LLM-driven agentic framework for robotic manipulation. ALRM integrates policy generation with agentic execution through a ReAct-style reasoning loop, supporting two complementary modes: Code-as-Policy (CaP) for direct executable control code generation, and Tool-as-Policy (TaP) for iterative planning and tool-based action execution. To enable systematic evaluation, we also introduce a novel simulation benchmark comprising 56 tasks across multiple environments, capturing linguistically diverse instructions. Experiments with ten LLMs demonstrate that ALRM provides a scalable, interpretable, and modular approach for bridging natural language reasoning with reliable robotic execution. Results reveal Claude-4.1-Opus as the top closed-source model and Falcon-H1-7B as the top open-source model under CaP.

360-Degree Video Super Resolution and Quality Enhancement Challenge: Methods and Results

Nov 11, 2024

Abstract:Omnidirectional (360-degree) video is rapidly gaining popularity due to advancements in immersive technologies like virtual reality (VR) and extended reality (XR). However, real-time streaming of such videos, especially in live mobile scenarios like unmanned aerial vehicles (UAVs), is challenged by limited bandwidth and strict latency constraints. Traditional methods, such as compression and adaptive resolution, help but often compromise video quality and introduce artifacts that degrade the viewer experience. Additionally, the unique spherical geometry of 360-degree video presents challenges not encountered in traditional 2D video. To address these issues, we initiated the 360-degree Video Super Resolution and Quality Enhancement Challenge. This competition encourages participants to develop efficient machine learning solutions to enhance the quality of low-bitrate compressed 360-degree videos, with two tracks focusing on 2x and 4x super-resolution (SR). In this paper, we outline the challenge framework, detailing the two competition tracks and highlighting the SR solutions proposed by the top-performing models. We assess these models within a unified framework, considering quality enhancement, bitrate gain, and computational efficiency. This challenge aims to drive innovation in real-time 360-degree video streaming, improving the quality and accessibility of immersive visual experiences.

ODVista: An Omnidirectional Video Dataset for super-resolution and Quality Enhancement Tasks

Mar 07, 2024

Abstract:Omnidirectional or 360-degree video is being increasingly deployed, largely due to the latest advancements in immersive virtual reality (VR) and extended reality (XR) technology. However, the adoption of these videos in streaming encounters challenges related to bandwidth and latency, particularly in mobility conditions such as with unmanned aerial vehicles (UAVs). Adaptive resolution and compression aim to preserve quality while maintaining low latency under these constraints, yet downscaling and encoding can still degrade quality and introduce artifacts. Machine learning (ML)-based super-resolution (SR) and quality enhancement techniques offer a promising solution by enhancing detail recovery and reducing compression artifacts. However, current publicly available 360-degree video SR datasets lack compression artifacts, which limits research in this field. To bridge this gap, this paper introduces the omnidirectional video streaming dataset (ODVista), which comprises 200 high-resolution, high-quality videos downscaled and encoded at four bitrate ranges using the high-efficiency video coding (HEVC)/H.265 standard. Evaluations show that the dataset not only features a wide variety of scenes but also spans different levels of content complexity, which is crucial for robust solutions that perform well in real-world scenarios and generalize across diverse visual environments. Additionally, we evaluate the performance of two handcrafted and two ML-based SR models on the validation and testing sets of ODVista, considering both quality enhancement and runtime.

UAV Immersive Video Streaming: A Comprehensive Survey, Benchmarking, and Open Challenges

Oct 31, 2023

Abstract:Over the past decade, the utilization of UAVs has witnessed significant growth, owing to their agility, rapid deployment, and maneuverability. In particular, the use of UAV-mounted 360-degree cameras to capture omnidirectional videos has enabled truly immersive viewing experiences with up to 6DoF. However, achieving this immersive experience necessitates encoding omnidirectional videos in high resolution, leading to increased bitrates. Consequently, new challenges arise in terms of latency, throughput, perceived quality, and energy consumption for real-time streaming of such content. This paper presents a comprehensive survey of research efforts in UAV-based immersive video streaming, benchmarks popular video encoding schemes, and identifies open research challenges. Initially, we review the literature on 360-degree video coding, packaging, and streaming, with a particular focus on standardization efforts to ensure interoperability of immersive video streaming devices and services. Subsequently, we provide a comprehensive review of research efforts focused on optimizing video streaming for time-varying UAV wireless channels. Additionally, we introduce a high-resolution 360-degree video dataset captured from UAVs under different flying conditions. This dataset facilitates the evaluation of complexity and coding efficiency of software and hardware video encoders based on popular video coding standards and formats, including AVC/H.264, HEVC/H.265, VVC/H.266, VP9, and AV1. Our results demonstrate that HEVC achieves the best trade-off between coding efficiency and complexity through its hardware implementation, while AV1 format excels in coding efficiency through its software implementation, specifically using the libsvt-av1 encoder. Furthermore, we present a real testbed showcasing 360-degree video streaming over a UAV, enabling remote control of the drone via a 5G cellular network.

Performance Analysis of Optimized Versatile Video Coding Software Decoders on Embedded Platforms

Jun 30, 2022

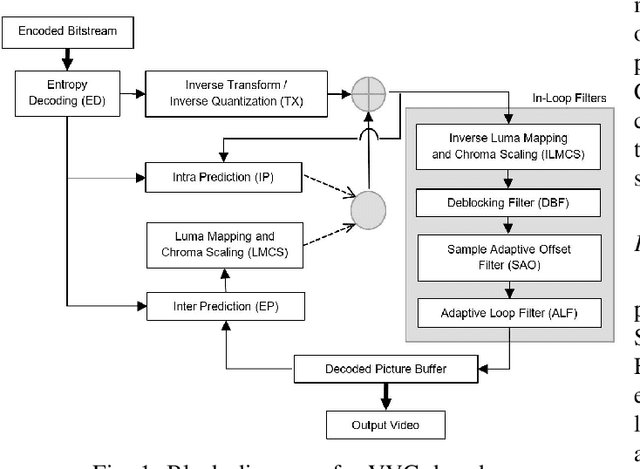

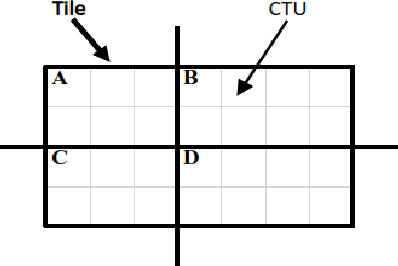

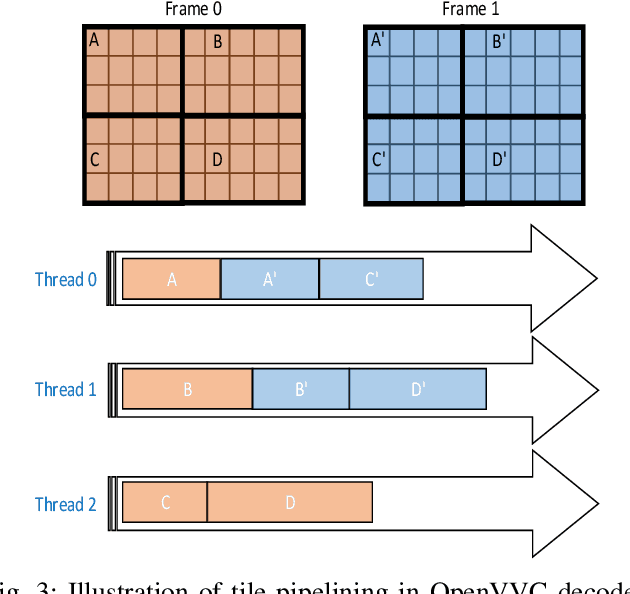

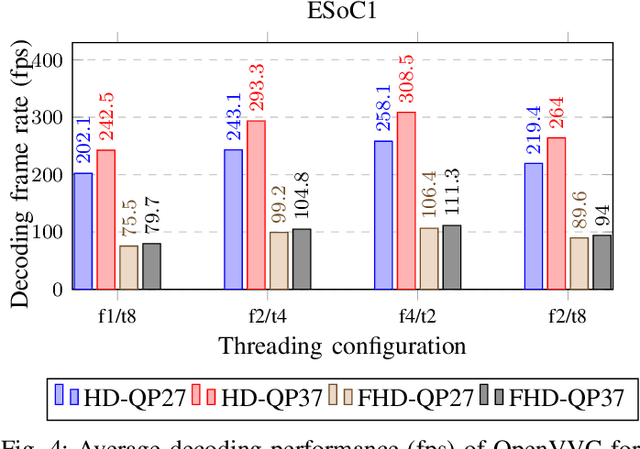

Abstract:In recent years, the global demand for high-resolution videos and the emergence of new multimedia applications have created the need for a new video coding standard. Hence, in July 2020 the Versatile Video Coding (VVC) standard was released, providing up to 50% bit-rate saving for the same video quality compared to its predecessor High Efficiency Video Coding (HEVC). However, this bit-rate saving comes at the cost of high computational complexity, particularly for live applications and on resource-constrained embedded devices. This paper presents two optimized VVC software decoders, named OpenVVC and Versatile Video deCoder (VVdeC), designed for low-resource platforms. They exploit optimization techniques such as data-level parallelism using Single Instruction Multiple Data (SIMD) instructions and functional-level parallelism using frame-, tile- and slice-based parallelism. Furthermore, a comparison of the two decoders in terms of decoding run time, energy and memory consumption is presented, targeting two different resource-constrained embedded devices. The results showed that both decoders achieve real-time decoding of Full High Definition (FHD) resolution on the first platform using 8 cores and real-time High Definition (HD) decoding on the second platform using only 4 cores, with comparable average consumed energy: around 26 J and 15 J for the 8-core and 4-core embedded platforms, respectively. Regarding memory usage, OpenVVC showed better results, with a lower average maximum memory consumption during run time than VVdeC.

Lightweight Hardware Design of the Inverse Transform Module for 4K ASIC VVC Decoders

Jul 24, 2021

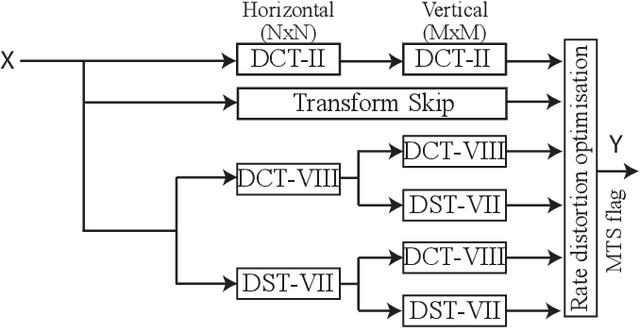

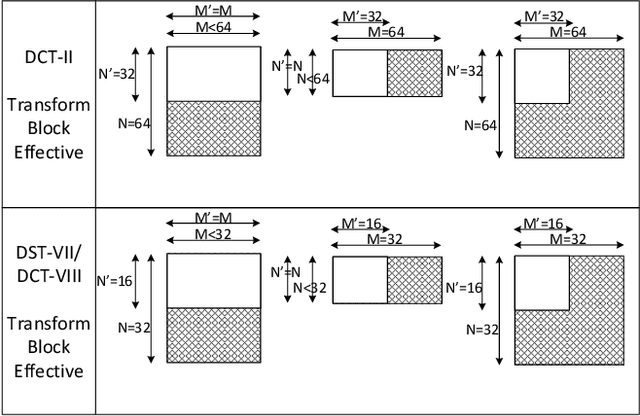

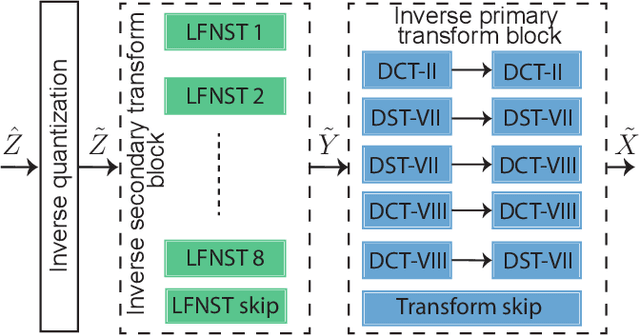

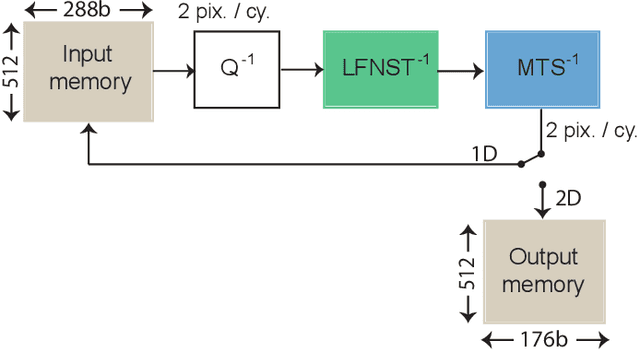

Abstract:Versatile Video Coding (VVC) is the next-generation video coding standard, finalized in July 2020. VVC introduces new coding tools that enhance coding efficiency compared to its predecessor High Efficiency Video Coding (HEVC). These new tools have a significant impact on the complexity of the VVC software decoder, estimated at 2 times the HEVC decoder complexity. In particular, the VVC transform module includes separable and non-separable transforms named Multiple Transform Selection (MTS) and Low Frequency Non-Separable Transform (LFNST) tools, respectively. In this paper, we present an area-efficient hardware architecture of the inverse transform module for a VVC decoder. The proposed design uses a total of 64 regular multipliers in a pipelined architecture targeting ASIC platforms. It is a multi-standard architecture that supports the transform modules of recent MPEG standards including AVC, HEVC and VVC. The architecture leverages all primary and secondary transform optimisations, including butterfly decomposition, coefficient zeroing and the inherent linear relationship between the transforms. The synthesis results show that the proposed method sustains a constant throughput of 1 sample per cycle and a constant latency for all block sizes. The proposed hardware inverse transform module operates at a frequency of 600 MHz, enabling real-time decoding of 4K video at 30 frames per second in 4:2:2 chroma sub-sampling format. The proposed module is integrated in an ASIC UHD decoder targeting energy-aware decoding of VVC videos on consumer devices.
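
The throughput claim in this abstract can be sanity-checked with a short calculation. The sketch below is not from the paper: it assumes a 3840x2160 frame for "4K", assumes every luma and chroma sample passes through the inverse transform once, and ignores pipeline latency and stalls. The function names are hypothetical.

```python
# Back-of-the-envelope check: does 1 sample/cycle at 600 MHz cover
# 4K video at 30 fps in 4:2:2? Frame size is an assumption (UHD).

CLOCK_HZ = 600_000_000      # operating frequency stated in the abstract
SAMPLES_PER_CYCLE = 1       # constant throughput stated in the abstract


def samples_per_frame_422(width: int, height: int) -> int:
    """Samples per frame in 4:2:2: full-resolution luma plus two
    chroma planes that are halved horizontally only."""
    luma = width * height
    chroma = 2 * (width // 2) * height
    return luma + chroma


def required_sample_rate(width: int, height: int, fps: int) -> int:
    """Samples per second the transform stage must sustain."""
    return samples_per_frame_422(width, height) * fps


def utilization(width: int, height: int, fps: int) -> float:
    """Fraction of the available sample rate that the video needs."""
    return required_sample_rate(width, height, fps) / (CLOCK_HZ * SAMPLES_PER_CYCLE)


if __name__ == "__main__":
    rate = required_sample_rate(3840, 2160, 30)
    print(f"required: {rate:,} samples/s")
    print(f"utilization: {utilization(3840, 2160, 30):.1%}")
```

Under these assumptions the stream needs 497,664,000 samples per second, about 83% of the 600 million samples per second available, which is consistent with the real-time figure quoted in the abstract.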
