Mo Wang

From Native Memes to Global Moderation: Cross-Cultural Evaluation of Vision-Language Models for Hateful Meme Detection

Feb 11, 2026

Abstract:Cultural context profoundly shapes how people interpret online content, yet vision-language models (VLMs) remain predominantly trained through Western or English-centric lenses. This limits their fairness and cross-cultural robustness in tasks like hateful meme detection. We introduce a systematic evaluation framework designed to diagnose and quantify the cross-cultural robustness of state-of-the-art VLMs across multilingual meme datasets, analyzing three axes: (i) learning strategy (zero-shot vs. one-shot), (ii) prompting language (native vs. English), and (iii) translation effects on meaning and detection. Results show that the common "translate-then-detect" approach degrades performance, while culturally aligned interventions - native-language prompting and one-shot learning - significantly enhance detection. Our findings reveal systematic convergence toward Western safety norms and provide actionable strategies to mitigate such bias, guiding the design of globally robust multimodal moderation systems.

From Native Memes to Global Moderation: Cross-Cultural Evaluation of Vision-Language Models for Hateful Meme Detection

Feb 07, 2026

Abstract:Cultural context profoundly shapes how people interpret online content, yet vision-language models (VLMs) remain predominantly trained through Western or English-centric lenses. This limits their fairness and cross-cultural robustness in tasks like hateful meme detection. We introduce a systematic evaluation framework designed to diagnose and quantify the cross-cultural robustness of state-of-the-art VLMs across multilingual meme datasets, analyzing three axes: (i) learning strategy (zero-shot vs. one-shot), (ii) prompting language (native vs. English), and (iii) translation effects on meaning and detection. Results show that the common "translate-then-detect" approach degrades performance, while culturally aligned interventions - native-language prompting and one-shot learning - significantly enhance detection. Our findings reveal systematic convergence toward Western safety norms and provide actionable strategies to mitigate such bias, guiding the design of globally robust multimodal moderation systems.

SLIM-Brain: A Data- and Training-Efficient Foundation Model for fMRI Data Analysis

Dec 26, 2025

Abstract:Foundation models are emerging as a powerful paradigm for fMRI analysis, but current approaches face a dual bottleneck of data- and training-efficiency. Atlas-based methods aggregate voxel signals into fixed regions of interest, reducing data dimensionality but discarding fine-grained spatial details, and requiring extremely large cohorts to train effectively as general-purpose foundation models. Atlas-free methods, on the other hand, operate directly on voxel-level information, preserving spatial fidelity but at a prohibitive memory and compute cost that makes large-scale pre-training infeasible. We introduce SLIM-Brain (Sample-efficient, Low-memory fMRI Foundation Model for Human Brain), a new atlas-free foundation model that simultaneously improves both data- and training-efficiency. SLIM-Brain adopts a two-stage adaptive design: (i) a lightweight temporal extractor captures global context across full sequences and ranks data windows by saliency, and (ii) a 4D hierarchical encoder (Hiera-JEPA) learns fine-grained voxel-level representations only from the top-$k$ selected windows, while discarding the roughly 70% of patches that are masked. Extensive experiments across seven public benchmarks show that SLIM-Brain establishes new state-of-the-art performance on diverse tasks, while requiring only 4 thousand pre-training sessions and approximately 30% of the GPU memory of traditional voxel-level methods.
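The first stage described above ranks data windows by saliency and passes only the top-$k$ to the encoder. The selection step alone can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `select_top_k_windows` and the example scores are hypothetical, and the saliency values are assumed to come from some upstream temporal extractor.

```python
def select_top_k_windows(saliency, k):
    """Return the indices of the k highest-saliency windows, in temporal order.

    `saliency` is a hypothetical list of per-window scores; how they are
    computed is outside the scope of this sketch.
    """
    # Rank window indices from most to least salient.
    ranked = sorted(range(len(saliency)), key=lambda i: saliency[i], reverse=True)
    # Keep the top k and restore temporal order for downstream encoding.
    return sorted(ranked[:k])


# Made-up scores for five windows of one fMRI session.
scores = [0.1, 0.9, 0.3, 0.7, 0.2]
selected = select_top_k_windows(scores, k=2)
print(selected)
```

Returning the indices in temporal order is a design choice of this sketch; the abstract does not say how the selected windows are ordered.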

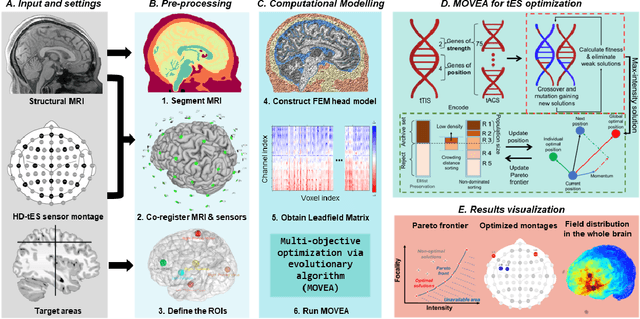

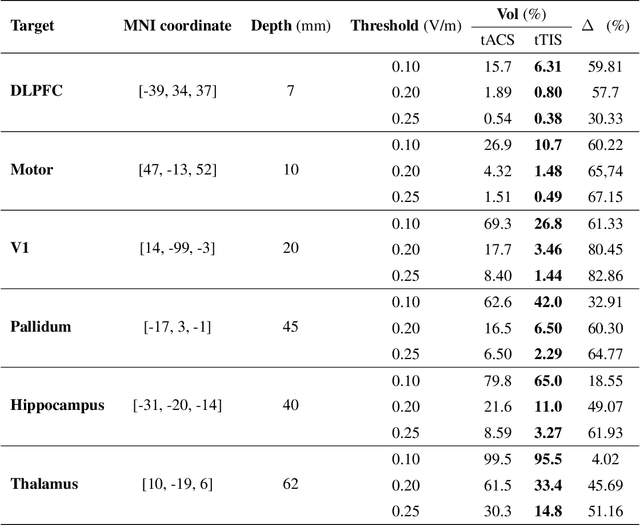

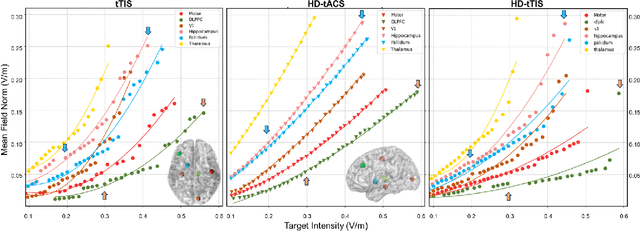

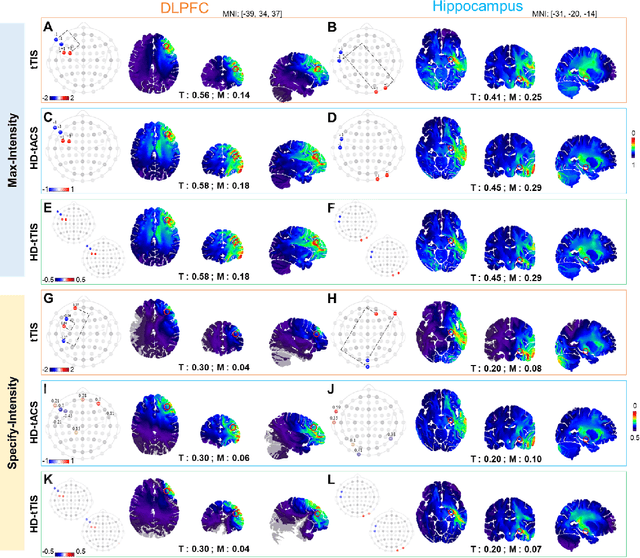

Multi-objective optimization via evolutionary algorithm (MOVEA) for high-definition transcranial electrical stimulation of the human brain

Nov 10, 2022

Abstract:Transcranial temporal interference stimulation (tTIS) has been reported to be effective in stimulating deep brain structures in experimental studies. However, a computational framework for optimizing the tTIS strategy and simulating the impact of tTIS on the brain is still lacking, as previous methods rely on predefined parameters and hardly adapt to additional constraints. Here, we propose a general framework, namely multi-objective optimization via evolutionary algorithm (MOVEA), to solve the nonconvex optimization problem for various stimulation techniques, including tTIS and transcranial alternating current stimulation (tACS). By optimizing the electrode montage in a two-stage structure, MOVEA is compatible with additional constraints (e.g., the number of electrodes, additional avoidance regions) and obtains the Pareto fronts faster. These Pareto fronts consist of a set of optimal solutions under different requirements, suggesting a trade-off relationship between conflicting objectives, such as intensity and focality. Based on MOVEA, we make comprehensive comparisons between tACS and tTIS in terms of intensity, focality and maneuverability for targets of different depths. Our results show that although tTIS can only obtain a relatively low maximum achievable electric field strength (for example, the maximum intensity in the motor area is 0.42 V/m under tTIS versus 0.51 V/m under tACS), it improves focality by reducing the activated volume outside the target by 60%. We further perform ANOVA on the stimulation results of eight subjects with tACS and tTIS. Despite the individual differences in head models, our results suggest that tACS has a greater intensity and tTIS has a higher focality. These findings provide guidance on the choice between tACS and tTIS and indicate a great potential in tTIS-based personalized neuromodulation. Code will be released soon.
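The Pareto front mentioned above is the set of solutions that no other solution beats on every objective at once. A minimal sketch of that filtering step follows, assuming two objectives that are both maximized. It is not the MOVEA algorithm itself, which the abstract describes as evolutionary; the function name `pareto_front` and the (intensity, focality) scores are made up for illustration.

```python
from typing import List, Tuple


def pareto_front(points: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Return the non-dominated points, with both objectives maximized."""
    front = []
    for p in points:
        # p is dominated if some q is at least as good on both objectives
        # and strictly better on at least one.
        dominated = any(
            q[0] >= p[0] and q[1] >= p[1] and (q[0] > p[0] or q[1] > p[1])
            for q in points
        )
        if not dominated:
            front.append(p)
    return front


# Hypothetical (intensity, focality) scores for five candidate montages.
candidates = [(0.51, 0.2), (0.42, 0.8), (0.40, 0.5), (0.30, 0.9), (0.45, 0.1)]
front = pareto_front(candidates)
print(front)
```

Each surviving point gives up some intensity for focality or the reverse, which is the trade-off relationship the abstract refers to.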

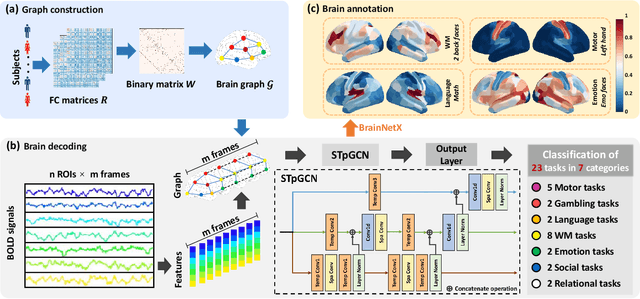

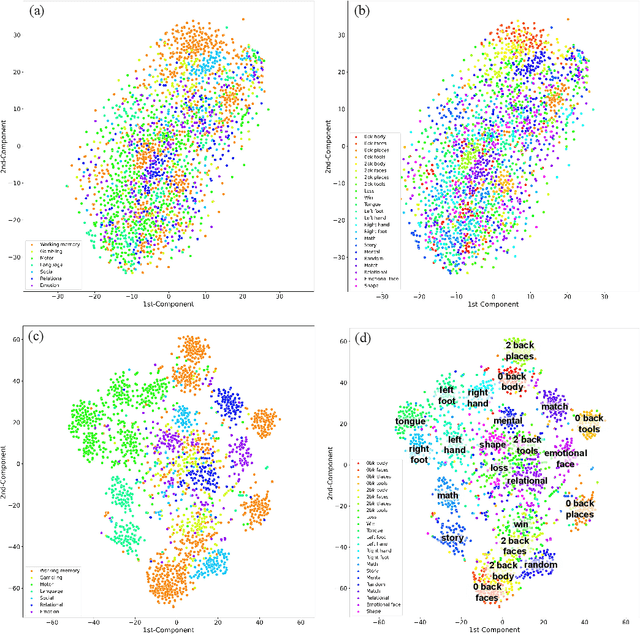

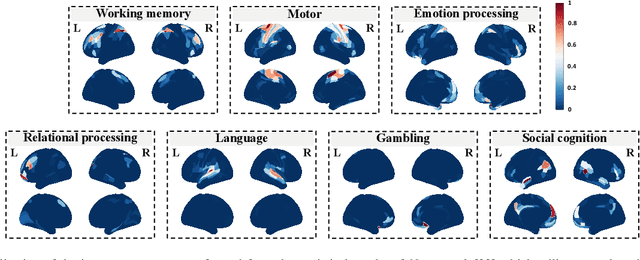

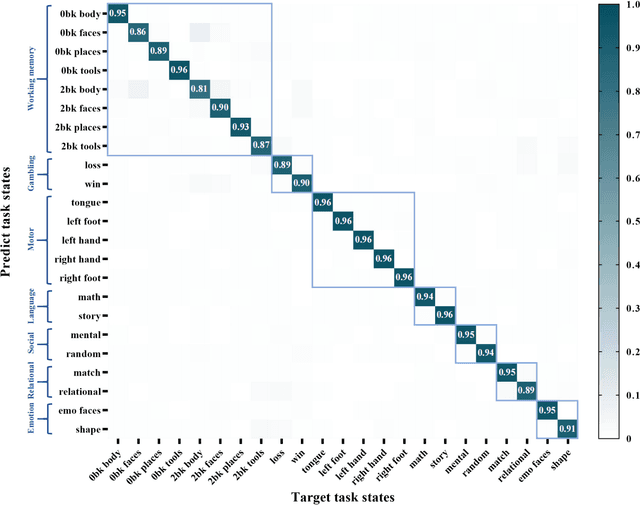

Explainable fMRI-based Brain Decoding via Spatial Temporal-pyramid Graph Convolutional Network

Oct 08, 2022

Abstract:Brain decoding, which aims to identify brain states from neural activity, is important for cognitive neuroscience and neural engineering. However, existing machine learning methods for fMRI-based brain decoding suffer from either low classification performance or poor explainability. Here, we address this issue by proposing a biologically inspired architecture, Spatial Temporal-pyramid Graph Convolutional Network (STpGCN), to capture the spatial-temporal graph representation of functional brain activities. By designing multi-scale spatial-temporal pathways and bottom-up pathways that mimic information processing and temporal integration in the brain, STpGCN is capable of explicitly utilizing the multi-scale temporal dependency of brain activities via graph, thereby achieving high brain decoding performance. Additionally, we propose a sensitivity analysis method called BrainNetX to better explain the decoding results by automatically annotating task-related brain regions from the brain-network standpoint. We conduct extensive experiments on fMRI data under 23 cognitive tasks from the Human Connectome Project (HCP) S1200. The results show that STpGCN significantly improves brain decoding performance compared to competing baseline models; BrainNetX successfully annotates task-relevant brain regions. Post hoc analysis based on these regions further validates that the hierarchical structure in STpGCN significantly contributes to the explainability, robustness and generalization of the model. Our methods not only provide insights into information representation in the brain under multiple cognitive tasks but also indicate a bright future for fMRI-based brain decoding.

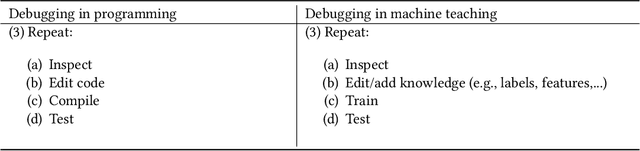

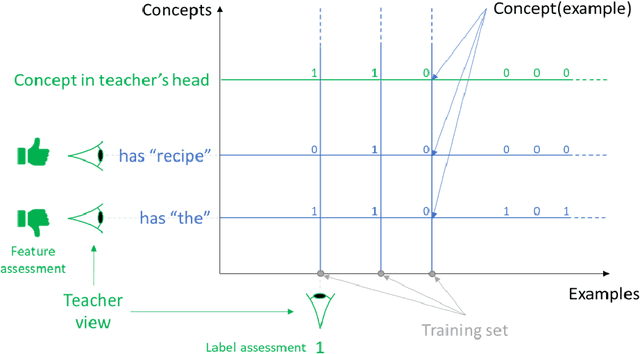

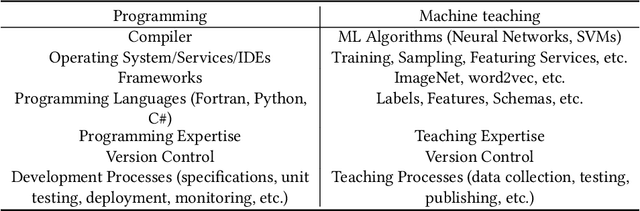

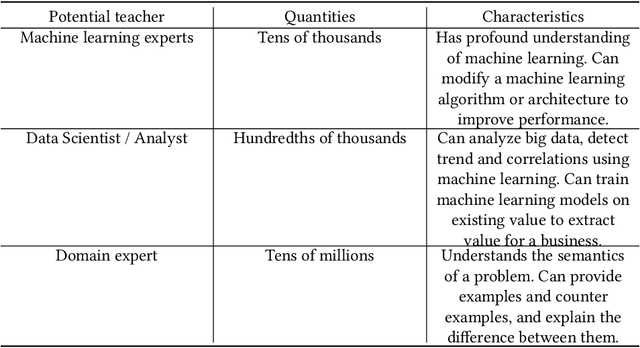

Machine Teaching: A New Paradigm for Building Machine Learning Systems

Aug 11, 2017

Abstract:The current processes for building machine learning systems require practitioners with deep knowledge of machine learning. This significantly limits the number of machine learning systems that can be created and has led to a mismatch between the demand for machine learning systems and the ability of organizations to build them. We believe that in order to meet this growing demand for machine learning systems we must significantly increase the number of individuals that can teach machines. We postulate that we can achieve this goal by making the process of teaching machines easy, fast and above all, universally accessible. While machine learning focuses on creating new algorithms and improving the accuracy of "learners", the machine teaching discipline focuses on the efficacy of the "teachers". Machine teaching as a discipline is a paradigm shift that follows and extends principles of software engineering and programming languages. We put a strong emphasis on the teacher and the teacher's interaction with data, as well as crucial components such as techniques and design principles of interaction and visualization. In this paper, we present our position regarding the discipline of machine teaching and articulate fundamental machine teaching principles. We also describe how, by decoupling knowledge about machine learning algorithms from the process of teaching, we can accelerate innovation and empower millions of new uses for machine learning models.
