Minseok Ryu

Federated Low-Rank Tensor Estimation for Multimodal Image Reconstruction

Feb 04, 2025

Abstract:Low-rank tensor estimation offers a powerful approach to addressing high-dimensional data challenges and can substantially improve solutions to ill-posed inverse problems, such as image reconstruction under noisy or undersampled conditions. Meanwhile, tensor decomposition has gained prominence in federated learning (FL) due to its effectiveness in exploiting latent space structure and its capacity to enhance communication efficiency. In this paper, we present a federated image reconstruction method that applies Tucker decomposition, incorporating joint factorization and randomized sketching to manage large-scale, multimodal data. Our approach avoids reconstructing full-size tensors and supports heterogeneous ranks, allowing clients to select personalized decomposition ranks based on prior knowledge or communication capacity. Numerical results demonstrate that our method achieves superior reconstruction quality and communication compression compared to existing approaches, thereby highlighting its potential for multimodal inverse problems in the FL setting.
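To make the communication-compression claim concrete, the following minimal sketch counts the numbers stored by a plain Tucker representation (one core tensor plus one factor matrix per mode) against the full tensor. The function names, tensor shape, and ranks are hypothetical illustrations. The abstract does not give the paper's actual message format, so joint factorization and randomized sketching are not modeled here.

```python
from math import prod


def tucker_param_count(shape, ranks):
    """Numbers stored by a Tucker representation: core plus factor matrices."""
    core = prod(ranks)  # core tensor of size r1 x r2 x ... x rN
    factors = sum(n * r for n, r in zip(shape, ranks))  # one n_k x r_k matrix per mode
    return core + factors


def compression_ratio(shape, ranks):
    """Full-tensor entry count divided by the Tucker parameter count."""
    return prod(shape) / tucker_param_count(shape, ranks)


# Hypothetical clients sharing one tensor shape but choosing different ranks.
shape = (64, 64, 32)
low_rank_client = tucker_param_count(shape, (8, 8, 4))
high_rank_client = tucker_param_count(shape, (16, 16, 8))
```

Under these assumed sizes, a client that picks smaller ranks stores and sends fewer numbers, which is the kind of trade-off the heterogeneous-rank setting allows each client to make.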

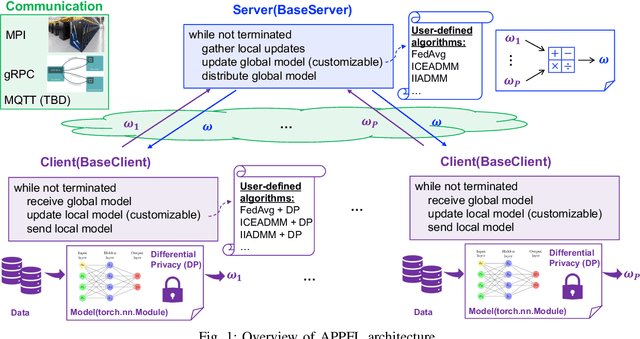

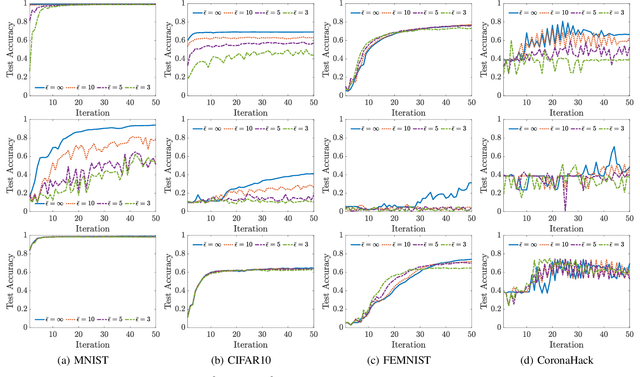

Advances in APPFL: A Comprehensive and Extensible Federated Learning Framework

Sep 17, 2024

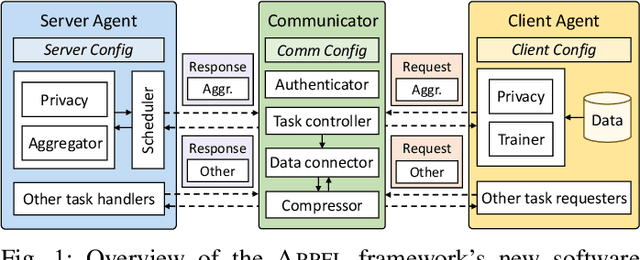

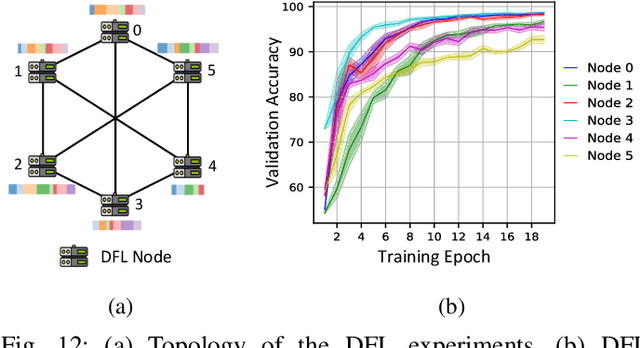

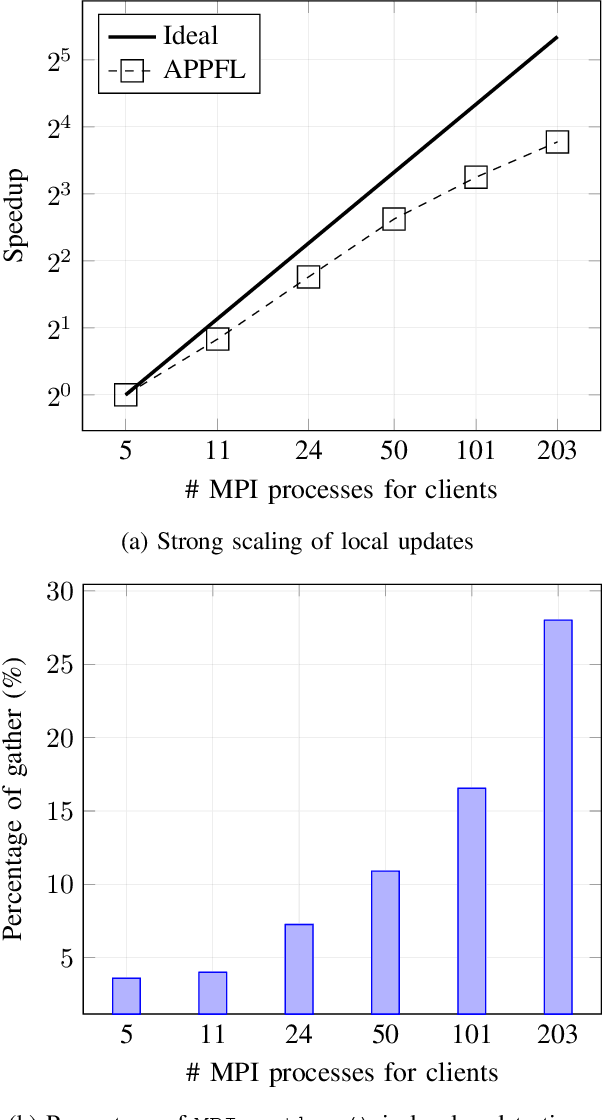

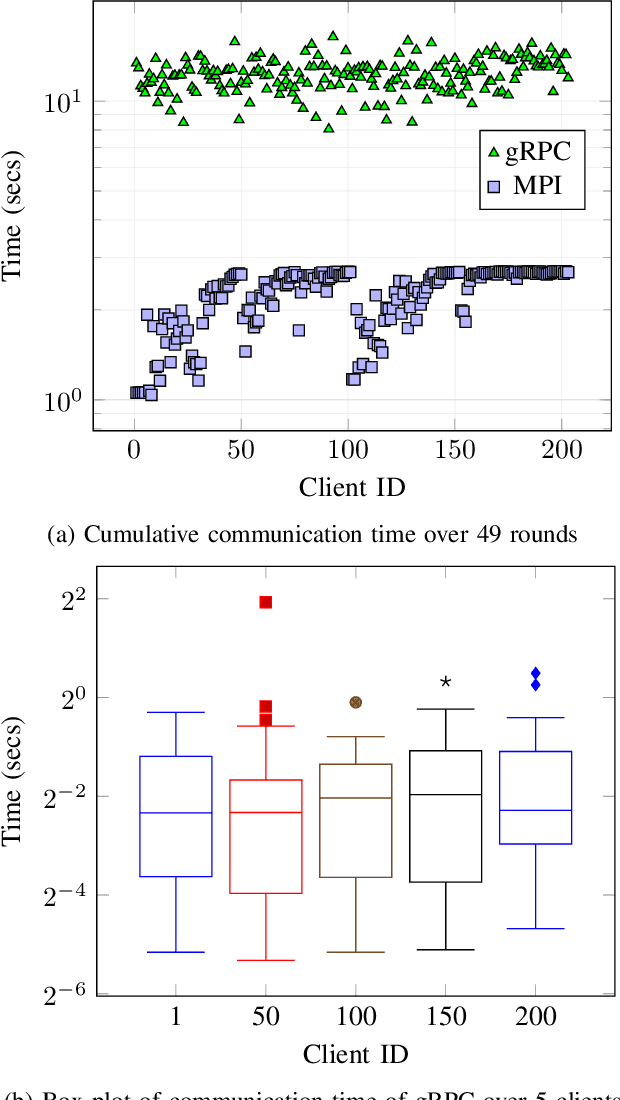

Abstract:Federated learning (FL) is a distributed machine learning paradigm enabling collaborative model training while preserving data privacy. In today's landscape, where most data is proprietary, confidential, and distributed, FL has become a promising approach to leverage such data effectively, particularly in sensitive domains such as medicine and the electric grid. However, heterogeneity and security are key challenges in FL, and most existing FL frameworks either fail to address these challenges adequately or lack the flexibility to incorporate new solutions. To this end, we present the recent advances in developing APPFL, an extensible framework and benchmarking suite for federated learning, which offers comprehensive solutions for heterogeneity and security concerns, as well as user-friendly interfaces for integrating new algorithms or adapting to new applications. We demonstrate the capabilities of APPFL through extensive experiments evaluating various aspects of FL, including communication efficiency, privacy preservation, computational performance, and resource utilization. We further highlight the extensibility of APPFL through case studies in vertical, hierarchical, and decentralized FL. APPFL is open-sourced at https://github.com/APPFL/APPFL.

APPFLx: Providing Privacy-Preserving Cross-Silo Federated Learning as a Service

Aug 17, 2023

Abstract:Cross-silo privacy-preserving federated learning (PPFL) is a powerful tool to collaboratively train robust and generalized machine learning (ML) models without sharing sensitive (e.g., healthcare or financial) local data. To ease and accelerate the adoption of PPFL, we introduce APPFLx, a ready-to-use platform that provides privacy-preserving cross-silo federated learning as a service. APPFLx employs Globus authentication to allow users to easily and securely invite trustworthy collaborators for PPFL, implements several synchronous and asynchronous FL algorithms, streamlines the FL experiment launch process, and enables tracking and visualizing the life cycle of FL experiments, allowing domain experts and ML practitioners to easily orchestrate and evaluate cross-silo FL under one platform. APPFLx is available online at https://appflx.link

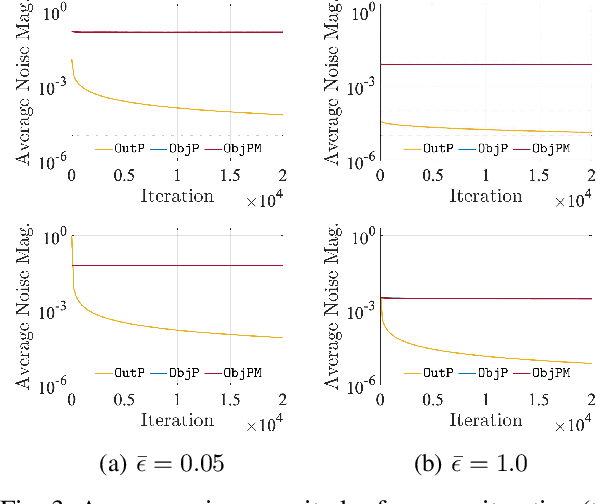

Differentially Private Distributed Convex Optimization

Feb 28, 2023

Abstract:This paper considers distributed optimization (DO) where multiple agents cooperate to minimize a global objective function, expressed as a sum of local objectives, subject to some constraints. In DO, each agent iteratively solves a local optimization model constructed by its own data and communicates some information (e.g., a local solution) with its neighbors until a global solution is obtained. Even though locally stored data are not shared with other agents, it is still possible to reconstruct the data from the information communicated among agents, which could limit the practical usage of DO in applications with sensitive data. To address this issue, we propose a privacy-preserving DO algorithm for constrained convex optimization models, which provides a statistical guarantee of data privacy, known as differential privacy, and a sequence of iterates that converges to an optimal solution in expectation. The proposed algorithm generalizes a linearized alternating direction method of multipliers by introducing a multiple local updates technique to reduce communication costs and incorporating an objective perturbation method in the local optimization models to compute and communicate randomized feasible local solutions that cannot be utilized to reconstruct the local data, thus preserving data privacy. Under the existence of convex constraints, we show that, while both algorithms provide the same level of data privacy, the objective perturbation used in the proposed algorithm can provide better solutions than does the widely adopted output perturbation method that randomizes the local solutions by adding some noise. We present the details of privacy and convergence analyses and numerically demonstrate the effectiveness of the proposed algorithm by applying it in two different applications, namely, distributed control of power flow and federated learning, where data privacy is of concern.

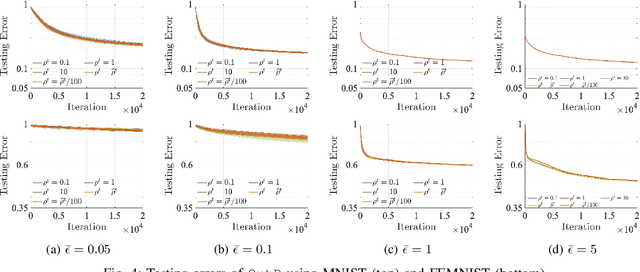

Differentially Private Federated Learning via Inexact ADMM with Multiple Local Updates

Feb 18, 2022

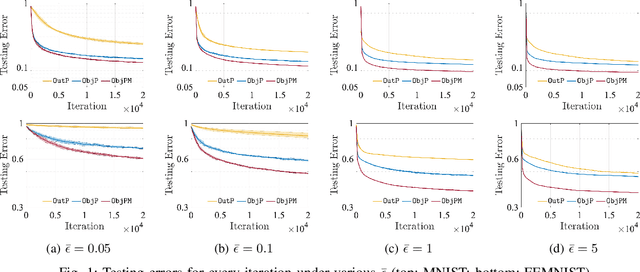

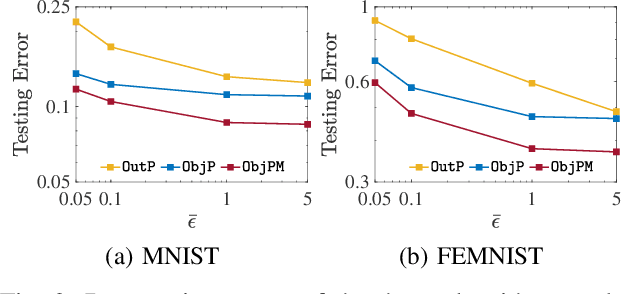

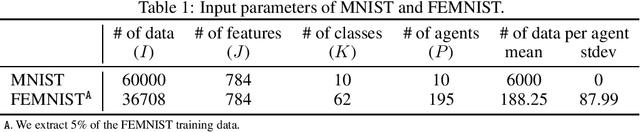

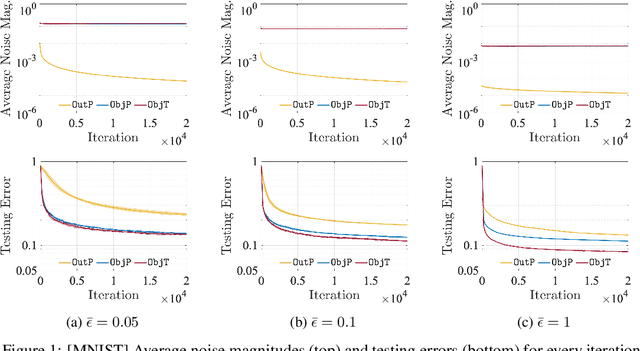

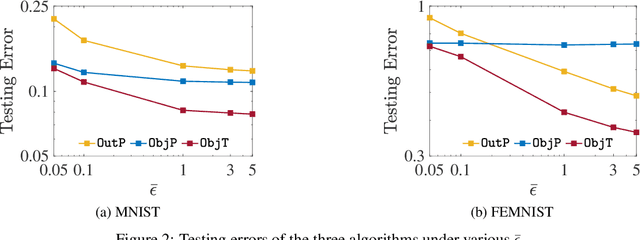

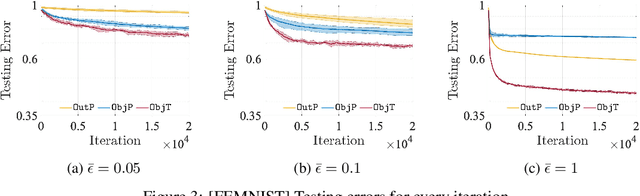

Abstract:Differential privacy (DP) techniques can be applied to the federated learning model to statistically guarantee data privacy against inference attacks on communication among the learning agents. While ensuring strong data privacy, however, DP techniques hinder achieving better learning performance. In this paper we develop a DP inexact alternating direction method of multipliers algorithm with multiple local updates for federated learning, where a sequence of convex subproblems is solved with objective perturbation by random noise generated from a Laplace distribution. We show that our algorithm provides $\bar{\epsilon}$-DP for every iteration, where $\bar{\epsilon}$ is a privacy budget controlled by the user. We also present convergence analyses of the proposed algorithm. Using the MNIST and FEMNIST datasets for image classification, we demonstrate that our algorithm reduces the testing error by at most $31\%$ compared with the existing DP algorithm, while achieving the same level of data privacy. The numerical experiment also shows that our algorithm converges faster than the existing algorithm.
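As a rough illustration of objective perturbation with Laplace noise, the sketch below solves a one-dimensional constrained quadratic subproblem whose objective has a random linear term added. It is not the paper's algorithm: the quadratic objective, the `sensitivity` parameter, the noise scale `sensitivity / epsilon`, and all function names are assumptions made for this example, and the abstract does not state how the noise is calibrated.

```python
import random


def sample_laplace(scale, rng):
    """Draw from Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def perturbed_local_solution(data, epsilon, sensitivity, lower, upper, rng):
    """Minimize 0.5 * (w - mean(data))**2 + xi * w over [lower, upper].

    xi is Laplace noise with scale sensitivity / epsilon (an assumed
    calibration). Because the noise enters the objective, the returned
    solution always satisfies the constraint.
    """
    xi = sample_laplace(sensitivity / epsilon, rng)
    mean = sum(data) / len(data)
    unconstrained = mean - xi  # stationarity condition: w - mean + xi = 0
    # For a 1-D convex quadratic, the constrained minimizer is the projection
    # of the unconstrained minimizer onto the interval.
    return min(max(unconstrained, lower), upper)


rng = random.Random(0)
w_private = perturbed_local_solution(
    [1.0, 2.0, 3.0], epsilon=1.0, sensitivity=1.0, lower=0.0, upper=4.0, rng=rng
)
```

A smaller `epsilon` gives a larger noise scale and therefore a noisier solution, which reflects the privacy versus accuracy trade-off described in the abstract.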

APPFL: Open-Source Software Framework for Privacy-Preserving Federated Learning

Feb 08, 2022

Abstract:Federated learning (FL) enables training models at different sites and updating the weights from the training instead of transferring data to a central location and training as in classical machine learning. The FL capability is especially important to domains such as biomedicine and smart grid, where data may not be shared freely or stored at a central location because of policy challenges. Thanks to the capability of learning from decentralized datasets, FL is now a rapidly growing research field, and numerous FL frameworks have been developed. In this work, we introduce APPFL, the Argonne Privacy-Preserving Federated Learning framework. APPFL allows users to leverage implemented privacy-preserving algorithms, implement new algorithms, and simulate and deploy various FL algorithms with privacy-preserving techniques. The modular framework enables users to customize the components for algorithms, privacy, communication protocols, neural network models, and user data. We also present a new communication-efficient algorithm based on an inexact alternating direction method of multipliers. The algorithm requires significantly less communication between the server and the clients than does the current state of the art. We demonstrate the computational capabilities of APPFL, including differentially private FL on various test datasets and its scalability, by using multiple algorithms and datasets on different computing environments.
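The idea of updating weights instead of moving data can be sketched with a generic size-weighted average of client weights, in the style of federated averaging. This is a hedged illustration only: `federated_average` is a hypothetical helper, not part of the APPFL API, and it does not implement the inexact ADMM-based algorithm mentioned in the abstract.

```python
def federated_average(client_weights, client_sizes):
    """Combine client weight vectors, weighting each by its local sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


# Two hypothetical clients: only weight vectors and sample counts reach the
# server; the raw training data stays at each site.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

With these inputs the second client holds three times as many samples, so the aggregated weights sit closer to its vector.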

Differentially Private Federated Learning via Inexact ADMM

Jun 11, 2021

Abstract:Differential privacy (DP) techniques can be applied to the federated learning model to protect data privacy against inference attacks on communication among the learning agents. DP techniques, however, hinder achieving better learning performance while ensuring strong data privacy. In this paper we develop a DP inexact alternating direction method of multipliers algorithm that solves a sequence of trust-region subproblems with objective perturbation by random noise generated from a Laplace distribution. We show that our algorithm provides $\bar{\epsilon}$-DP for every iteration and an $\mathcal{O}(1/T)$ rate of convergence in expectation, where $T$ is the number of iterations. Using the MNIST and FEMNIST datasets for image classification, we demonstrate that our algorithm reduces the testing error by at most $22\%$ compared with the existing DP algorithm, while achieving the same level of data privacy. The numerical experiment also shows that our algorithm converges faster than the existing algorithm.
