Milan Mossé

Modeling Discrimination with Causal Abstraction

Jan 14, 2025

Abstract: A person is directly racially discriminated against only if her race caused her worse treatment. This implies that race is an attribute sufficiently separable from other attributes to isolate its causal role. But race is embedded in a nexus of social factors that resist isolated treatment. If race is socially constructed, in what sense can it cause worse treatment? Some propose that the perception of race, rather than race itself, causes worse treatment. Others suggest that since causal models require modularity, i.e. the ability to isolate causal effects, attempts to causally model discrimination are misguided. This paper addresses the problem differently. We introduce a framework for reasoning about discrimination, in which race is a high-level abstraction of lower-level features. In this framework, race can be modeled as itself causing worse treatment. Modularity is ensured by allowing assumptions about social construction to be precisely and explicitly stated, via an alignment between race and its constituents. Such assumptions can then be subjected to normative and empirical challenges, which lead to different views of when discrimination occurs. By distinguishing constitutive and causal relations, the abstraction framework pinpoints disagreements in the current literature on modeling discrimination, while preserving a precise causal account of discrimination.

On Probabilistic and Causal Reasoning with Summation Operators

May 05, 2024

Abstract: Ibeling et al. (2023) axiomatize increasingly expressive languages of causation and probability, and Mosse et al. (2024) show that reasoning (specifically the satisfiability problem) in each causal language is as difficult, from a computational complexity perspective, as reasoning in its merely probabilistic or "correlational" counterpart. Introducing a summation operator to capture common devices that appear in applications -- such as the $do$-calculus of Pearl (2009) for causal inference, which makes ample use of marginalization -- van der Zander et al. (2023) partially extend these earlier complexity results to causal and probabilistic languages with marginalization. We complete this extension, fully characterizing the complexity of probabilistic and causal reasoning with summation, demonstrating that these again remain equally difficult. Surprisingly, allowing free variables for random variable values results in a system that is undecidable, so long as the ranges of these random variables are unrestricted. We finally axiomatize these languages featuring marginalization (or more generally summation), resolving open questions posed by Ibeling et al. (2023).

Social Choice for AI Alignment: Dealing with Diverse Human Feedback

Apr 16, 2024

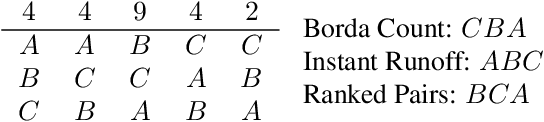

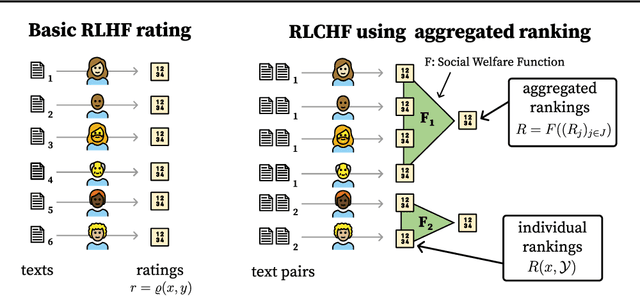

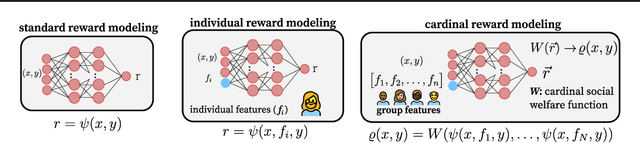

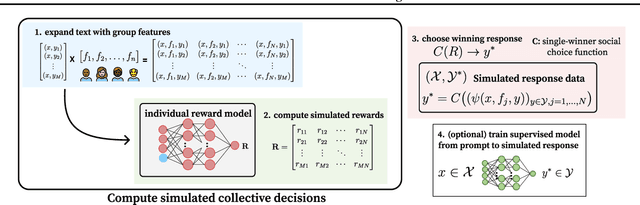

Abstract: Foundation models such as GPT-4 are fine-tuned to avoid unsafe or otherwise problematic behavior, so that, for example, they refuse to comply with requests for help with committing crimes or with producing racist text. One approach to fine-tuning, called reinforcement learning from human feedback, learns from humans' expressed preferences over multiple outputs. Another approach is constitutional AI, in which the input from humans is a list of high-level principles. But how do we deal with potentially diverging input from humans? How can we aggregate the input into consistent data about "collective" preferences or otherwise use it to make collective choices about model behavior? In this paper, we argue that the field of social choice is well positioned to address these questions, and we discuss ways forward for this agenda, drawing on discussions in a recent workshop on Social Choice for AI Ethics and Safety held in Berkeley, CA, USA in December 2023.
