Jobst Heitzig

Model-Based Soft Maximization of Suitable Metrics of Long-Term Human Power

Jul 31, 2025

Abstract: Power is a key concept in AI safety: power-seeking as an instrumental goal, sudden or gradual disempowerment of humans, power balance in human-AI interaction and international AI governance. At the same time, power as the ability to pursue diverse goals is essential for wellbeing. This paper explores the idea of promoting both safety and wellbeing by forcing AI agents explicitly to empower humans and to manage the power balance between humans and AI agents in a desirable way. Using a principled, partially axiomatic approach, we design a parametrizable and decomposable objective function that represents an inequality- and risk-averse long-term aggregate of human power. It takes into account humans' bounded rationality and social norms, and, crucially, considers a wide variety of possible human goals. We derive algorithms for computing that metric by backward induction or approximating it via a form of multi-agent reinforcement learning from a given world model. We exemplify the consequences of (softly) maximizing this metric in a variety of paradigmatic situations and describe what instrumental sub-goals it will likely imply. Our cautious assessment is that softly maximizing suitable aggregate metrics of human power might constitute a beneficial objective for agentic AI systems that is safer than direct utility-based objectives.
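The abstract does not say how the "soft" maximization is carried out. As a rough illustration only, the sketch below assumes it can be read as a Boltzmann (softmax) policy over per-action scores; the function name `soft_maximize`, the inverse-temperature parameter `beta`, and the example scores are hypothetical and not taken from the paper.

```python
import math

def soft_maximize(scores, beta):
    """Return action probabilities proportional to exp(beta * score).

    scores: dict mapping action -> score of a (hypothetical) human-power metric.
    beta:   inverse temperature; 0 gives a uniform policy, large values
            approach hard maximization. Both are assumptions of this sketch.
    """
    top = max(scores.values())  # subtract the maximum for numerical stability
    weights = {a: math.exp(beta * (s - top)) for a, s in scores.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}

if __name__ == "__main__":
    example_scores = {"wait": 1.0, "assist": 2.0, "take_over": 3.0}
    for beta in (0.0, 1.0, 10.0):
        print(beta, soft_maximize(example_scores, beta))
```

Lower values of `beta` keep probability mass on lower-scoring actions, which is one simple way to avoid committing fully to whatever action the metric happens to rank highest.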

Non-maximizing policies that fulfill multi-criterion aspirations in expectation

Aug 08, 2024

Abstract: In dynamic programming and reinforcement learning, the policy for the sequential decision making of an agent in a stochastic environment is usually determined by expressing the goal as a scalar reward function and seeking a policy that maximizes the expected total reward. However, many goals that humans care about naturally concern multiple aspects of the world, and it may not be obvious how to condense those into a single reward function. Furthermore, maximization suffers from specification gaming, where the obtained policy achieves a high expected total reward in an unintended way, often taking extreme or nonsensical actions. Here we consider finite acyclic Markov Decision Processes with multiple distinct evaluation metrics, which do not necessarily represent quantities that the user wants to be maximized. We assume the task of the agent is to ensure that the vector of expected totals of the evaluation metrics falls into some given convex set, called the aspiration set. Our algorithm guarantees that this task is fulfilled by using simplices to approximate feasibility sets and propagate aspirations forward while ensuring they remain feasible. It has complexity linear in the number of possible state-action-successor triples and polynomial in the number of evaluation metrics. Moreover, the explicitly non-maximizing nature of the chosen policy and goals yields additional degrees of freedom, which can be used to apply heuristic safety criteria to the choice of actions. We discuss several such safety criteria that aim to steer the agent towards more conservative behavior.
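To make the idea of fulfilling an aspiration in expectation concrete, here is a minimal sketch of a heavily simplified case: a single decision step and a single scalar metric, where the aspiration is one target value instead of a convex set. It is not the paper's simplex-based algorithm; the function name `aspiration_policy` and the example values are hypothetical.

```python
def aspiration_policy(q_values, aspiration):
    """Mix two actions so that the expected total equals `aspiration`.

    q_values:   dict mapping action -> expected total of the metric when
                taking that action (hypothetical inputs for this sketch).
    aspiration: target expected total; must lie between the smallest and
                largest value in q_values to be feasible.
    """
    below = [a for a in q_values if q_values[a] <= aspiration]
    above = [a for a in q_values if q_values[a] >= aspiration]
    if not below or not above:
        raise ValueError("aspiration is not feasible from this state")
    # Pick the two actions that bracket the aspiration most tightly,
    # so that extreme actions are avoided where possible.
    a_lo = max(below, key=q_values.get)
    a_hi = min(above, key=q_values.get)
    lo, hi = q_values[a_lo], q_values[a_hi]
    if hi == lo:
        return {a_lo: 1.0}  # one action already meets the aspiration exactly
    p_hi = (aspiration - lo) / (hi - lo)
    return {a_lo: 1.0 - p_hi, a_hi: p_hi}

if __name__ == "__main__":
    q = {"idle": 0.0, "moderate": 4.0, "extreme": 10.0}
    probs = aspiration_policy(q, aspiration=3.0)
    expected = sum(p * q[a] for a, p in probs.items())
    print(probs, expected)
```

In this toy case the maximizing action is never selected, because two less extreme actions already bracket the target. Choosing among several feasible mixtures is the kind of extra freedom that the abstract suggests using for safety criteria.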

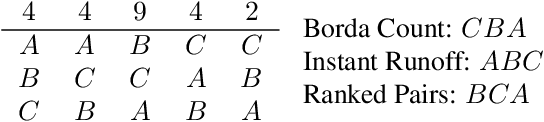

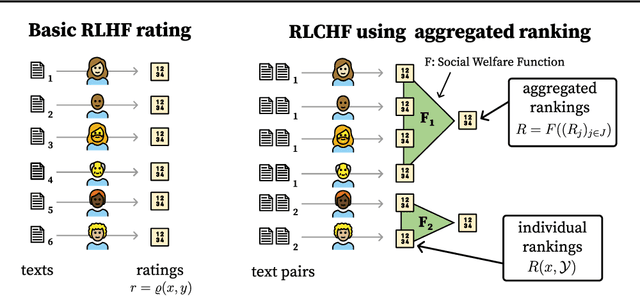

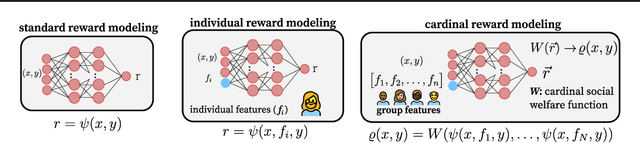

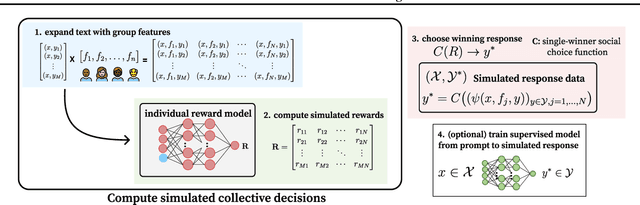

Social Choice for AI Alignment: Dealing with Diverse Human Feedback

Apr 16, 2024

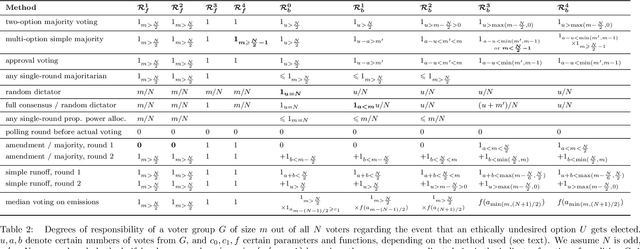

Abstract: Foundation models such as GPT-4 are fine-tuned to avoid unsafe or otherwise problematic behavior, so that, for example, they refuse to comply with requests for help with committing crimes or with producing racist text. One approach to fine-tuning, called reinforcement learning from human feedback, learns from humans' expressed preferences over multiple outputs. Another approach is constitutional AI, in which the input from humans is a list of high-level principles. But how do we deal with potentially diverging input from humans? How can we aggregate the input into consistent data about "collective" preferences or otherwise use it to make collective choices about model behavior? In this paper, we argue that the field of social choice is well positioned to address these questions, and we discuss ways forward for this agenda, drawing on discussions in a recent workshop on Social Choice for AI Ethics and Safety held in Berkeley, CA, USA in December 2023.

Improving International Climate Policy via Mutually Conditional Binding Commitments

Jul 26, 2023

Abstract: The Paris Agreement, considered a significant milestone in climate negotiations, has faced challenges in effectively addressing climate change due to the unconditional nature of most Nationally Determined Contributions (NDCs). This has resulted in a prevalence of free-riding behavior among major polluters and a lack of concrete conditionality in NDCs. To address this issue, we propose the implementation of a decentralized, bottom-up approach called the Conditional Commitment Mechanism. This mechanism, inspired by the National Popular Vote Interstate Compact, offers flexibility and incentives for early adopters, aiming to formalize conditional cooperation in international climate policy. In this paper, we provide an overview of the mechanism, its performance in the AI4ClimateCooperation challenge, and discuss potential real-world implementation aspects. Prior knowledge of the climate mitigation collective action problem, basic economic principles, and game theory concepts is assumed.

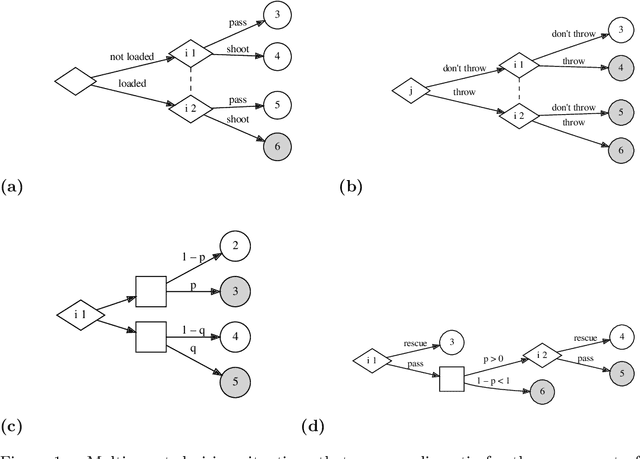

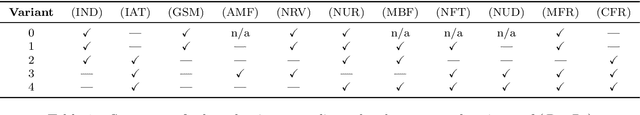

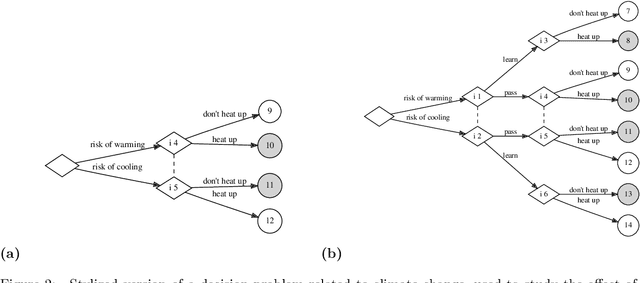

Degrees of individual and groupwise backward and forward responsibility in extensive-form games with ambiguity, and their application to social choice problems

Jul 09, 2020

Abstract: Many real-world situations of ethical relevance, in particular those of large-scale social choice such as mitigating climate change, involve not only many agents whose decisions interact in complicated ways, but also various forms of uncertainty, including quantifiable risk and unquantifiable ambiguity. In such problems, an assessment of individual and groupwise moral responsibility for ethically undesired outcomes, or of the responsibility to avoid such outcomes, is challenging and prone to the risk of under- or overdetermination of responsibility. In contrast to existing approaches based on strict causation or certain deontic logics that focus on a binary classification of 'responsible' vs. 'not responsible', we here present several different quantitative responsibility metrics that assess responsibility degrees in units of probability. For this, we use a framework based on an adapted version of extensive-form game trees and an axiomatic approach that specifies a number of potentially desirable properties of such metrics, and then test the developed candidate metrics by their application to a number of paradigmatic social choice situations. We find that while most properties one might desire of such responsibility metrics can be fulfilled by some variant, an optimal metric that clearly outperforms others has yet to be found.

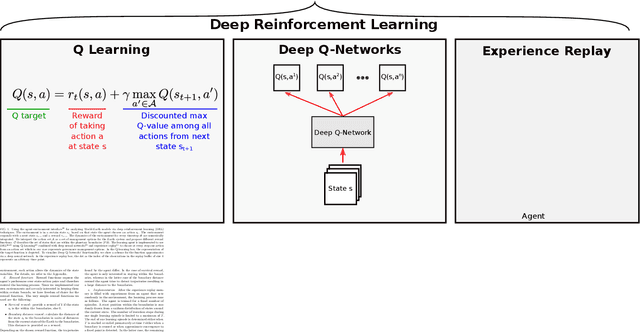

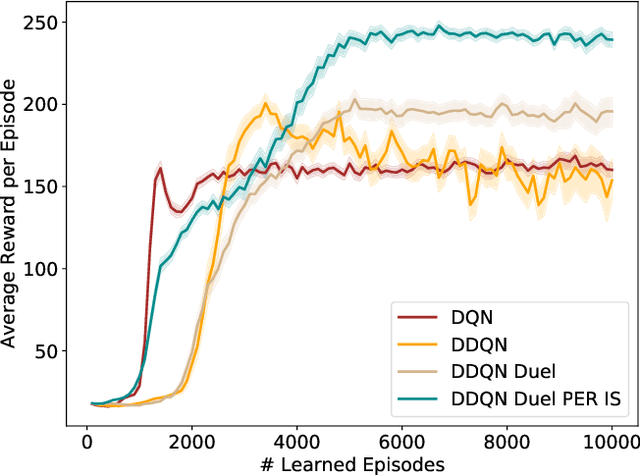

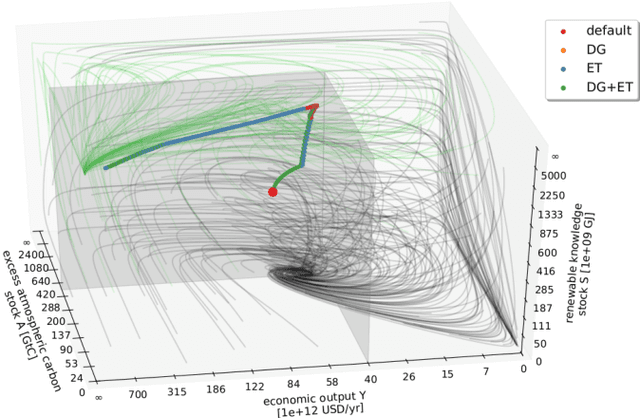

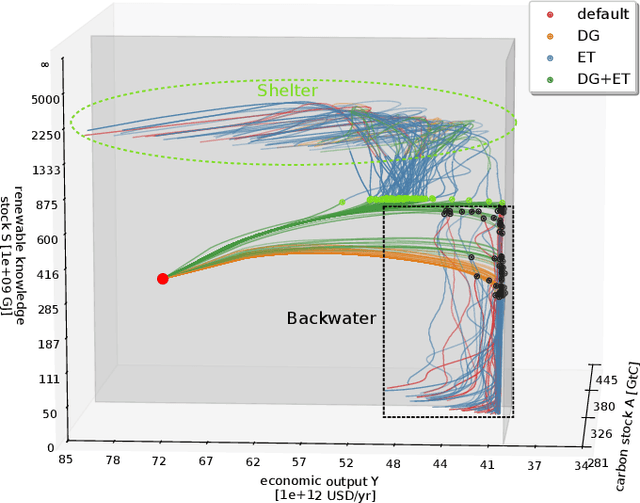

Deep reinforcement learning in World-Earth system models to discover sustainable management strategies

Aug 15, 2019

Abstract: Increasingly complex, non-linear World-Earth system models are used for describing the dynamics of the biophysical Earth system and the socio-economic and socio-cultural World of human societies and their interactions. Identifying pathways towards a sustainable future in these models for informing policy makers and the wider public, e.g. pathways leading to a robust mitigation of dangerous anthropogenic climate change, is a challenging and widely investigated task in the field of climate research and broader Earth system science. This problem is particularly difficult when constraints on avoiding transgressions of planetary boundaries and social foundations need to be taken into account. In this work, we propose to combine recently developed machine learning techniques, namely deep reinforcement learning (DRL), with classical analysis of trajectories in the World-Earth system. Based on the concept of the agent-environment interface, we develop an agent that is generally able to act and learn in variable manageable environment models of the Earth system. We demonstrate the potential of our framework by applying DRL algorithms to two stylized World-Earth system models. Conceptually, we thereby explore the feasibility of finding novel global governance policies leading into a safe and just operating space constrained by certain planetary and socio-economic boundaries. The artificially intelligent agent learns that the timing of a specific mix of taxing carbon emissions and subsidies on renewables is of crucial relevance for finding World-Earth system trajectories that are sustainable in the long term.

Quantifying Causal Coupling Strength: A Lag-specific Measure For Multivariate Time Series Related To Transfer Entropy

Nov 21, 2012

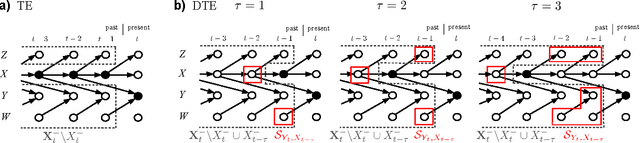

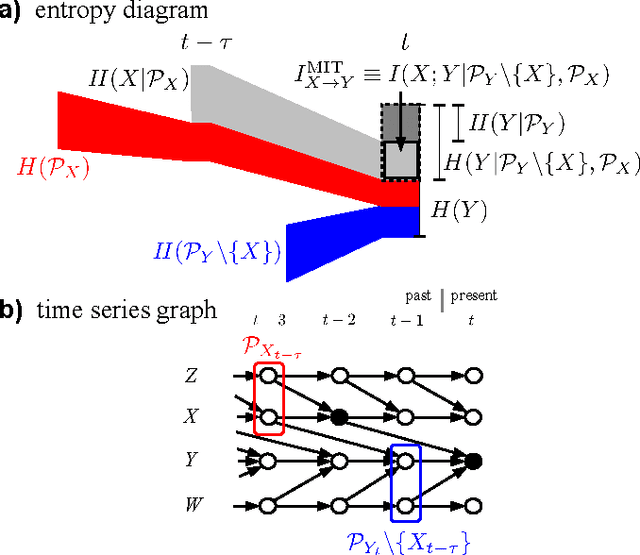

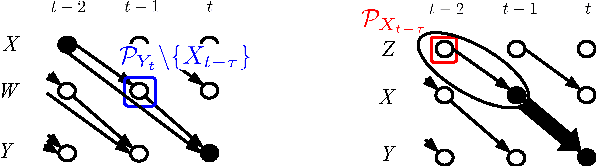

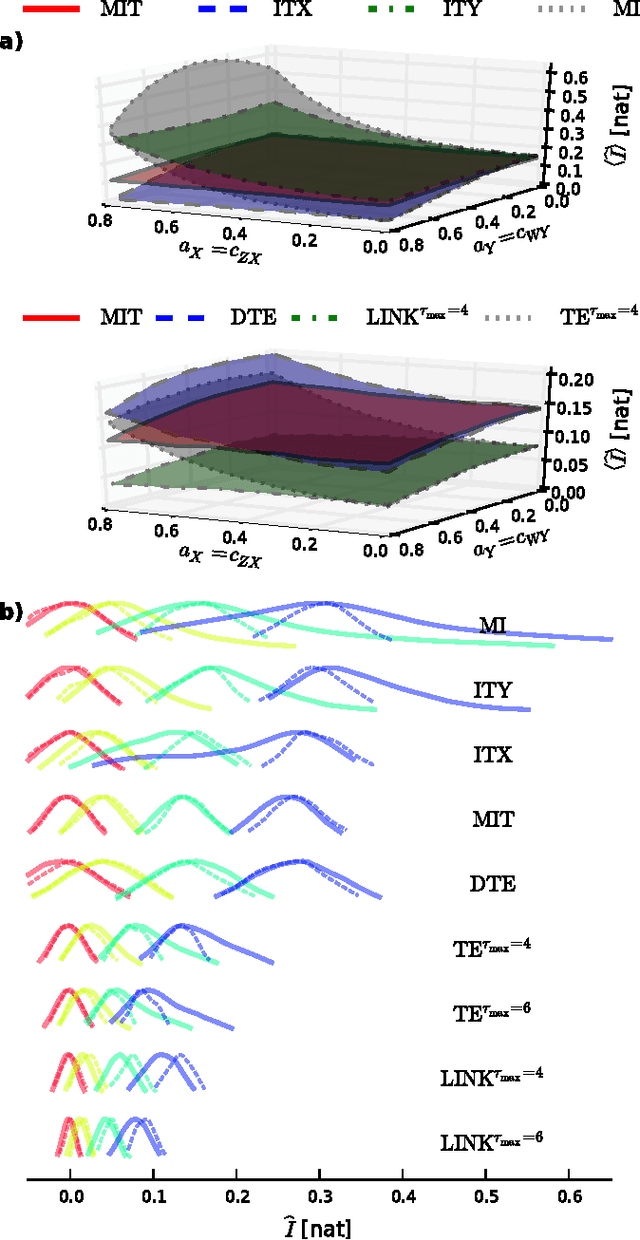

Abstract: While it is an important problem to identify the existence of causal associations between two components of a multivariate time series, a topic addressed in Runge et al. (2012), it is even more important to assess the strength of their association in a meaningful way. In the present article we focus on the problem of defining a meaningful coupling strength using information-theoretic measures and demonstrate the shortcomings of the well-known mutual information and transfer entropy. Instead, we propose a certain time-delayed conditional mutual information, the momentary information transfer (MIT), as a measure of association that is general, causal and lag-specific, reflects a well interpretable notion of coupling strength and is practically computable. MIT is based on the fundamental concept of source entropy, which we utilize to yield a notion of coupling strength that is, compared to mutual information and transfer entropy, well interpretable, in that for many cases it solely depends on the interaction of the two components at a certain lag. In particular, MIT is thus in many cases able to exclude the misleading influence of autodependency within a process in an information-theoretic way. We formalize and prove this idea analytically and numerically for a general class of nonlinear stochastic processes and illustrate the potential of MIT on climatological data.

* 15 pages, 6 figures; accepted for publication in Physical Review E
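
For readers unfamiliar with the term, the generic form of a time-delayed conditional mutual information between a lagged source $X_{t-\tau}$ and a target $Y_t$ can be written as below. The conditioning set $Z$ is left abstract here as an assumption of this sketch, since the abstract does not state which variables MIT conditions on.

$$
I(X_{t-\tau};\, Y_t \mid Z) \;=\; H(Y_t \mid Z) \;-\; H(Y_t \mid X_{t-\tau},\, Z)
$$

Here $H(\cdot \mid \cdot)$ denotes conditional Shannon entropy and $\tau > 0$ is the lag. The quantity is zero exactly when $Y_t$ is conditionally independent of $X_{t-\tau}$ given $Z$.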
