Mikolaj Jankowski

Adaptive Early Exiting for Collaborative Inference over Noisy Wireless Channels

Nov 29, 2023

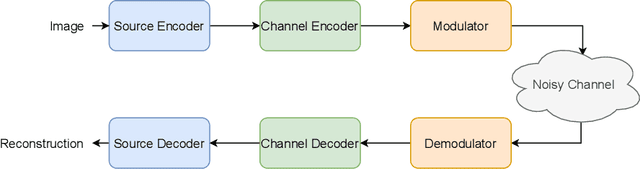

Abstract: Collaborative inference systems are one of the emerging solutions for deploying deep neural networks (DNNs) at the wireless network edge. Their main idea is to divide a DNN into two parts, where the first is shallow enough to be reliably executed at edge devices of limited computational power, while the second part is executed at an edge server with higher computational capabilities. The main advantage of such systems is that the input of the DNN gets compressed as the subsequent layers of the shallow part extract only the information necessary for the task. As a result, significant communication savings can be achieved compared to transmitting raw input samples. In this work, we study early exiting in the context of collaborative inference, which allows obtaining inference results at the edge device for certain samples, without the need to transmit the partially processed data to the edge server at all, leading to further communication savings. The central part of our system is the transmission-decision (TD) mechanism, which, given the information from the early exit and the wireless channel conditions, decides whether to keep the early exit prediction or transmit the data to the edge server for further processing. In this paper, we evaluate various TD mechanisms and show experimentally that, for an image classification task over the wireless edge, proper utilization of early exits can provide both performance gains and significant communication savings.
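
The abstract does not spell out any particular TD mechanism, so the following is only a minimal sketch of what one such rule could look like, not the rule evaluated in the paper. It assumes the early exit outputs a softmax probability vector and that the device knows the channel SNR in dB; the function names, the entropy criterion, and both threshold values are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def should_transmit(probs, snr_db, entropy_threshold=0.5, min_snr_db=0.0):
    """Hypothetical TD rule (an assumption, not the paper's mechanism).

    Keep the early-exit prediction when the channel is too poor or when the
    early exit is already confident; otherwise transmit to the edge server.
    """
    if snr_db < min_snr_db:
        # Channel too noisy: offloading is unlikely to help, stay local.
        return False
    # Offload only the samples the early exit is uncertain about.
    return entropy(probs) > entropy_threshold


confident = [0.97, 0.01, 0.01, 0.01]
uncertain = [0.4, 0.3, 0.2, 0.1]

print(should_transmit(confident, snr_db=10.0))
print(should_transmit(uncertain, snr_db=10.0))
print(should_transmit(uncertain, snr_db=-5.0))
```

In this sketch, raising `entropy_threshold` keeps more predictions at the device and so lowers communication cost, at the risk of accepting more uncertain early-exit results.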

DeepJSCC-Q: Constellation Constrained Deep Joint Source-Channel Coding

Jun 16, 2022

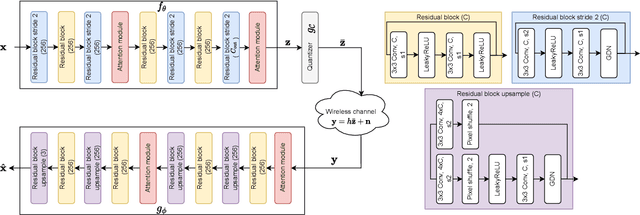

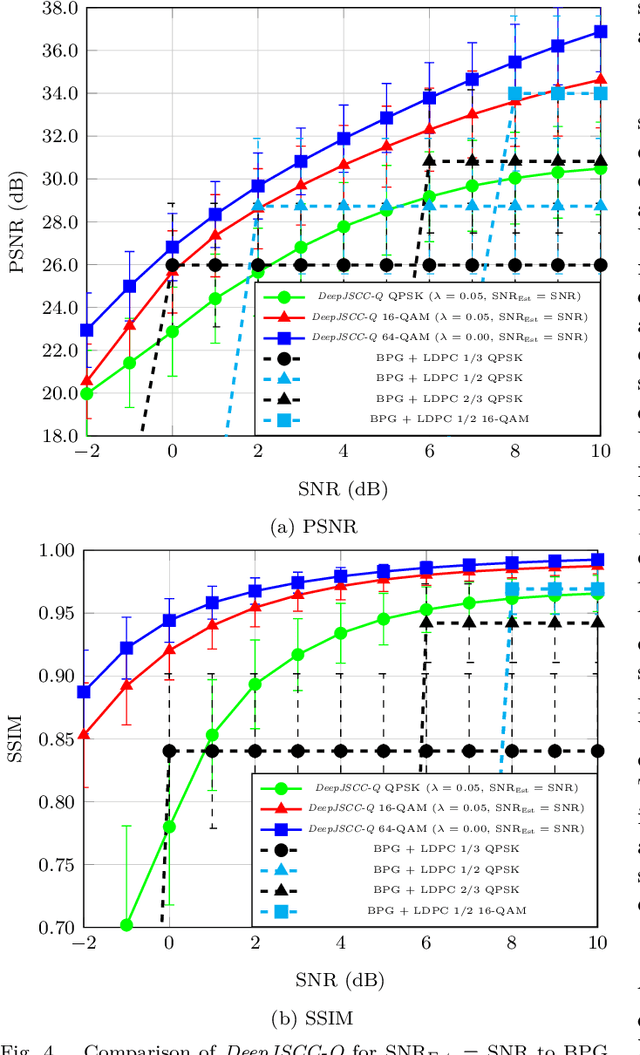

Abstract: Recent works have shown that modern machine learning techniques can provide an alternative approach to the long-standing joint source-channel coding (JSCC) problem. Very promising initial results, superior to popular digital schemes that utilize separate source and channel codes, have been demonstrated for wireless image and video transmission using deep neural networks (DNNs). However, end-to-end training of such schemes requires a differentiable channel input representation; hence, prior works have assumed that any complex value can be transmitted over the channel. This can prevent the application of these codes in scenarios where the hardware or protocol can only admit certain sets of channel inputs, prescribed by a digital constellation. Herein, we propose DeepJSCC-Q, an end-to-end optimized JSCC solution for wireless image transmission using a finite channel input alphabet. We show that DeepJSCC-Q can achieve similar performance to prior works that allow any complex-valued channel input, especially when high modulation orders are available, and that the performance asymptotically approaches that of unconstrained channel input as the modulation order increases. Importantly, DeepJSCC-Q preserves the graceful degradation of image quality in unpredictable channel conditions, a desirable property for deployment in mobile systems with rapidly changing channel conditions.
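
To make the constraint concrete, the sketch below projects arbitrary complex values onto the nearest point of a square QAM constellation. It illustrates only what a finite channel input alphabet means. It is not the DeepJSCC-Q training procedure: a hard nearest-point projection like this one is not differentiable on its own. The helper names `qam_constellation` and `quantize` are hypothetical.

```python
import itertools


def qam_constellation(order):
    """Square QAM constellation normalized to unit average power."""
    side = int(round(order ** 0.5))
    if side * side != order:
        raise ValueError("order must be a perfect square, e.g. 4, 16, 64")
    # Amplitude levels per axis, e.g. [-3, -1, 1, 3] for 16-QAM.
    levels = [2 * i - (side - 1) for i in range(side)]
    points = [complex(re, im) for re, im in itertools.product(levels, levels)]
    avg_power = sum(abs(p) ** 2 for p in points) / len(points)
    scale = avg_power ** 0.5
    return [p / scale for p in points]


def quantize(symbols, constellation):
    """Map each complex symbol to its nearest constellation point."""
    return [min(constellation, key=lambda c: abs(s - c)) for s in symbols]


qpsk = qam_constellation(4)
qam16 = qam_constellation(16)
continuous = [0.5 + 0.2j, -0.1 - 0.9j]

print(quantize(continuous, qpsk))
print(quantize(continuous, qam16))
```

With a higher modulation order the constellation grid is denser, so the projected symbols lie closer to the continuous values, which matches the trend the abstract describes for high modulation orders.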

DeepJSCC-Q: Channel Input Constrained Deep Joint Source-Channel Coding

Nov 25, 2021

Abstract: Recent works have shown that the task of wireless transmission of images can be learned with the use of machine learning techniques. Very promising results in end-to-end image quality, superior to popular digital schemes that utilize source and channel coding separation, have been demonstrated through the training of an autoencoder, with a non-trainable channel layer in the middle. However, these methods assume that any complex value can be transmitted over the channel, which can prevent the application of the algorithm in scenarios where the hardware or protocol can only admit certain sets of channel inputs, such as the use of a digital constellation. Herein, we propose DeepJSCC-Q, an end-to-end optimized joint source-channel coding scheme for wireless image transmission, which is able to operate with a fixed channel input alphabet. We show that DeepJSCC-Q can achieve similar performance to models that use continuous-valued channel input. Importantly, it preserves the graceful degradation of image quality observed in prior work when channel conditions worsen, making DeepJSCC-Q much more attractive for deployment in practical systems.

AirNet: Neural Network Transmission over the Air

May 26, 2021

Abstract: State-of-the-art performance for many emerging edge applications is achieved by deep neural networks (DNNs). Often, these DNNs are location- and time-sensitive, and the parameters of a specific DNN must be delivered from an edge server to the edge device rapidly and efficiently to carry out time-sensitive inference tasks. We introduce AirNet, a novel training and analog transmission method that allows efficient wireless delivery of DNNs. We first train the DNN with noise injection to counter the wireless channel noise. We also employ pruning to reduce the channel bandwidth necessary for transmission, and perform knowledge distillation from a larger model to achieve satisfactory performance, despite the channel perturbations. We show that AirNet achieves significantly higher test accuracy compared to digital alternatives under the same bandwidth and power constraints. It also exhibits graceful degradation with channel quality, which reduces the requirement for accurate channel estimation.

A Novel Look at LIDAR-aided Data-driven mmWave Beam Selection

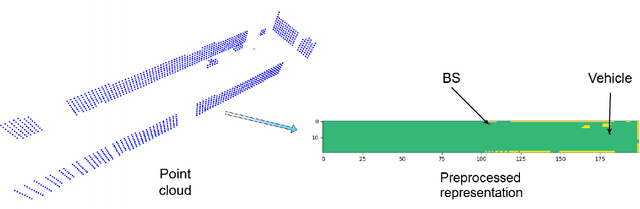

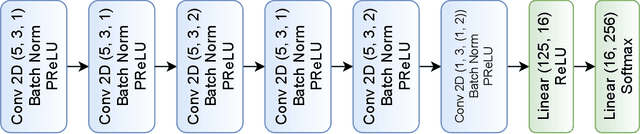

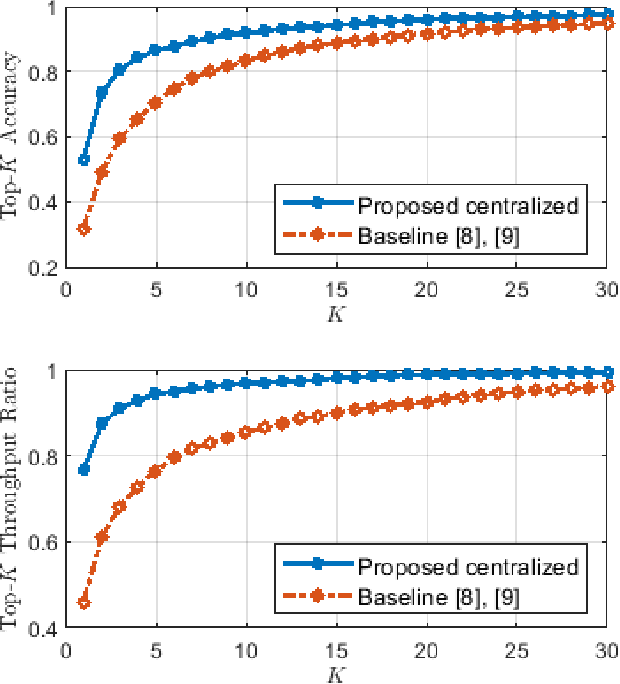

May 03, 2021

Abstract: Efficient millimeter wave (mmWave) beam selection in vehicle-to-infrastructure (V2I) communication is a crucial yet challenging task due to the narrow mmWave beamwidth and high user mobility. To reduce the search overhead of iterative beam discovery procedures, contextual information from light detection and ranging (LIDAR) sensors mounted on vehicles has been leveraged by data-driven methods to produce useful side information. In this paper, we propose a lightweight neural network (NN) architecture along with the corresponding LIDAR preprocessing, which significantly outperforms previous works. Our solution comprises multiple novelties that improve both the convergence speed and the final accuracy of the model. In particular, we define a novel loss function inspired by the knowledge distillation idea, introduce a curriculum training approach exploiting line-of-sight (LOS)/non-line-of-sight (NLOS) information, and propose a non-local attention module to improve the performance for the more challenging NLOS cases. Simulation results on benchmark datasets show that, utilizing solely LIDAR data and the receiver position, our NN-based beam selection scheme can achieve 79.9% of the throughput of an exhaustive beam sweeping approach without any beam search overhead, and 95% by searching among as few as 6 beams.
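
The difference between "no beam search overhead" and "searching among as few as 6 beams" can be read as top-1 versus top-k selection over the NN's per-beam scores. The sketch below illustrates that reading with made-up numbers; the function names, the scores, and the `measured_gain` values are hypothetical and do not come from the paper.

```python
def top_k_beams(scores, k):
    """Indices of the k beams with the highest predicted scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def select_beam(scores, measure, k):
    """Sweep only the top-k candidate beams and keep the best measured one.

    With k == 1 no beam is actually swept: the top prediction is used as is.
    """
    candidates = top_k_beams(scores, k)
    if k == 1:
        return candidates[0]
    return max(candidates, key=measure)


# Hypothetical NN scores and true channel gains for 4 beams.
scores = [0.1, 0.5, 0.2, 0.9]
measured_gain = [1.0, 3.0, 0.5, 2.0]

best_possible = max(measured_gain)
for k in (1, 2):
    beam = select_beam(scores, lambda i: measured_gain[i], k)
    print(k, beam, measured_gain[beam] / best_possible)
```

In this toy example the top-1 prediction misses the best beam, while sweeping the top two candidates recovers it, which is the kind of trade-off between search overhead and throughput that the abstract quantifies.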

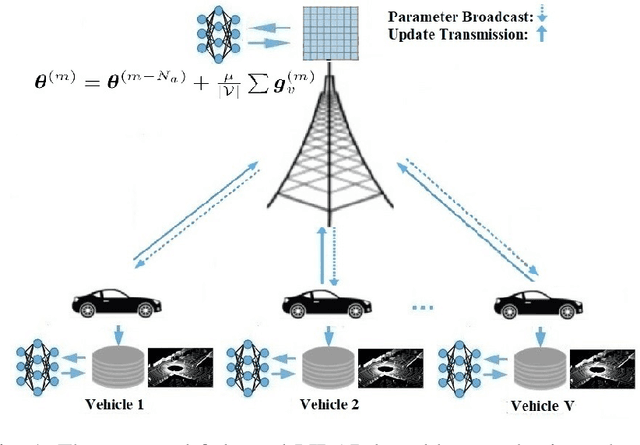

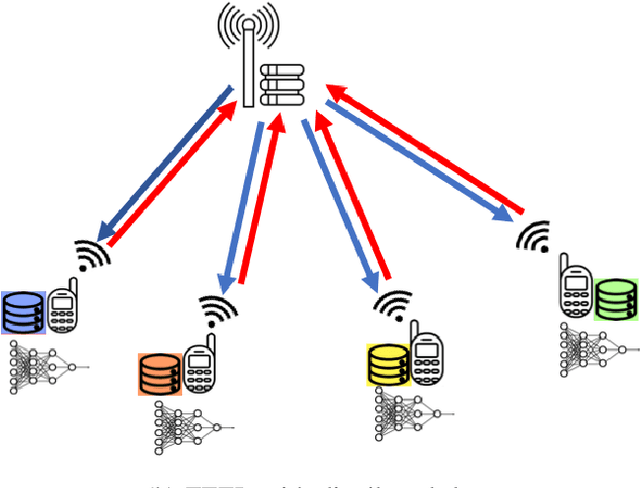

Federated mmWave Beam Selection Utilizing LIDAR Data

Feb 04, 2021

Abstract: Efficient link configuration in millimeter wave (mmWave) communication systems is a crucial yet challenging task due to the overhead imposed by beam selection on the network performance. For vehicle-to-infrastructure (V2I) networks, side information from LIDAR sensors mounted on the vehicles has been leveraged to reduce the beam search overhead. In this letter, we propose distributed LIDAR-aided beam selection for V2I mmWave communication systems utilizing federated training. In the proposed scheme, connected vehicles collaborate to train a shared neural network (NN) on their locally available LIDAR data during normal operation of the system. We also propose an alternative reduced-complexity convolutional NN (CNN) architecture and LIDAR preprocessing, which significantly outperform previous works in terms of both performance and complexity.
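
The abstract does not say which aggregation rule the federated training uses. As an illustration only, the sketch below assumes a FedAvg-style weighted average, in which each vehicle's locally trained parameters are weighted by the number of local samples. The function name and the flat-list parameter representation are hypothetical simplifications.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation (an assumed rule, not confirmed by the source).

    client_weights: one flat list of parameters per client (vehicle).
    client_sizes:   number of local training samples per client.
    """
    if len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two vehicles with locally trained parameters; the second saw 3x more data.
vehicle_a = [1.0, 2.0]
vehicle_b = [3.0, 4.0]
print(federated_average([vehicle_a, vehicle_b], [1, 3]))
```

Only model parameters are exchanged in such a scheme, so the raw LIDAR data stays on each vehicle.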

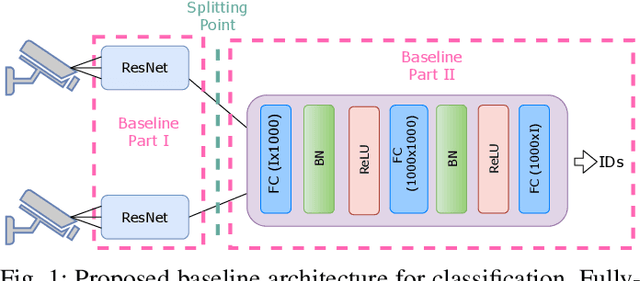

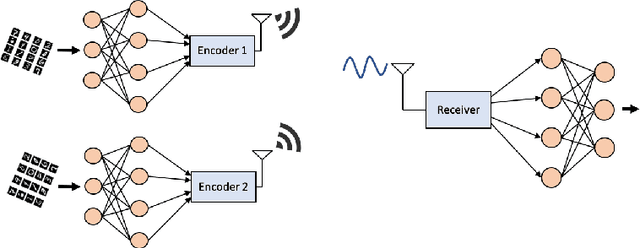

Deep Joint Transmission-Recognition for Multi-View Cameras

Nov 03, 2020

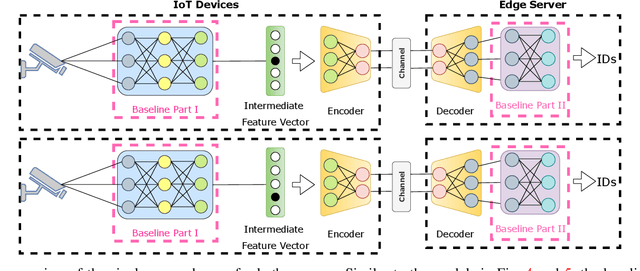

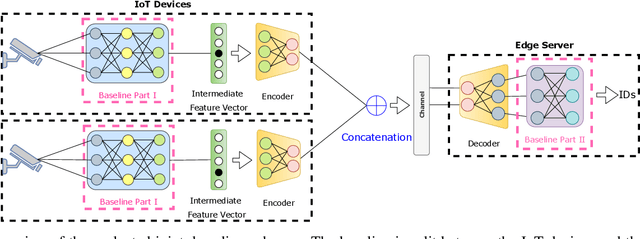

Abstract: We propose joint transmission-recognition schemes for efficient inference at the wireless edge. Motivated by surveillance applications with wireless cameras, we consider the person classification task over a wireless channel carried out by multi-view cameras operating as edge devices. We introduce deep neural network (DNN) based compression schemes which incorporate digital (separate) transmission and joint source-channel coding (JSCC) methods. We evaluate the proposed device-edge communication schemes under different channel SNRs, bandwidth and power constraints. We show that the JSCC schemes not only improve the end-to-end accuracy but also simplify the encoding process and provide graceful degradation with channel quality.

Communicate to Learn at the Edge

Sep 28, 2020

Abstract: Bringing the success of modern machine learning (ML) techniques to mobile devices can enable many new services and businesses, but also poses significant technical and research challenges. Two factors that are critical for the success of ML algorithms are massive amounts of data and processing power, both of which are plentiful, yet highly distributed at the network edge. Moreover, edge devices are connected through bandwidth- and power-limited wireless links that suffer from noise, time-variations, and interference. Information and coding theory have laid the foundations of reliable and efficient communications in the presence of channel imperfections, whose application in modern wireless networks has been a tremendous success. However, there is a clear disconnect between the current coding and communication schemes, and the ML algorithms deployed at the network edge. In this paper, we challenge the current approach that treats these problems separately, and argue for a joint communication and learning paradigm for both the training and inference stages of edge learning.

Wireless Image Retrieval at the Edge

Jul 21, 2020

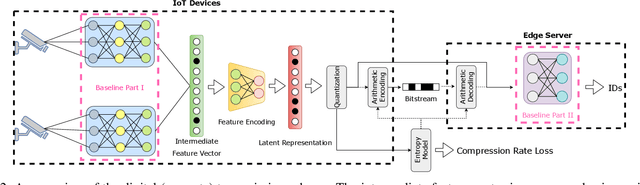

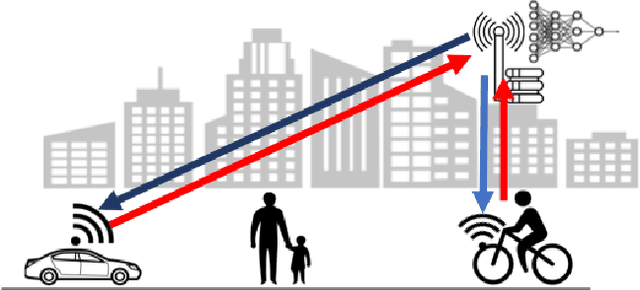

Abstract: We study the image retrieval problem at the wireless edge, where an edge device captures an image, which is then used to retrieve similar images from an edge server. These can be images of the same person or a vehicle taken from other cameras at different times and locations. Our goal is to maximize the accuracy of the retrieval task under power and bandwidth constraints over the wireless link. Due to the stringent delay constraint of the underlying application, sending the whole image at a sufficient quality is not possible. To address this problem, we propose two communication schemes, based on digital and analog communications, respectively. In the digital approach, we first propose a deep neural network (DNN) for retrieval-oriented image compression, whose output bit sequence is transmitted over the channel as reliably as possible using conventional channel codes. In the analog joint source and channel coding (JSCC) approach, the feature vectors are directly mapped into channel symbols. We evaluate both schemes on image-based re-identification (re-ID) tasks under different channel conditions, including both static and fading channels. We show that the JSCC scheme significantly increases the end-to-end accuracy, speeds up the encoding process, and provides graceful degradation with channel conditions. The proposed architecture is evaluated through extensive simulations on different datasets and channel conditions, as well as through ablation studies.
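
As a rough illustration of mapping feature vectors directly into channel symbols under a power constraint, the sketch below scales a real-valued feature vector to a target average power and passes it through an additive white Gaussian noise (AWGN) channel. It is a simplified sketch under stated assumptions (real-valued symbols, a static channel, no fading), and the function names are hypothetical. It is not the architecture from the paper.

```python
import math
import random


def power_normalize(features, power=1.0):
    """Scale a feature vector so its average symbol power equals `power`."""
    mean_sq = sum(v * v for v in features) / len(features)
    if mean_sq == 0.0:
        raise ValueError("cannot normalize an all-zero feature vector")
    scale = math.sqrt(power / mean_sq)
    return [v * scale for v in features]


def awgn(symbols, snr_db, power=1.0, rng=None):
    """Add real Gaussian noise whose variance is set by the SNR in dB."""
    rng = rng or random.Random()
    noise_std = math.sqrt(power / 10 ** (snr_db / 10))
    return [s + rng.gauss(0.0, noise_std) for s in symbols]


rng = random.Random(0)  # seeded so the run is repeatable
features = [rng.uniform(-2.0, 2.0) for _ in range(20000)]
tx = power_normalize(features)
rx = awgn(tx, snr_db=10.0, rng=rng)

tx_power = sum(s * s for s in tx) / len(tx)
noise_power = sum((r - s) ** 2 for r, s in zip(rx, tx)) / len(tx)
print(round(tx_power, 3), round(noise_power, 3))
```

Because the received values are noisy versions of the features themselves rather than decoded bits, lowering `snr_db` degrades them gradually instead of causing an abrupt decoding failure, which is the intuition behind the graceful degradation mentioned in the abstract.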

Deep Joint Transmission-Recognition for Power-Constrained IoT Devices

Mar 04, 2020

Abstract: We propose a joint transmission-recognition scheme for efficient inference at the wireless network edge. Our scheme allows for reliable image recognition over wireless channels with significant computational load reduction at the sender side. We incorporate a recently proposed deep joint source-channel coding (JSCC) scheme, and combine it with novel filter pruning strategies aimed at reducing the redundant complexity of neural networks. We evaluate our approach on a classification task, and show satisfactory results in both transmission reliability and workload reduction. This is the first work that combines deep JSCC with network pruning and applies it to image classification over a wireless network.
