Mikael Johansson

Controlled disagreement improves generalization in decentralized training

Feb 02, 2026

Abstract: Decentralized training is often regarded as inferior to centralized training because the consensus errors between workers are thought to undermine convergence and generalization, even with homogeneous data distributions. This work challenges this view by introducing decentralized SGD with Adaptive Consensus (DSGD-AC), which intentionally preserves non-vanishing consensus errors through a time-dependent scaling mechanism. We prove that these errors are not random noise but systematically align with the dominant Hessian subspace, acting as structured perturbations that guide optimization toward flatter minima. Across image classification and machine translation benchmarks, DSGD-AC consistently surpasses both standard DSGD and centralized SGD in test accuracy and solution flatness. Together, these results establish consensus errors as a useful implicit regularizer and open a new perspective on the design of decentralized learning algorithms.

Personalized Federated Learning under Model Dissimilarity Constraints

May 15, 2025

Abstract: One of the defining challenges in federated learning is that of statistical heterogeneity among clients. We address this problem with KARULA, a regularized strategy for personalized federated learning, which constrains the pairwise model dissimilarities between clients based on the difference in their distributions, as measured by a surrogate for the 1-Wasserstein distance adapted for the federated setting. This allows the strategy to adapt to highly complex interrelations between clients that, for example, clustered approaches fail to capture. We propose an inexact projected stochastic gradient algorithm to solve the constrained problem that the strategy defines, and show theoretically that it converges with smooth, possibly non-convex losses to a neighborhood of a stationary point with rate O(1/K). We demonstrate the effectiveness of KARULA on synthetic and real federated data sets.

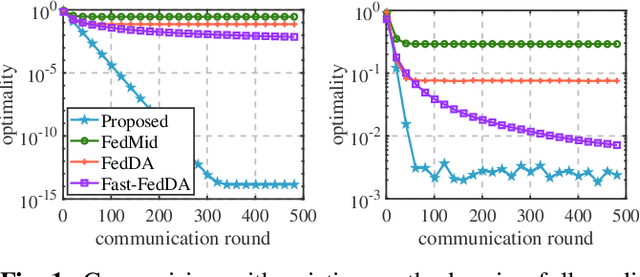

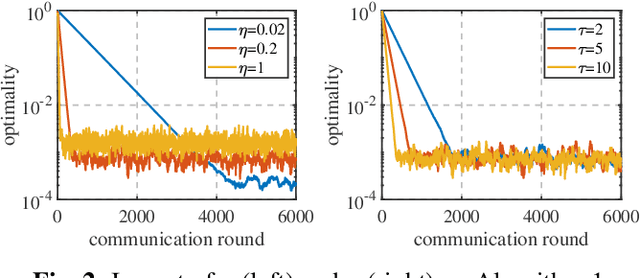

Non-convex composite federated learning with heterogeneous data

Feb 06, 2025

Abstract: We propose an innovative algorithm for non-convex composite federated learning that decouples the proximal operator evaluation and the communication between server and clients. Moreover, each client uses local updates to communicate less frequently with the server, sends only a single d-dimensional vector per communication round, and overcomes issues with client drift. In the analysis, challenges arise from the use of decoupling strategies and local updates in the algorithm, as well as from the non-convex and non-smooth nature of the problem. We establish sublinear and linear convergence to a bounded residual error under general non-convexity and the proximal Polyak-Lojasiewicz inequality, respectively. In the numerical experiments, we demonstrate the superiority of our algorithm over state-of-the-art methods on both synthetic and real datasets.

Locally Differentially Private Online Federated Learning With Correlated Noise

Nov 27, 2024

Abstract: We introduce a locally differentially private (LDP) algorithm for online federated learning that employs temporally correlated noise to improve utility while preserving privacy. To address challenges posed by the correlated noise and local updates with streaming non-IID data, we develop a perturbed iterate analysis that controls the impact of the noise on the utility. Moreover, we demonstrate how the drift errors from local updates can be effectively managed for several classes of nonconvex loss functions. Subject to an $(\epsilon,\delta)$-LDP budget, we establish a dynamic regret bound that quantifies the impact of key parameters and the intensity of changes in the dynamic environment on the learning performance. Numerical experiments confirm the efficacy of the proposed algorithm.

From promise to practice: realizing high-performance decentralized training

Oct 15, 2024

Abstract: Decentralized training of deep neural networks has attracted significant attention for its theoretically superior scalability over synchronous data-parallel methods like All-Reduce. However, realizing this potential in multi-node training is challenging due to the complex design space that involves communication topologies, computation patterns, and optimization algorithms. This paper identifies three key factors that can lead to speedups over All-Reduce training and constructs a runtime model to determine when, how, and to what degree decentralization can yield shorter per-iteration runtimes. Furthermore, to support the decentralized training of transformer-based models, we study a decentralized Adam algorithm that allows for overlapping communications and computations, prove its convergence, and propose an accumulation technique to mitigate the high variance caused by small local batch sizes. We deploy the proposed approach in clusters with up to 64 GPUs and demonstrate its practicality and advantages in both runtime and generalization performance under a fixed iteration budget.

Temporal Predictive Coding for Gradient Compression in Distributed Learning

Oct 03, 2024

Abstract: This paper proposes a prediction-based gradient compression method for distributed learning with event-triggered communication. Our goal is to reduce the amount of information transmitted from the distributed agents to the parameter server by exploiting temporal correlation in the local gradients. We use a linear predictor that combines past gradients to form a prediction of the current gradient, with coefficients that are optimized by solving a least-squares problem. In each iteration, every agent transmits the predictor coefficients to the server such that the predicted local gradient can be computed. The difference between the true local gradient and the predicted one, termed the prediction residual, is transmitted only when its norm is above some threshold. When this additional communication step is omitted, the server uses the prediction as the estimated gradient. This proposed design shows notable performance gains compared to existing methods in the literature, achieving convergence with reduced communication costs.
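
The predict-then-threshold step described in this abstract can be sketched roughly as follows. This is an illustrative reading, not the paper's implementation: the function names `encode_gradient` and `decode_gradient`, the `threshold` parameter, and the choice to keep an identical gradient history on both the agent and the server are all assumptions made for the example.

```python
import numpy as np

def encode_gradient(grad, history, threshold):
    """Agent side (hypothetical helper): fit predictor coefficients by least squares.

    history is a list of p past gradient vectors that the server also holds.
    Returns the coefficients and the residual, or None for the residual when
    its norm does not exceed the threshold (event-triggered transmission).
    """
    H = np.stack(history, axis=1)                       # shape (d, p)
    coeffs, *_ = np.linalg.lstsq(H, grad, rcond=None)   # min_a ||grad - H a||^2
    residual = grad - H @ coeffs
    if np.linalg.norm(residual) > threshold:
        return coeffs, residual
    return coeffs, None

def decode_gradient(coeffs, residual, history):
    """Server side (hypothetical helper): rebuild the gradient estimate."""
    H = np.stack(history, axis=1)
    estimate = H @ coeffs                               # predicted gradient
    if residual is not None:
        estimate = estimate + residual                  # exact when residual is sent
    return estimate

# Toy example: two past gradients spanning the first two coordinates of R^3.
history = [np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0])]
grad = np.array([2.0, 3.0, 5.0])

coeffs, residual = encode_gradient(grad, history, threshold=1.0)
estimate = decode_gradient(coeffs, residual, history)
```

In this toy setup the agent always sends the p coefficients, and sends the d-dimensional residual only when the prediction misses by more than `threshold`; otherwise the server falls back on the prediction alone.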

Nonconvex Federated Learning on Compact Smooth Submanifolds With Heterogeneous Data

Jun 12, 2024

Abstract: Many machine learning tasks, such as principal component analysis and low-rank matrix completion, give rise to manifold optimization problems. Although there is a large body of work studying the design and analysis of algorithms for manifold optimization in the centralized setting, there are currently very few works addressing the federated setting. In this paper, we consider nonconvex federated learning over a compact smooth submanifold in the setting of heterogeneous client data. We propose an algorithm that leverages stochastic Riemannian gradients and a manifold projection operator to improve computational efficiency, uses local updates to improve communication efficiency, and avoids client drift. Theoretically, we show that our proposed algorithm converges sub-linearly to a neighborhood of a first-order optimal solution by using a novel analysis that jointly exploits the manifold structure and properties of the loss functions. Numerical experiments demonstrate that our algorithm has significantly smaller computational and communication overhead than existing methods.

Differentially Private Online Federated Learning with Correlated Noise

Mar 25, 2024

Abstract: We propose a novel differentially private algorithm for online federated learning that employs temporally correlated noise to improve the utility while ensuring the privacy of the continuously released models. To address challenges stemming from DP noise and local updates with streaming non-IID data, we develop a perturbed iterate analysis to control the impact of the DP noise on the utility. Moreover, we demonstrate how the drift errors from local updates can be effectively managed under a quasi-strong convexity condition. Subject to an $(\epsilon, \delta)$-DP budget, we establish a dynamic regret bound over the entire time horizon that quantifies the impact of key parameters and the intensity of changes in dynamic environments. Numerical experiments validate the efficacy of the proposed algorithm.

Asynchronous Distributed Optimization with Delay-free Parameters

Dec 11, 2023

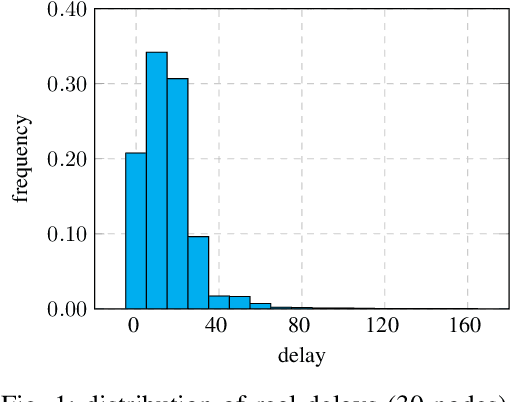

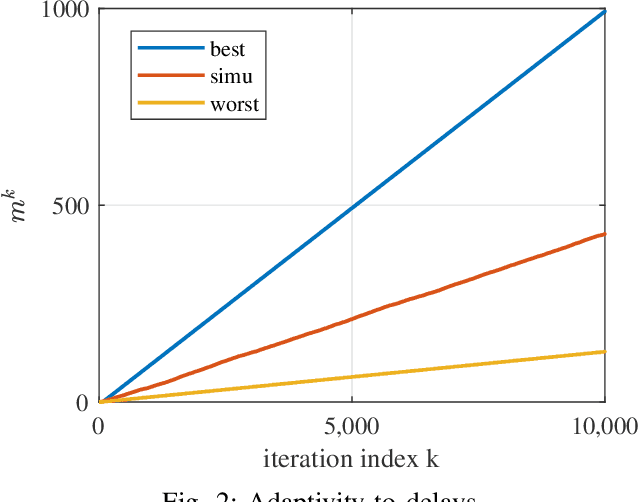

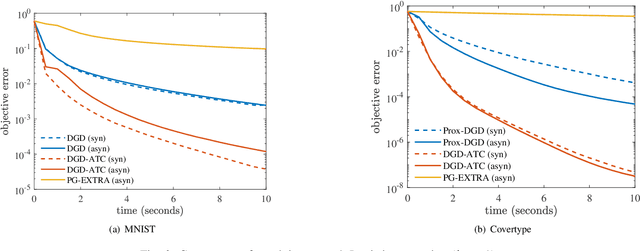

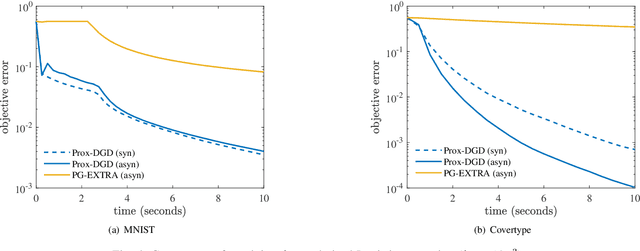

Abstract: Existing asynchronous distributed optimization algorithms often use diminishing step-sizes that cause slow practical convergence, or use fixed step-sizes that depend on and decrease with an upper bound of the delays. Not only are such delay bounds hard to obtain in advance, but they also tend to be large and rarely attained, resulting in unnecessarily slow convergence. This paper develops asynchronous versions of two distributed algorithms, Prox-DGD and DGD-ATC, for solving consensus optimization problems over undirected networks. In contrast to alternatives, our algorithms can converge to the fixed point set of their synchronous counterparts using step-sizes that are independent of the delays. We establish convergence guarantees for strongly and weakly convex problems under both partial and total asynchrony. We also show that the convergence speed of the two asynchronous methods adapts to the actual level of asynchrony rather than being constrained by the worst case. Numerical experiments demonstrate strong practical performance of our asynchronous algorithms.

Composite federated learning with heterogeneous data

Sep 04, 2023

Abstract: We propose a novel algorithm for solving the composite Federated Learning (FL) problem. This algorithm manages non-smooth regularization by strategically decoupling the proximal operator and communication, and addresses client drift without any assumptions about data similarity. Moreover, each worker uses local updates to reduce the communication frequency with the server and transmits only a $d$-dimensional vector per communication round. We prove that our algorithm converges linearly to a neighborhood of the optimal solution and demonstrate the superiority of our algorithm over state-of-the-art methods in numerical experiments.
